Releases: mratsim/Arraymancer

v0.7.0 "Memories of Ice"

Arraymancer v0.7.0 Jul. 4 2021 "Memories of Ice"

This release is named after "Memories of Ice" (2001), the third book of Steven Erikson's epic dark fantasy masterpiece "The Malazan Book of the Fallen".

Changes:

- Add `toUnsafeView` as replacement of `dataArray` to return a `ptr UncheckedArray`

- Doc generation fixes

- `cumsum`, `cumprod`

- Fix least square solver

- Fix mean square error backpropagation

- Adapt to upstream symbol resolution changes

- Basic Graph Convolution Network

- Slicing tutorial revamp

- n-dimensional tensor pretty printing

- Compilation fixes to handle Nim v1.0 to Nim devel

Thanks to @Vindaar for maintaining the repo, the docs, pretty-printing and answering many many questions on Discord while I took a step back.

Thanks to @filipeclduarte for the cumsum/cumprod, @Clonkk for updating raw data accesses, @struggle for finding a bug in mean square error backprop,

@timotheecour for spreading new upstream requirements downstream and @anon767 for Graph Neural Network.

v0.6.1

Windwalkers

Arraymancer v0.6.0 - Windwalkers

This release is named after "Windwalkers" (2004, French "La Horde du Contrevent"), by Alain Damasio.

Changes:

- The `symeig` proc to compute eigenvectors of a symmetric matrix now accepts an "uplo" char parameter. This allows filling only the Upper or Lower part of the matrix; the other half is not used in computation.

- Added `svd_randomized`, a fast and accurate SVD approximation via random sampling. This is the standard driver for large scale SVD applications as SVD on large matrices is very slow.

- `pca` now uses the randomized SVD instead of computing the covariance matrix. It can now efficiently deal with large scale problems. It now accepts `center`, `n_oversamples` and `n_power_iters` arguments. Note that `pca` without centering is equivalent to a truncated SVD.

- LU decomposition has been added

- QR decomposition has been added

- `hilbert` has been introduced. It creates the famous ill-conditioned Hilbert matrix. The matrix is suitable to stress test decompositions.

- The `arange` procedure has been introduced. It creates evenly spaced values within a specified range and step

- The ordering of arguments to error functions has been converted to `(y_pred, y_target)` (from (y_target, y_pred)), enabling the syntax `y_pred.accuracy_score(y)`. All existing error functions in Arraymancer were commutative w.r.t. their arguments so existing code will keep working.

- A `solve` procedure has been added to solve linear system of equations represented as matrices.

- A `softmax` layer has been added to the autograd and neural networks, complementing the SoftmaxCrossEntropy layer which fused softmax + Negative-loglikelihood.

- The stochastic gradient descent now has a version with Momentum
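For reference, the Hilbert matrix mentioned in the list above has entries H[i][j] = 1 / (i + j + 1) with 0-based indices. The sketch below builds it in plain Python with exact fractions. It only illustrates the definition: the helper names `hilbert_matrix` and `det3` are hypothetical and this is not Arraymancer's `hilbert` implementation.

```python
from fractions import Fraction

def hilbert_matrix(n):
    """Return the n x n Hilbert matrix as nested lists of exact fractions."""
    # Entry (i, j) is 1 / (i + j + 1) with 0-based indices.
    return [[Fraction(1, i + j + 1) for j in range(n)] for i in range(n)]

def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

H = hilbert_matrix(3)
# The determinant is already tiny for n = 3, a symptom of ill-conditioning.
print(det3(H))
```

The determinant shrinks very quickly as n grows, which is why decompositions that are slightly inaccurate tend to show visible errors on this matrix.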

Bug fixes:

- `gemm` could crash when the result was column major.

- The automatic fusion of matrix multiplication with matrix addition `(A * X) + b` could update the b matrix.

- Complex converters do not pollute the global namespace and do not prevent string conversion via `$` of number types due to ambiguous call.

- In-place division has been fixed, a typo made it into subtraction.

- A conflict between NVIDIA "nanosecond" and Nim times module "nanosecond" preventing CUDA compilation has been fixed

Breaking:

- In `symeig`, the `eigenvectors` argument is now called `return_eigenvectors`.

- In `symeig` with slice, the new `uplo` precedes the slice argument.

- pca input "nb_components" has been renamed "n_components".

- pca output tuple used the names (results, components). It has been renamed to (projected, components).

- A `pca` overload that projected a data matrix on already existing principal axes was removed. Simply multiply the mean-centered data matrix with the components instead.

- Complex converters were removed. This prevents implicit conversion bugs in downstream libraries that are hard to debug and work around. If necessary, users can re-implement converters themselves. This also provides a 20% boost in Arraymancer compilation times
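The replacement for the removed `pca` overload can be sketched as follows: subtract each column's mean, then multiply by the components matrix. This is a plain-Python illustration with hypothetical helper names (`center_columns`, `matmul`), assuming `components` has shape [n_features, n_components]; it is not Arraymancer code.

```python
def center_columns(X):
    """Subtract the per-column mean from every row of X (a list of rows)."""
    n = len(X)
    means = [sum(col) / n for col in zip(*X)]
    return [[x - m for x, m in zip(row, means)] for row in X]

def matmul(A, B):
    """Naive matrix product of two nested-list matrices."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

# 4 observations, 2 features.
X = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
# One principal axis, assumed shape [n_features, n_components];
# here a hypothetical axis aligned with the first feature.
components = [[1.0], [0.0]]

projected = matmul(center_columns(X), components)
print(projected)  # one coordinate per observation
```

When projecting new data, the mean to subtract would normally be the one computed on the data used to fit the components.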

Deprecation:

- The syntax gemm(A, B, C) is now deprecated. Use explicit "gemm(1.0, A, B, 0.0, C)" instead. Arguably not zero-ing C could also be a reasonable default.

- The dot in broadcasting and elementwise operators has changed place. Use `+.`, `*.`, `/.`, `-.`, `^.`, `+.=`, `*.=`, `/.=`, `-.=`, `^.=` instead of the previous order `.+` and `.+=`. This allows the broadcasting operators to have the same precedence as the natural operators. This also aligns Arraymancer with other Nim packages: Manu and NumericalNim
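The explicit `gemm` form follows the BLAS convention C ← alpha·A·B + beta·C, so `gemm(1.0, A, B, 0.0, C)` overwrites C with the product, while a non-zero beta accumulates into it. A plain-Python sketch of that update rule (the function below is illustrative and is not Arraymancer's `gemm`):

```python
def gemm(alpha, A, B, beta, C):
    """Update C in place: C = alpha * (A @ B) + beta * C (nested lists)."""
    for i, row in enumerate(A):
        for j, col in enumerate(zip(*B)):
            dot = sum(a * b for a, b in zip(row, col))
            C[i][j] = alpha * dot + beta * C[i][j]

A = [[1.0, 2.0], [3.0, 4.0]]
B = [[5.0, 6.0], [7.0, 8.0]]

C = [[100.0, 100.0], [100.0, 100.0]]
gemm(1.0, A, B, 0.0, C)   # beta = 0.0: previous content of C is discarded
overwritten = [row[:] for row in C]

gemm(1.0, A, B, 1.0, C)   # beta = 1.0: the product is added to C
accumulated = [row[:] for row in C]

print(overwritten)
print(accumulated)
```

Spelling out both scalars makes it visible at the call site whether the old content of C is discarded or kept.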

Thanks to @dynalagreen for the SGD with Momentum, @xcokazaki for spotting the in-place division typo,

@Vindaar for fixing the automatic matrix multiplication and addition fusion,

@Imperator26 for the Softmax layer, @brentp for reviewing and augmenting the SVD and PCA API,

@auxym for the linear equation solver and @berquist for reordering all error functions to the new API.

Thanks @B3liever for suggesting the dot change to solve the precedence issue in broadcasting and elementwise operators.

v0.5.2

v0.5.1

Changes affecting backward compatibility:

- None

Changes:

- 0.20.x compatibility (commit 0921190)

- Complex support

- `Einsum`

- Naive whitespace tokenizer for NLP

- Preview of Laser backend for matrix multiplication without SIMD autodetection (already 5x faster on integer matrix multiplication)

Fix:

- Fix height/width order when reading an image in tensor

Thanks to @chimez for the complex support and updating for 0.20, @metasyn for the tokenizer,

@xcokazaki for the image dimension fix and @Vindaar for the einsum implementation

Sign of the Unicorn

This release is named after "Sign of the Unicorn" (1975), the third book of Roger Zelazny's masterpiece "The Chronicles of Amber".

Changes affecting backward compatibility:

- PCA has been split into 2

  - The old PCA with input `pca(x: Tensor, nb_components: int)` now returns a tuple of result and principal components tensors in descending order instead of just a result

  - A new PCA `pca(x: Tensor, principal_axes: Tensor)` will project the input x on the principal axes supplied

Changes:

- Datasets:

  - MNIST is now autodownloaded and cached

  - Added IMDB Movie Reviews dataset

- IO:

  - Numpy file format support

  - Image reading and writing support (jpg, bmp, png, tga)

  - HDF5 reading and writing

- Machine learning

  - Kmeans clustering

- Neural network and autograd:

  - Support subtraction, sum and stacking in neural networks

  - Recurrent NN: GRUCell, GRU and Fused Stacked GRU support

  - The NN declarative lang now supports GRU

  - Added Embedding layer with up to 3D input tensors [batch_size, sequence_length, features] or [sequence_length, batch_size, features]. Indexing can be done with any sized integers, byte or chars and enums.

  - Sparse softmax cross-entropy now supports target tensors with indices of type: any size integers, byte, chars or enums.

  - Added ADAM optimiser (Adaptive Moment Estimation)

  - Added Hadamard product backpropagation (Elementwise matrix multiply)

  - Added Xavier Glorot, Kaiming He and Yann Lecun weight initialisations

  - The NN declarative lang automatically initialises weights with the following scheme:

    - Linear and Convolution: Kaiming (suitable for Relu activation)

    - GRU: Xavier (suitable for the internal tanh and sigmoid)

    - Embedding: Not supported in declarative lang at the moment
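As a rough guide to the initialisation schemes named above, the scale usually associated with each one depends only on the layer's fan-in and fan-out. The sketch below uses the commonly published formulas. Whether Arraymancer samples from the uniform or the normal variant, and with which gain, is not stated in these notes, so the function names and constants here are assumptions.

```python
import math

def kaiming_normal_std(fan_in):
    """He et al.: std = sqrt(2 / fan_in), derived for ReLU activations."""
    return math.sqrt(2.0 / fan_in)

def xavier_uniform_bound(fan_in, fan_out):
    """Glorot & Bengio: weights drawn from U(-b, b), b = sqrt(6 / (fan_in + fan_out))."""
    return math.sqrt(6.0 / (fan_in + fan_out))

def lecun_normal_std(fan_in):
    """LeCun: std = sqrt(1 / fan_in)."""
    return math.sqrt(1.0 / fan_in)

# Example: a linear layer with 512 inputs and 256 outputs.
print(kaiming_normal_std(512))
print(xavier_uniform_bound(512, 256))
print(lecun_normal_std(512))
```

The Kaiming scale is larger than the LeCun one by a factor of sqrt(2), which compensates for ReLU zeroing roughly half of the activations.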

- Tensors:

  - Add tensor splitting and chunking

  - Fancy indexing via `index_select`

  - division broadcasting, scalar division and multiplication broadcasting

  - High-dimensional `toSeq` exports

- End-to-end Examples:

  - Sequence/mini time-series classification example using RNN

  - Training and text generation example with Shakespeare and Jane Austen style. This can be applied to any text-based dataset (including blog posts, Latex papers and code). It should contain at least 700k characters (0.7 MB), this is considered small already.

- Important fixes:

  - Convolution shape inference on non-unit strided convolutions

  - Support the future OpenMP changes from nim#devel

  - GRU: inference was squeezing all singleton dimensions instead of just the "layer" dimension.

  - Autograd: remove pointers to avoid pointing to wrong memory when the garbage collector moves it under pressure. This unfortunately comes at the cost of more GC pressure, which will be addressed in the future.

  - Autograd: remove all methods. They caused issues with generic instantiation and object variants.

Special thanks to @metasyn (MNIST caching, IMDB dataset, Kmeans) and @Vindaar (HDF5 support and the example of using Arraymancer + Plot.ly) for their large contributions on this release.

Ecosystem:

-

Using Arraymancer + Plotly for NN training visualisation:

https://github.com/Vindaar/NeuralNetworkLiveDemo

-

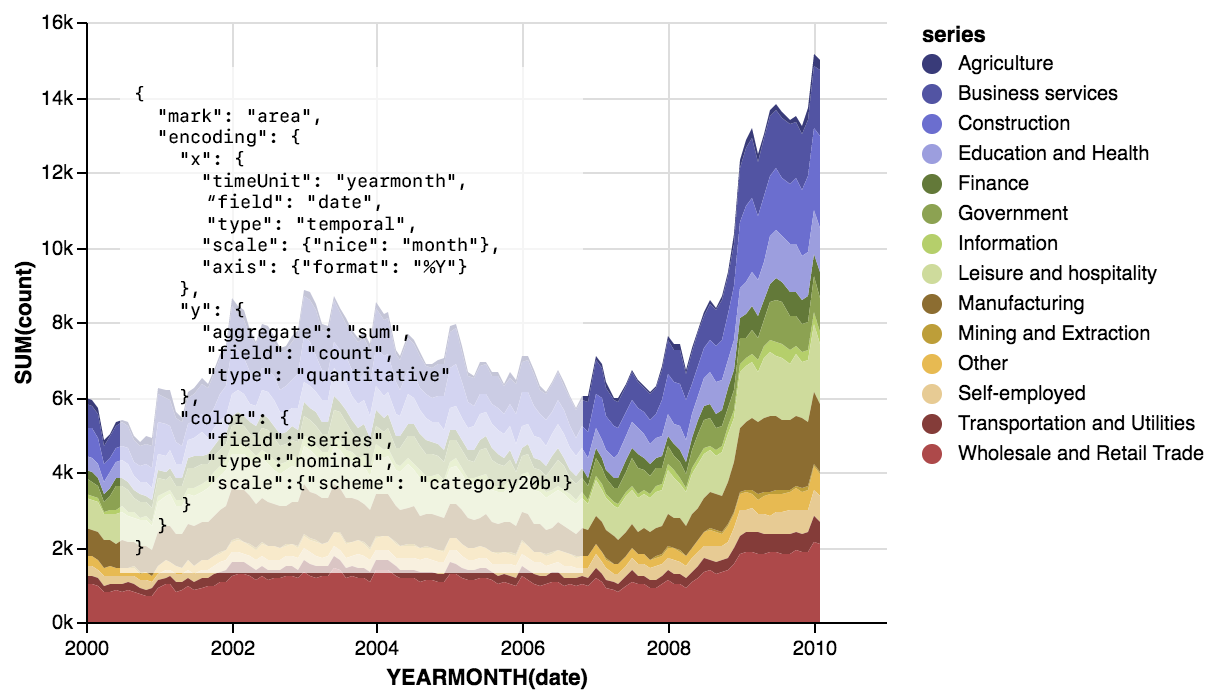

Monocle, proof-of-concept data visualisation in Nim using Vega. Hopefully allowing this kind of visualisation in the future:

and compatibility with the Vega ecosystem, especially the Tableau-like Voyager.

-

Agent Smith, reinforcement learning framework.

Currently it wraps theArcade Learning Environmentfor practicing reinforcement learning on Atari games.

In the future it will wrap Starcraft 2 AI bindings

and provides a high-level interface and examples to reinforcement learning algorithms. -

Laser, the future Arraymancer backend

which provides:- SIMD intrinsics

- OpenMP templates with fine-grained control

- Runtime CPU features detection for ARM and x86

- A proof-of-concept JIT Assembler

- A raw minimal tensor type which can work as a view to arbitrary buffers

- Loop fusion macros for iteration on an arbitrary number of tensors.

As far as I know it should provide the fastest multi-threaded

iteration scheme on strided tensors all languages and libraries included. - Optimized reductions, exponential and logarithm functions reaching

4x to 10x the speed of naively compiled for loops - Optimised parallel strided matrix multiplication reaching 98% of OpenBLAS performance

- This is a generic implementation that can also be used for integers

- It will support preprocessing (relu_backward, tanh_backward, sigmoid_backward)

and epilogue (relu, tanh, sigmoid, bias addition) operation fusion

to avoid looping an extra time with a memory bandwidth bound pass.

- Convolutions will be optimised with a preprocessing pass fused into matrix multiplication. Traditional

im2colsolutions can only reach 16% of matrix multiplication efficiency on the common deep learning filter sizes - State-of-the art random distributions and random sampling implementations

for stochastic algorithms, text generation and reinforcement learning.

Future breaking changes.

- Arraymancer backend will switch to `Laser` for next version. Impact:

  - At a low-level CPU tensors will become a view on top of a pointer+len for plain old data types instead of using the default Nim seqs. This will enable plenty of no-copy use cases and even using memory-mapped tensors for out-of-core processing. However libraries relying on the very low-level representation of tensors will break. The future type is already implemented in Laser.

  - Tensors of GC-allocated types like seq, string and references will keep using Nim seqs.

  - While it was possible to use the Javascript backend by modifying the iteration scheme, this will not be possible at all. Use JS->C FFI or WebAssembly compilation instead.

  - The inline iteration templates `map_inline`, `map2_inline`, `map3_inline`, `apply_inline`, `apply2_inline`, `apply3_inline`, `reduce_inline`, `fold_inline`, `fold_axis_inline` will be removed and replaced by `forEach` and `forEachStaged` with the following syntax:

    `forEach x in a, y in b, z in c: x += y * z`

    Both will work with an arbitrary number of tensors and will generate 2x to 3x more compact code while being about 30% more efficient for strided iteration. Furthermore `forEachStaged` will allow precise control of the parallelisation strategy including pre-loop and post-loop synchronisation with thread-local variables, locks, atomics and barriers.

    The existing higher-order functions `map`, `map2`, `apply`, `apply2`, `fold`, `reduce` will not be impacted. For small inlinable functions it will be recommended to use the `forEach` macro to remove function call overhead (you can't inline a proc parameter).

- The neural network domain specific language will use less magic for the `forward` proc. Currently the neural net domain specific language only allows the type `Variable[T]` for inputs and the result. This prevents its use with embedding layers which also require an index input. Furthermore this prevents using a `tuple[output, hidden: Variable]` result type, which is very useful to pass RNNs hidden state for generative neural networks (for example text sequence or time-series). So unfortunately the syntax will go from the current `forward x, y:` shortcut to classic Nim `proc forward[T](x, y: Variable[T]): Variable[T]`

- Once CuDNN GRU is implemented, the GRU layer might need some adjustments to give the same results on CPU and Nvidia's GPU and allow using GPU trained weights on CPU and vice-versa.

Thanks:

- metasyn: Datasets and Kmeans clustering

- vindaar: HDF5 support and Plot.ly demo

- bluenote10: toSeq exports

- andreaferetti: Adding axis parameter to Mean layer autograd

- all the contributors of fixes in code and documentation

- the Nim community for the encouragements

The Name of the Wind

This release is named after "The Name of the Wind" (2007), the first book of Patrick Rothfuss's masterpiece "The Kingkiller Chronicle".

Changes:

- Core:

  - OpenCL tensors are now available! However Arraymancer will naively select the first backend available. It can be CPU, it can be GPU. They support basic and broadcasted operations (Addition, matrix multiplication, elementwise multiplication, ...)

  - Addition of `argmax` and `argmax_max` procs.

- Datasets:

  - Loading the MNIST dataset from http://yann.lecun.com/exdb/mnist/

  - Reading and writing from CSV

- Linear algebra:

  - Least squares solver

  - Eigenvalues and eigenvectors decomposition for symmetric matrices

- Machine Learning

  - Principal Component Analysis (PCA)

- Statistics

  - Computation of covariance matrices

- Neural network

  - Introduction of a short intuitive syntax to build neural networks! (A blend of Keras and PyTorch).

  - Maxpool2D layer

  - Mean Squared Error loss

  - Tanh and softmax activation functions

- Examples and tutorials

  - Digit recognition using Convolutional Neural Net

  - Teaching Fizzbuzz to a neural network

- Tooling

  - Plotting tensors through Python

Several updates linked to Nim rapid development and several bugfixes.

Wizard's First Rule

This release is named after "Wizard's First Rule" (1994), the first book of Terry Goodkind's masterpiece "The Sword of Truth".

I am very excited to announce the third release of Arraymancer which includes numerous improvements, features and (unfortunately!) breaking changes.

Warning ⚠: ALL deprecated procs will be removed next release due to deprecated spam and to reduce maintenance burden.

Changes:

- Very Breaking

  - Tensors use reference semantics now: `let a = b` will share data by default and copies must be made explicitly.

    - There is no need to use `unsafe` proc to avoid copies especially for slices.

    - Unsafe procs are deprecated and will be removed leading to a smaller and simpler codebase and API/documentation.

    - Tensors and CudaTensors now work the same way.

    - Use `clone` to do copies.

    - Arraymancer now works like Numpy and Julia, making it easier to port code.

    - Unfortunately it makes it harder to debug unexpected data sharing.
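The practical consequence of reference semantics is the aliasing behaviour Python users know from lists and NumPy arrays: assignment binds a second name to the same buffer, and an explicit copy is needed for independent data. A Python analogy (not Arraymancer code; `copy.deepcopy` plays the role that `clone` plays above):

```python
import copy

b = [[1, 2], [3, 4]]

a = b                  # reference semantics: a and b share the same data
a[0][0] = 99
shared = b[0][0]       # the write through `a` is visible through `b`

c = copy.deepcopy(b)   # explicit copy, analogous to `clone`
c[0][0] = -1
independent = b[0][0]  # `b` is unaffected by writes to the copy

print(shared, independent)
```

Both values printed are 99: the first because `a` and `b` alias the same data, the second because the copy `c` no longer does.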

- Breaking (?)

  - The max number of dimensions supported has been reduced from 8 to 7 to reduce cache misses. Note, in deep learning the max number of dimensions needed is 6 for 3D videos: [batch, time, color/feature channels, Depth, Height, Width]

- Documentation

  - Documentation has been completely revamped and is available here: https://mratsim.github.io/Arraymancer/

- Huge performance improvements

  - Use non-initialized seq

  - shape and strides are now stored on the stack

  - optimization via inlining all higher-order functions

    - `apply_inline`, `map_inline`, `fold_inline` and `reduce_inline` templates are available.

  - all higher order functions are parallelized through OpenMP

  - integer matrix multiplication uses SIMD, loop unrolling, restrict and 64-bit alignment

  - prevent false sharing/cache contention in OpenMP reduction

  - remove temporary copies in several proc

  - runtime checks/exception are now behind `unlikely`

  - `A*B + C` and `C+=A*B` are automatically fused in one operation

  - do not initialize result tensors

- Neural network:

  - Added `linear`, `sigmoid_cross_entropy`, `softmax_cross_entropy` layers

  - Added Convolution layer

- Shapeshifting:

  - Added `unsqueeze` and `stack`

- Math:

  - Added `min`, `max`, `abs`, `reciprocal`, `negate` and in-place `mnegate` and `mreciprocal`

- Statistics:

  - Added variance and standard deviation

- Broadcasting

  - Added `.^` (broadcasted exponentiation)

- Cuda:

  - Support for convolution primitives: forward and backward

  - Broadcasting ported to Cuda

- Examples

  - Added perceptron learning `xor` function example

- Precision

  - Arraymancer uses `ln1p` (ln(1 + x)) and `exp1m` procs (exp(x) - 1) where appropriate to avoid catastrophic cancellation
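The cancellation problem is easy to reproduce with the equivalent functions in Python's standard library, `math.log1p` and `math.expm1`, which compute ln(1 + x) and exp(x) - 1. For a tiny x the naive formulas lose every significant digit, while the dedicated functions stay accurate. This illustrates the numerical issue only, not Arraymancer's internals.

```python
import math

x = 1e-18  # far below double-precision epsilon (~2.2e-16)

naive_log = math.log(1.0 + x)   # 1.0 + x rounds to 1.0, so this is 0.0
stable_log = math.log1p(x)      # keeps the leading term x

naive_exp = math.exp(x) - 1.0   # exp(x) rounds to 1.0, so this is 0.0
stable_exp = math.expm1(x)      # keeps the leading term x

print(naive_log, stable_log)
print(naive_exp, stable_exp)
```

Such expressions show up in losses like sigmoid and softmax cross-entropy, where the argument is often very close to zero.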

- Deprecated

  - Version 0.3.1 with the ALL deprecated proc removed will be released in a week. Due to issue nim-lang/Nim#6436, even using non-deprecated proc like `zeros`, `ones`, `newTensor` you will get a deprecated warning.

  - `newTensor`, `zeros`, `ones` arguments have been changed from `zeros([5, 5], int)` to `zeros[int]([5, 5])`

  - All `unsafe` proc are now default and deprecated.

The Color of Magic

This release is named after "The Colour of Magic" (1983), the first book of Terry Pratchett's masterpiece "Discworld".

I am very excited to announce the second release of Arraymancer which includes numerous improvements blablabla ...

Without further ado:

- Community

  - There is a Gitter room!

- Breaking

  - `shallowCopy` is now `unsafeView` and accepts `let` arguments

  - Element-wise multiplication is now `.*` instead of `|*|`

  - vector dot product is now `dot` instead of `.*`

- Deprecated

  - All tensor initialization proc have their `Backend` parameter deprecated.

  - `fmap` is now `map`

  - `agg` and `agg_in_place` are now `fold` and nothing (too bad!)

- Initial support for Cuda !!!

  - All linear algebra operations are supported

  - Slicing (read-only) is supported

  - Transforming a slice to a new contiguous Tensor is supported

- Tensors

  - Introduction of `unsafe` operations that work without copy: `unsafeTranspose`, `unsafeReshape`, `unsafebroadcast`, `unsafeBroadcast2`, `unsafeContiguous`

  - Implicit broadcasting via `.+, .*, ./, .-` and their in-place equivalent `.+=, .-=, .*=, ./=`

  - Several shapeshifting operations: `squeeze`, `at` and their `unsafe` version.

  - New property: `size`

  - Exporting: `export_tensor` and `toRawSeq`

  - Reduce and reduce on axis

- Ecosystem:

  - I express my deep thanks to @edubart for testing Arraymancer, contributing new functions, and improving its overall performance. He built arraymancer-demos and arraymancer-vision, check those out: you can load images in Tensor and do logistic regression on those!

Magician Apprentice

This release is named after "Magician: Apprentice" (1982), the first book of Raymond E. Feist's masterpiece "The Riftwar Cycle".

First public release.

Include:

- Slicing,

- basic linear algebra operations,

- reshaping, broadcasting, concatenating,

- universal function

- iterators

- ...

See README