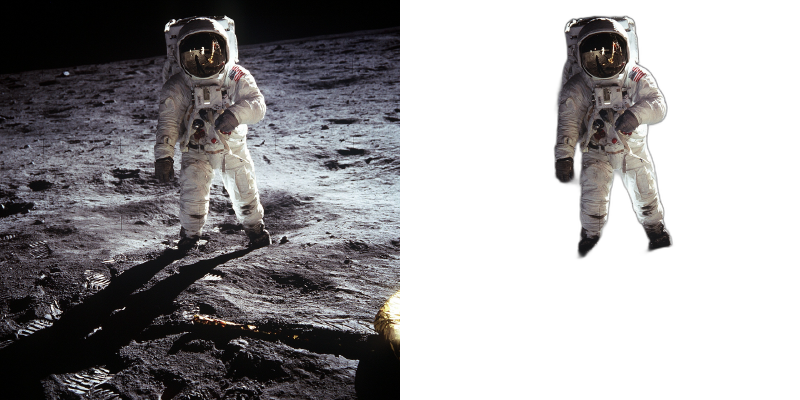

BackgroundRemover is a command line tool to remove the background from images and videos using AI, made by nadermx to power https://BackgroundRemoverAI.com. If you wonder why it was made, read this short blog post.

- python >= 3.6
- python3.6-dev (or whatever version of Python you use)
- torch and torchvision stable version (https://pytorch.org)
- ffmpeg 4.4+
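A minimal sketch for checking the Python and ffmpeg minimums listed above before installing. It assumes `ffmpeg -version` prints a banner beginning with `ffmpeg version X.Y`, which holds for release builds. Git snapshot builds (for example `ffmpeg version N-...`) are reported as unknown. `parse_ffmpeg_version` and `check_requirements` are hypothetical helpers, not part of backgroundremover, and torch is checked separately.

```python
import re
import shutil
import subprocess
import sys
from typing import Optional, Tuple


def parse_ffmpeg_version(banner: str) -> Optional[Tuple[int, int]]:
    """Extract (major, minor) from `ffmpeg -version` output, or None if unrecognised."""
    # Release builds print e.g. "ffmpeg version 4.4.2-..." or "ffmpeg version n6.1"
    match = re.search(r"ffmpeg version n?(\d+)\.(\d+)", banner)
    if match is None:
        return None  # e.g. git snapshot builds such as "ffmpeg version N-109468-g..."
    return int(match.group(1)), int(match.group(2))


def check_requirements() -> dict:
    """Report whether Python >= 3.6 and ffmpeg >= 4.4 are available."""
    report = {
        "python_ok": sys.version_info >= (3, 6),
        "ffmpeg_version": None,
        "ffmpeg_ok": False,
    }
    ffmpeg = shutil.which("ffmpeg")  # None if ffmpeg is not on PATH
    if ffmpeg is not None:
        # stdout=PIPE / universal_newlines keep this compatible with Python 3.6
        result = subprocess.run([ffmpeg, "-version"], stdout=subprocess.PIPE,
                                stderr=subprocess.PIPE, universal_newlines=True)
        version = parse_ffmpeg_version(result.stdout)
        report["ffmpeg_version"] = version
        report["ffmpeg_ok"] = version is not None and version >= (4, 4)
    return report


if __name__ == "__main__":
    print(check_requirements())
```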

To clarify, you must install both Python and the dev package that matches the Python version you installed, e.g. python3.10-dev with python3.10, or python3.8-dev with python3.8.
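The matching rule above can be sketched in a few lines of Python that derive the dev package name from the interpreter running the script. This assumes Debian/Ubuntu-style package names such as python3.10-dev, as used in the apt command in this README; other distributions name the package differently. `matching_dev_package` is a hypothetical helper.

```python
import sys


def matching_dev_package(version_info=sys.version_info) -> str:
    """Return the Debian/Ubuntu-style dev package name for a Python version.

    Naming convention assumed: python<major>.<minor>-dev, e.g. python3.10-dev.
    """
    return "python{}.{}-dev".format(version_info[0], version_info[1])


if __name__ == "__main__":
    # Print an apt command for ffmpeg plus the dev package for this interpreter
    print("sudo apt install ffmpeg " + matching_dev_package())
```

Run with python3.10, this prints `sudo apt install ffmpeg python3.10-dev`.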

Go to https://pytorch.org, scroll down to the INSTALL PYTORCH section, and follow the instructions.

For example:

- PyTorch Build: Stable (1.7.1)
- Your OS: Windows
- Package: Pip
- Language: Python
- CUDA: None
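After following the PyTorch instructions, a quick sanity check that torch and torchvision import correctly and whether CUDA is usable. This is a minimal sketch, not part of backgroundremover; `torch_status` is a hypothetical helper. With `CUDA: None` selected, as in the example above, `cuda` is expected to be False.

```python
import importlib.util


def torch_status() -> dict:
    """Report installed torch/torchvision versions and CUDA availability."""
    status = {"torch": None, "torchvision": None, "cuda": False}
    # find_spec returns None when a package is not installed, avoiding ImportError
    if importlib.util.find_spec("torch") is not None:
        import torch
        status["torch"] = str(torch.__version__)
        status["cuda"] = bool(torch.cuda.is_available())
    if importlib.util.find_spec("torchvision") is not None:
        import torchvision
        status["torchvision"] = str(torchvision.__version__)
    return status


if __name__ == "__main__":
    print(torch_status())
```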

To install ffmpeg and python-dev on Debian/Ubuntu:

sudo apt install ffmpeg python3.6-dev

To install backgroundremover from PyPI:

pip install --upgrade pip

pip install backgroundremover

Please note that the first time you run the program, it checks whether you have the u2net models; if you do not, it downloads them from this repo.

It is also possible to run this without installing it via pip: clone the git repository locally, start a virtual environment, install the requirements, and run:

python -m backgroundremover.cmd.cli -i "video.mp4" -mk -o "output.mov"

and for Windows:

python.exe -m backgroundremover.cmd.cli -i "video.mp4" -mk -o "output.mov"

To run it with Docker instead, clone the repository, build the image, and define an alias:

git clone https://github.com/nadermx/backgroundremover.git

cd backgroundremover

docker build -t bgremover .

alias backgroundremover='docker run -it --rm -v "$(pwd):/tmp" bgremover:latest'

Remove the background from a local image file:

backgroundremover -i "/path/to/image.jpeg" -o "output.png"

Sometimes it is possible to achieve better results by turning on alpha matting. Example:

backgroundremover -i "/path/to/image.jpeg" -a -ae 15 -o "output.png"

Change the model for different background removal methods: u2netp, u2net, or u2net_human_seg:

backgroundremover -i "/path/to/image.jpeg" -m "u2net_human_seg" -o "output.png"

Remove the background from a video and make a transparent .mov:

backgroundremover -i "/path/to/video.mp4" -tv -o "output.mov"

Remove the background from a local video and overlay it over another video:

backgroundremover -i "/path/to/video.mp4" -tov "/path/to/videtobeoverlayed.mp4" -o "output.mov"

Remove the background from a local video and overlay it over an image:

backgroundremover -i "/path/to/video.mp4" -toi "/path/to/videtobeoverlayed.mp4" -o "output.mov"

Remove the background from a video and make a transparent GIF:

backgroundremover -i "/path/to/video.mp4" -tg -o "output.gif"

Make a matte key file for Premiere:

backgroundremover -i "/path/to/video.mp4" -mk -o "output.matte.mp4"

Change the frame rate of the video (default is 30):

backgroundremover -i "/path/to/video.mp4" -fr 30 -tv -o "output.mov"

Set the total number of frames to process (default is -1, i.e. remove the background from the full video):

backgroundremover -i "/path/to/video.mp4" -fl 150 -tv -o "output.mov"

Change the GPU batch size for the video (default is 1):

backgroundremover -i "/path/to/video.mp4" -gb 4 -tv -o "output.mov"

Change the number of workers processing the video (default is 1):

backgroundremover -i "/path/to/video.mp4" -wn 4 -tv -o "output.mov"

Change the model for different background removal methods (u2netp, u2net, or u2net_human_seg) and limit the frames to 150:

backgroundremover -i "/path/to/video.mp4" -m "u2net_human_seg" -fl 150 -tv -o "output.mov"

To use it from Python, call remove() on the raw image bytes:

from backgroundremover.bg import remove

def remove_bg(src_img_path, out_img_path):
    model_choices = ["u2net", "u2net_human_seg", "u2netp"]
    # Read the source image as raw bytes
    with open(src_img_path, "rb") as f:
        data = f.read()
    # Remove the background with the first model, refining edges with alpha matting
    img = remove(data, model_name=model_choices[0],
                 alpha_matting=True,
                 alpha_matting_foreground_threshold=240,
                 alpha_matting_background_threshold=10,
                 alpha_matting_erode_structure_size=10,
                 alpha_matting_base_size=1000)
    # Write the processed image data to the output path
    with open(out_img_path, "wb") as f:
        f.write(img)
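The remove_bg function above handles a single file. A minimal sketch for processing a whole folder with the same remove() call, assuming remove() accepts `model_name` as a keyword (as in the example above) and returns encoded image data suitable for a .png file, matching the output.png examples. `remove_bg_dir` and its `remove_fn` parameter are hypothetical; `remove_fn` exists only so the loop can be exercised without loading a model.

```python
from pathlib import Path


def remove_bg_dir(src_dir, out_dir, model_name="u2net", remove_fn=None,
                  exts=(".jpg", ".jpeg", ".png")):
    """Remove the background from every image in src_dir, writing PNGs to out_dir.

    Returns the list of output paths written, in sorted input order.
    """
    if remove_fn is None:
        # Imported lazily so the helper can be tested without the package installed
        from backgroundremover.bg import remove as remove_fn
    src, out = Path(src_dir), Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for path in sorted(src.iterdir()):
        if not path.is_file() or path.suffix.lower() not in exts:
            continue  # skip subfolders and non-image files
        result = remove_fn(path.read_bytes(), model_name=model_name)
        # Assumption: the result is PNG-encoded, so keep transparency via .png
        out_path = out / (path.stem + ".png")
        out_path.write_bytes(result)
        written.append(out_path)
    return written
```

For example, `remove_bg_dir("photos", "cutouts", model_name="u2net_human_seg")`. Use an output folder different from the source folder: otherwise an input such as x.png would be overwritten by its own result.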

Todo:

- convert logic from video to image to utilize more GPU on image removal
- clean up documentation a bit more
- add the ability to adjust images or videos and give them as feedback to datasets
- add real-time background removal for videos, for streaming
- finish flask server API
- add the ability to use models other than u2net, i.e. your own
- other

Pull requests: accepted.

If you like this library, give a link to our project BackgroundRemoverAI.com or this git repository, telling people that you like it or use it.

We made it our own package after merging together parts of other projects and adding a few features of our own, some developed by posting parts as bounty questions on Super User. We were also asked on Hacker News to open source the image part, so we decided to add video support and a bit more.

- https://arxiv.org/pdf/2005.09007.pdf

- https://github.com/NathanUA/U-2-Net

- https://github.com/pymatting/pymatting

- https://github.com/danielgatis/rembg

- https://github.com/ecsplendid/rembg-greenscreen

- https://superuser.com/questions/1647590/have-ffmpeg-merge-a-matte-key-file-over-the-normal-video-file-removing-the-backg

- https://superuser.com/questions/1648680/ffmpeg-alphamerge-two-videos-into-a-gif-with-transparent-background/1649339?noredirect=1#comment2522687_1649339

- https://superuser.com/questions/1649817/ffmpeg-overlay-a-video-after-alphamerging-two-others/1649856#1649856

- Copyright (c) 2021-present Johnathan Nader

- Copyright (c) 2020-present Lucas Nestler

- Copyright (c) 2020-present Dr. Tim Scarfe

- Copyright (c) 2020-present Daniel Gatis

Code licensed under the MIT License. Models licensed under the Apache License 2.0.