-

Notifications

You must be signed in to change notification settings - Fork 1k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Stage 3 of dBFT (Commit) #320

Conversation

|

Please @belane, @igormcoelho and @vncoelho review this too |

|

Hi, @shargon, thanks for this. I checked the code (looks precise to the point) and I got that the |

|

|

||

| if (payload.ValidatorIndex >= context.Validators.Length) return; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Check if payload validator Index could had changed on RequestGetBlocks()

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I move this validation to the begining of the method

https://github.com/neo-project/neo/pull/320/files#diff-0285c1c12d1d492897a99ffe07f9fed9R233

|

@vncoelho is exactly this paper , you are a smart guy :) We need the comit phase for ensure that all blocks have the same hash before they spread the block to the network |

neo/Consensus/ConsensusService.cs

Outdated

| { | ||

| if (context.State.HasFlag(ConsensusState.BlockSent)) return; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Is this the "jump of the cat", Shargon? aheuahueahuea

neo/Consensus/ConsensusService.cs

Outdated

| { | ||

| if (context.State.HasFlag(ConsensusState.BlockSent)) return; | ||

| if (!context.TryToCommit(payload, message)) return; | ||

|

|

||

| if (context.Signatures.Count(p => p != null) >= context.M && context.TransactionHashes.All(p => context.Transactions.ContainsKey(p))) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Is this double check necessary? Because CheckSignatures() already checked it and called OnCommitAgreement.

neo/Consensus/ConsensusContext.cs

Outdated

|

|

||

| if (Commits[payload.ValidatorIndex] != null) | ||

| { | ||

| return false; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Let's be consistent with your single line ifs. I see some with curly bracket and some without.

neo/Consensus/ConsensusService.cs

Outdated

| { | ||

| if (!context.CommitAgreementSent) | ||

| { | ||

| if (context.Signatures.Count(p => p != null) >= context.M && context.TransactionHashes.All(p => context.Transactions.ContainsKey(p))) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

merge if loops

neo/Consensus/ConsensusContext.cs

Outdated

| @@ -28,21 +28,63 @@ internal class ConsensusContext | |||

| public byte[] ExpectedView; | |||

| public KeyPair KeyPair; | |||

|

|

|||

| private UInt256[] Commits; | |||

| private Block _header = null; | |||

| public bool CommitAgreementSent = false; | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This bool looks like it should belong in ConsensusState

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

That is true, Snowy. It looks like.

|

Haduken. Good moves, Snowy and Shargon. |

|

@shargon brothers, I am confused... If yes, I think that the solution may be not this one. |

|

This phase (3) is not implemented already, this is for ensure that the other backup nodes have enough signatures for spread the block, before you spread the block. Then you can't spread a block alone, change view, and fork |

|

Is compatible with your PR. Because maybe this delay the network. We should add more improves (like you told me) for example:

|

|

It looks good, but introduces more phases for consensus and will have penalization in the consensus time what can make the change of view more frequent. we have to do more intense tests because in a local laboratory it will not reflect reality and commit phase will be quick. *It may be a good idea to increase the timer 2 seconds when the node reaches the commit phase? It may deserve wait two more seconds than change your view and start the whole process again. what do you think? |

|

@shargon, thanks for this nice explanation, ma frem. @belane, |

|

@vncoelho Something similar to Aardvark can be implemented. Aardvark requires the primary replica to achieve 90% of the throughput achieved during the previous |

|

Thanks, @shargon, the magister, for the patience and great explanations. @toghrulmaharramov, I will try to read it during these next days, thanks for the tip. |

|

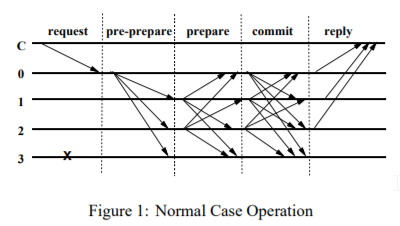

I think just fully implement the pBFT algorithm will solve this problem. |

That's it! |

|

I think the client and replicas model can be used here just like pBFT (But the details protocol should strictly follow the pBFT model, not like what we did before). If that, I think we can get a formal security proof of dBTF. |

|

@edwardz246003 PBFT was not created for the decentralized platforms as it only requires a leader change in case the majority of nodes (2f + 1) deem the leader to be faulty or Byzantine. The protocol can be very slow in a decentralized network plus it doesn't solve the issue of a Byzantine node propagating a different "valid" block. |

|

@erikzhang if you will, i could expose here the flow chart for the fork, Because together is better. |

That is good. |

|

I will expose here the current problem. All of this could be produced by network errors, IS DIFFICULT, but sometimes happens. For this example we only have 4 consensus nodes, of course, is hard with more nodes. Step 1The first step for produce the fork is the hard part:

Result:

Step 2The second step is automatic:

Result:

|

|

I think that the solution for all problems, is that the real signature only should be send on commit message |

Yes. But if we allow the view to be changed during the commit phase, it may also cause a fork. |

|

Maybe we should change the view if we receive more than X view change messages |

|

Consider a consensus network of 7 nodes with 2 malicious nodes. We name the nodes as A, B, C, D, E, F, and G, which A and B are malicious nodes, and the others are good ones. When A is primary, it send Since B is a malicious node, it will send But A and B can hold their commit messages and the After that, A and B can release In order to prevent this attack, we must prohibit view change during the commit phase. In this case, [E, F, G] does not change view, so [A, B, C, D] cannot generate a fork block. |

|

First of all, as Erik said, thanks for the eagle eyes of @edwardz246003 and his team. But, as always, it seems that you are proposing the right thing again. If this solves the problem, it will be better than requiring nodes to double checking Please, check if the following reasoning is right. In summary:

However, the only problem I see, that is not a big problem, is:

Maybe, as usual, I missed something...aehauheau Is this right? |

This won't result in a block fork. |

|

Yes, you are right, it won't result in a fork, it would be just a case where network fails (delays, etc..) make possible "f-1 malicious nodes stop block generation". Which is not a problem, because these delays should not, in essence, be really considered a Byzantine fault. I think it is not the point for this discussion, but I wrote this as I way for understanding and documenting the case (if I understood the steps correctly). :D |

When [A, B, C, D, G] create a bock, [E, F] will resume normal automatically. |

|

You are right, if they produce a block, I think that [E,F] should resume normal automatically. But there was this other case considering that [A] was a Malicious and did not want to sign anymore, then, [B, C, D, G] would not be able to create a block anymore. This was just an insight, I think we do not need to worry about this now. But, maybe, with this line of reasoning we find something else... 🗡️ aehuaheuaea |

You are right. In the case of a poor network, a malicious node can stop the consensus of the entire network. In the above case, G changes the view because it cannot communicate with [E, F]. But this is better than the block fork, and if there is a network failure, it is acceptable to temporarily stop the consensus. |

|

I also agree with you, Erik. As I said, this is a discussion for a brainstorming, maybe with this we can insight the future. |

|

With a bad guy in commit phase, you only could produce the current situation, don't produce the fork, you need all the requirements mentionen here #320 (comment) (and the bad guy) to produce the fork, so a bad guy is not enought for produce the fork. |

|

You are right, Sharrrgon. You are a good guy, Shargon, not the bad node guy 🗡️ @shargon, maybe it is better to implement these changes before merge, right? Or do you think that it should be merged and then modified? If you need any help (and if I am able to contribute) let us know. |

|

Such a nice discussion here! At least, even if the current commit phase is kept and no change views are allowed after this point, it guarantees that coordinated network problems won't cause forks anymore (which is rare), unless a bad agent tries to force this fork (by submitting the hash before as @edwardz246003 realized), but this compromises its credibility during the voting process. We still can go to a situation where coordinated hardware issues may cause a fork (by losing the state) together with network issues. This is possible to happen, but at least, I don't see that happening in a near future :) I mean, problem is not fully solved, but it's a huge evolution already, congratulations again Shargon. |

|

The next week we will start porting this to AKKA model :) |

|

Following @erikzhang and @vncoelho 's example, I have noticed an issue with current logic of new commit phase.

[A, B, C, D] will not enter commit phase, but request change view. NOT enough votes to change view. [A, B, E, F, G] will enter commit phase, but [A, B] may hold their signatures for commit phase. Then [E, F, G]'s commit votes are not enough to publish this block, and [E, F, G] are not allowed to change view. In this situation, the network stops producing block which I think is a problem, right? Please see the following image. |

|

Closed to continue in #422 |

TODO:

Fixes #193

Fixes neo-project/neo-node#219