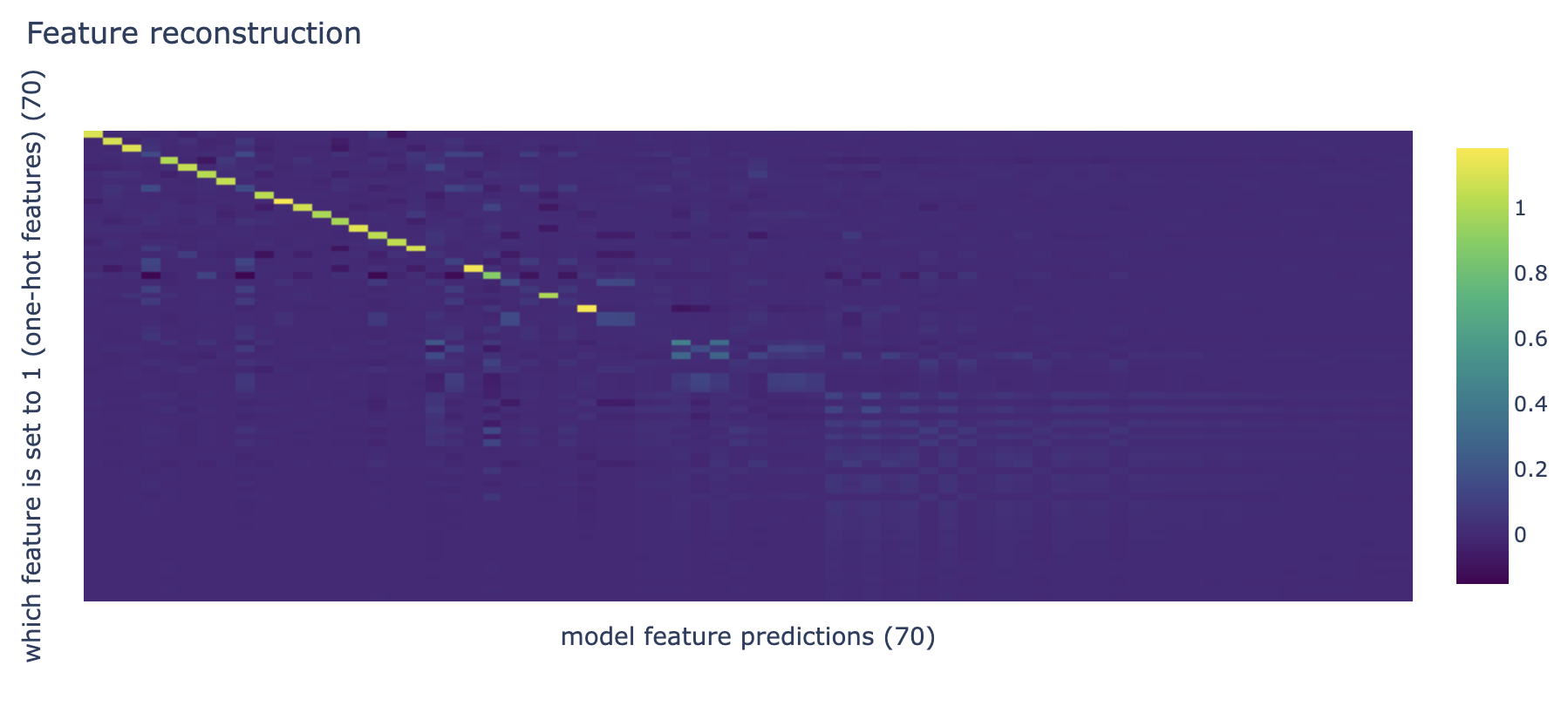

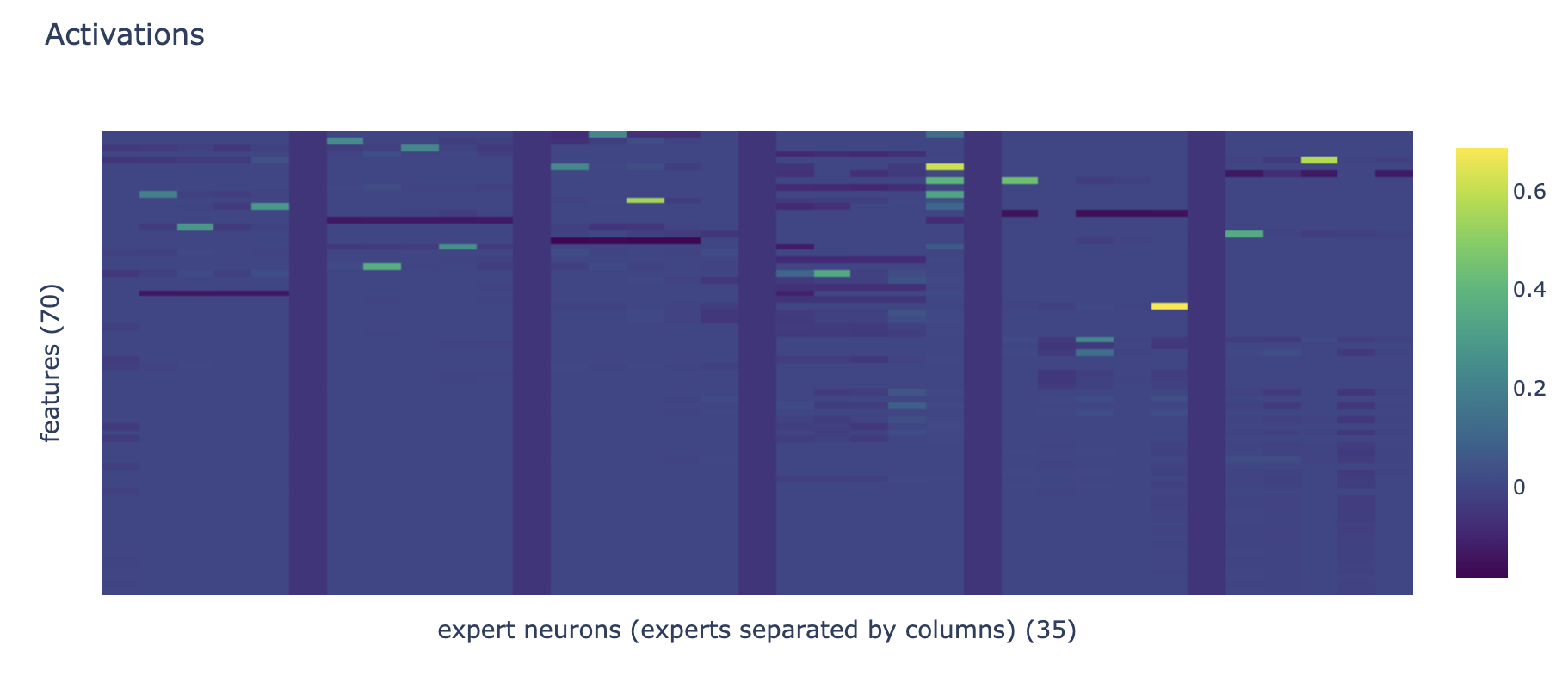

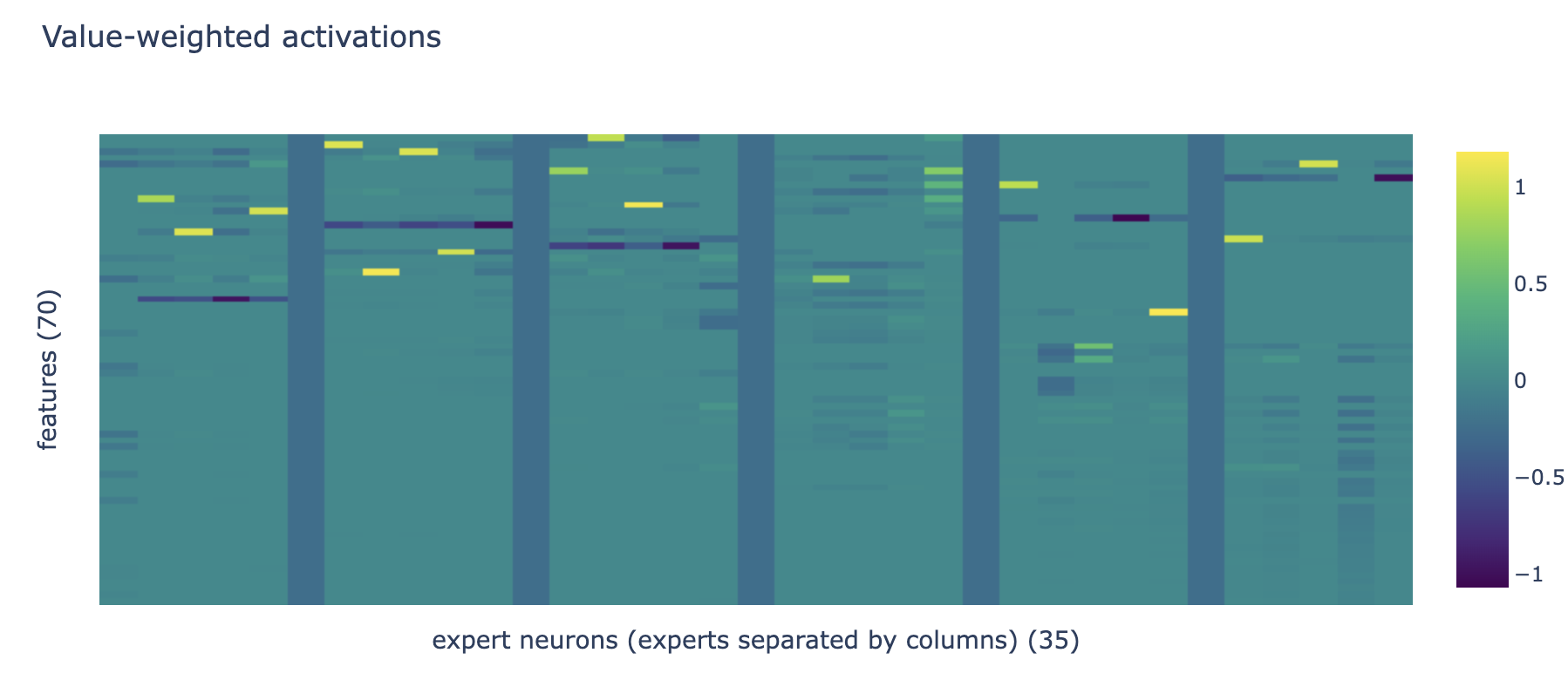

The figures above show the result of using the SoLU MoE layer as an autoencoder for a sparse-feature toy model, fairly similar to the one in [Toy Models of Superposition](https://transformer-circuits.pub/2022/toy_model/index.html).

Note that some features are stored in negative SoLU neuron activations. SoLU, defined as x · softmax(x), can output negative values, unlike ReLU.
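The NumPy sketch below makes the setup concrete under simplifying assumptions. It uses a dense autoencoder with a SoLU hidden layer, SoLU(x) = x · softmax(x), applied to sparse features. Each feature is zero with probability `sparsity` and otherwise uniform on [0, 1), as in the toy-model paper. The MoE routing and the training loop are omitted, and the names `solu`, `sample_sparse_features`, and `SoLUAutoencoder` are hypothetical. This is not the layer that produced the figures. It only illustrates how SoLU neurons can carry negative values.

```python
import numpy as np


def solu(x: np.ndarray) -> np.ndarray:
    """SoLU activation: x * softmax(x), softmax over the last (neuron) axis."""
    z = x - x.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return x * e / e.sum(axis=-1, keepdims=True)


def sample_sparse_features(rng, batch, n_features, sparsity):
    """Each feature is 0 with probability `sparsity`, otherwise Uniform[0, 1)."""
    values = rng.uniform(0.0, 1.0, size=(batch, n_features))
    active = rng.uniform(size=(batch, n_features)) >= sparsity
    return values * active


class SoLUAutoencoder:
    """Hypothetical dense autoencoder with a SoLU bottleneck (no MoE routing)."""

    def __init__(self, rng, n_features, n_hidden):
        scale = 1.0 / np.sqrt(n_features)
        self.W_enc = rng.normal(0.0, scale, size=(n_features, n_hidden))
        self.b_enc = np.zeros(n_hidden)
        self.W_dec = rng.normal(0.0, scale, size=(n_hidden, n_features))
        self.b_dec = np.zeros(n_features)

    def hidden(self, x):
        # SoLU neuron activations; these can be negative
        return solu(x @ self.W_enc + self.b_enc)

    def forward(self, x):
        # Linear decoder back to feature space
        return self.hidden(x) @ self.W_dec + self.b_dec

    def loss(self, x):
        # Mean squared reconstruction error
        return float(np.mean((self.forward(x) - x) ** 2))


rng = np.random.default_rng(0)
model = SoLUAutoencoder(rng, n_features=20, n_hidden=5)
x = sample_sparse_features(rng, batch=1024, n_features=20, sparsity=0.9)
h = model.hidden(x)
print("reconstruction MSE (untrained):", model.loss(x))
print("fraction of negative hidden activations:", float(np.mean(h < 0)))

# When every pre-activation is negative, softmax still sums to 1, so the least
# negative neuron keeps a sizeable negative output (about -0.73 here).
print(solu(np.array([-1.0, -2.0])))
```

The last line shows the key point. A negative input is only strongly suppressed when other neurons in the same layer are more positive, so a SoLU neuron has room to represent a feature with its sign. Training is left out. In practice, one would minimize `loss` with an autodiff framework.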

For more info, see these papers: