By A Nguyen et al. - ICRA 2018

- TensorFlow >= 1.0 (used 1.1.0)

- Clone the repository to your `$VC_Folder`

- We train the network using the IIT-V2C dataset

- You can extract the features for each frame of the input videos using any network (e.g., VGG, ResNet, etc.)

- For a quick start, the pre-extracted features with ResNet50 are available here.

- Extract the file you downloaded to `$VC_Folder/data`

- Start training:

`python train.py` in `$VC_Folder/main`

- Predict:

`python predict.py` in the `$VC_Folder/main` folder

- Prepare the results for evaluation:

`python prepare_evaluation_format.py` in the `$VC_Folder/evaluation` folder

- Evaluate:

`python cocoeval.py` in the `$VC_Folder/evaluation` folder
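
The training, prediction, and evaluation steps above can be chained from a single script. The following is a minimal sketch, not part of the repository: the names `pipeline_steps`, `run_pipeline`, and `dry_run` are hypothetical, and it assumes that `$VC_Folder` is set as an environment variable and that each script is run from inside its own folder with no extra arguments.

```python
import os
import subprocess


def pipeline_steps(vc_folder):
    """Return (working_directory, command) pairs for the steps listed above."""
    main_dir = os.path.join(vc_folder, "main")
    eval_dir = os.path.join(vc_folder, "evaluation")
    return [
        (main_dir, ["python", "train.py"]),                      # start training
        (main_dir, ["python", "predict.py"]),                    # predict
        (eval_dir, ["python", "prepare_evaluation_format.py"]),  # prepare results
        (eval_dir, ["python", "cocoeval.py"]),                   # evaluate
    ]


def run_pipeline(vc_folder, dry_run=False):
    """Run each step in order; stop at the first step that fails."""
    for cwd, cmd in pipeline_steps(vc_folder):
        print("[{}] {}".format(cwd, " ".join(cmd)))
        if not dry_run:
            # check_call raises CalledProcessError on a non-zero exit code.
            subprocess.check_call(cmd, cwd=cwd)


if __name__ == "__main__":
    # Assumption: VC_Folder is exported in the shell; falls back to the current directory.
    # dry_run=True only prints the commands; pass dry_run=False to execute them.
    run_pipeline(os.environ.get("VC_Folder", "."), dry_run=True)
```

With `dry_run=True` the script only prints each command and the folder it would run in, which is a cheap way to check the paths before starting a long training run.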

If you find this code useful in your research, please consider citing:

@inproceedings{nguyen2018translating,

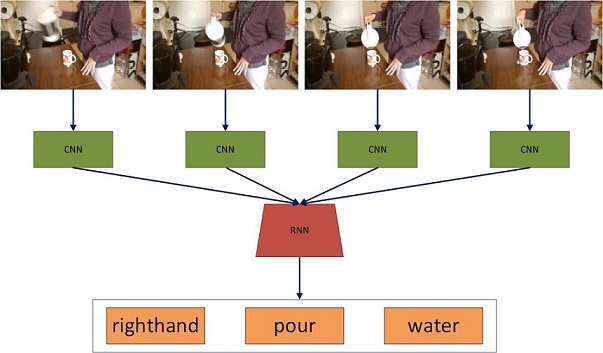

title={Translating videos to commands for robotic manipulation with deep recurrent neural networks},

author={Nguyen, Anh and Kanoulas, Dimitrios and Muratore, Luca and Caldwell, Darwin G and Tsagarakis, Nikos G},

booktitle={2018 IEEE International Conference on Robotics and Automation (ICRA)},

year={2018},

organization={IEEE}

}

If you have any questions or comments, please send an email to anh.nguyen@iit.it