
Model submission for Absolem sensor configuration 1 by CTU-CRAS-Norlab #520




This model generates its XML in the opposite direction from the usual workflow: the "source" is a Xacro file, which can generate valid URDF and almost-valid SDF (the SDF needs to be post-processed by […]). Also, the 3D collision model differs a bit between RViz and Gazebo: the RViz model is meant for laser filtering, so it contains […]


I'm also not sure whether I chose the best approach to modelling the flipper- and laser-controlled joints. If I understand correctly, there are no motors directly in Ignition Gazebo. Should I use […]? In ODE/Gazebo, I model the flippers by directly setting the ODE joint's […]

Hi @peci1, would you prefer to use position control or velocity control for the flippers?


The real robot offers both interfaces, but we use the positional control mode much more often.

Sounds good, we can enable position control for the flippers then. Let me take a look...


I tested your submission and it looks reasonable to me.

I got this error on my side when launching with […]. Do you see it on your end?


To control the flippers by position, you should be able to apply the following changes:

Note that you don't need the […]. Also, I don't recommend enabling both interfaces (position and velocity) at the same time. Choose one; otherwise the two controllers will fight each other.


I get an error finding robot_description on the parameter server; otherwise, just a few minor comments in case you want to change the flipper control.


Thanks for the comments. The robot_description is something I wanted to look into. The problem is simple: I'm running a […]

About the positional control of the flippers: I've already tried the positional controller you suggested, but it doesn't let you limit the maximum speed the error correction uses to reach the setpoint, and in my opinion, modelling this speed reasonably accurately is important. I've played a bit with the PID parameters, but I always ended up with either a "dead" flipper or one that was too fast or oscillating. The robot's interface lets me set an effort limit, set the positional setpoint, and choose the velocity used to reach the setpoint. I have a simple custom proportional controller that uses velocity commands to mimic this behavior, and I'm now trying to port it to Ignition Gazebo.
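For illustration, the idea of a proportional controller that emits velocity commands can be sketched as below. This is a hypothetical, simulator-independent sketch, not the plugin from this PR: the struct name `VelocityLimitedPController` and its fields `kp`, `maxVelocity` and `tolerance` are assumptions. The key point is that the P term is saturated at a chosen speed, so large errors are corrected at exactly that speed instead of whatever a PID tuning happens to produce.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical sketch (not the plugin from this PR): a proportional position
// controller whose output is a joint *velocity* command, saturated so the
// flipper never moves toward its setpoint faster than a chosen speed.
struct VelocityLimitedPController
{
  double kp;           // proportional gain [1/s]; assumed, tune per robot
  double maxVelocity;  // speed used to approach the setpoint [rad/s]
  double tolerance;    // dead band around the setpoint [rad]

  // Returns the velocity command [rad/s] for the current joint position.
  double Command(double setpoint, double position) const
  {
    const double error = setpoint - position;
    if (std::abs(error) < tolerance)
      return 0.0;  // close enough: stop instead of dithering around the target
    const double v = kp * error;
    // Saturate to [-maxVelocity, maxVelocity].
    return std::max(-maxVelocity, std::min(maxVelocity, v));
  }
};
```

With this shape, `maxVelocity` alone dictates the speed for large errors, while `kp` only shapes the final approach, which decouples "how fast" from "how stiff" in a way a plain PID on effort does not.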

The effort limits should be enforced now that this pull request has been merged. We'll need to make a new Ignition Physics 1.x release to ship the fix.


The initial assessment of the CTU-CRAS-Norlab Absolem model is complete; however, there are some issues to resolve before this model can be merged and used in a competitive setting:

Please address the issues noted above and add commits to this pull request as soon as possible. The submissions are expected to be incorporated into the SubT Virtual Testbed pending a successful review.



I fixed the IMU, the collisions and the reported problem with […]. @acschang, what do you mean by adding meshes to lights? All of our lights are LED strips glued to the base link. The camera resolution is set according to the real data we get from the camera; the datasheet apparently rounds the numbers a bit.


I also added a plugin that handles rotation of the lidar (it reverses the rotation direction every time the lidar reaches a given angular limit).
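The reversal logic described above can be sketched roughly as follows. This is a hypothetical illustration, not the plugin's actual code: the class `OscillatingRotation` and its constructor parameters are assumptions, and hooking it into a simulator update loop is not shown. The direction only flips when the limit is reached *while moving toward it*, so an overshoot past the limit does not cause the command to chatter back and forth.

```cpp
#include <cassert>

// Hypothetical sketch of the direction-reversal logic: the lidar spins at a
// constant speed and flips the sign of its velocity command whenever the
// joint angle reaches one of the configured limits.
class OscillatingRotation
{
public:
  OscillatingRotation(double lower, double upper, double speed)
    : lower_(lower), upper_(upper), speed_(speed), direction_(1) {}

  // Called every simulation step with the measured joint angle [rad];
  // returns the velocity command [rad/s] to apply to the lidar joint.
  double Update(double angle)
  {
    // Reverse only when moving toward the limit that was reached, so an
    // overshoot past the limit does not flip the direction back again.
    if (angle >= upper_ && direction_ > 0)
      direction_ = -1;
    else if (angle <= lower_ && direction_ < 0)
      direction_ = 1;
    return direction_ * speed_;
  }

private:
  double lower_, upper_, speed_;
  int direction_;  // +1 toward upper_, -1 toward lower_
};
```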


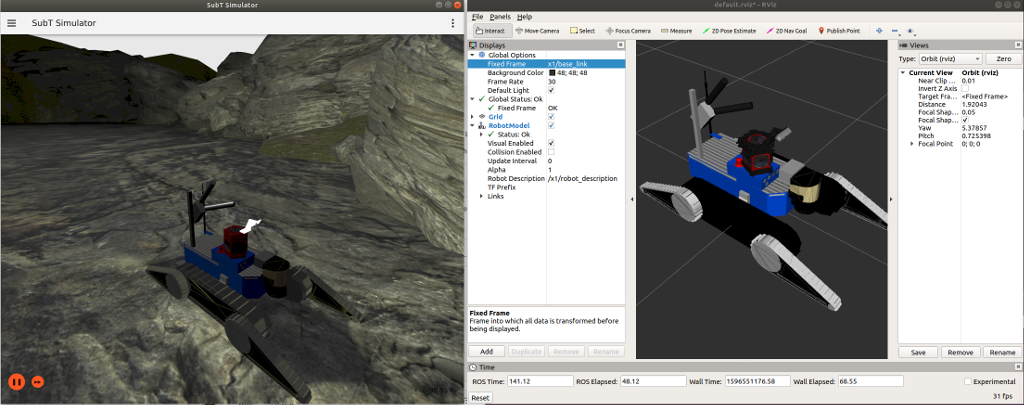

Here's my view from Ignition Gazebo and RViz. Maybe the mesh @acschang refers to is the depth camera and its support. See the image below:


I removed the depth camera support from the SDF because it occluded the camera views. This doesn't happen in our Gazebo 9 simulation, so there's probably some imperfection in the transfer to Ignition; removing the support seemed like a reasonable quick solution. The LED strips are present in the texture: they're the grayish strips near the top of the blue body.


I've committed a first attempt at a flipper control plugin ported from our Gazebo 9 implementation. It is still WIP, but I wanted to share it so that you can comment on the approach. I'm now leaving for a two-day vacation during which I won't be able to respond. Basically, the plugin provides both the positional and the velocity controller APIs but handles them together so that they don't interfere: whichever topic received the most recent command determines whether positional or velocity control is used. There's also a TODO function that should allow changing the maximum allowed effort ("torque") on the fly, so that you can control the compliance of the flippers (the physical robot has this feature, too).
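The "last command wins" arbitration can be sketched as a small state machine. This is a hypothetical sketch under stated assumptions, not the committed plugin: the class `FlipperCommandArbiter`, its callbacks `OnVelocityCmd`/`OnPositionCmd`, and the use of a saturated P law in position mode are all illustrative. `SetMaxEffort` only stores the new limit; forwarding it to the simulator's joint effort limit is not shown.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical arbiter: whichever topic received the most recent command
// decides the control mode, so position and velocity control never fight.
class FlipperCommandArbiter
{
public:
  enum class Mode { Velocity, Position };

  FlipperCommandArbiter(double kp, double maxVelocity, double maxEffort)
    : kp_(kp), maxVelocity_(maxVelocity), maxEffort_(maxEffort) {}

  // Topic callbacks: each one switches the mode to its own interface.
  void OnVelocityCmd(double velocity) { mode_ = Mode::Velocity; velocity_ = velocity; }
  void OnPositionCmd(double position) { mode_ = Mode::Position; setpoint_ = position; }

  // Compliance control: store a new effort limit (applying it to the
  // physics joint is outside this sketch).
  void SetMaxEffort(double effort) { maxEffort_ = std::abs(effort); }

  Mode CurrentMode() const { return mode_; }
  double MaxEffort() const { return maxEffort_; }

  // Velocity command [rad/s] for the joint, given its current position [rad].
  double VelocityCommand(double position) const
  {
    const double v = (mode_ == Mode::Velocity)
                         ? velocity_                         // pass-through
                         : kp_ * (setpoint_ - position);     // P law
    // Both modes share the same speed limit.
    return std::max(-maxVelocity_, std::min(maxVelocity_, v));
  }

private:
  double kp_, maxVelocity_, maxEffort_;
  Mode mode_ = Mode::Velocity;  // start idle: zero velocity command
  double velocity_ = 0.0;
  double setpoint_ = 0.0;
};
```

In this sketch, a position command takes effect immediately even if the previous command was a nonzero velocity, because only the most recent callback determines `mode_`.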


Does anybody know how I could programmatically read the value of […]?

I don't think it's possible right now. We'd need to create Gazebo components for reading the joint's maximum velocity and maximum effort.


Ah, the lights are the white strips in the texture. My apologies for overlooking them while looking for a discrete illumination object. The modifications to the IMU parameters and the fix for the collision when flipped resolve those outstanding issues, and the verification of the exact camera resolution is sufficient. The only remaining issue from the list above is flipper control.

The only flipper with position control enabled is the front left one. You probably forgot to add the other ones to […]. If I send a velocity command and then a position command, the latter is ignored. If I send a velocity command, then a zero velocity command, and then a position command, the position command is executed. Is this expected?


We will adjust the RGBD resolution to 640x480 to match other robots in the repository that also model the RealSense D435. No action is needed on your part.

The missing mesh for the RGBD camera will also need to be resolved. It may be an incorrect reference in the […].

CTU_CRAS_NORLAB_ABSOLEM_SENSOR_CONFIG_2 only differs by breadcrumbs.

This is my first model submission here, and I didn't have all the time I needed to fine-tune the simulation, but it should basically work. Later, I might try to add a positional controller for the flippers and a better controller for the lidar rotation.