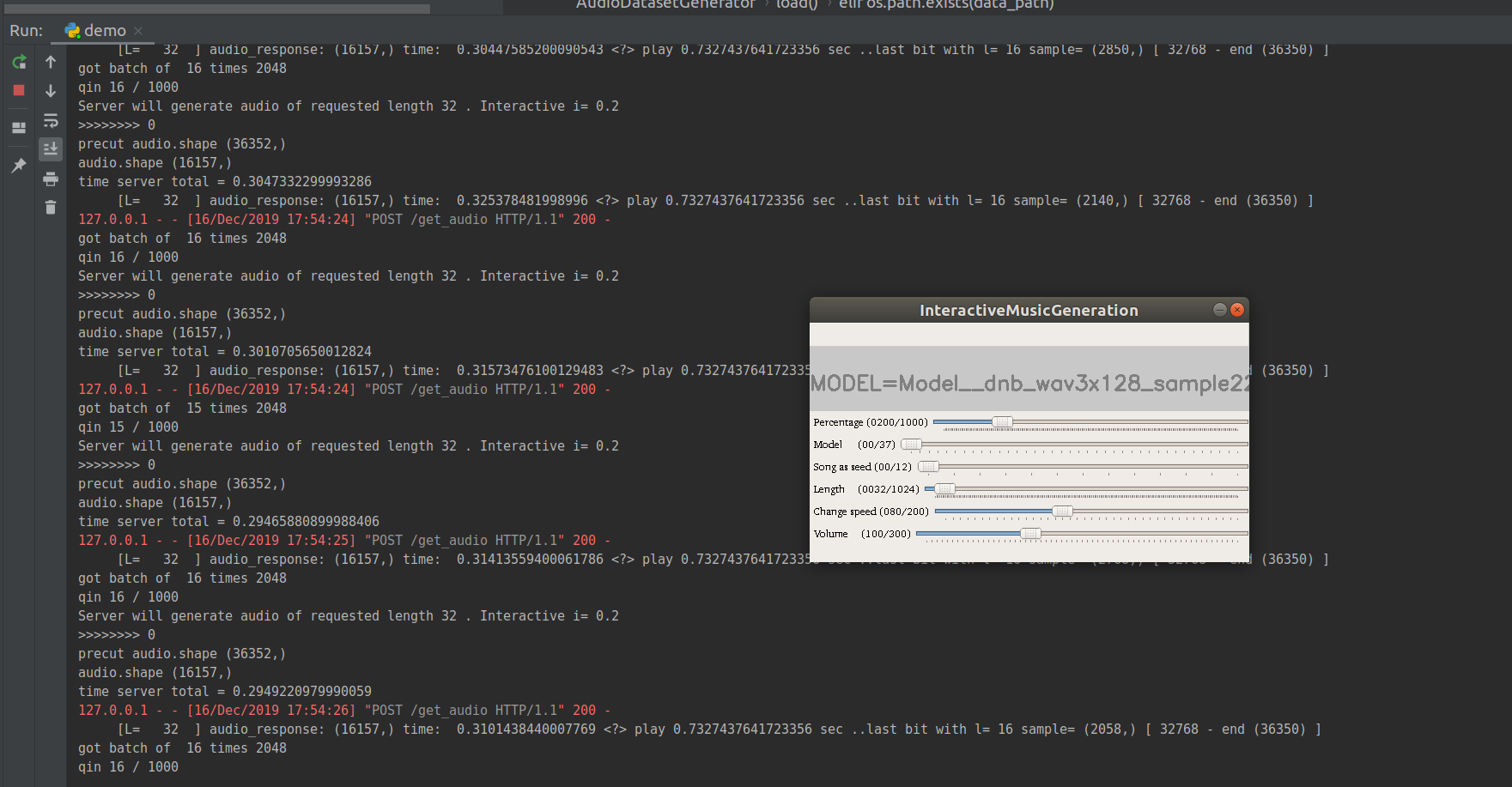

Vitek's interactive music generation project, aiming at real-time speeds and interaction with deep learning!

Ideally, a strong PC with a good GPU acts as the server and any machine (e.g. my potato laptop) connects to it. Alternatively, a Google Cloud Virtual Machine can act as the server (this works, but suffers from limited availability and slow connectivity). Finally, everything can also run on a single machine.

For the easiest setup, see our instructions on running an NVIDIA Docker container with our code: https://hub.docker.com/repository/docker/previtus/demo-lstm-music-gen

The project is a work in progress, so this will likely change and grow ...

- `pip install Pillow numpy opencv-python PyWavelets tqdm slugify`
- `pip install -U Flask`
- `pip install lws==1.2.6`
- `pip install librosa==0.7.2`
- `pip install h5py==2.10.0 keras==2.2.4 tflearn==0.3.2`
- `pip install numba==0.49.1`
- jackd (https://jackaudio.org/) and the Python client library from https://github.com/spatialaudio/jackclient-python/: `sudo apt-get install jack-tools`, then `pip install JACK-Client --user`

- For the GUI, install Qt5 + PyQt5:
  - Qt5: `sudo apt-get install build-essential`, `sudo apt-get install qtcreator`, `sudo apt-get install qt5-default`
  - PyQt5: `pip3 install --user pyqt5`, `sudo apt-get install python3-pyqt5`, `sudo apt-get install pyqt5-dev-tools`, `sudo apt-get install qttools5-dev-tools`

- Put your data into `__music_samples` and `__saved_models`:
  - for example: `__music_samples/mehldau/input.wav`
  - so that you have: `__saved_models/modelBest_Mehldau.tfl` (PS: you can also use https://github.com/Louismac/MAGNet to train your model; just remember to use the same settings for the model architecture)

- Adjust the parameter `WAIT_if_qout_larger_div` (in `client__playbackWithServer.py`) according to your PC's performance (for a slower PC, set this value lower: to 1 or 2).
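Before starting anything, it can help to confirm that the pinned packages and the example data are in place. The following is a minimal sketch and not part of the project: the helper names `check_versions` and `check_data` are hypothetical, it assumes Python 3.8 or newer (for `importlib.metadata`), and it assumes the model checkpoint may be saved as several files sharing the `.tfl` prefix.

```python
from importlib import metadata  # assumption: Python 3.8 or newer
from pathlib import Path

# Version pins copied from the pip commands in this readme.
PINNED = {
    "lws": "1.2.6",
    "librosa": "0.7.2",
    "h5py": "2.10.0",
    "keras": "2.2.4",
    "tflearn": "0.3.2",
    "numba": "0.49.1",
}


def check_versions(pinned=PINNED, get_version=metadata.version):
    """Return a list of problems; an empty list means every pin matches."""
    problems = []
    for name, wanted in pinned.items():
        try:
            found = get_version(name)
        except metadata.PackageNotFoundError:
            problems.append(f"{name}: not installed (want {wanted})")
            continue
        if found != wanted:
            problems.append(f"{name}: found {found}, want {wanted}")
    return problems


def check_data(root=".", sample="mehldau", model="modelBest_Mehldau.tfl"):
    """Return a list of problems with the example data layout under root."""
    root = Path(root)
    problems = []
    wav = root / "__music_samples" / sample / "input.wav"
    if not wav.is_file():
        problems.append(f"missing sample: {wav}")
    # Assumption: the checkpoint may consist of several files that share
    # this prefix, so any file starting with the model name counts.
    models_dir = root / "__saved_models"
    if not (models_dir.is_dir() and any(models_dir.glob(model + "*"))):
        problems.append(f"missing model: {models_dir / model}")
    return problems


if __name__ == "__main__":
    for problem in check_versions() + check_data():
        print("WARNING:", problem)
```

Run it from the repository root; no output means the sketch found nothing to complain about. It does not check the Qt or jackd installation.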

First, try running the demo (one file to rule them all) with:

`python demo.py`

If this doesn't work, follow these steps:

You will have to start jackd on your PC:

`jackd -R -d alsa -r 44100` (this command might differ from PC to PC, depending on the sound card and other setup)
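To see whether jackd is actually reachable from Python before launching the client, something like the sketch below can be used. It relies on the JACK-Client package installed earlier; the function name `jack_status` and the client name `readme_check` are hypothetical, and the expected rate of 44100 Hz is simply taken from the command above.

```python
def jack_status(expected_rate=44100):
    """Describe whether a running jackd is reachable from Python."""
    try:
        import jack  # installed by `pip install JACK-Client`
    except (ImportError, OSError) as err:
        return f"JACK-Client could not be imported: {err}"
    try:
        # no_start_server=True: only connect to an already running jackd,
        # do not try to launch one.
        client = jack.Client("readme_check", no_start_server=True)
    except jack.JackError as err:
        return f"could not connect to jackd: {err}"
    try:
        rate = client.samplerate
    finally:
        client.close()
    if rate != expected_rate:
        return f"jackd runs at {rate} Hz, expected {expected_rate} Hz"
    return f"jackd is running at {rate} Hz"


if __name__ == "__main__":
    print(jack_status())
```

If this reports that it cannot connect, the jackd command probably needs adjusting for the local sound card before the client will work.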

Then start a server (on the same PC, or somewhere else with SSH tunneling), a client and an interaction tool (currently crudely made in Python; anything sending the right OSC commands would work):

- (server side) `python3 server.py` (it is possible to specify some settings here, e.g. `-lstm_layers 3`; more to come)
- (client side) `python3 client__playbackWithServer.py` (this will change for sure ...)
- (optionally; client side) `python3 osc_interaction.py` (without this it will send only the default values ... not much interactivity)

This readme might be outdated ... I will do my best to keep it in sync with the code, but will probably return to it only when needed.

I was able to run this (server + client + jackd together) on my potato machine (i.e. no GPU, only a CPU: Intel(R) Core(TM) i5-4300U CPU @ 1.90GHz, which turbo-boosts to 2.60GHz). Jackd sometimes zombifies. Anything better is much preferable (for later features, faster reactivity and fewer zombies).

`client__playbackWithServer.py`:

`SIGNAL_requested_lenght = 64 # bigger batches are a bit faster`

`WAIT_if_qout_larger_div = 1 # less wasteful waiting time, but also less reactive (longer delay)`

`cross_len = 32 # shorter client side crossfading (might cause more audible clicks)`
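To illustrate why a shorter `cross_len` can produce clicks, here is a minimal crossfade sketch in plain Python. It assumes a linear fade over `cross_len` samples and works on lists of floats; the client's actual crossfade may use a different curve and most likely operates on numpy arrays. The function names are hypothetical.

```python
def crossfade(prev_tail, next_head):
    """Linearly blend two equally long sample sequences."""
    n = len(prev_tail)
    if n != len(next_head):
        raise ValueError("both segments must have the same length")
    blended = []
    for i in range(n):
        # Fade-in weight, strictly between 0 and 1.
        w = (i + 1) / (n + 1)
        blended.append((1.0 - w) * prev_tail[i] + w * next_head[i])
    return blended


def join_chunks(a, b, cross_len=32):
    """Join two audio chunks, overlapping them by cross_len samples."""
    cross_len = min(cross_len, len(a), len(b))
    if cross_len == 0:
        return list(a) + list(b)
    middle = crossfade(a[-cross_len:], b[:cross_len])
    return list(a[:-cross_len]) + middle + list(b[cross_len:])


if __name__ == "__main__":
    silence = [0.0] * 64
    tone = [1.0] * 64
    joined = join_chunks(silence, tone, cross_len=32)
    print(len(joined))
```

With fewer overlapping samples the jump between the two chunks is spread over a shorter ramp, which is why a small `cross_len` is cheaper but more likely to be audible.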

`server.py`:

`parser.add_argument('-griffin_iterations', help='iterations to use in griffin reconstruction', default='10')`

`# this one is the hardest hit I guess, still sounds reasonable though`
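The sketch below shows how such single-dash options behave with `argparse`. The `-griffin_iterations` line is the one quoted above. The `-lstm_layers` flag is mentioned in this readme, but its help text and default here are placeholders, and `build_parser` is a hypothetical helper rather than the structure of `server.py`. Because the default is given as a string, the value needs converting before it is used as a number.

```python
import argparse


def build_parser():
    """Build a parser with two of the server settings named in the readme."""
    parser = argparse.ArgumentParser(description="server settings (sketch)")
    parser.add_argument('-griffin_iterations',
                        help='iterations to use in griffin reconstruction',
                        default='10')
    # Placeholder help text and default; the real ones live in server.py.
    parser.add_argument('-lstm_layers',
                        help='number of LSTM layers (placeholder text)',
                        default='3')
    return parser


if __name__ == "__main__":
    # Equivalent to: python3 server.py -griffin_iterations 10 -lstm_layers 3
    args = build_parser().parse_args(
        ['-griffin_iterations', '10', '-lstm_layers', '3'])
    # Values arrive as strings, so convert them before doing arithmetic.
    iterations = int(args.griffin_iterations)
    layers = int(args.lstm_layers)
    print(iterations, layers)
```

Whatever architecture settings are passed here have to match the ones the model was trained with, as noted in the data setup step.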

Qt setup: `qt-opensource-windows-x86-5.14.1.exe` + `pip install pyqt5`

(didn't work) Jack setup: `ASIO4ALL_2_14_English.exe` (drivers) + `Jack_v1.9.11_64_setup.exe` (jackd) + follow https://jackaudio.org/faq/jack_on_windows.html (`regsvr32 JackRouter.dll`) (`jackd.exe -R -S -d portaudio -d "ASIO::ASIO4ALL v2"`) + `pip install JACK-Client --user`

Video with the "proto"-UI: https://www.youtube.com/watch?v=w7Sk7RTVs9U

Audio recording: https://soundcloud.com/previtus/ml-jazz-meanderings-ml-generated-sounds-1/s-DCZbx

More demos at: https://ual-cci.github.io/vitek/ml_gen_music/report_griff_lim.html?version=61bf4b0 (Full page of generated samples with spectrograms!)