Adjusting tests to Julia #2

I think this should be fine. However, I couldn't figure out (so far) how exactly Julia treats wrong command-line arguments.

Codecov Report

| Coverage diff | main | #2 | +/- |
|---|---:|---:|---:|
| Coverage | 69.06% | 98.54% | +29.47% |
| Files | 13 | 13 | |
| Lines | 320 | 343 | +23 |
| Hits | 221 | 338 | +117 |
| Misses | 99 | 5 | -94 |

Flags with carried forward coverage won't be shown. Click here to find out more.

Continue to review full report at Codecov.

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

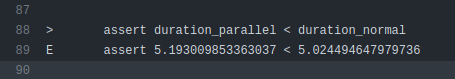

Looks great to me! I just added some pauses to the parallel tasks; those failed on my machine.

I was wondering, however, whether we should use the great momentum right away to implement dict-style passing of arguments as well.

I could see either using argparse or some standardized (hidden) JSON file. The latter might be more flexible and might actually make it easier to run from editors/IDEs.

Something like

`.pytask.[task_file].[task_name].[id].json`

with contents:

```json
{
    "depends_on": ["[dict]", "[list]", "[single file]", null],
    "produces": ["[dict]", "[list]", "[single file]", null],
    "other_keys": "[whatever]"
}
```
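To make the proposal concrete, here is a minimal Python sketch of how such a file could be written and then read back by label instead of by position. The helper names `write_task_json`/`read_task_json`, the example task names, and the `seed` key are hypothetical; this is not an existing pytask API. The Julia or R script would do the reading step with its own JSON parser.

```python
import json
import tempfile
from pathlib import Path


def write_task_json(root, task_file, task_name, task_id, depends_on, produces, **other_keys):
    """Write the options of one task to a hidden JSON file (hypothetical layout).

    The name follows the proposal `.pytask.[task_file].[task_name].[id].json`.
    `depends_on` and `produces` may be a dict, a list, a single path, or None.
    """
    path = Path(root) / f".pytask.{task_file}.{task_name}.{task_id}.json"
    payload = {"depends_on": depends_on, "produces": produces, **other_keys}
    path.write_text(json.dumps(payload, indent=2))
    return path


def read_task_json(path):
    """Load the options by label, as the script (or a run from an IDE) would."""
    return json.loads(Path(path).read_text())


# Usage: dependencies are addressed by name, not by their position in a list.
with tempfile.TemporaryDirectory() as tmp:
    json_path = write_task_json(
        tmp,
        task_file="task_example",
        task_name="task_write_number",
        task_id=0,
        depends_on={"script": "script.jl", "data": "input.md"},
        produces="out.md",
        seed=42,  # an example of "other_keys"
    )
    json_name = json_path.name
    options = read_task_json(json_path)

print(json_name)  # .pytask.task_example.task_write_number.0.json
print(options["depends_on"]["data"])  # input.md
```

Because the file stays on disk, a script run from an editor can pick up the same options without pytask rebuilding a command line.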

@hmgaudecker If one test fails occasionally, I have no problem with that. It is the same in the other repos, but it is really not that likely.

@hildebrandecon Please follow the link of the failing pre-commit.ci step and see whether you can fix the errors to make the pipeline pass.

@tobiasraabe, what do you think about implementing the passing of options via JSON (see above)? Also, we may want to check that command-line options recognized by Julia are parsed correctly. Something like passing the number of threads and checking within Julia that the number is correct? Once with 1, once with 4?

@hmgaudecker Just for consistency and to finish things, I would advocate for merging this PR first to align with the other plugins. We will release it as v0.1.0. After that, and with a potential v0.2.0 release, the interface can be reworked. I am not sure I understand how you want to use the JSON. Do you want to be able to define tasks in JSON files?

Sounds great. No, I would just want to use it to pass the dependencies and targets (and other options) in a way that resembles Python tasks. Right now, you can only pass arguments by order (i.e., two lists, and on the Julia/R side it is impossible to discriminate between dependencies and targets without knowing the mapping of list elements to files). This is very error-prone.

@tobiasraabe I managed to resolve some issues. Unfortunately, I'm not sure how to fix/treat the remaining ones.

Just fix them by ignoring them (although I explicitly left that out of the course 😄).

@hildebrandecon @mbrambeer Could you draft a test for this? That is, explicitly tell Julia to use at most 1 thread, then check inside Julia that this is picked up correctly. Repeat with 4. The test should include `depends_on`/`produces` because the order mattered in our experiments yesterday. I think this is more important than checking what happens with wrong options. It is not really pytask's job to check whether the user typed things correctly (it would be nice, of course, but it could also become a maintenance burden).

Yes, makes sense. To be bolder, you could pass the JSON data via the command line as an argument and parse the string inside the program to get rid of the
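The command-line variant suggested here can be sketched in Python: serialize all options into one argument, and let the called program parse that single string back into a dictionary. The helper names are hypothetical, and `decode_options` only stands in for the script side; in Julia, the string would arrive as `ARGS[1]` and would be parsed with a JSON package.

```python
import json
import shlex


def encode_options(options):
    """Serialize all options into a single command-line argument (hypothetical scheme)."""
    return json.dumps(options, separators=(",", ":"))


def decode_options(argv):
    """Stand-in for the called program: parse its first argument as JSON."""
    return json.loads(argv[1])


options = {"depends_on": {"data": "input.md"}, "produces": "out.md", "seed": 1}
cmd = ["julia", "script.jl", encode_options(options)]

# shlex.join quotes the JSON string so it survives as one shell word.
print(shlex.join(cmd))

# Simulate the argument vector the script sees (argv[0] is the script itself).
received = decode_options(["script.jl", cmd[-1]])
```

Compared with the hidden file, this avoids stray files on disk, but running the script from an IDE would then require reproducing that argument by hand.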

In principle, yes, but the nice thing about the file (also compared to command-line options) is that it makes it easy to run Julia/R from editors, IDEs, or any way other than pytask.

@hmgaudecker I must say that I don't understand exactly what you have in mind, probably because I'm not an IT expert. However, here is my rough idea of what you want to do:

Just add something to the script which makes it fail if

Yip, that's precisely what I had in mind!

OK, I wrote a draft, but it doesn't work. The test should fail since we pass 4 threads in line 141. If I run the same thing on the command line, it does work. Replication:

It seems that one has to pass the arguments for Julia's startup before the script, but the arguments used by the script itself after it. Replication:

In the shell, run:

Thanks! I would hope that writing the test is enough; @tobiasraabe has too much of an advantage in fixing the call from pytask for us to worry much about it 😉 However, can you parametrize the test with both 1 and 4 threads? I think it defaults to 4 on a machine with 4 cores (I might misremember), so this would just avoid the test passing by chance depending on the machine.
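A rough, stdlib-only sketch of the requested check: write a Julia script that exits with an error unless `Threads.nthreads()` matches the requested count, then run it once with 1 and once with 4 threads. It calls `julia` directly instead of going through pytask, so it only illustrates the idea; in the real test suite, the loop would become a `pytest.mark.parametrize` over the thread count and the call would go through the pytask CLI. The helper names are hypothetical, and `--threads` assumes a Julia version that supports that switch.

```python
import shutil
import subprocess
import tempfile
from pathlib import Path

# Julia source that exits with status 1 unless it runs with the expected number of
# threads. Threads.nthreads() is Julia's built-in count of available threads.
CHECK_TEMPLATE = """\
if Threads.nthreads() != {n}
    println("expected {n} threads, got ", Threads.nthreads())
    exit(1)
end
"""


def make_check_script(n_threads):
    """Return the Julia check for a given thread count (hypothetical helper)."""
    return CHECK_TEMPLATE.format(n=n_threads)


def run_thread_check(n_threads):
    """Run `julia --threads N script.jl`; return the exit code, or None without Julia."""
    if shutil.which("julia") is None:
        return None
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "script.jl"
        script.write_text(make_check_script(n_threads))
        # Startup options such as --threads must come before the script path.
        result = subprocess.run(["julia", "--threads", str(n_threads), str(script)])
    return result.returncode


# Parametrize over 1 and 4 so that a machine whose default happens to match
# cannot make the check pass by accident.
for n in (1, 4):
    code = run_thread_check(n)
    print(f"{n} thread(s):", "skipped, julia not found" if code is None else f"exit code {code}")
```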

Review comment on `tests/test_execute.py` (outdated):

```python
os.chdir(tmp_path)
session = main({"paths": tmp_path})
```

You don't need to switch paths; it is better to use the runner pattern from `pytask-julia/tests/test_parallel.py` (line 58 in 2b15b07):

```python
result = runner.invoke(cli, [tmp_path.as_posix()])
```

I don't really have a good idea. I feel like this forces us to replicate Julia's command-line interface: we would need to understand whether options are flags, accept an input, or accept multiple inputs, and which arguments belong to the script, then split them and place them to the left or right of the script. One approach to circumvent the replication would be to ask users to add

Ah, sorry, I thought you had some sense of order in there. My understanding is that all you'd need to do is swap the contents of

Unfortunately, it is not enough to match the content of the decorator with

OK, here is the plan for the implementation.

Does everyone agree?

Okay, we are talking past each other here. As per our JSON discussion, I agree that pytask should not worry about resolving things. I would say the

Anything else will be solved by different means in a different PR.

That is what seems to be happening based on what @hildebrandecon posted above, but I did not check in detail, and it might not catch the option for other reasons.

This would mean that v0.1.0 does not support all functionality. It does not allow users to pass any arguments to the script, as is possible with the other plugins. We could pass `depends_on` and `produces` to the script by default, and the user can optionally parse them or not. But something like passing a seed to a script would not work. I see the JSON approach as an alternative interface and a way to move forward, but I would prefer something fully working before that.

Fair enough, I cannot delve deeply enough into it. So your view is to not pass any options to Julia, just to scripts?

I don't think I can follow the discussion entirely, given that I don't understand most of the pytask details. Hence, I'm also not sure whether I can give an educated opinion about what is best to do. Of course, let me know if I can be of help!

I am sorry. I feel like I have not expressed myself clearly enough and caused some unnecessary confusion. Let me try once more (although it is rather lengthy). The decorator

```python
@pytask.mark.r(("input.md", "1", "--verbose"))
@pytask.mark.depends_on(["script.R", "input.md"])
@pytask.mark.produces("out.md")
def task_write_number_with_r_to_file():
    pass
```

This way, although it is highly ugly, the R script receives the path to the markdown file and a number which can be written to the output file. We also pass the

The main point here is that pytask-r can just take the tuple in the decorator and pass it on the command line to

```console
$ Rscript script.R input.md 1 --verbose
```

With

This means pytask-julia needs to know whether something is an option for the executable or an input to the script. As a super lightweight workaround and ugly hack, I propose to require users, if they use the

```python
@pytask.mark.julia(("--verbose", "--", "input.md", "1"))
@pytask.mark.depends_on(["script.jl", "input.md"])
@pytask.mark.produces("out.md")
def task_write_number_with_julia_to_file():
    pass
```

This way, pytask-julia knows how to build the input to

```console
$ julia --verbose -- script.jl input.md 1
```

I now also want to make it mandatory to always specify

```python
@pytask.mark.julia(("--", "input.md"))  # to pass a path to the script
@pytask.mark.julia(("--threads", "4", "--"))  # to enable 4 threads while running the program
```

The reason why I believe

I know this is an extremely dirty hack, and I am deeply dissatisfied with the situation. It is not an excuse to say that waf was equally bad. We need to circumvent the positional indexing and get something label-based soon. But in this PR, I just want to make pytask-julia as powerful as the other plugins so we have a common base to start from.
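The splitting rule proposed in this comment fits in a few lines of Python. `build_julia_command` is a hypothetical name, not pytask-julia's actual implementation; the sketch only shows that once `"--"` is mandatory, the plugin needs no knowledge of which Julia options are flags or take values.

```python
def build_julia_command(decorator_args, script):
    """Split the decorator tuple at the mandatory "--" (hypothetical helper).

    Everything before the first "--" is an option for the julia executable,
    everything after it is passed to the script, mirroring
    `julia [options] -- script.jl [script args]`.
    """
    args = list(decorator_args)
    if "--" not in args:
        raise ValueError('The tuple must contain "--" to separate options from script arguments.')
    sep = args.index("--")  # only the first "--" separates; later ones go to the script
    julia_options, script_args = args[:sep], args[sep + 1:]
    return ["julia", *julia_options, "--", script, *script_args]


print(build_julia_command(("--verbose", "--", "input.md", "1"), "script.jl"))
# ['julia', '--verbose', '--', 'script.jl', 'input.md', '1']
print(build_julia_command(("--threads", "4", "--"), "script.jl"))
# ['julia', '--threads', '4', '--', 'script.jl']
```

Splitting only at the first `"--"` keeps the rule predictable while still letting a script receive its own `"--"` arguments.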

Thanks, @tobiasraabe, for the extensive explanation; I think your arguments make perfect sense!

Sounds great, thanks for the detailed explanation. I think my view is to just not spend too much time on this right now. There will be few users and even fewer power users for the moment. Looking back at Waf and the current state of pytask-{R, Stata, Julia}, I do think that requiring command-line options is a major inhibitor for take-up. For the typical (student) data cruncher, the most important feature is that starting from an IDE is seamless and the same as starting from pytask. But that's for another discussion!

@hildebrandecon @hmgaudecker Hi, I implemented the described approach. The test checking the number of threads inside the script works as well. Please review the README and test whether the package works for you.

@tobiasraabe The tests run smoothly on my machine, and I think the README is in line with all changes. Thanks a lot!

Looks great, just a couple of comments on documenting things and a suggestion for another trivial test.

```python
def test_check_passing_cmd_line_options(tmp_path):
    task_source = """
    import pytask
```

I think this should be doubled up with a second number different from 4, just to avoid the test passing on some machine only because the default happens to be 4.

I extended the command-line option test as @hmgaudecker proposed. I tried to do it with

Closes #1 as far as I can tell: I adjusted the tests in `test_parallel.py` and `test_parametrize.py`. Now, they run through.

I also adjusted the README.