-

-

Notifications

You must be signed in to change notification settings - Fork 655

Custom filename pattern for saving checkpoints #1127

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Custom filename pattern for saving checkpoints #1127

Conversation

|

@Joel-hanson thanks for the PR ! Let me check it and comment out things I do not understand... |

|

Looking into the test which failed. |

Fixed some of the tests which were related to the current implementation @vfdev-5 @sdesrozis can you please help me fix the other test cases |

|

@Joel-hanson our CI can fail on distributed tests, so no worries for failed tests... |

|

@Joel-hanson thanks for working on it ! I left several comments that may improve current implementation and open a small discussion about improvements of |

…something much more simple.

|

@Joel-hanson the problem of using predefined pattern like Just tried to play around all of that, I think class attribute is not a good approach... For example, I would like to setup 2 savers: training checkpoint (to save trainer, model, optimizer etc) and best model. For the first one, I do not want to alter filename pattern and for the second I provide score name and score function but for the filename instead of Maybe, we can introduce it as an additional argument to |

|

Good catch @vfdev-5 @vfdev-5 @sdesrozis

|

|

@Joel-hanson yes I think your notes are correct. Two last points

may be not clear how to implement. It could nice to have a single code for user pattern or our pattern. We can setup our default pattern for |

…nitialized via an additional argument.

|

@vfdev-5 @sdesrozis I have updated the PR with the required changes. There are 2 things to note

|

|

@Joel-hanson keys should be described in docs and the list of handled keys must be fixed. Thank you again for your work! |

|

I played with the existing rules of checkpoint naming. First, documentation states the rules (slightly modified) """

- `filename_prefix` is the argument passed to the constructor,

- `name` is the key in `to_save` if a single object is to store, otherwise `name` is "checkpoint".

- `suffix` is composed as following `{global_step}_{score_name}={score}`.

Above `global_step` defined by the output of `global_step_transform` and `score` defined

by the output of `score_function`.

By default, none of `score_function`, `score_name`, `global_step_transform` is defined,

then suffix is setup by attached engine's current iteration. The filename will be

`{filename_prefix}_{name}_{engine.state.iteration}.{ext}`.

If defined a `score_function`, but without `score_name`, then suffix is defined by provided score.

The filename will be `{filename_prefix}_{name}_{score}.{ext}`. If `global_step_transform` is

provided, then the filename will be `{filename_prefix}_{name}_{global_step}_{score}.{ext}`.

If defined `score_function` and `score_name`, then the filename will

be `{filename_prefix}_{name}_{score_name}={score}.{ext}`.

If `global_step_transform` is provided, then the filename will be

`{filename_prefix}_{name}_{global_step}_{score_name}={score}.{ext}`

For example, `score_name="neg_val_loss"` and `score_function` that returns `-loss`

(as objects with highest scores will be retained), then saved filename will be

`{filename_prefix}_{name}_neg_val_loss=-0.1234.pt`.

"""I wrote a tiny test which could help from typing import Callable, Optional

def test_pattern(

filename_prefix: str = "",

score_function: Optional[Callable] = None,

score_name: Optional[str] = None,

global_step_transform: Callable = None,

filename_pattern: Optional[str] = None,

):

# ignite rules are applied

if filename_pattern is None:

# filename_prefix will be included in pattern at the end

if score_function is None and score_name is None and global_step_transform is None:

# policy 1 `{filename_prefix}_{name}_{engine.state.iteration}.{ext}`

filename_pattern = "{name}_%s.{ext}" % 203 # fake engine.state.iteration

elif score_function and score_name is None:

if global_step_transform:

# policy 2a `{filename_prefix}_{name}_{global_step}_{score}.{ext}`

filename_pattern = "{name}_{global_step}_{score}.{ext}"

else:

# policy 2b `{filename_prefix}_{name}_{global_step}_{score}.{ext}`

filename_pattern = "{name}_{score}.{ext}"

elif score_function and score_name:

if global_step_transform:

# policy 3a `{filename_prefix}_{name}_{global_step}_{score_name}={score}.{ext}`

filename_pattern = "{name}_{global_step}_{score_name}={score}.{ext}"

else:

# policy 3b `{filename_prefix}_{name}_{global_step}_{score}.{ext}`

filename_pattern = "{name}_{score_name}={score}.{ext}"

else:

raise Exception("weird case score_name is not None but score_function is None")

# prefix is added to pattern if needed

if filename_prefix:

filename_pattern = "{filename_prefix}_" + filename_pattern

# filename_pattern contains user specific pattern or ignite pattern

# keys should be computed

# compute score : fake call

score = score_function() if score_function else None

# compute global_step : fake call

global_step = global_step_transform() if global_step_transform else None

# dict of keys

kwargs = {

"filename_prefix": filename_prefix,

"ext": "pt",

"name": "checkpoint",

"score_name": score_name,

"score": score,

"global_step": global_step

}

# pattern is applied wrt keys

return filename_pattern.format(**kwargs)

if __name__ == '__main__':

print(test_pattern())

print(test_pattern("best"))

print(test_pattern(score_function=lambda: 0.9999))

print(test_pattern(score_function=lambda: 0.9999, global_step_transform=lambda: 12))

print(test_pattern(score_function=lambda: 0.9999, score_name="acc"))

print(test_pattern(score_function=lambda: 0.9999, score_name="acc", global_step_transform=lambda: 12))

print(test_pattern("best", score_function=lambda: 0.9999))

print(test_pattern("best", score_function=lambda: 0.9999, global_step_transform=lambda: 12))

print(test_pattern("best", score_function=lambda: 0.9999, score_name="acc"))

print(test_pattern("best", score_function=lambda: 0.9999, score_name="acc", global_step_transform=lambda: 12))

pattern = "SAVE:{name}-{score_name}--{score}.pth"

print(test_pattern("best", score_function=lambda: 0.9999, score_name="acc", global_step_transform=lambda: 12, filename_pattern=pattern))Results are checkpoint_203.pt

best_checkpoint_203.pt

checkpoint_0.9999.pt

checkpoint_12_0.9999.pt

checkpoint_acc=0.9999.pt

checkpoint_12_acc=0.9999.pt

best_checkpoint_0.9999.pt

best_checkpoint_12_0.9999.pt

best_checkpoint_acc=0.9999.pt

best_checkpoint_12_acc=0.9999.pt

SAVE:checkpoint-acc--0.9999.pthHope that helps and no bug inside :) |

|

@sdesrozis Thank you so much for the docs and the test. I shall add it to this PR.

is this intentional if so I will remove this from the docstring.

from to correct, this is just to confirm.

|

|

|

@vfdev-5 @sdesrozis I have pushed the latest changes 🤞 .

|

vfdev-5

left a comment

vfdev-5

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for the update @Joel-hanson ! I left some comments to improve the code and docs.

ignite/handlers/checkpoint.py

Outdated

| @staticmethod | ||

| def setup_filename_pattern( | ||

| filename_prefix, with_score, with_score_name, with_global_step_transform, filename_pattern=None, | ||

| ): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We need to provide a docstring for this public method, remove filename_pattern=None from args and update the code accordingly.

We also need annotate input args (typing) and add output type

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@staticmethod

def setup_filename_pattern(

filename_prefix: str = "",

with_score: bool = False,

with_score_name: bool = False,

with_global_step_transform: bool = False,

filename_pattern: union[str, None] = None,

) -> str:

Is this ok @vfdev-5

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The typehint for filename_pattern should be

filename_pattern: Optional[str] = None

Optional is a shortcut for Union[..., None]

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@sdesrozis we do not need this arg at all. See my message above, please

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sorry, @vfdev-5 I was a bit confused,

Are you suggesting that we don't require the filename_pattern to be passed but rather checked before calling setup_filename_pattern.

def call

if self.filename_pattern is None:

filename_pattern = self.setup_filename_pattern(

filename_prefix=self.filename_prefix,

with_score=self.score_function is None,

with_score_name=self.score_name is None,

with_global_step_transform=global_step is None,

)

else:

filename_pattern = self.filename_pattern

def setup_filename_pattern

def setup_filename_pattern(

filename_prefix: str = "",

with_score: bool = False,

with_score_name: bool = False,

with_global_step_transform: bool = False,

) -> Union[str, None]:

is this what you are suggesting?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, something like that. Note that setup_filename_pattern returns str and not Union.

def setup_filename_pattern(...) -> strThere was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

you are right @vfdev-5 will update this as well.

ignite/handlers/checkpoint.py

Outdated

| elif not with_global_step_transform: | ||

| filename_pattern = "{name}_{global_step}.{ext}" | ||

| else: | ||

| raise Exception("weird case score_name is not None but score_function is None") |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

| raise Exception("weird case score_name is not None but score_function is None") | |

| raise ValueError("If score_name is provided, score_function can not be None") |

|

@Joel-hanson thank you! I don’t have any comment more than those from @vfdev-5 😊 Thank you again for your work ! |

- The docstring was updated with the filename_pattern also added a example for this as well. - The static function `setup_filename_pattern` to get the default filename pattern of a checkpoint didn't have a proper typing. Have updated accordingly - The `setup_filename_pattern` function accepted the custom filename pattern which was not required. Have updated this as well not to accept the custom filename pattern.

|

@vfdev-5 I have updated the code accordingly which fixes the following.

|

…ests for this function. - The docstring for the function used for making the default filename pattern for checkpoints is added. - Added a new argument for filename prefix (`with_prefix`). - The tests for the update is added

…ignite into checkpoint-filename-pattern

vfdev-5

left a comment

vfdev-5

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@Joel-hanson thanks for working on the PR, but there is something weird with docstring and implementation of setup_filename_pattern. Maybe, I'm missing something, please explain it, but the code does not look simple...

ignite/handlers/checkpoint.py

Outdated

| Args: | ||

| with_prefix (bool): If True, the `filename_prefix` with an underscore (filename_prefix_) will be added to the filename pattern. | ||

| By default the `filename_prefix` will not be appended to the filename pattern | ||

| with_score (bool): If True, this indicates that the score function is not provided, then it will look for ``with_score_name`` |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@Joel-hanson seems like I didn't read attentively the last time the docstring. Now, the description IMO is a bit cumbersome. Argument with_score:

If True, this indicates that the score function is not provided

it looks very misleading, IMO. More intuitive approach is if with_score, then template will have {score}.

Am I missing something ?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

yes, @vfdev-5 its a bit confusing. I think I had mentioned this earlier #1127 (comment). Sorry for the confusion I can update this asap I should have communicated this properly.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Please, try to make it simple and intuitive as much as possible.

ignite/handlers/checkpoint.py

Outdated

| if with_score and with_score_name and with_global_step_transform: | ||

| filename_pattern = "{name}_{score}.{ext}" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

is this contre-intuitive too or I do not understand something ?

"if with score and with score name and with global step transform" -> I would assume that filepattern contains those field.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@vfdev-5 we will have the pattern {name}_{score}.{ext} if the score function, score name, and global step transform function are not provided.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The code is wrong, IMO:

if with_score and with_score_name and with_global_step_transform:

filename_pattern = "{name}_{score}.{ext}"with_scoremeans filepattern will be with a field for the value from score functionwith_score_namemeans filepattern will be with score namewith_global_step_transformmeans filepattern will be with global step

so I expect to havefilename_pattern = "{name}_{global_step}_{score_name}={score}.{ext}"

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I have updated accordingly @vfdev-5.

|

@Joel-hanson I modified the code and docs from my side. |

sdesrozis

left a comment

sdesrozis

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Few comments about double quotation in docstring.

Otherwise, it is clear and concise!! Nice work, thank you.

|

I fixed double quotations. The PR is ready for merge when CI will be ok. |

|

@sdesrozis actually, docs rendering with double quotes is better than with single quotes. I prefer to keep double quotes. |

|

😱 I completely missed that! Very sorry, I revert! |

This reverts commit 1b8d8e1.

|

@Joel-hanson thank you for this work !! A lot of iteration but the result is nice 👍🏻 |

|

Thanks a lot, @vfdev-5 and @sdesrozis, It was my biggest opportunity to contribute to a well-known library like ignite. There have been several iterations, and each iteration has brought me something different to experience. Thank you again for motivating me to get this PR as successful as possible. It would be fantastic if you could find any of the pieces where I should have improved that might have made fewer iterations and made it easy for you guys. |

* Updated ImageNet example (pytorch#1138) * [WIP] Updated ImageNet example - minor fixes for Pascal VOC12 * Fixed flake8 * Updated pytorch-version-tests.yml to run cron every day at 00:00 UTC (pytorch#1141) Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> * Added check_compute_fn argument to EpochMetric and related metrics (pytorch#1140) * Added check_compute_fn argument to EpochMetric and related functions. * Updated docstrings * Added check_compute_fn to _BaseRegressionEpoch * Adding typing hints for check_compute_fn * Update roc_auc.py Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> Co-authored-by: vfdev <vfdev.5@gmail.com> * Docs cosmetics (pytorch#1142) * Updated docs, replaced single quote by double quote if is code - fixed missing link to Engine - cosmetics * More doc updates * More updates * Fix batch size calculation error (pytorch#1137) * Fix batch size calculation error * Add tests for fixed batch size calculation * Fix tests * Test for num_workers * Fix nproc comparison * Improve docs * Fixed docstring Co-authored-by: vfdev <vfdev.5@gmail.com> * Docs updates (pytorch#1139) * [WIP] Added teaser gif * [WIP] Updated README * [WIP] Updated README * [WIP] Updated docs * Reverted unintended pyproject.toml edits * Updated README and examples parts * More updates of README * Added badge to check pytorch/python compatible versions * Updated README * Added ref to blog "Using Optuna to Optimize PyTorch Ignite Hyperparameters" * Update README.md * Fixed bad internal link in examples * Updated README * Fixes docs (pytorch#1147) * Fixed bad link on teaser * Added manual_seed into docs * Issue pytorch#1115 : pbar persists due to specific rule in tqdm (notebook) when n < total (pytorch#1145) * Issue pytorch#1115 pbar persists in notebook due to specific rules when n < total * close pbar doesn't rise danger bar * fix when pbar.total is None Co-authored-by: vfdev <vfdev.5@gmail.com> Co-authored-by: Desroziers <sylvain.desroziers@ifpen.fr> * Updated codebase such that torch>=1.3 (pytorch#1150) Co-authored-by: vfdev <vfdev.5@gmail.com> * add wandb (pytorch#1152) wandb integration already exists, just adding it to the requirements file * Fixed typo and missing part of "Where to go next" (pytorch#1151) * Fixes pytorch#1153 (pytorch#1154) - temporary downgrade of scipy to 1.4.1 instead of 1.5.0 * Use global_step as priority, if it exists (pytorch#1155) * Use global_step as priority, if it exists * Fix flake8 error * Style fix Co-authored-by: vfdev <vfdev.5@gmail.com> * Fix TrainsSaver handling of Checkpoint's n_saved (pytorch#1135) * Utilize Trains framework callbacks to better support checkpoint saving and respect Checkpoint.n_saved * Update trains callbacks to new format * autopep8 fix * Fix trains mnist example (store checkpoints in local folder) * Use trains 0.15.1rc0 until PR is approved * Use CallbackType for Trains callback type resolution. Add unit test for Trains callbacks * Update trains version * Updated test_trains_saver_callbacks Co-authored-by: jkhenning <> Co-authored-by: vfdev <vfdev.5@gmail.com> * Stateful handlers (pytorch#1156) * Stateful handlers * Added state_dict/load_state_dict tests for Checkpoint * integration test * Updated docstring and added include_self to ModelCheckpoint * An integreation test for checkpointing with stateful handlers * Black and flake8 Co-authored-by: vfdev-5 <vfdev.5@gmail.com> * Fixes pytorch#1162 (pytorch#1163) * Fixes pytorch#1162 - relaxed check of optimizer type * Updated docs * Cosmetics (pytorch#1164) * update ignite version to 0.5.0 in preparation of next release. (pytorch#1158) Co-authored-by: vfdev <vfdev.5@gmail.com> * Create FUNDING.yml * Update README.md Added "Uncertainty Estimation Using a Single Deep Deterministic Neural Network" paper by @y0ast * Issue 1124 (pytorch#1170) * Fixes pytorch#1124 - Trains logger can log torch vectors * Log vector as title=tag+key, series=str(index) * Improved namings in _XlaDistModel (pytorch#1173) * Issue 1123 - Improve usage of contrib common methods with other save handlers (pytorch#1171) * Added delegated_save_best_models_by_val_score * Fixes pytorch#1123 - added save_handler arg to setup_common_training_handlers - added method delegated_save_best_models_by_val_score * Renamed delegated_save_best_models_by_val_score to gen_save_best_models_by_val_score * Issue 1165 : nccl + torch.cuda not available (pytorch#1166) * fix issue 1165 * Update ignite/distributed/comp_models/native.py Co-authored-by: vfdev <vfdev.5@gmail.com> * add test for nccl /wo gpu Co-authored-by: Desroziers <sylvain.desroziers@ifpen.fr> Co-authored-by: vfdev <vfdev.5@gmail.com> * Fix typo in the docstring of ModelCheckpoint * Fixes failing tests with native dist comp model (pytorch#1177) - saves/restore env on init/finalize * Set isort to 4.3.21 as it fails on 5.0 (pytorch#1180) * improve docs for custom events (pytorch#1179) * ValueError -> TypeError (pytorch#1175) * ValueError -> TypeError * refactor corresponeding unit-test Co-authored-by: vfdev <vfdev.5@gmail.com> * Update cifar10 (pytorch#1181) * Updated code to log models on Trains server * Updated cifar10 example to log necessary things to Trains * Fix Exception misuse in `ignite.contrib.handlers.base_logger.py` (pytorch#1183) * ValueError -> TypeError * NotImplementedError -> NotImplemented * rollback ignite/engine/events [raise NotImplementedError] * fix misuses of exceptions in ignite/contrib/handlers/base_logger.py * refactor corresponding unit tests Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> * Fixed failing cifar10 test (pytorch#1184) * Fix Exception misuse in `ignite.contrib.handlers.custom_events.py` (pytorch#1186) * ValueError -> TypeError * NotImplementedError -> NotImplemented * rollback ignite/engine/events [raise NotImplementedError] * fix misuses of exceptions in ignite/contrib/handlers/custom_events.py * remove period in exceptions * refactor corresponding unit tests * Update tpu-tests.yml * Fix Exception misuse in `ignite.contrib.engines.common.py` (pytorch#1182) * ValueError -> TypeError * NotImplementedError -> NotImplemented * fix misuses of exceptions in ignite/contrib/engines/common.py * rollback ignite/engine/events [raise NotImplementedError] Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> Co-authored-by: vfdev <vfdev.5@gmail.com> * Refactored test_utils.py into 3 files (pytorch#1185) - we can better test new coming comp models Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> * Fix Exception misuse in `ignite.contrib.handlers.lr_finder.py` (pytorch#1187) * ValueError -> TypeError * NotImplementedError -> NotImplemented * rollback ignite/engine/events [raise NotImplementedError] * fix misuses of exceptions in ignite/contrib/handlers/lr_finder.py * refactor corresponding unit tests * fix typo Co-authored-by: Desroziers <sylvain.desroziers@ifpen.fr> Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> Co-authored-by: vfdev <vfdev.5@gmail.com> * Fix Exception misuse in `ignite.contrib.handlers.mlflow_logger.py` (pytorch#1188) * ValueError -> TypeError * NotImplementedError -> NotImplemented * rollback ignite/engine/events [raise NotImplementedError] * fix misuses of exceptions in ignite/contrib/handlers/mlflow_logger.py & refactor corresponding unit tests Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> Co-authored-by: vfdev <vfdev.5@gmail.com> * Fix Exception misuse in `ignite.contrib.handlers.neptune_logger.py` (pytorch#1189) * ValueError -> TypeError * NotImplementedError -> NotImplemented * rollback ignite/engine/events [raise NotImplementedError] * fix misuses of exceptions in ignite/contrib/handlers/neptune_logger.py & refactor corresponding unit tests Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> Co-authored-by: vfdev <vfdev.5@gmail.com> * Update README.md (pytorch#1190) * Update README.md We are adding a disclaimer to all non-FB led repos in the PyTorch github org. Let me know if you have any concerns. Thanks! * Update README.md Co-authored-by: vfdev <vfdev.5@gmail.com> * fix for distributed proxy sampler runtime error (pytorch#1192) * fix for distributed proxy sampler padding * fixed formatting * Updated timers to include fired hanlders' times (pytorch#1104) (pytorch#1194) * update timers including fired handlers ones * autopep8 fix * fix measurement and add test * rename fire_start_time to handlers_start_time Co-authored-by: Desroziers <sylvain.desroziers@ifpen.fr> Co-authored-by: AutoPEP8 <> * Improve pascalvoc (pytorch#1193) * Fixes pytorch#1124 - Trains logger can log torch vectors * [WIP] Fixes issue with exp_trackin - improved configs - training script * [WIP] Added explicit TrainsSaver setup * Updated training script * Fixed formatting * Fixed bad merging * Added missing rank dispatch for the progressbar * Custom filename pattern for saving checkpoints (pytorch#1127) * Custom filename pattern for saving checkpoints * The suffix check be confused when adding name initially to the dict * The filename prefix was updated which is not necessary was reverted * The default filename pattern attribute was set instead of the `_filename_pattern` * The redundant filename pattern to make filename was ugly, changed to something much more simple. * The filename pattern implementation changed to have a new way to be initialized via an additional argument. * - The extension given in the class has a dot infront of it, this can cause issues when having the latest filename pattern. have fixed it by assigning only the extension value not the dot - The docsstring was updated to latest changes - The assignment of name to filename pattern was missing * The tests for checking the checkpoint filenames when a custom filename pattern is given. * The formatting issue fixed * - Added a function to get the filename pattern for the default to make it much more readable. - Updated the current checkpoint __call__ to make filename based on the new function which has introduced - Updated test_checkpoint_filename_pattern to have the exact values instead have a function. - Updated a test case where it was failing due to the latest changes in a checkpoint __call__. * - The _get_filename_pattern function updated to public and static setup_filename_pattern - The setup_filename_pattern now takes updated arguments of with_score, with_score_name and with_global_step_transform * The dostring and the static setup_filename_pattern were updated - The docstring was updated with the filename_pattern also added a example for this as well. - The static function `setup_filename_pattern` to get the default filename pattern of a checkpoint didn't have a proper typing. Have updated accordingly - The `setup_filename_pattern` function accepted the custom filename pattern which was not required. Have updated this as well not to accept the custom filename pattern. * The tests for the static function `Checkpoint.setup_filename_pattern`. * The Docstring for setup_filename_pattern added and have updated the tests for this function. - The docstring for the function used for making the default filename pattern for checkpoints is added. - Added a new argument for filename prefix (`with_prefix`). - The tests for the update is added * Code clean up to have much more meaning to the code * Simplified the code and tests * fix quotes * Revert "fix quotes" This reverts commit 1b8d8e1. Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> Co-authored-by: vfdev <vfdev.5@gmail.com> * Docs update and auto_model change (pytorch#1197) * Fixes pytorch#1174 - Updated docs - auto_model puts params on device if they are not the device * - Updated docs * Update auto.py * Minor optimization for idist.get_* (pytorch#1196) * Minor optimization for idist.get_* * Set overhead threshold to 1.9 * Keep only test_idist_methods_overhead_nccl * Removed _sync_model_wrapper to implicitly check if we need to sync model This also reduces time of idist.get_* method calls vs native calls * Update test_native.py * autopep8 fix * Update test_native.py Co-authored-by: AutoPEP8 <> Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> * Propagate spawn kwargs from parallel to model's spawn (pytorch#1201) * Fixes pytorch#1199 - Updated code to propagate spawn kwargs - start_method is fork by default * Fixed bad syntax * Fixes pytorch#1198 - bug with CM in PascalVOC example (pytorch#1200) * Fixes pytorch#1198 - put CM to cpu before converting to numpy - removed manual recall computation, put into CM definition * Explicit CM compute by all proc and logging by 0 rank proc * Added link to Discuss.PyTorch forum (pytorch#1205) - Updated readme and FAQ * Fixed wrong IoU computation in Pascal VOC (pytorch#1204) * Fixed wrong IoU computation * use black to fix lint check error * Updated training code: - added custom_event_filter to log images less frequently - split events to avoid running validation twice in the end of the training * Fixed formatting Co-authored-by: Desroziers <sylvain.desroziers@ifpen.fr> * Fix Typo in `ignite.handlers.timing` (pytorch#1208) * ValueError -> TypeError * NotImplementedError -> NotImplemented * rollback ignite/engine/events [raise NotImplementedError] * fix misuses of exceptions in ignite/contrib/handlers/custom_events.py * remove period in exceptions * refactor corresponding unit tests * fix typo in ignite/handlers/timing.py * Fixes issue with logging XLA tensors (pytorch#1207) * [WIP] fixed typing * Fixes pytorch#1136 - fixed problem when all_reduce does not put result tensor to original device * REFACTOR: Early Return Pattern (if elif else -> if if return) (pytorch#1211) * Issue 1133 - Fixes flaky Visdom tests (pytorch#1149) * [WIP] inspect bug * Attempt to fix flaky Visdom tests * autopep8 fix Co-authored-by: vfdev-5 <vfdev.5@gmail.com> Co-authored-by: AutoPEP8 <> * Updated about page * Replaced teaser code by a notebook runnable in Colab (pytorch#1216) * Replaced teaser code by a notebook runnable in Colab * Updated teaser (py, ipynb) * Added support of Horovod (pytorch#1195) * [WIP] Horovod comp model * [WIP] Horovod comp model - Implemented spawn - Added comp model tests * Refactored test_utils.py into 3 files - we can better test new coming comp models * [WIP] Run horovod tests * [WIP] Horovod comp model + tests * autopep8 fix * [WIP] More tests * Updated utils tests * autopep8 fix * [WIP] more tests * Updated tests and code and cifar10 example * autopep8 fix * Fixed failing CI and updated code * autopep8 fix * Fixes failing test * Fixed bug with new/old hvd API and the config * Added metric tests * Formatting and docs updated * Updated frequency test * Fixed formatting and a typo in idist.model_name docs * Fixed failing test * Docs updates and updated auto methods according to horovod API * autopep8 fix * Cosmetics Co-authored-by: AutoPEP8 <> * metrics: add SSIM (pytorch#1217) * metrics: add SSIM * add scikit-image dependency * add distributed tests, fix docstring * .gitignore back to normal * Update ignite/metrics/ssim.py Co-authored-by: vfdev <vfdev.5@gmail.com> * .format(), separate functions * scalar input for kernel, sigma, fix py3.5 CI * apply suggestions * some fixes * fixed tpu tests * Minor code cosmetrics and raised err tolerance in tests * used list comprehension convolution, fixed tests * added uniform kernel, change tolerance, various image size tests * Update ignite/metrics/ssim.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/ssim.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Fix flake8 Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> Co-authored-by: vfdev <vfdev.5@gmail.com> * add the EpochOutputStore with tests (pytorch#1226) * add the EpochOutputStore with tests * add correct import and unify the test cases * fix checks from flake8 and isort Co-authored-by: Zhiliang@siemens <zhiliang.wu@siemens.com> * add horovod test (pytorch#1230) (pytorch#1231) Co-authored-by: Jeff Yang <ydcjeff@outlook.com> * Update README.md * Added idist.broadcast (pytorch#1237) * [WIP] Added idist.broadcast * Removed unused code * Added tests to increase coverage * Docker for users pytorch#1214 (pytorch#1218) * Docker for users pytorch#1214 - prebuilt docker image handling Ignite examples configuration * Docker for users pytorch#1214 - more complete basic image based on pytorch 1.5.1-cuda10.1-cudnn7-devel - with apex, opencv setups and pascal_voc2012 requirements _ container running with non-privileged user * Docker for users pytorch#1214 - improve Dockerfiles for vision and apex-vision (TORCH_CUDA_ARCH_LIST as argument) - propose apex-vision with multi-stage build * Docker for users pytorch#1214 - Dockerfiles for nlp and vision tasks with their apex version - user as root, Ignite examples added * Update README.md Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> Co-authored-by: vfdev <vfdev.5@gmail.com> * [BC-breaking] NotImplementedError -> NotImplemented (pytorch#1178) * NotImplementedError -> NotImplemented * returning NotImplemented, instead of raising it * make type restriction inside & add corresponding tests * autopep8 fix * remove extra spaces * Updates according to the review * Fixed unsupported f-string in 3.5 - added more tests * Updated docs and tests Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> Co-authored-by: AutoPEP8 <> Co-authored-by: vfdev-5 <vfdev.5@gmail.com> * Allow passing keyword arguments to save function on checkpoint. (pytorch#1245) * Allow passing keyword arguments to save function on checkpoint. * Change Docstring * Add tests for keywords to DiskSaver * autopep8 fix * Use pytest.raises instead of xfail. Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> Co-authored-by: AutoPEP8 <> * Docs updates and fix of black version (pytorch#1250) * Update governance.rst * Fix Exception misuse in `ignite.contrib.handlers.param_scheduler.py` (pytorch#1206) * ValueError -> TypeError * NotImplementedError -> NotImplemented * rollback ignite/engine/events [raise NotImplementedError] * fix misuses of exceptions in ignite/contrib/handlers/custom_events.py * remove period in exceptions * refactor corresponding unit tests * fix misuses of exceptions in ignite/contrib/handlers/param_scheduler.py & refactor corresponding unit tests * fix misuses of exceptions in ignite/contrib/handlers/param_scheduler.py & refactor corresponding unit tests (stricter: list/tuple -> TypeError & item of list/tuple -> ValueError) * autopep8 fix * remove extra spaces * autopep8 fix * add matches to pytest.raises * add match to pytest.raises * autopep8 fix * add missing tests * autopep8 fix * Update param_scheduler.py * revert previous modification Co-authored-by: AutoPEP8 <> Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> Co-authored-by: vfdev <vfdev.5@gmail.com> * Issue pytorch#1247 (pytorch#1252) * Delete test_custom_events.py * Delete custom_events.py * Removing depriciated CustomPeriodicEvent * Remove deprecated CustomPeriodicEvent * Update test_tqdm_logger.py * Remove deprecated CustomPeriodicEvent * Update test_tqdm_logger.py Adding needed space. * Removing CustomPeriodicEvent * Update handlers.rst * [WIP] Update readme for docker (pytorch#1254) * [WIP] Update readme for docker * Update README.md Co-authored-by: vfdev <vfdev.5@gmail.com> * Update README.md Co-authored-by: vfdev <vfdev.5@gmail.com> * [WIP] Update readme for docker - fix rendering * [WIP] Update readme for docker - add DockerHub Ignite repo link and images list * Updated readme Co-authored-by: vfdev <vfdev.5@gmail.com> * Update README.md * Update index.rst * Update common.py * Update CONTRIBUTING.md * [WIP] Added sync_bn to auto_model with tests (pytorch#1265) * Added dist support for EpochMetric and other similar metrics (pytorch#1229) * [WIP] Added dist support for EpochMetric with tests * Updated docs * [WIP] Added idist.broadcast * Removed unused code * [WIP] Updated code * Code and test updates * autopep8 fix * Replaced XLA unsupported type() method by attribute .dtype * Updated code Co-authored-by: AutoPEP8 <> * Fixes pytorch#1258 (pytorch#1268) - Replaced mp.spawn by mp.start_processes for native comp model * Updated CONTRIBUTING.md (pytorch#1275) * Updatd CONTRIBUTING.md * Update CONTRIBUTING.md * Rename Epoch to Iterations when using epoch_length with max_epochs=1 (pytorch#1279) * Set default description as none * Add test for description with max_epochs set to 1 * Change default description to use iterations when max_epochs=1 * Correct test_pbar_with_max_epochs_set_to_one * Modify tests to reflect change from epochs to iterations * Use engine.state.max_epochs instead of engine.state_dict() * Change Iterations to Iteration * Correct tests * Update progress bar docstring * Update tqdm_logger.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update README.md * [BC-breaking] Make Metrics accumulate values on device specified by user (pytorch#1232) (pytorch#1238) * Make Metrics accumulate values on device specified by user (pytorch#1232) * update accuracy to accumulate _num_correct in a tensor on the right device * update loss metric to accumulate _sum in a tensor on the right device * update mae metric to accumulate in a tensor on the right device * update mpd metric to accumulate in a tensor on the right device * update mse metric to accumulate in a tensor on the right device * update top k accuracy metric to accumulate in a tensor on the right device * update precision and recall metrics to accumulate in tensors on the right device * ..... * black formatting * reverted run*.sh * change all metrics default device to cpu except running_average * Update ignite/metrics/precision.py Co-authored-by: vfdev <vfdev.5@gmail.com> * remove Optional type from metric devices since default is cpu * add comment explaining lack of detach in accuracy metrics Co-authored-by: vfdev <vfdev.5@gmail.com> * Improved and fixed accuracy tests * autopep8 fix * update docs and docstrings for updated metrics (pytorch#1239) * update accuracy to accumulate _num_correct in a tensor on the right device * update loss metric to accumulate _sum in a tensor on the right device * update mae metric to accumulate in a tensor on the right device * update mpd metric to accumulate in a tensor on the right device * update mse metric to accumulate in a tensor on the right device * update top k accuracy metric to accumulate in a tensor on the right device * update precision and recall metrics to accumulate in tensors on the right device * ..... * black formatting * reverted run*.sh * change all metrics default device to cpu except running_average * Update ignite/metrics/precision.py Co-authored-by: vfdev <vfdev.5@gmail.com> * remove Optional type from metric devices since default is cpu * add comment explaining lack of detach in accuracy metrics * update docstrings and docs * Update ignite/metrics/accumulation.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/accumulation.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/accumulation.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/accuracy.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/fbeta.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/loss.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/metric.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/precision.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/recall.py Co-authored-by: vfdev <vfdev.5@gmail.com> * add comment explaining lack of detach in metrics docs * support device argument for running_average * update support for device argumenet for accumulation * fix and improve device tests for metrics * fix and improve device tests for metrics * fix TPU tests * Apply suggestions from code review * Apply suggestions from code review Co-authored-by: vfdev <vfdev.5@gmail.com> * Updates to metrics_impl (pytorch#1266) * update accuracy to accumulate _num_correct in a tensor on the right device * update loss metric to accumulate _sum in a tensor on the right device * update mae metric to accumulate in a tensor on the right device * update mpd metric to accumulate in a tensor on the right device * update mse metric to accumulate in a tensor on the right device * update top k accuracy metric to accumulate in a tensor on the right device * update precision and recall metrics to accumulate in tensors on the right device * ..... * black formatting * reverted run*.sh * change all metrics default device to cpu except running_average * Update ignite/metrics/precision.py Co-authored-by: vfdev <vfdev.5@gmail.com> * remove Optional type from metric devices since default is cpu * add comment explaining lack of detach in accuracy metrics * update docstrings and docs * Update ignite/metrics/accumulation.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/accumulation.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/accumulation.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/accuracy.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/fbeta.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/loss.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/metric.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/precision.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/recall.py Co-authored-by: vfdev <vfdev.5@gmail.com> * add comment explaining lack of detach in metrics docs * support device argument for running_average * update support for device argumenet for accumulation * fix and improve device tests for metrics * fix and improve device tests for metrics * fix TPU tests * Apply suggestions from code review * Apply suggestions from code review * detach tensors earlier in update * remove redundant to() call * ensure metrics aren't created on XLA devices * Fixed isort * move xla check to Metric.__init__ instead of individual metrics * update xla tests * replace deleted callable check * remove redundant precision and recall __init__ * replace precision/recall __init__ for docs rendering * add support for metrics_lambda with components on diff devices Co-authored-by: vfdev <vfdev.5@gmail.com> Co-authored-by: n2cholas <nicholas.vadivelu@gmai.com> * Update metrics.rst * Update metrics.rst * Fix TPU tests for metrics_impl branch (pytorch#1277) * update accuracy to accumulate _num_correct in a tensor on the right device * update loss metric to accumulate _sum in a tensor on the right device * update mae metric to accumulate in a tensor on the right device * update mpd metric to accumulate in a tensor on the right device * update mse metric to accumulate in a tensor on the right device * update top k accuracy metric to accumulate in a tensor on the right device * update precision and recall metrics to accumulate in tensors on the right device * ..... * black formatting * reverted run*.sh * change all metrics default device to cpu except running_average * Update ignite/metrics/precision.py Co-authored-by: vfdev <vfdev.5@gmail.com> * remove Optional type from metric devices since default is cpu * add comment explaining lack of detach in accuracy metrics * update docstrings and docs * Update ignite/metrics/accumulation.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/accumulation.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/accumulation.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/accuracy.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/fbeta.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/loss.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/metric.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/precision.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/recall.py Co-authored-by: vfdev <vfdev.5@gmail.com> * add comment explaining lack of detach in metrics docs * support device argument for running_average * update support for device argumenet for accumulation * fix and improve device tests for metrics * fix and improve device tests for metrics * fix TPU tests * Apply suggestions from code review * Apply suggestions from code review * detach tensors earlier in update * remove redundant to() call * ensure metrics aren't created on XLA devices * Fixed isort * move xla check to Metric.__init__ instead of individual metrics * update xla tests * replace deleted callable check * remove redundant precision and recall __init__ * replace precision/recall __init__ for docs rendering * add support for metrics_lambda with components on diff devices * fix epoch_metric xla test Co-authored-by: vfdev <vfdev.5@gmail.com> Co-authored-by: n2cholas <nicholas.vadivelu@gmai.com> * metrics_impl fix 2 gpu hvd tests and ensure consistent detaching (pytorch#1280) * update accuracy to accumulate _num_correct in a tensor on the right device * update loss metric to accumulate _sum in a tensor on the right device * update mae metric to accumulate in a tensor on the right device * update mpd metric to accumulate in a tensor on the right device * update mse metric to accumulate in a tensor on the right device * update top k accuracy metric to accumulate in a tensor on the right device * update precision and recall metrics to accumulate in tensors on the right device * ..... * black formatting * reverted run*.sh * change all metrics default device to cpu except running_average * Update ignite/metrics/precision.py Co-authored-by: vfdev <vfdev.5@gmail.com> * remove Optional type from metric devices since default is cpu * add comment explaining lack of detach in accuracy metrics * update docstrings and docs * Update ignite/metrics/accumulation.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/accumulation.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/accumulation.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/accuracy.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/fbeta.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/loss.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/metric.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/precision.py Co-authored-by: vfdev <vfdev.5@gmail.com> * Update ignite/metrics/recall.py Co-authored-by: vfdev <vfdev.5@gmail.com> * add comment explaining lack of detach in metrics docs * support device argument for running_average * update support for device argumenet for accumulation * fix and improve device tests for metrics * fix and improve device tests for metrics * fix TPU tests * Apply suggestions from code review * Apply suggestions from code review * detach tensors earlier in update * remove redundant to() call * ensure metrics aren't created on XLA devices * Fixed isort * move xla check to Metric.__init__ instead of individual metrics * update xla tests * replace deleted callable check * remove redundant precision and recall __init__ * replace precision/recall __init__ for docs rendering * add support for metrics_lambda with components on diff devices * fix epoch_metric xla test * detach output consistently for all metrics * fix horovod two gpu tests * make confusion matrix detaches like other metrics Co-authored-by: vfdev <vfdev.5@gmail.com> Co-authored-by: n2cholas <nicholas.vadivelu@gmai.com> * Fixes failing test on TPUs Co-authored-by: Nicholas Vadivelu <nicholas.vadivelu@gmail.com> Co-authored-by: AutoPEP8 <> Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> Co-authored-by: n2cholas <nicholas.vadivelu@gmai.com> * Specify tqdm to be less than or equal to v4.48.0 (pytorch#1293) * Fixes pytorch#1285 (pytorch#1290) - use mp.spawn for pytorch < 1.5 * Issue 1249 : fix ParamGroupScheduler with schedulers based on different optimizers (pytorch#1274) * remove **kwargs from LRScheduler * revert ParamGroupScheduler inheritance : remove ParamScheduler base class * use ParamGroupScheduler in ConcatScheduler * add tests for ParamGroupScheduler with multiple optimizers * autopep8 fix * fix doc example * fix from vfdev comments * refactor list of optimizers and paranames * add tests * autopep8 fix Co-authored-by: Desroziers <sylvain.desroziers@ifpen.fr> Co-authored-by: AutoPEP8 <> Co-authored-by: vfdev <vfdev.5@gmail.com> * remove prints (pytorch#1292) * remove prints * code formatting Co-authored-by: vfdev <vfdev.5@gmail.com> * Fix link to pytorch documents (pytorch#1294) * Fix link to pytorch documents * Fix too long lines Co-authored-by: vfdev <vfdev.5@gmail.com> * Added required_output_keys public attribute (1289) (pytorch#1291) * Fixes pytorch#1289 - Promoted _required_output_keys to be public as user would like to override it. * Updated docs * Fixed typo in docs (concepts). (pytorch#1295) * Setup Mypy check at CI step (pytorch#1296) * add mypy file * add mypy at CI step * add mypy step at Contributing.md Co-authored-by: vfdev <vfdev.5@gmail.com> * Update README.md * Docker for users with Horovod (pytorch#1248) * [WIP] Docker for users with Horovod - base / vision / nlp - with apex build * [WIP] Docker for users with Horovod - install horovod with .whl , add nccl in runtime image * Docker for users with Horovod - update Readmes for horovod images and configuration * Docker for users with Horovod - hvd tags/v0.20.0 - ignite examples with git sparse checkout * Docker for users with Horovod - update docs Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> Co-authored-by: vfdev <vfdev.5@gmail.com> * Added input data type check (pytorch#1301) * Update metrics.rst * Docker for users with MSDeepSpeed (pytorch#1304) * Docker for users with DeepSpeed - msdp-base | vision | nlp * Docker for users with DeepSpeed - rename images extensions to msdp-apex-* Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> * Update README.md * Updated hvd images + scripts (pytorch#1306) * Updated hvd images - added scripts to auto build and push images * Updated scripts according to the review * Update BatchFiltered docstring * Improve Canberra metric (pytorch#1312) * Add abs on denominators in canberra metric and use sklearn in test * autopep8 fix * improve docstring * use canberra on total computation * Update canberra_metric.py Co-authored-by: Desroziers <sylvain.desroziers@ifpen.fr> Co-authored-by: AutoPEP8 <> Co-authored-by: vfdev <vfdev.5@gmail.com> * Improve Canberra metric for DDP (pytorch#1314) * refactor canberra metric for ddp * improve canberra for ddp * autopep8 fix * use tensor for accumulation * detach output * remove useless item() * add missing move to device * refactor detach() and move * refactor to remove useless view_as and to() * do not expose reinit__is_reduced ad sync_all_reduce Co-authored-by: Desroziers <sylvain.desroziers@ifpen.fr> Co-authored-by: AutoPEP8 <> * Improve ManhattanDistance metric for DDP (pytorch#1320) * fix manhattan distance and improve for ddp * replace article by sklearn documentation * Update ignite/contrib/metrics/regression/manhattan_distance.py Co-authored-by: vfdev <vfdev.5@gmail.com> Co-authored-by: Desroziers <sylvain.desroziers@ifpen.fr> Co-authored-by: vfdev <vfdev.5@gmail.com> * Update README.md * Update about.rst * Update Circle CI docker image to pytorch 1.6.0 (pytorch#1325) * Update Circle CI docker image to pytorch 1.6. Closes pytorch#1225 * Update Circle CI docker image to pytorch 1.6. Closes pytorch#1225 (pytorch#1322) * Revert "Update Circle CI docker image to pytorch 1.6. Closes pytorch#1225 (pytorch#1322)" (pytorch#1323) This reverts commit 22ecac6. * Update Circle CI docker image to pytorch 1.6.0 Closes pytorch#1225 Co-authored-by: vfdev <vfdev.5@gmail.com> * Update CONTRIBUTING.md * Add new logo (pytorch#1324) * Update Circle CI docker image to pytorch 1.6. Closes pytorch#1225 (pytorch#1322) * Revert "Update Circle CI docker image to pytorch 1.6. Closes pytorch#1225 (pytorch#1322)" (pytorch#1323) This reverts commit 22ecac6. * add logos * remove past logo from readme * add logo guidelines * Update README.md Changed size to 512 * Updated docs logo Co-authored-by: Juan Miguel Boyero Corral <juanmi1982@gmail.com> Co-authored-by: vfdev <vfdev.5@gmail.com> * Fixed CI on GPUs with pth 1.6.0 (pytorch#1326) * Fixed CI on GPUs with pth 1.6.0 - updated tests/run_gpu_tests.sh file - updated nccl version to 2.7 for Horovod build * Fixed hvd failing tests * Updated about us (pytorch#1327) - Added CITATION file * Improve R2Score metric for DDP (pytorch#1318) * imrpove r2 for ddp * autopep8 fix * _num_examples type is scalar * autopep8 fix Co-authored-by: Desroziers <sylvain.desroziers@ifpen.fr> Co-authored-by: AutoPEP8 <> Co-authored-by: vfdev <vfdev.5@gmail.com> * Fix canberra docstring : reference already in namespace (pytorch#1330) Co-authored-by: Desroziers <sylvain.desroziers@ifpen.fr> Co-authored-by: vfdev <vfdev.5@gmail.com> * Improve State and Engine docs pytorch#1259 (pytorch#1333) - add State.restart() method - add note in Engine.run() docstring / improve error message - unit test for State.restart() * pytorch#1336 missing link in doc fix (pytorch#1337) * Make SSIM accumulate on specified device (pytorch#1328) * make ssim accumulate on specified device * keep output on original device until accumulation * implement more efficient kernel creation Co-authored-by: vfdev <vfdev.5@gmail.com> * Update documentation for terminate Events (pytorch#1338) * Update documentation for terminate Events (pytorch#1332) * Converted raw table in docstring to list table * Update README.md Co-authored-by: Anmol Joshi <anmolsjoshi@gmail.com> Co-authored-by: Sylvain Desroziers <sylvain.desroziers@gmail.com> Co-authored-by: Marijan Smetko <marijansmetko123@gmail.com> Co-authored-by: Desroziers <sylvain.desroziers@ifpen.fr> Co-authored-by: Lavanya Shukla <lavanya.shukla12@gmail.com> Co-authored-by: Akihiro Matsukawa <amatsukawa@users.noreply.github.com> Co-authored-by: Jake Henning <59198928+jkhenning@users.noreply.github.com> Co-authored-by: Elijah Rippeth <elijah.rippeth@gmail.com> Co-authored-by: Wang Ran (汪然) <wrran@outlook.com> Co-authored-by: Joseph Spisak <spisakjo@gmail.com> Co-authored-by: Ryan Wong <ryancwongsa@gmail.com> Co-authored-by: Joel Hanson <joelhanson025@gmail.com> Co-authored-by: Wansoo Kim <rladhkstn8@gmail.com> Co-authored-by: Jeff Yang <ydcjeff@outlook.com> Co-authored-by: Zhiliang <ZhiliangWu@users.noreply.github.com> Co-authored-by: Zhiliang@siemens <zhiliang.wu@siemens.com> Co-authored-by: François COKELAER <francois.cokelaer@gmail.com> Co-authored-by: Kilian Pfeiffer <kilsen512@gmail.com> Co-authored-by: Tawishi <55306738+Tawishi@users.noreply.github.com> Co-authored-by: Michael Hollingworth <sisoac@hotmail.com> Co-authored-by: Nicholas Vadivelu <nicholas.vadivelu@gmail.com> Co-authored-by: n2cholas <nicholas.vadivelu@gmai.com> Co-authored-by: Benjamin Lo <wolfnun011@gmail.com> Co-authored-by: Nidhi Zare <zarenidhi5@gmail.com> Co-authored-by: Keisuke Kamahori <keisuke1258@gmail.com> Co-authored-by: Théo Dumont <theodumont28@hotmail.fr> Co-authored-by: kenjihiraoka <31676903+kenjihiraoka@users.noreply.github.com> Co-authored-by: Juan Miguel Boyero Corral <juanmi1982@gmail.com> Co-authored-by: Isabela Presedo-Floyd <50221806+isabela-pf@users.noreply.github.com> Co-authored-by: Sumit Roy <sumit.roy@unacademy.com> Co-authored-by: Shashank Gupta <shaz4194@gmail.com>

Fixes #1074

This PR adds a feature to set custom filename by updating the class attribute

filename_pattern.Description:

There was an issue #1074 reported some days back where the "=" in the checkpoint name can cause certain issues in some operating systems.

I have added a class attribute

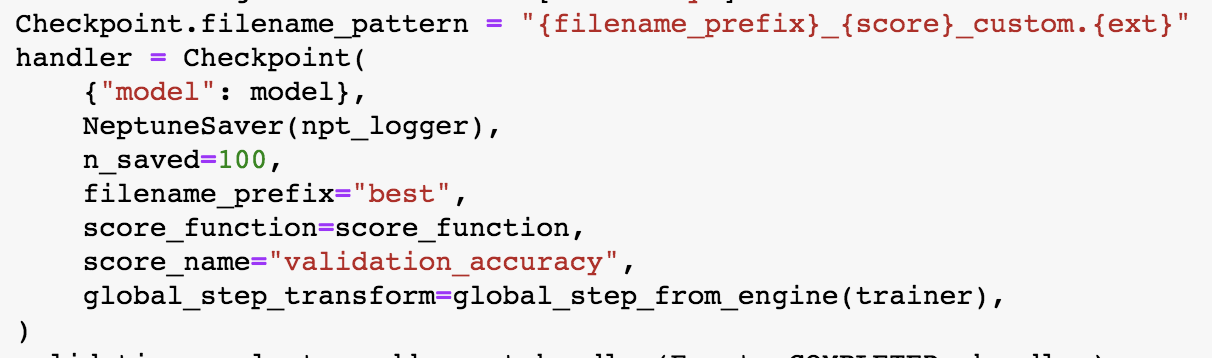

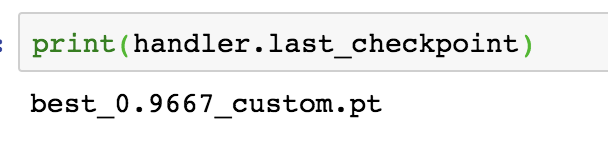

filename_patternwith the value{filename_prefix}_{name}_{global_step}_{score_name}={score}.{ext}, This file pattern is used when we save a checkpoint. If we update this attribute to a new pattern the checkpoint name will be updated to the new pattern which the user specifies.Example:

Checkpoint:

Output:

Checklist: