Hybrid images are a special type of image that takes advantage of perceptual grouping to change the meaning of an image as the viewing distance changes [MIT Publication].

Once this repo has been cloned, you can copy the hybridimages folder into your project and use it by importing it as a package:

from hybridimages.hybrid_image import createHybridImage

To create a hybrid image, you will need two images that are perceptually aligned (e.g. if you want to blend two faces, the eyes and noses must align).

Load each image as a numpy array, then set the free parameters lo_sigma and hi_sigma. These need to be tuned for your specific images, which takes some experimentation.

from PIL import Image

import numpy as np

import matplotlib.pyplot as plt

from hybridimages.hybrid_image import createHybridImage

# Load images and convert to numpy arrays

img_A = Image.open('data/dog.bmp')

img_B = Image.open('data/cat.bmp')

lo_freq_img = np.asarray(img_A)

hi_freq_img = np.asarray(img_B)

# define free parameters (experimental tuning required)

lo_sigma, hi_sigma = 6.5, 5.0

# generate hybrid image

hybrid = createHybridImage(lo_freq_img, lo_sigma, hi_freq_img, hi_sigma)

# preview image

plt.imshow(hybrid)

plt.show()

# save to disk

im = Image.fromarray(hybrid)

im.save('out/hybrid.png')

If everything has worked, then you will see this message in your terminal, followed by the image opening up automatically.

Hybrid image computed successfully

If your image is greyscale, load it as a single channel image as follows:

image = Image.open(path).convert('L')

Note: This package only relies on numpy to perform convolution. The unit tests make use of scipy.signal.convolve2d as a basis for comparison and checking correctness.

Matplotlib is required to visualise images and Pillow is used to load image files.

Sometimes Python does not recognise the import even though the package has been copied. In this case, add the following to the top of your script:

import sys

import os

sys.path.append(os.path.dirname(os.path.abspath(__file__)) + "/..")

When viewing a greyscale image, matplotlib may fail to render it correctly. The following can fix this issue.

img = np.squeeze(img)

plt.imshow(img, cmap="gray")

See Harness.py for a self-contained example. It also contains some helper methods for common image operations such as loading, viewing and saving, in case you're not familiar with them.

The following is an example of a hybrid image that has been downsampled, which makes the effect easy to see without having to walk further away from your screen.

A hybrid image is obtained by taking two images and adding a low frequency version of one to a high frequency version of another.
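The idea can be sketched in a few lines. This is only an illustration, not the code used by this package: it assumes greyscale (2D) arrays, uses scipy.ndimage.gaussian_filter for the blur, and assumes the high frequency image is built by subtracting a blurred copy from the original. The function name hybrid_sketch is hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def hybrid_sketch(lo_img, lo_sigma, hi_img, hi_sigma):
    """Add a low-pass version of lo_img to a high-pass version of hi_img."""
    lo_img = lo_img.astype(np.float64)
    hi_img = hi_img.astype(np.float64)

    # Low frequencies: Gaussian blur of the first image.
    low = gaussian_filter(lo_img, sigma=lo_sigma)

    # High frequencies: the second image minus its own blurred copy
    # (an assumed, commonly used way to build a high-pass filter).
    high = hi_img - gaussian_filter(hi_img, sigma=hi_sigma)

    # Sum the two bands, then round and clip back to the 8-bit range.
    return np.clip(np.rint(low + high), 0, 255).astype(np.uint8)


# Random stand-in images, only to show the call.
rng = np.random.default_rng(0)
img_a = rng.integers(0, 256, size=(64, 64), dtype=np.uint8)
img_b = rng.integers(0, 256, size=(64, 64), dtype=np.uint8)
result = hybrid_sketch(img_a, 6.5, img_b, 5.0)
```

For colour images the blur would need to be applied per channel, which this sketch leaves out.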

A low frequency image is obtained by running a low-pass filter on an image. A high frequency image is obtained by running a high-pass filter on an image. Examples of applying a low-pass and a high-pass filter are shown below.

An image is filtered by applying an operation called convolution. We take a matrix with odd dimensions, called the kernel (odd so that it has an element in the centre), overlay it on the image and multiply element-wise. The products are summed to give a single element, which represents one pixel in the output matrix. The kernel performs a raster scan over the image during convolution, as shown in the animation below.

Source: An intuitive guide to Convolutional Neural Network - freeCodeCamp

In the example above, note the dotted line around the image (blue). This is padding, which stops the kernel from 'falling off' the edge of the image. In this implementation, zero-padding is used. A good explanation can be found on DeepLizard.
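A minimal numpy-only sketch of zero-padded convolution is given here to make the raster scan concrete. It is not taken from this package; the function name convolve2d_zero_pad is hypothetical and it assumes a 2D (greyscale) image. Strictly speaking, convolution flips the kernel before overlaying it. For symmetric kernels such as a Gaussian the flip makes no difference.

```python
import numpy as np


def convolve2d_zero_pad(image, kernel):
    """Convolve a 2D image with an odd-sized 2D kernel using zero-padding."""
    k_rows, k_cols = kernel.shape
    if k_rows % 2 == 0 or k_cols % 2 == 0:
        raise ValueError("kernel dimensions must be odd")

    pad_r, pad_c = k_rows // 2, k_cols // 2
    # Surround the image with zeros so the kernel never 'falls off' the edge.
    padded = np.pad(image.astype(np.float64), ((pad_r, pad_r), (pad_c, pad_c)))

    # True convolution flips the kernel in both axes before sliding it.
    flipped = kernel[::-1, ::-1]

    out = np.zeros(image.shape, dtype=np.float64)
    for r in range(image.shape[0]):        # raster scan: row by row,
        for c in range(image.shape[1]):    # left to right
            window = padded[r:r + k_rows, c:c + k_cols]
            out[r, c] = np.sum(window * flipped)
    return out


# A kernel with a single 1 in the centre leaves the image unchanged.
image = np.arange(16, dtype=np.float64).reshape(4, 4)
identity = np.zeros((3, 3))
identity[1, 1] = 1.0
same = convolve2d_zero_pad(image, identity)
```

The explicit double loop is slow for large images but mirrors the animation step by step. The output has the same shape as the input because of the padding.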

More generally, matrix convolution is defined as:

In this definition, we think of X as the input image and Y as the kernel.
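For reference, one standard way to write the two-dimensional discrete convolution is shown here. The index names and the kernel size of (2a+1) by (2b+1) are assumptions made for illustration, with Y indexed from its centre element and X taken to be zero outside the image (zero-padding):

$$
(X * Y)[i, j] = \sum_{m=-a}^{a} \sum_{n=-b}^{b} Y[m, n] \, X[i - m,\; j - n]
$$

Each output pixel at position (i, j) is therefore a weighted sum of the input pixels around (i, j), with the weights given by the kernel.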

The values inside the kernel determine the type of image filtering we perform. In the example figure below, the kernel represents a Sobel operator, which is commonly used for edge detection. A list of common kernels can be found on Wikipedia.

Source: freeCodeCamp

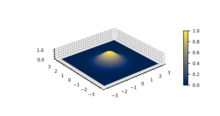
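As a small illustration of how the kernel values decide the result, this sketch applies a Sobel kernel to a toy image with a single vertical edge. It uses scipy.signal.convolve2d (the same function the unit tests compare against) rather than this package's own convolution, and the toy image is made up for the example.

```python
import numpy as np
from scipy.signal import convolve2d

# Sobel kernel for horizontal gradients (it responds to vertical edges).
sobel_x = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)

# Toy image: dark left half, bright right half, so one vertical edge.
image = np.zeros((5, 6), dtype=np.float64)
image[:, 3:] = 1.0

# mode="same" keeps the output the same size as the input;
# boundary="fill" with fillvalue=0 is zero-padding.
edges = convolve2d(image, sobel_x, mode="same", boundary="fill", fillvalue=0)

# Non-zero values mark where the brightness changes from left to right.
print(np.abs(edges))
```

The response is non-zero in the two columns next to the edge and zero in the flat interior regions. Zero-padding also produces a response along the bright right-hand border, because the padding looks like a drop to black.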

To create hybrid images, Gaussian blur is used. This convolves the input image with a kernel sampled from a Gaussian function, which is visualised below. The function takes a parameter, sigma (its standard deviation), which in the context of image convolution controls how much the image is blurred: a larger sigma gives a stronger blur.
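A Gaussian kernel can be built with numpy alone, as in this sketch. The function name gaussian_kernel and the rule for choosing the kernel size from sigma are assumptions for illustration. This package may size its kernel differently.

```python
import numpy as np


def gaussian_kernel(sigma, size=None):
    """Return a normalised, odd-sized 2D Gaussian kernel."""
    if size is None:
        # Assumed rule of thumb: cover about +/- 4 sigma, forced to be odd.
        size = int(8.0 * sigma + 1.0)
        if size % 2 == 0:
            size += 1

    half = size // 2
    # Coordinates measured from the centre element of the kernel.
    y, x = np.mgrid[-half:half + 1, -half:half + 1]

    kernel = np.exp(-(x ** 2 + y ** 2) / (2.0 * sigma ** 2))
    # Normalise so the weights sum to 1 and overall brightness is preserved.
    return kernel / kernel.sum()


small = gaussian_kernel(1.0)   # narrow kernel, mild blur
large = gaussian_kernel(5.0)   # wide kernel, strong blur
```

A larger sigma spreads the weight over more neighbouring pixels, so the centre weight drops and the blur gets stronger.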