-

Notifications

You must be signed in to change notification settings - Fork 1.1k

Add primitive compiletime operations on singleton types #7628

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Add primitive compiletime operations on singleton types #7628

Conversation

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hello, and thank you for opening this PR! 🎉

All contributors have signed the CLA, thank you! ❤️

Have an awesome day! ☀️

|

This is exciting! I contributed a lot to singleton-ops, and I wouldn't mind at all to retire it in favor of true compiler support. I will provide extensive feedback when I'll review the work. |

|

Topics for discussion:

|

|

It seems that I've broken some tests. I'll work on fixing those. @soronpo, thank you! I think the guiding principle should be to aim for support for all primitive operations on all supported singleton types. It would seem arbitrary to decide that certain types or certain operations should not be supported, especially when adding support for a primitive operation is as trivial as it is. So to answer your points:

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

2\. However, it may possible to emulate overloading by using union types and match types, as follows:

I think (as I mentioned in my review comment) that <: AnyVal should suffice. A union type is an interesting option, but I think we over-complicate the compiler. The match operation will throw an error anyway if the type is not supported, so I don't think the tight upper-bound limit is required.

A NoDenotation throws an AssertionError when getting its owner. Instead of crashing when passed a NoDenotation (which may happen in certain erroneous cases such as tests/neg/i3083.scala), return false.

Unconditionally normalizing type applications results can result in an infinite loop (e.g. in tests/neg-custom-args/matchtype-loop.scala), so this normalization should happen more conservatively.

As suggested in code review

|

What do you think about val oneCT : TwoFace.Int[1] = TwoFace.Int(1)

val one : Int = 1

val oneRT : TwoFace.Int[Int] = TwoFace.Int(one)

val twoCT : TwoFace.Int[2] = oneCT + oneCT

val twoRT1 : TwoFace.Int[Int] = oneCT + oneRT

val twoRT2 : TwoFace.Int[Int] = oneRT + oneCT

val twoRT3 : TwoFace.Int[Int] = oneRT + oneRTCurrently the dotty inline parameters mechanism only allows compile-time values, so I'm still forced to use the TwoFace concept. So for this to work, I require |

|

To clarify further, ideally I would like to be able to write: import scala.compiletime.ops._

object TwoFace {

opaque type Int[T <: scala.Int] = scala.Int

object Int {

def apply[T <: scala.Int](value : T) : Int[T] = value

protected def force[T <: scala.Int](value : scala.Int) : Int[T] = value

given Ops: {

def [L <: scala.Int, R <: scala.Int](left : Int[L]) + (right : Int[R]) : Int[L + R] = force[L + R](left + right)

}

}

} |

These are simply checked as the supertype. For instance, `Int + Int` is subtype of `Int`. This allows for more graceful failure when more general type arguments are provided.

|

This is a good point, thank you! I think typing I've implemented it in 4d73d89 by falling back to a type check with the super-type when constant folding fails. With this change, I'm able to make the program below compileimport scala.compiletime.ops._

object TwoFace {

opaque type Int[T <: scala.Int] = scala.Int

object Int {

def apply[T <: scala.Int](value : T) : Int[T] = value

protected def force[T <: scala.Int](value : scala.Int) : Int[T] = value

def [L <: scala.Int, R <: scala.Int](left : Int[L]) + (right : Int[R]) : Int[L + R] = force[L + R](left + right)

}

}

object TestTwoFace {

import TwoFace.Int.+

val oneCT : TwoFace.Int[1] = TwoFace.Int(1)

val one : Int = 1

val oneRT : TwoFace.Int[Int] = TwoFace.Int(one)

val twoCT : TwoFace.Int[2] = oneCT + oneCT

val twoRT1 : TwoFace.Int[Int] = oneCT + oneRT

val twoRT2 : TwoFace.Int[Int] = oneRT + oneCT

val twoRT3 : TwoFace.Int[Int] = oneRT + oneRT

} |

|

This is awesome! We need to make sure we don't screw up invariance. val invarianceError : TwoFace.Int[Int] = TwoFace.Int[1](1) //error

val worksDueToWidening : TwoFace.Int[Int] = TwoFace.Int(1) //works due to widening

val weirdButOK : TwoFace.Int[Int+0] = TwoFace.Int[1](1)Of course, I would change the `TwoFace` implementation above to be covariant:import scala.compiletime.ops._

object TwoFace {

opaque type Int[+T <: scala.Int] = scala.Int

object Int {

def apply[T <: scala.Int](value : T) : Int[T] = value

protected def force[T <: scala.Int](value : scala.Int) : Int[T] = value

def [L <: scala.Int, R <: scala.Int](left : Int[L]) + (right : Int[R]) : Int[L + R] = force[L + R](left + right)

}

}

object TestTwoFace {

import TwoFace.Int.+

val oneCT : TwoFace.Int[1] = TwoFace.Int(1)

val one : Int = 1

val oneRT : TwoFace.Int[Int] = TwoFace.Int(one)

val oneRT2 : TwoFace.Int[Int] = TwoFace.Int[1](1) //works due to covariance

val twoCT : TwoFace.Int[2] = oneCT + oneCT

val twoRT1 : TwoFace.Int[Int] = oneCT + oneRT

val twoRT2 : TwoFace.Int[Int] = oneRT + oneCT

val twoRT3 : TwoFace.Int[Int] = oneRT + oneRT

} |

|

Maybe now it will be easier to convince the SIP committee to support unary types (SIP36) so we can replace |

|

This might be a little more tough, but what about implementing algebraic rules within the type system (for the discussed ops)? def commutative[A <: Int, B <: Int](implicit ev : A + B =:= B + A) : Unit = {}

def associative[A <: Int, B <: Int, C <: Int](implicit ev : A + (B + C) =:= (A + B) + C) : Unit = {}

//others rules...Of course, in case of overflows or precision issues, these rules may not apply, so I'm open to other opinions on the matter. |

|

The covariant implementation you gave pretty much works as expected, so that's good news. The only line that I cannot compile in your example is the following: val twoCT : TwoFace.Int[2] = oneCT + oneCTI've narrowed down the problem to type inference (and not a bug in the val twoCT: TwoFace.Int[2.type] =

TwoFace.Int.+[Int, Int](TestTwoFace.oneCT)(TestTwoFace.oneCT)I'm not exactly sure of why it infers Helping out the type inference by explicitly writing the type parameters solves the problem, and makes the line compile: val twoCT: TwoFace.Int[2] = TwoFace.Int.+[1, 1](oneCT)(oneCT) |

|

What would be a good use-case for these algebraic rules? As a small aside, the examples you gave (commutativity and associativity) behave more like a test, rather than like a law that can be proven. Because these rules are true (for def badLaw[A <: Int, B <: Int](implicit ev: A + B =:= A - B) : Unit = {}Because badLaw[Int, Int]

badLaw[Int, 20]

badLaw[1, 0]

badLaw[0, 0]It only complains if I can give it a counter-example: badLaw[3, 1] // error, implicit not found |

Not much of a use-case, but the goal is regularity (notice the switched positions of import scala.compiletime.ops._

object TwoFace {

opaque type Int[T <: scala.Int] = scala.Int

object Int {

def apply[T <: scala.Int](value : T) : Int[T] = value

protected def force[T <: scala.Int](value : scala.Int) : Int[T] = value

def [L <: scala.Int, R <: scala.Int](left : Int[L]) + (right : Int[R]) : Int[L + R] = force[R + L](left + right) // switched positions

}

} |

|

I'm not convinced about |

It won't work with one direction. If |

|

To clarify, this is exactly what happens at the term level: val one : Int = 1

one * 2 // : Int

one * 3 // : Int

1 * 2 // : 2

1 * 3 // : 3 |

What do you mean by "won't work" ? you can have two subtypes of a given type whose sets of values partially overlap. |

Well, this actually depends on whether or not algebraic rules are applied to the typesystem. val one : Int = 1

val tfA = TwoFace.Int(one) + TwoFace.Int(1) // : TwoFace.Int[Int + 1]

val tfB = TwoFace.Int(1) + TwoFace.Int(one) // : TwoFace.Int[1 + Int]

summon[tfA.type =:= tfB.type] //errorThis error is weird. |

|

When |

|

I think adding algebraic rules on integer operations is outside the scope of this PR. The first step, and what's the most urgently missing at the moment, is a way perform integer operations on singleton types. One argument to limit the scope to only reduce when both types are singleton would be that doing something more elaborate might embed more knowledge in the compiler than there is in the runtime. The JVM know about |

|

I think that @OlivierBlanvillain raises a very good point. Currently, the following type-checks: object TwoFace {

opaque type Int[T <: scala.Int] = scala.Int

object Int {

def apply[T <: scala.Int](value : T) : Int[T] = value

protected def force[T <: scala.Int](value : scala.Int) : Int[T] = value

def [L <: scala.Int, R <: scala.Int](left : Int[L]) / (right : Int[R]) : Int[L / R] = force[R / L](left + right) // switched positions

}

}This may be fine for addition, which is commutative, but it's not acceptable for division, in my opinion. I suggest that we go back to only reducing singleton args for now, and not cases like So to sum up the conversation thus far: Remains in this PR

Once I've done those two things, I think the PR is ready to be merged. Possible extensions, for future PRs |

I'm inclined to agree because covariance should handle this properly. val one : Int = 1

val a : TwoFace.Int[Int] = TwoFace.Int(one) + TwoFace.Int(1)

The most important thing- the covariance error you mention here must be dealt with, IMO. |

|

For proof of greater usability, I think this PR should at least add the following:

All the above will bring us much further for people to be able to use this feature to replace the libraries Example code: import scala.compiletime.ops._

opaque type Positive = Int

object Positive {

type Check[T] = Require[T > 0, "The value provided isn't positive. Found: " + ToString[T]]

def apply[T <: Int](value : T)(given Check[T]) : Positive = value

}

val eight = Positive(8)

val negError = Positive(-1) //error: The value provided isn't positive. Found: -1 |

|

Instead of type Require[Cond <: Boolean, Msg <: String] = Cond match {

case true => Nothing

case false => Error[Msg]

} |

Oh, it seems I forgot about inline methods 🤦♂️ |

Well, it turns out that it is currently impossible to mix opaque types and inline defs, so using the compiletime ops for this will be nice. I created an issue for the inline defs (feature-lacking) problem: lampepfl/dotty-feature-requests#82 |

Division by 0 and modulo by 0 would otherwise crash the compiler. Throwing an error prints an error message positioned on the operator, and does not prevent other type errors from being found and shown.

As discussed in scala#7628

I agree, and I think we can open an issue for now. As far as I can tell, it's a type inference bug, not a bug with this implementation or with covariance, so I'm inclined to treat them separately. If merged, this PR should provide some nice motivation to fix it, IMO. As for the other points:

Preferably, I'd like to keep changes somewhat incremental rather than have a big PR doing many different things. So I think this is ready for your review @OlivierBlanvillain, and if it's all fine and the tests pass, I think we can merge. |

|

OK, I think it's better to have an |

* Use NoType instead of Option[Type] * Move a Set to a val to avoid recomputing it on every call

This commit serves as a proof-of-concept of "overloaded" singleton ops. `+` is already addition, so adding concatenation requires "overloading" the `+` op. Scala does not support overloaded type aliases, so we must have two + types in different objects. However: - unqualified references (`+`) can be ambiguous (`int.+` or `string.+`) - qualified types (e.g. `int.+`) cannot be used infix The solution is to have a top-level `+` match type that redirects to the qualified `int.+` or `string.+`. These are kept private, as an implementation detail. The top-level match type is not considered as a compiletime applied type, as we cannot do constant folding before the match type has been applied.

As discussed IRL with @OlivierBlanvillain, the previous approach with a match type dispatching to the correct overloaded op is not ideal, since adding more ops will mean modifying previous ops. For now, we therefore focus on having a good internal implementation of the ops. In some cases, the syntax is not ideal. For instance: import scala.compiletime.ops.int._ import scala.compiletime.ops.string._ summon[1 + 2] // ^ Reference to + is ambiguous // it is both imported by import scala.compiletime.ops.int._ // and imported subsequently by import scala.compiletime.ops.string._ Or: import scala.compiletime.ops._ summon[1 int.+ 2] // ^ an identifier expected, but '.' found These cases can be improved by allowing infix qualified types, or implementing resolution of member types (i.e. `A + B` as `A#+[B]` instead of `+[A, B]`).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

In some cases, the syntax is not ideal. For instance:

import scala.compiletime.ops.int._

import scala.compiletime.ops.string._

summon[1 + 2]

// ^ Reference to + is ambiguous

// it is both imported by import scala.compiletime.ops.int._

// and imported subsequently by import scala.compiletime.ops.string._

IMO this split is the wrong move in terms of usability. Especially if we look at it in long-term when we want to add other operations support. There is also irregularity in == and !=. Why are they not split?

However, I believe the code you used to demonstrate how to combine +[ <: Int, <: Int] and +[ <: String, <: String] offers a good compromise. I think that ops.int._, op.string._ etc. should be regarded as internal and use the match types trick to create ops._ (or ops.general._ / ops.all._) which most users will use.

| @infix type ==[X <: AnyVal, Y <: AnyVal] <: Boolean | ||

| @infix type !=[X <: AnyVal, Y <: AnyVal] <: Boolean | ||

|

|

||

| @infix type +[X <: Int | String, Y <: Int | String] = (X, Y) match { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I actually like this example a lot. It's a shame to delete it. I propose to change it to a test code (or provide it as example in a document).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Good point -- I've done both in 2cab591, and generally documented the feature.

| import scala.annotation.infix | ||

|

|

||

| package object ops { | ||

| @infix type ==[X <: AnyVal, Y <: AnyVal] <: Boolean |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I believe == and != should be split as well. Either everything is split according to the supertype or non of it is.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I moved them into scala.compiletime.ops.any in 8c117c1.

The alternative was to duplicate them into each subpackage for each supported type, and then duplicate the constant folding code... which felt like a lot of duplication. Seeing that == and != are defined on Any, I think this solution makes the most sense. It also emphasizes that equality is between to Any values, and that 1 == "1" will return false.

+ Add test to check that code example in the documentation compiles

This is more consistent with the other ops, which are also split depending on the type of the argument.

|

This is a very interesting idea and would have many applications in anything related to numerics. From the looks of it, it could help with having compile-time shape checks for something like Tensorflow! |

|

@frobinet I haven't mentioned it in the PR, but that's actually exactly what I'm hoping to achieve with this feature! I've been working on that as my semester project at EPFL over the past few months, and I plan on open-sourcing my implementation within the next two weeks or so. There are indeed many good applications for this feature. For instance, a paper called "Dex: array programming with typed indices" mentions the following:

Well, that's what this PR implements 🎉 It would make it possible to type operations like |

|

Sounds great. The only place I've seen this is C++ where you can just stick random computations inside template definitions, but that's just fancy copy-pasting. I guess it would be hard to get the reshape correct there, not to mention the horrendous error messages the compiler would throw at you if you get shapes wrong. Anyway, interesting project, I'll follow it one you're open-source! |

| package object ops { | ||

| object any { | ||

| /** Equality comparison of two singleton types. | ||

| * ```scala |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

No need to specify scala here: that's the default inside scaladoc.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

| } | ||

|

|

||

| object int { | ||

| /** Addition of two `Int` singleton types. Type-level equivalent of `scala.Int.+` |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I would remove all the Type-level equivalant comments since it's pretty obvious. Also it's inaccurate as + is not actually a method on object Int...

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

All right, fixed in 7e0a1db.

Remove unnecessary comments about singleton ops being type-level equivalents of term-level operations

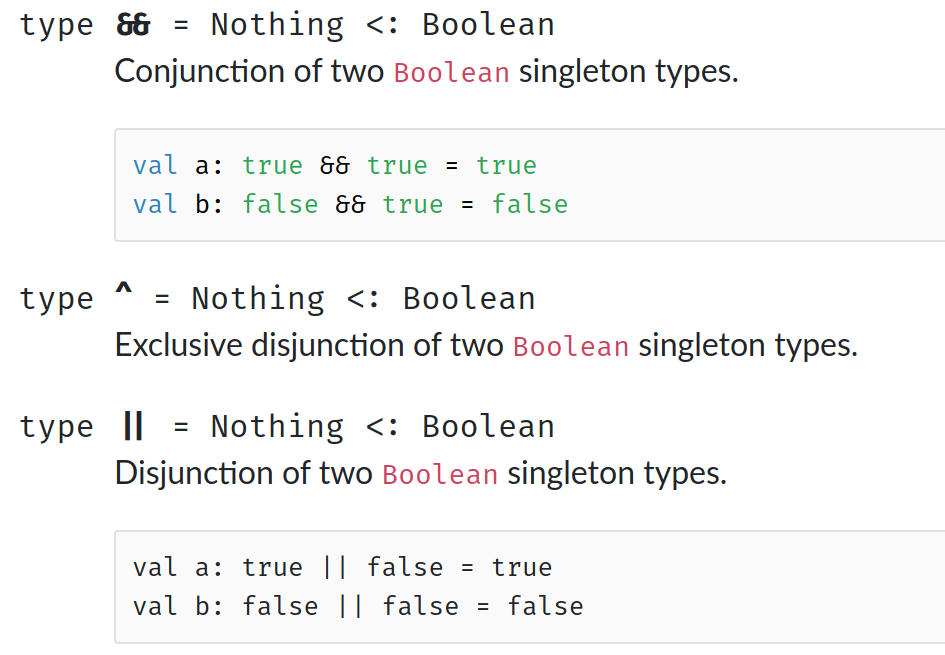

This PR adds support for type-level operations on singletons, making it possible to write the following:

Having these primitive operations makes it possible to define more complex operations through match types, e.g.:

Background

With

scala.compiletime.Sand match types, it's possible to implement a variety of operations on singletons. For instance, addition and multiplication can be implemented as follows:However, as these are implemented on the low-level primitive of

scala.compiletime.S, they are highly recursive. Interpreting these match types quickly runs out of memory (for instance,100 * 100exceeds the recursion limit on default settings).Further work and discussion

A natural extension to this would be to be able to type term-level operations as the precise type-level equivalent, perhaps as an opt-in, like

val x: 1 + 2 = 1 + 2.Related