Configured and ready to be used for Log, Metrics, APM, Alerting, Machine Learning, and Security (SIEM) use cases.

Elastic Stack (ELK) Docker Composition, preconfigured with Security, Monitoring, and Tools; Up with a Single Command.

Suitable for Demoing, MVPs and small production deployments.

Stack Version: 8.10.2 🎉 - Based on Official Elastic Docker Images

You can change the Elastic Stack version by setting `ELK_VERSION` in the `.env` file and rebuilding your images. Any version >= 8.0.0 is compatible with this template.
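Below is a minimal sketch of guarding that version change before rebuilding. It assumes `.env` contains a plain `ELK_VERSION=X.Y.Z` line. Both function names are hypothetical helpers and are not part of the repository. Only the major version is compared, which is all the >= 8.0.0 rule needs.

```shell
#!/bin/sh
# Hypothetical helper: print the ELK_VERSION value from a .env file
# (defaults to ./.env), using the last assignment if the key repeats.
read_elk_version() {
  grep '^ELK_VERSION=' "${1:-.env}" | tail -n 1 | cut -d= -f2-
}

# Hypothetical helper: succeed if the version is 8.0.0 or newer, the minimum
# this template supports. Only the major component matters for that rule.
elk_version_supported() {
  major=${1%%.*}                 # "8.10.2" -> "8"
  case "$major" in
    ''|*[!0-9]*) return 1 ;;     # reject empty or non-numeric input
  esac
  [ "$major" -ge 8 ]
}
```

For example, `elk_version_supported "$(read_elk_version)" && make build` only proceeds when the configured version is supported. This assumes `make build` is the rebuild target.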

- Configured as a Production Single Node Cluster (with a multi-node cluster option for experimenting).

- Security Enabled By Default.

- Configured to Enable:

  - Logging & Metrics Ingestion

    - Option to collect logs of all Docker Containers running on the host via `make collect-docker-logs`.

  - APM

  - Alerting

  - Machine Learning

  - Anomaly Detection

  - SIEM (Security information and event management).

  - Enabling Trial License

- Use Docker-Compose and `.env` to configure your entire stack parameters.

- Persist Elasticsearch's Keystore and SSL Certificates.

- Self-Monitoring Metrics Enabled.

- Prometheus Exporters for Stack Metrics.

- Embedded Container Healthchecks for Stack Images.

Comparing Elastdocker and the popular deviantony/docker-elk


One of the most popular ELK on Docker repositories is the awesome deviantony/docker-elk.

Elastdocker differs from deviantony/docker-elk in the following points.

- Security enabled by default using Basic license, not Trial.

- Persisting data by default in a volume.

- Run in Production Mode (by enabling SSL on the Transport Layer and adding initial master node settings).

- Persisting the generated Keystore, and creating an extendable script that makes it easier to recreate it every time the container is created.

- Parameterize credentials in `.env` instead of hardcoding `elastic:changeme` in every component config.

- Parameterize all other config, like Heap Size.

- Add recommended environment configurations, such as Ulimits and disabling Swap, to the docker-compose file.

- Make it ready to be extended into a multi-node cluster.

- Configure Self-Monitoring and the Filebeat agent that ships ELK logs to ELK itself (as a step toward shipping them to a monitoring cluster in the future).

- Configured Prometheus Exporters.

- A Makefile that simplifies everything into a few simple commands.

- Docker 20.05 or higher

- Docker-Compose 1.29 or higher

- 4GB RAM (for Windows and macOS, make sure Docker's VM has more than 4GB of memory).
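The 4GB requirement applies to the memory Docker can actually use. On Windows and macOS, that is the VM's memory rather than the host's. The sketch below checks it. It assumes `docker info` exposes the `{{.MemTotal}}` template field, reported in bytes. The helper names are hypothetical.

```shell
#!/bin/sh
# 4GB minimum from the requirements list, expressed in bytes.
MIN_DOCKER_MEM_BYTES=$((4 * 1024 * 1024 * 1024))

# Hypothetical helper: succeed if the given byte count meets the minimum.
docker_mem_ok() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # not a number
  esac
  [ "$1" -ge "$MIN_DOCKER_MEM_BYTES" ]
}

# Hypothetical helper: ask Docker how much memory it sees and check it.
check_docker_mem() {
  docker_mem_ok "$(docker info --format '{{.MemTotal}}')"
}
```

A VM configured with exactly 4GB usually reports slightly less than that, so `check_docker_mem` may fail at the boundary. This matches the advice to give Docker's VM more than 4GB.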

- Clone the Repository

  `git clone https://github.com/sherifabdlnaby/elastdocker.git`

- Initialize Elasticsearch Keystore and TLS Self-Signed Certificates

  `$ make setup`

  For Linux docker hosts only: by default, virtual memory is not enough, so run the following command as root: `sysctl -w vm.max_map_count=262144`

- Start Elastic Stack

  `$ make elk` or `$ docker-compose up -d` or `$ docker compose up -d`

- Visit Kibana at https://localhost:5601 or `https://<your_public_ip>:5601`

  Default Username: `elastic`, Password: `changeme`

  - Notice that Kibana is configured to use HTTPS, so you'll need to write `https://` before `localhost:5601` in the browser.

  - Modify the `.env` file for your needs, most importantly `ELASTIC_PASSWORD`, which sets your superuser `elastic`'s password, and `ELASTICSEARCH_HEAP` & `LOGSTASH_HEAP` for the Elasticsearch & Logstash Heap Size.
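The `vm.max_map_count` step can be checked before starting the stack. The sketch below uses hypothetical helper names. It reads the current value from `/proc/sys/vm/max_map_count` (Linux only) and compares it with the 262144 used in the `sysctl` command.

```shell
#!/bin/sh
# Minimum vm.max_map_count value recommended in the setup steps.
REQUIRED_MAX_MAP_COUNT=262144

# Hypothetical helper: succeed if the given value meets the minimum.
max_map_count_ok() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # not a number
  esac
  [ "$1" -ge "$REQUIRED_MAX_MAP_COUNT" ]
}

# Hypothetical helper (Linux hosts only): report the current value and print
# the fix if it is too low. Reading /proc does not require root.
check_max_map_count() {
  current=$(cat /proc/sys/vm/max_map_count 2>/dev/null) || {
    echo "cannot read /proc/sys/vm/max_map_count (not a Linux host?)" >&2
    return 1
  }
  if max_map_count_ok "$current"; then
    echo "vm.max_map_count=$current (ok)"
  else
    echo "vm.max_map_count=$current is too low; as root run: sysctl -w vm.max_map_count=$REQUIRED_MAX_MAP_COUNT" >&2
    return 1
  fi
}
```

`sysctl -w` only changes the running kernel. To keep the value across reboots, the usual approach is to add `vm.max_map_count=262144` to `/etc/sysctl.conf` or to a file under `/etc/sysctl.d/`.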

Whatever your host (e.g. AWS EC2, Azure, DigitalOcean, or an on-premise server), once you expose it to the network, the ELK components will be accessible on their respective ports. Since the enabled TLS uses a self-signed certificate, it is recommended to SSL-terminate public traffic using your own signed certificates.

🏃🏻♂️ To start ingesting logs, you can start by running

`make collect-docker-logs`, which will collect your host's container logs.


Additional commands: `$ make monitoring`, `$ make collect-docker-logs`, `$ make all`, `$ make nodes`, `$ make build`, `$ make down`, `$ make prune`.

- Some configuration is parameterized in the `.env` file:

  - `ELASTIC_PASSWORD`, user `elastic`'s password (default: `changeme`, please change it).

  - `ELK_VERSION`, the Elastic Stack version (default: `8.10.2`).

  - `ELASTICSEARCH_HEAP`, how much memory Elasticsearch allocates (default: 1GB, good for development only).

  - `LOGSTASH_HEAP`, how much memory Logstash allocates.

  - Other configurations, such as cluster name, node name, etc.

- Elasticsearch Configuration in `elasticsearch.yml` at `./elasticsearch/config`.

- Logstash Configuration in `logstash.yml` at `./logstash/config/logstash.yml`.

- Logstash Pipeline in `main.conf` at `./logstash/pipeline/main.conf`.

- Kibana Configuration in `kibana.yml` at `./kibana/config`.
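`ELASTIC_PASSWORD` defaults to `changeme`, so a quick pre-flight check can catch a forgotten edit before `make setup`. The sketch below assumes `.env` uses plain `KEY=value` lines without quotes or `export`. `env_value` and `password_is_default` are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helper: print the value assigned to key $1 in file $2
# (defaults to ./.env), using the last assignment if the key repeats.
env_value() {
  grep "^$1=" "${2:-.env}" | tail -n 1 | cut -d= -f2-
}

# Hypothetical helper: succeed if ELASTIC_PASSWORD in the given .env file
# (defaults to ./.env) is still the shipped default.
password_is_default() {
  [ "$(env_value ELASTIC_PASSWORD "$1")" = "changeme" ]
}
```

Example usage: `if password_is_default; then echo "Change ELASTIC_PASSWORD in .env, then run make setup" >&2; fi`.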

You can extend the Keystore generation script by adding keys to the `./setup/keystore.sh` script (e.g. to add S3 Snapshot Repository Credentials).

To regenerate the Keystore:

`make keystore`
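The contents of `./setup/keystore.sh` are not reproduced here, so the following only sketches what an extra key might look like. It assumes the script runs where the `elasticsearch-keystore` CLI is available. The `bin/elasticsearch-keystore` path and the `AWS_ACCESS_KEY_ID` / `AWS_SECRET_ACCESS_KEY` variables are assumptions. The sketch uses the CLI's `add --stdin` mode so secrets are not passed as command-line arguments. `s3.client.default.access_key` and `s3.client.default.secret_key` are the secure settings the S3 snapshot repository reads for its `default` client.

```shell
#!/bin/sh
# Keystore CLI location (assumption: run from the Elasticsearch home directory).
KEYSTORE_BIN=${KEYSTORE_BIN:-bin/elasticsearch-keystore}

# Hypothetical helper: add one secure setting ($1) with value ($2), piping the
# value through stdin so it does not show up in the process list.
add_keystore_secret() {
  printf '%s' "$2" | "$KEYSTORE_BIN" add --stdin "$1"
}

# Example lines for S3 snapshot repository credentials; left commented out so
# that loading this sketch has no side effects.
# add_keystore_secret s3.client.default.access_key "$AWS_ACCESS_KEY_ID"
# add_keystore_secret s3.client.default.secret_key "$AWS_SECRET_ACCESS_KEY"
```

After editing the script, run `make keystore` again so the new keys end up in the generated keystore.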

- ⚠️ The Elasticsearch HTTP layer uses SSL, which means you need to configure your Elasticsearch clients with the CA in `secrets/certs/ca/ca.crt`, or configure them to ignore SSL certificate verification (e.g. `--insecure` in `curl`).

- Adding Two Extra Nodes to the cluster will make the cluster depend on them, and it won't start without them again.

- The Makefile is a wrapper around Docker-Compose commands; use `make help` to list every command.

- Elasticsearch will save its data to a volume named `elasticsearch-data`.

- The Elasticsearch Keystore (which contains passwords and credentials) and SSL Certificates are generated in the `./secrets` directory by the setup command.

- Make sure to run `make setup` if you changed `ELASTIC_PASSWORD`, and restart the stack afterwards.

- For Linux users, it's recommended to set the following configuration (run as `root`): `sysctl -w vm.max_map_count=262144`. By default, Virtual Memory is not enough.
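Instead of using `--insecure`, a client can verify the self-signed certificate against the generated CA. The `curl` sketch below makes several assumptions. It runs from the repository root. Elasticsearch is published on `https://localhost:9200`, which is Elasticsearch's default HTTP port. `ELASTIC_PASSWORD` is exported in the shell. `es_get` is a hypothetical helper name.

```shell
#!/bin/sh
# Assumed endpoint and CA path; override via environment variables if needed.
ES_URL=${ES_URL:-https://localhost:9200}
CA_CERT=${CA_CERT:-secrets/certs/ca/ca.crt}

# Hypothetical helper: GET an Elasticsearch API path as the elastic user,
# verifying the server certificate against the stack's CA.
es_get() {
  curl --silent --show-error --cacert "$CA_CERT" \
    -u "elastic:${ELASTIC_PASSWORD}" "${ES_URL}$1"
}
```

For example, `es_get '/_cluster/health?pretty'` queries the cluster health API. If `curl` rejects the hostname, the certificate may not list the name you are connecting with.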

After completing the setup step, you will notice a container named `apm-server`, which gives you deeper visibility into your applications and can help you identify and resolve the root causes of issues with correlated traces, logs, and metrics.

In order to authenticate with Elastic APM, you will need the following:

- The value of `ELASTIC_APM_SECRET_TOKEN` defined in the `.env` file, as the secret token is enabled by default.

- The ability to reach port `8200`.

- The Elastic APM client installed in your application, e.g. for Node.js-based applications you need to install `elastic-apm-node`.

- Import the package in your application and call the start function. For a Node.js-based application, you can do the following:

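```javascript
const apm = require('elastic-apm-node').start({
  serviceName: 'foobar',
  secretToken: process.env.ELASTIC_APM_SECRET_TOKEN,
  // https is enabled by default as per elastdocker configuration
  serverUrl: 'https://localhost:8200',
  // The certificate is self-signed, so the agent must trust the stack's CA.
  // One option is serverCaCertFile pointing at secrets/certs/ca/ca.crt
  // (adjust the path to wherever your app can read it).
  // serverCaCertFile: '/path/to/elastdocker/secrets/certs/ca/ca.crt',
})
```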

Make sure that the agent is started before you require any other modules in your Node.js application (i.e. before `express`, `http`, etc.), as mentioned in Elastic APM Agent - NodeJS initialization.
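Before wiring up an agent, the token and port requirements can be checked from the shell. This sketch makes several assumptions. APM Server is published on `https://localhost:8200`, as in the agent example. The command runs from the repository root, so `secrets/certs/ca/ca.crt` resolves. `ELASTIC_APM_SECRET_TOKEN` is exported. The token is sent as an `Authorization: Bearer` header, which is how APM Server accepts secret tokens. `apm_check` is a hypothetical name.

```shell
#!/bin/sh
# Assumed endpoint and CA path; override via environment variables if needed.
APM_URL=${APM_URL:-https://localhost:8200}
CA_CERT=${CA_CERT:-secrets/certs/ca/ca.crt}

# Hypothetical helper: query APM Server's root endpoint with the secret token.
# A JSON response with server information typically means TLS and auth work.
apm_check() {
  curl --silent --show-error --cacert "$CA_CERT" \
    -H "Authorization: Bearer ${ELASTIC_APM_SECRET_TOKEN}" "${APM_URL}/"
}
```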

For more details or other languages you can check the following:

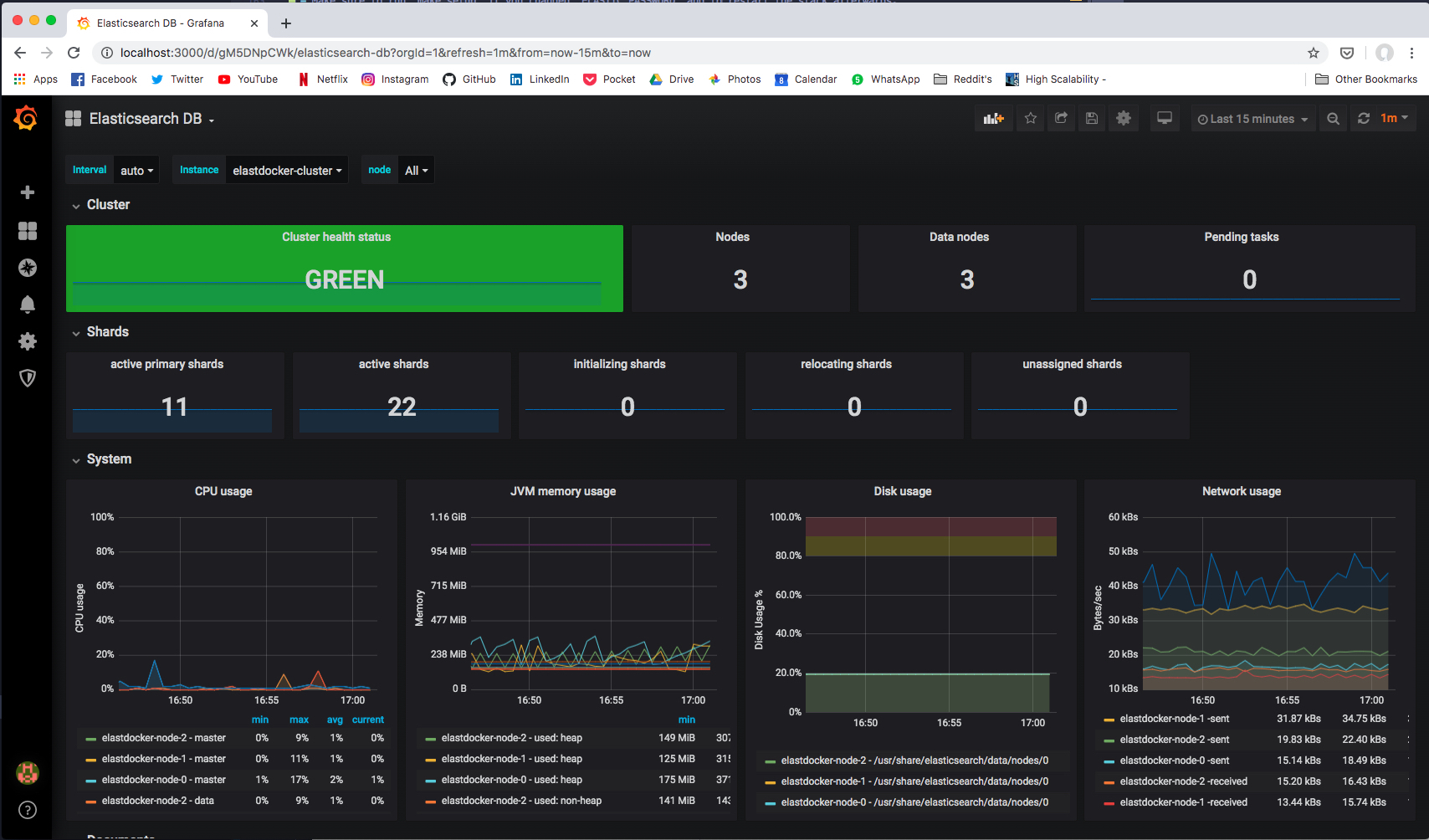

Head to the Stack Monitoring tab in Kibana to see cluster metrics for all stack components.

In Production, cluster metrics should be shipped to another dedicated monitoring cluster.

If you started the Prometheus Exporters using the `make monitoring` command, they will expose metrics at the following ports:

| Prometheus Exporter | Port | Recommended Grafana Dashboard |
|---|---|---|
| `elasticsearch-exporter` | `9114` | Elasticsearch by Kristian Jensen |
| `logstash-exporter` | `9304` | logstash-monitoring by dpavlos |
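To confirm the exporters are up before adding them to Prometheus, you can fetch their metrics directly. This sketch assumes the ports from the table are published on `localhost` over plain HTTP. It also assumes the exporters serve the conventional Prometheus `/metrics` path. `exporter_metrics` is a hypothetical helper.

```shell
#!/bin/sh
# Ports from the table: elasticsearch-exporter and logstash-exporter.
ELASTICSEARCH_EXPORTER_PORT=9114
LOGSTASH_EXPORTER_PORT=9304

# Hypothetical helper: fetch the Prometheus text exposition from an exporter
# port on this host (plain HTTP and the /metrics path are assumptions).
exporter_metrics() {
  curl --silent --show-error "http://localhost:$1/metrics"
}
```

For example, `exporter_metrics "$ELASTICSEARCH_EXPORTER_PORT" | head` prints the first few metric lines. The same host and port pairs are what a Prometheus scrape job would target.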

MIT License Copyright (c) 2022 Sherif Abdel-Naby

PRs are open and welcome.