Home

- Introduction

- Installation of the HHsuite and its databases

-

Brief tutorial to HHsuite tools

- Overview of programs

- Searching databases of HMMs using HHsearch and HHblits

- Generating a multiple sequence alignment using HHblits

- Example: Comparative protein structure modeling using HHblits and MODELLER

- Visually checking an MSA for corrupted regions

- Building customized databases

- Example: Building a database from the PDB

- Modifying or extending existing databases

-

Frequently asked questions

- How do I report a bug?

- What are HMM-HMM comparisons and why are they so powerful?

- When can the HH-suite be useful for me?

- What does homology mean and why is it important?

- How can I verify if a database match is homologous?

- What does the maximum accuracy alignment algorithm do?

- How is the MSA diversity Neff calculated?

- Do HHsearch and HHblits work fine with multi-domain sequences?

- How do I best build HMMs for integral membrane proteins?

- How do I reconcile overlapping and conflicting domain predictions?

- How can I build a phylogenetic tree for HMMs?

- Can I weight some residues more than others during the HMM-HMM alignment?

- Don’t I need to calibrate my query or database HMM?

- Should I use the -global option to build MSAs if I am interested in global alignments to these database HMMs?

- How many iterations of hhblits should I use to search my customized genome database?

- How can I retrieve database A3M or HHM files from my HH-suite database?

- How can I build my own UniProt database for HHblits?

- Will you offer an HHsuite database that includes environmental sequences?

- How do I read the sequence/HMM headers in the pdb70 databases?

- Why do I get different results when reversing the roles of query and template?

- Why do I sometimes get the same database hit twice with different probabilities?

- Why do I get different results using HHblits and HHsearch when I search with the same query through the same database?

- Why do I get different results when I perform 2 and 1+1 iterations with HHblits?

- Why do I get a segmentation fault when calling hhblits on our new machine?

- I obtain different results for the same query sequence on the web server and on my local version using HHblits and HHsearch.

- I find an alignment of my query and a template with the exact same sequence, but they are aligned incorrectly.

- HHsearch/HHblits output: hit list and pairwise alignments

- File formats

-

Summary of command-line parameters

- hhblits – HMM-HMM-based lightning-fast iterative sequence search

- hhsearch – search a database of HMMs with a query MSA or HMM

- hhfilter – filter an MSA

- hhmake – build an HMM from an input alignment

- hhalign – align a query MSA/HMM to a template MSA/HMM

- reformat.pl – reformat one or many alignments

- addss.pl – add predicted secondary structure to an MSA or HMM

- hhmakemodel.pl and hhmakemodel.py – generate MSAs or coarse 3D models from HHsearch results file

- hhsuitedb.py – Build an HHsuite database

- splitfasta.pl – Split multi-sequence FASTA file into single-sequence files

- renumberpdb.pl – Renumber indices in PDB file to match input sequence indices

- cif2fasta.py – Create a fasta file from cif files

- pdbfilter.py – Filter sequences from the PDB (requires MMseqs2)

- License

- Contributors and Contact

- Acknowledgments

The HH-suite is an open-source software package for sensitive protein sequence searching based on the pairwise alignment of hidden Markov models (HMMs). It contains HHsearch and HHblits among other programs and utilities. HHsearch takes as input a multiple sequence alignment (MSA) or profile HMM and searches a database of HMMs (e.g. PDB, Pfam, or InterPro) for homologous proteins. HHsearch is often used for protein structure prediction to detect homologous templates and to build highly accurate query-template pairwise alignments for homology modeling. In the CASP9 competition (2010), a fully automated version of HHpred based on HHsearch and HHblits was ranked best out of 81 servers in template-based structure prediction. HHblits can build high-quality MSAs starting from single sequences or from MSAs. It transforms these into a query HMM and iteratively searches through the Uniclust30 database, adding significantly similar sequences from the previous search to the updated query HMM for the next search iteration. Compared to PSI-BLAST, HHblits is faster, up to twice as sensitive, and produces more accurate alignments. HHblits uses the same HMM-HMM alignment algorithms as HHsearch, but it employs a fast prefilter that reduces the number of database HMMs for which to perform the slow HMM-HMM comparison from tens of millions to a few thousand.

References:

-

Steinegger M, Meier M, Mirdita M, Vöhringer H, Haunsberger S J, and Söding J (2019) HH-suite3 for fast remote homology detection and deep protein annotation, BMC Bioinformatics, 473. doi: 10.1186/s12859-019-3019-7

-

Steinegger M, Meier M, Mirdita M, Vöhringer H, Haunsberger S J, and Söding J (2019) HH-suite3 for fast remote homology detection and deep protein annotation, bioRxiv, 560029. doi: 10.1101/560029

-

Mirdita M, von den Driesch L, Galiez C, Martin M J, Söding J, and Steinegger M (2016) Uniclust databases of clustered and deeply annotated protein sequences and alignments, Nucleic Acids Res., 45, D170–D176. doi: 10.1093/nar/gkw1081

-

Remmert M, Biegert A, Hauser A, and Söding J (2011) HHblits: Lightning-fast iterative protein sequence searching by HMM-HMM alignment. Nat. Methods 9, 173-175. doi: 10.1038/nmeth.1818

-

Angermüller C, Biegert A, and Söding J (2012) Discriminative modelling of context-specific amino acid substitution probabilities. Bioinformatics 28 (24), 3240-3247. doi: 10.1093/bioinformatics/bts622

-

Söding J (2005) Protein homology detection by HMM-HMM comparison. Bioinformatics 21, 951-960. doi: 10.1093/bioinformatics/bti125

The HH-suite is an open-source software package for highly sensitive sequence searching and sequence alignment. Its two most important programs are HHsearch and HHblits. Both are based on the pairwise comparison of profile hidden Markov models (HMMs).

Profile HMMs are a concise representation of multiple sequence alignments (MSAs). Like sequence profiles, they contain for each position in the master sequence the probabilities to observe each of the 20 amino acids in homologous proteins. The amino acid distributions for each column are extrapolated from the homologous sequences in the MSA by adding pseudocounts to the amino acid counts observed in the MSA. Unlike sequence profiles, profile HMMs also contain position-specific gap penalties. More precisely, they contain for each position in the master sequence the probability to observe an insertion or a deletion after that position (the log of which corresponds to gap-open penalties) and the probabilities to extend the insertion or deletion (the log of which corresponds to gap-extend penalties). A profile HMM is thus much better suited than a single sequence to find homologous sequences and calculate accurate alignments. By representing both the query sequence and the database sequences by profile HMMs, HHsearch and HHblits are more sensitive for detecting and aligning remotely homologous proteins than methods based on pairwise sequence comparison or profile-sequence comparison.

HHblits can build high-quality multiple sequence alignments (MSAs) starting from a single sequence or from an MSA. Compared to PSI-BLAST, HHblits is faster, finds up to twice as many homologous proteins, and produces more accurate alignments. It uses an iterative search strategy, adding sequences from significantly similar database HMMs from a previous search iteration to the query HMM for the next search. Because HHblits is based on the pairwise alignment of profile HMMs, it needs its own type of database that contains multiple sequence alignments and the corresponding profile HMMs instead of single sequences. The HHsuite database Uniclust30 is generated regularly by clustering the UniProt KB into groups of similar sequences alignable over at least 80% of their length and down to ∼30% pairwise sequence identity. This database can be downloaded together with HHblits. HHblits uses the HMM-HMM alignment algorithms in HHsearch, but it employs a fast prefilter that reduces the number of database HMMs for which to perform the slow HMM-HMM comparison from tens of millions to a few thousand. At the same time, the prefilter is sensitive enough to reduce the sensitivity of HHblits only marginally in comparison to HHsearch.

By generating highly accurate and diverse MSAs, HHblits can improve almost all downstream sequence analysis methods, such as the prediction of secondary and tertiary structure, of membrane helices, functionally conserved residues, binding pockets, protein interaction interfaces, or short linear motifs. The accuracy of all these methods depends critically on the accuracy and the diversity of the underlying MSAs, as too few or too similar sequences do not add significant information for the predictions. As an example, running the popular PSIPRED secondary structure prediction program on MSAs generated by HHblits instead of PSI-BLAST improved the accuracy of PSIPRED significantly even without retraining PSIPRED on the HHblits alignments.

HHsearch takes as input an MSA (e.g. built by HHblits) or a profile HMM and searches a database of HMMs for homologous proteins. The PDB70 database, for instance, consists of profile HMMs for a set of representative sequences from the PDB database; the scop70 database has profile HMMs for representative domain sequences from the SCOP database of structural domains; the Pfam domain database is a large collection of curated MSAs and profile HMMs for conserved, functionally annotated domains. HHsearch is often used to predict the domain architectures and the functions of domains in proteins by finding similarities to domains in the pdb70, Pfam or other databases.

In addition to the command line package described here, the interactive web server runs HHsearch and HHblits. It offers extended functionality, such as Jalview applets for checking query and template alignments, histogram views of alignments, and building 3D models with MODELLER.

In the CASP9 competition (Critical Assessment of Techniques for Protein Structure Prediction) in 2010, a fully automated version of HHpred based on HHsearch and HHblits was ranked best out of the 81 servers in template-based structure prediction, the category most relevant for biological applications, while having an average response time of minutes instead of the days needed by most other servers.

Other popular programs for sensitive, iterative protein sequence searching are PSI-BLAST and HMMER. Since they are based on profile-to-sequence and HMM-to-sequence comparison, respectively, they have the advantage over HHblits and HHsearch of being able to search raw sequence databases.

The HH-suite source code for most Linux distributions and macOS, and its utility scripts written in Perl and Python can be found at:

https://github.com/soedinglab/hh-suite

In addition you have to install the Protein Data Bank parser for Python3 which can be found at:

https://github.com/soedinglab/pdbx

Databases can be downloaded at:

http://wwwuser.gwdg.de/~compbiol/data/hhsuite/databases/hhsuite_dbs/

HH-suite has been extensively tested on 64-bit Linux. We have done limited testing under BSD and macOS. HH-suite typically requires at least 4GB of main memory. Memory requirements can be estimated as follows:

Memory req. = query_length * max_db_seq_length * num_threads * (4 (SSE2) or 8 (AVX2)) byte
            + query_length * max_db_seq_length * num_threads * 8 byte
            + 1 GB

Here, num_threads is the number of threads specified with option -cpu <int>.
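As a rough illustration, the estimate above can be written as a small Python helper. This is only a sketch and not part of HH-suite: the function name `estimate_memory_bytes` is hypothetical, and reading the "1 GB" term as 1024^3 bytes is an assumption.

```python
def estimate_memory_bytes(query_length, max_db_seq_length, num_threads, simd="AVX2"):
    """Rough HH-suite memory estimate following the formula in the text."""
    # Bytes per matrix cell in the first term: 4 with SSE2, 8 with AVX2 (from the text).
    bytes_per_cell = {"SSE2": 4, "AVX2": 8}[simd]
    cells = query_length * max_db_seq_length * num_threads
    one_gb = 1024 ** 3  # assumption: "1 GB" is interpreted as 2^30 bytes
    # First term (SIMD-dependent) + second term (8 bytes per cell) + constant 1 GB.
    return cells * bytes_per_cell + cells * 8 + one_gb


if __name__ == "__main__":
    # Hypothetical numbers: 500-residue query, longest database sequence 20000, -cpu 4.
    total = estimate_memory_bytes(500, 20000, 4, simd="AVX2")
    print(f"estimated memory: {total / 1024 ** 3:.2f} GB")
```

Because the first two terms scale with the product of query length, longest database sequence, and thread count, lowering `-cpu` is the simplest way to reduce the estimate on a memory-constrained machine.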

Due to the high number of random file accesses by HHblits, it is important for good efficiency that the databases used by HHblits are placed on a RAM disk or on a local solid state drive (SSD). See section Running HHblits efficiently on a computer cluster for details.

HHblits requires a CPU supporting at least the SSE2 (Streaming SIMD Extensions 2) instruction set. Every 64-bit x86 CPU supports SSE2. By default, the HH-suite binaries are compiled using the most recent supported instruction set on the computer used for compiling.

However, you may limit the used instruction set to SSE2 with the cmake compile flag -DHAVE_SSE2=1. The precompiled standard binaries are compiled with this flag.

mkdir -p ~/programs/hh-suite && cd ~/programs/hh-suite

git clone https://github.com/soedinglab/hh-suite.git .

mkdir build && cd build

cmake ..

make

Set ${INSTALL_BASE_DIR} to the absolute path of the base directory where you want to install HH-suite. For example, to install into /usr/local/hhsuite:

INSTALL_BASE_DIR=/usr/local/hhsuite

cmake -DCMAKE_INSTALL_PREFIX="$INSTALL_BASE_DIR" ..

make install

The HH-suite binaries will then be placed in /usr/local/hhsuite/bin.

Some old HH-suite Python and Perl scripts will try to read the HHLIB environment variable to locate HH-suite binaries and some required data files.

Set the HHLIB environment variable to ${INSTALL_BASE_DIR}, e.g., for bash use:

export HHLIB="${INSTALL_BASE_DIR}"

Put the location of your HH-suite binaries and scripts into your PATH:

export PATH="$PATH:${INSTALL_BASE_DIR}/bin:${INSTALL_BASE_DIR}/scripts"

To avoid typing these commands every time you open a new shell, you may add the following lines to the .bashrc or equivalent file in your home directory that is executed every time a shell is started:

export HHLIB="/usr/local/hhsuite"

export PATH="$PATH:$HHLIB/bin:$HHLIB/scripts"

Since MPI is usually specific to the local cluster installation, the _mpi suffixed binaries have to be compiled from source. During the cmake invocation the build system will automatically check if MPI development files can be located and if found produce these binaries.

Generally you should refer to your local cluster admin team to find out how to load MPI development files for your local system. If you only want to use the _mpi suffixed binaries on a single node you can also try installing the development files as follows:

On Ubuntu/Debian run:

apt install mpi-default-bin mpi-default-dev

On macOS run:

brew install openmpi

The following HH-suite databases, which can be searched by HHblits and HHsearch, can be downloaded at:

http://wwwuser.gwdg.de/~compbiol/data/hhsuite/databases/hhsuite_dbs

-

Uniclust30: Based on UniProt KB, clustered to 30% seq. identity

- PDB70: Representatives from PDB (70% max. sequence identity), updated weekly

- scop70: Representatives from SCOP (70% max. sequence identity)

-

Pfam A: Protein Family database

Click on the links to go to the GitHub repository that contains the scripts to generate the respective database.

The databases usually consist of the following six files, which all start with the name of the database, followed by different extensions:

<dbname>_cs219.ffdata packed file with column-state sequences for prefiltering

<dbname>_cs219.ffindex index file for packed column-state sequence file

<dbname>_a3m.ffdata packed file with MSAs in A3M format

<dbname>_a3m.ffindex index file for packed A3M file

<dbname>_hhm.ffdata packed file with HHM-formatted HMMs

<dbname>_hhm.ffindex index file for packed HHM file

The packed files <dbname>_cs219.ffdata, <dbname>_hhm.ffdata and <dbname>_a3m.ffdata simply contain the concatenated column-state sequences, HHMs, and A3M MSAs, respectively, with a \0 character at the end of each concatenated file. The packed A3M and HHM files in particular are therefore human-readable and can be searched for specific MSAs or models using tools such as grep or the search functions of text editors (which, however, should be able to ignore the \0 character). The .ffindex files contain indices to provide fast access to these packed files.
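To make the relation between a packed file and its index concrete, here is a minimal Python sketch that pulls a single entry out of a packed file. It assumes that each line of the .ffindex file holds three tab-separated fields (entry name, byte offset into the .ffdata file, and entry length in bytes including the trailing \0). That layout is an assumption of this sketch and should be checked against your own index files; the function names are hypothetical.

```python
def read_ffindex(index_path):
    """Parse an index file into {name: (offset, length)}.

    Assumed line layout: name <TAB> offset <TAB> length.
    """
    entries = {}
    with open(index_path) as handle:
        for line in handle:
            if not line.strip():
                continue
            name, offset, length = line.rstrip("\n").split("\t")
            entries[name] = (int(offset), int(length))
    return entries


def read_entry(data_path, entries, name):
    """Return one packed entry as text, without the terminating \\0 character."""
    offset, length = entries[name]
    with open(data_path, "rb") as handle:
        handle.seek(offset)          # jump directly to the entry
        raw = handle.read(length)    # read only this entry's bytes
    return raw.rstrip(b"\0").decode("ascii", errors="replace")
```

With these helpers, `read_entry("<dbname>_a3m.ffdata", read_ffindex("<dbname>_a3m.ffindex"), name)` would return the A3M text of the entry called `name`, without scanning the whole packed file.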

To get started, download the Uniclust30 database. For example:

cd ~/programs/hh-suite   # change to the HH-suite directory

mkdir databases; cd databases

wget http://wwwuser.gwdg.de/~compbiol/uniclust/2018_08/uniclust30_2018_08_hhsuite.tar.gz

tar xzvf uniclust30_2018_08_hhsuite.tar.gz

Note that, in order to generate multiple sequence alignments (MSAs) by iterative sequence searching using HHblits, you need to search the Uniclust30 database, since this database covers essentially the largest part of the known sequence universe. The PDB70, Pfam A, and scop70 databases are not appropriate for building MSAs by iterative searches.

Uniclust30 is obtained by clustering the UniProt Protein database. Clusters contain sequences that need to be almost full-length (80%) alignable and typically have pairwise sequence identities down to 30%. The clustering is done by MMseqs2, a very fast algorithm for all-against-all sequence comparison and clustering developed in our group. Sequences in each cluster are globally aligned into an MSA (using ClustalO). The clusters in Uniclust30 thus treat all member sequences equally. You need this type of database to build MSAs using iterative HHblits searches.

In the PDB70 and scop70 databases, each master sequence from the original sequence database is represented by an MSA. The MSAs are built by HHblits searches starting with the master sequence as a query. The MSAs and HMMs typically carry the name and annotation of the master sequence. In contrast to the clusters in the Uniclust30 database, sequences can in principle occur in several MSAs. These homologous sequences merely serve to contribute evolutionary information to the master sequence. As the sequences in this type of database do not cover the entire sequence space, they are not suited for iterative searches. See Building customized databases for how to build your own databases.

Note on efficiency: Always try to keep the HHsuite databases on a local SSD of the computer running HHblits/HHsearch, if possible. Otherwise, file access times on your (shared) remote file server or local hard disk drive might limit the performance of HHblits/HHsearch.

When HHblits runs on many cores of a compute cluster and accesses the HHsuite database(s) via a central file system, hard disk random access times can quickly become limiting instead of the available compute power. To understand why, let us estimate the random file access bottleneck with an example. Suppose you want to search the Uniclust30 database with HHblits on four compute nodes with 32 CPU cores each. You let each job run on a single core, which is the most efficient way when processing many jobs. Each HHblits iteration through Uniclust30 on a modern 3GHz Intel/AMD core takes around, say, 60s on average. After each search iteration, HHblits needs to read around 3000 a3m or hhm files on disk that passed the prefilter. The total number of expected file accesses per second is therefore:

number of file accesses = 4 nodes * 32 CPU cores * 3000 files / 60s = 6400/s

A typical server hard disk has a latency of around 5ms and can therefore randomly access only 200 files per second. Hence, if all cores need to access the files from the same RAID disk array, 4 CPU cores would already saturate the RAID’s disk drives! (You can slightly decrease latency by a factor of two by using RAID configurations with striping, but this won’t help much.)

To efficiently search millions of sequences with HHblits in parallel on a compute cluster, we therefore recommend one of the first two of the following options.

-

The ideal solution is to automatically load the needed HHsuite databases from the file system into the virtual RAM drive of each compute node upon boot-up. This requires enough memory in your computer. Software for RAM drives exists on all major operating systems. On Linux from kernel 2.4 upwards, a RAM drive is installed by default at /dev/shm. When memory is low, this software can intelligently swap rarely used files to a hard drive.

-

Another good option is to hold the HHsuite databases on a local solid state drive (SSD) of each of the cluster’s compute nodes that can accept HHsuite jobs. The local SSDs also remove the file access bottleneck, as SSDs have typical random access times below 100µs. Reading 3000 files from an SSD after prefiltering should thus take less than 0.3s.

-

Another good option might be to use a RAM disk (or SSD) on your file server to hold the HHsuite databases. Then file access time is not problematic anymore, but you need to estimate if data throughput might get limiting. The four servers from our previous example would need to read data at a rate:

transfer rate = 4 nodes * 32 CPU cores * 3000 files * 5kB (av. file size) / 60s

= 32MB/s (256Mbit/s)

If you have 16 instead of 4 compute servers or if data transfer from the file server has to be shared with many other compute servers, the network transfer may be limiting the performance.

-

A not so good option is to keep the HHsuite databases on local hard disk drives (HDDs). In this way, you at least distribute the file accesses to as many HDDs as you have compute nodes. But as we have seen, already at around 4 cores per HDD, you will be limited by file access rather than CPU power.

-

You (or your sysadmin) should at least make sure that your compute nodes have sufficient memory reserves and proper cache settings in order to keep the prefiltering database file in cache (size: a few GB). You can check this by running HHblits on a fresh compute node twice and observing that the second run takes much less time to read the prefiltering database file. However, this only solves the problem of having to read the prefiltering file every time hhblits is called. As discussed, the usually more serious bottleneck is reading the a3m and hhm files that passed the prefilter via the file system. These can also be cached, but since only a small subset of all files is read each time, caching is inefficient for these.
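The back-of-the-envelope numbers used in this section can be reproduced with a few lines of Python. This is only a sketch: the function and parameter names are hypothetical, and the default values in the example call are the illustrative figures from the text (4 nodes, 32 cores, 3000 files per iteration, 60s per iteration, 5kB average file size, 5ms HDD latency), not measurements.

```python
def cluster_io_estimate(nodes, cores_per_node, files_per_iteration,
                        seconds_per_iteration, avg_file_kb, hdd_latency_ms):
    """Estimate file-access load when every core runs one HHblits job."""
    cores = nodes * cores_per_node
    # Files each single-core job has to read per second, on average.
    files_per_core_per_s = files_per_iteration / seconds_per_iteration
    accesses_per_s = cores * files_per_core_per_s
    # Random accesses per second that one hard disk can serve.
    hdd_accesses_per_s = 1000.0 / hdd_latency_ms
    return {
        "file_accesses_per_s": accesses_per_s,
        "cores_saturating_one_hdd": hdd_accesses_per_s / files_per_core_per_s,
        "transfer_mb_per_s": accesses_per_s * avg_file_kb / 1000.0,
    }


if __name__ == "__main__":
    estimate = cluster_io_estimate(nodes=4, cores_per_node=32,
                                   files_per_iteration=3000,
                                   seconds_per_iteration=60,
                                   avg_file_kb=5, hdd_latency_ms=5)
    for key, value in estimate.items():
        print(f"{key}: {value:g}")
```

Plugging in your own node count, prefilter pass rate, and storage latency shows quickly whether random access or network throughput is the more likely bottleneck for a given setup.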

- hhblits: (Iteratively) search an HHsuite database with a query sequence or MSA

- hhsearch: Search an HHsuite database with a query MSA or HMM

- hhmake: Build an HMM from an input MSA

- hhfilter: Filter an MSA by max sequence identity, coverage, and other criteria

- hhalign: Calculate pairwise alignments etc. for two HMMs/MSAs

- hhconsensus: Calculate the consensus sequence for an A3M/FASTA input file

- reformat.pl: Reformat one or many MSAs

- addss.pl: Add PSIPRED predicted secondary structure to an MSA or HHM file

- hhmakemodel.pl: Generate MSAs or coarse 3D models from HHsearch or HHblits results

- hhmakemodel.py: Generates coarse 3D models from HHsearch or HHblits results and modifies cif files such that they are compatible with MODELLER

- hhsuitedb.py: Build HHsuite database with prefiltering, packed MSA/HMM, and index files

- splitfasta.pl: Split a multiple-sequence FASTA file into multiple single-sequence files

- renumberpdb.pl: Generate PDB file with indices renumbered to match input sequence indices

- HHPaths.pm: Configuration file with paths to the PDB, BLAST, PSIPRED etc.

- mergeali.pl: Merge MSAs in A3M format according to an MSA of their seed sequences

- pdb2fasta.pl: Generate FASTA sequence file from SEQRES records of globbed pdb files

- cif2fasta.py: Generate a FASTA sequence from the pdbx_seq_one_letter_code entry of the entity_poly of globbed cif files

- pdbfilter.pl: Generate representative set of PDB/SCOP sequences from pdb2fasta.pl output

- pdbfilter.py: Generate representative set of PDB/SCOP sequences from cif2fasta.py output

Call a program without arguments or with -h option to get more detailed explanations.

We will use the MSA query.a3m in the data/ subdirectory of the

HHsuite as an example query. To search for sequences in the

scop70_1.75 database that are homologous to the query sequence or MSA

in query.a3m, type

hhsearch -cpu 4 -i data/query.a3m -d databases/scop70_1.75 -o data/query.hhr

The database scop70_1.75 can be obtained from our download server.

If the input file is an MSA or a single sequence, HHsearch calculates an

HMM from it and then aligns this query HMM to all HMMs in the

scop70_1.75 database using the Viterbi algorithm. After the search,

the most significant HMMs are realigned using the more accurate Maximum

Accuracy (MAC) algorithm. After the realignment

phase, the complete search results consisting of the summary hit list

and the pairwise query-template alignments are written to the output

file, data/query.hhr. The hhr result file format was designed to be

human readable and easily parsable.

The -cpu 4 option tells HHsearch to start four OpenMP threads for searching and realignment. This typically results in almost fourfold faster execution on computers with four or more cores, since the management of the threads costs negligible overhead.

The HHblits tool can be used in much the same way as HHsearch. It takes the same input data and produces a results file in the same format as HHsearch. Most of the HHsearch options also work for HHblits, which has additional options associated with its extended functionality for iterative searches. Due to its fast prefilter, HHblits runs between 30 and 3000 times faster than HHsearch at the cost of only a few percent lower sensitivity.

The same search as above is performed here using HHblits instead of HHsearch:

hhblits -cpu 4 -i data/query.a3m -d databases/scop70_1.75 -o data/query.hhr -n 1

HHblits first scans the column state sequences in scop70_1.75_cs219.ffdata with its fast prefilter. HMMs whose column state sequences pass the prefilter are read from the packed file scop70_1.75_hhm.ffdata (using the index file scop70_1.75_hhm.ffindex) and are aligned to the query HMM generated from query.a3m using the slow Viterbi HMM-HMM alignment algorithm. The search results are written to the output file data/query.hhr given with the -o option. The option -n 1 tells HHblits to perform a single search iteration. (The default is 2 iterations.)

To generate an MSA for a sequence or initial MSA in query.a3m, the

database to be searched should cover the entire sequence space, such as

the Uniclust30. The option -oa3m <msa_file> tells HHblits to

generate an output MSA from the significant hits, -n 1 specifies a

single search iteration.

hhblits -cpu 4 -i data/query.seq -d databases/uniclust30_2018_08/uniclust30_2018_08 -oa3m query.a3m -n 1

At the end of the search, HHblits reads from the packed database file

containing the MSAs the sequences belonging to HMMs with E-value below

the threshold. The E-value threshold for inclusion into the MSA can be

specified using the -e <E-value> option. After the search, query.a3m

will contain the MSA in A3M format.

We could do a second search iteration, starting with the MSA from the

previous search, to add more sequences. Since the MSA generated after

the previous search contains more information than the single sequence

in query.seq, searching with this MSA will probably result in many

more homologous database matches.

hhblits -cpu 4 -i query.a3m -d databases/uniclust30_2018_08/uniclust30_2018_08 -oa3m query.a3m -n 1

Instead, we could directly perform two search iterations starting from

query.seq:

hhblits -cpu 4 -i data/query.seq -d databases/uniclust30_2018_08/uniclust30_2018_08 -oa3m query.a3m -n 2

See Why do I get different results when I perform 2 and 1+1 iterations with HHblits? for an explanation why the results can be slightly different.
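When such searches are scripted, it can help to assemble the command line in one place. The following Python sketch builds the argument list for the hhblits calls shown above; it only uses options that appear in this section (-cpu, -i, -d, -oa3m, -n, -e). The helper name `hhblits_command` is hypothetical, and the sketch merely prints the commands instead of running them.

```python
import shlex


def hhblits_command(query, database, out_a3m, iterations=2, cpus=4, evalue=None):
    """Return the hhblits argument list for building an MSA."""
    cmd = ["hhblits", "-cpu", str(cpus), "-i", query, "-d", database,
           "-oa3m", out_a3m, "-n", str(iterations)]
    if evalue is not None:
        # E-value threshold for inclusion into the output MSA.
        cmd += ["-e", str(evalue)]
    return cmd


if __name__ == "__main__":
    db = "databases/uniclust30_2018_08/uniclust30_2018_08"
    # "1+1" iterations: search with the sequence, then again with the resulting MSA.
    first = hhblits_command("data/query.seq", db, "query.a3m", iterations=1)
    second = hhblits_command("query.a3m", db, "query.a3m", iterations=1)
    # Two iterations in a single call.
    both = hhblits_command("data/query.seq", db, "query.a3m", iterations=2)
    for cmd in (first, second, both):
        print(shlex.join(cmd))
    # To actually run a command, one could pass it to subprocess.run(cmd, check=True).
```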

In practice, it is recommended to use between 1 and 4 iterations for building MSAs, depending on the trade-off between reliability and specificity on one side and sensitivity for remotely homologous sequences on the other side. The more search iterations are done, the higher will be the risk of non-homologous sequences or sequence segments entering the MSA and recruiting more of their kind in subsequent iterations. This is particularly problematic when searching with sequences containing short repeats, regions with amino acid compositional bias and, although less dramatic, with multiple domains. Fortunately, this problem is much less pronounced in hhblits as compared to PSI-BLAST due to hhblits’s lower number of iterations, its more robust Maximum Accuracy alignment algorithm, and the higher precision of its HMM-HMM alignments.

The parameter -mact (maximum accuracy threshold) lets you choose the

trade-off between sensitivity and precision. With a low mact-value

(e.g. -mact 0.01) very sensitive, but not so precise alignments are

generated, whereas a search with a high mact-value (e.g. -mact 0.9)

results in shorter but very precise alignments. The default value of

-mact in HHblits is 0.35.

To avoid unnecessarily large and diverse MSAs, HHblits stops iterating when the diversity of the query MSA, measured as the number of effective sequences (N_eff), grows past a threshold of 10.0. This threshold can be modified with the -neffmax <float> option. See HHsearch/HHblits model format for a description of how the number of effective sequences is calculated in HH-suite.

To keep the final MSA from growing unnecessarily large, the database MSAs that are going to be merged with the query MSA into the result MSA are by default filtered with the active filter options (by default -id 90 and -diff 1000). The -all option turns off the filtering of the result MSA. Use this option if you want to get all sequences in the significantly similar Uniclust30 clusters:

hhblits -cpu 4 -i data/query.seq -d databases/uniclust30_2018_08/uniclust30_2018_08 -oa3m query.a3m -all

The A3M format uses small letters to mark inserts and capital letters to

designate match and delete columns (see Multiple sequence alignment formats),

allowing you to omit gaps aligned to insert columns. The A3M format

therefore uses much less space for large alignments than FASTA but looks

misaligned to the human eye. Use the reformat.pl script to reformat

query.a3m to other formats, e.g. for reformatting the MSA to Clustal

and FASTA format, type:

reformat.pl a3m clu query.a3m query.clu

reformat.pl a3m fas query.a3m query.fas
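To see why an A3M file looks misaligned, the following Python sketch reads A3M text and strips the lowercase insert residues from each sequence. What remains are the match and delete columns, which should have the same length in every sequence of a well-formed A3M file. This is an illustrative sketch with hypothetical function names, not a replacement for reformat.pl; in particular it does not re-insert the gaps needed for a full FASTA alignment.

```python
def read_a3m(text):
    """Parse A3M/FASTA-like text into a list of (name, sequence) tuples."""
    records, name, chunks = [], None, []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and '#' comment lines
        if line.startswith(">"):
            if name is not None:
                records.append((name, "".join(chunks)))
            name, chunks = line[1:], []
        else:
            chunks.append(line)
    if name is not None:
        records.append((name, "".join(chunks)))
    return records


def match_columns_only(a3m_sequence):
    """Drop lowercase insert residues (and '.' insert gaps, if present)."""
    return "".join(c for c in a3m_sequence if not c.islower() and c != ".")


if __name__ == "__main__":
    example = ">query\nACDEF\n>hit1\nAC-EF\n>hit2\nACghDEF\n"
    for name, seq in read_a3m(example):
        print(name, match_columns_only(seq))
```

In the toy input, hit2 carries two inserted residues (gh) that the other sequences do not pad with gaps, which is exactly the space saving of A3M over FASTA.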

Next, to add secondary structure information to the MSA we call the

script addss.pl. For addss.pl to work, you have to make sure that

the paths to BLAST and PSIPRED in the file $HHLIB/scripts/HHPaths.pm

are correctly filled in:

addss.pl query.a3m

When the sequence has a SCOP or PDB identifier as first word in its

name, the script tries to add the DSSP states as well. Open the

query.a3m file and check out the two lines that have been added to the

MSA.

❗️ While secondary structure scoring does improve homology detection a bit, this step is extremely time-consuming and currently hard to set up due to hardcoded old versions of tools. We recommend skipping this step.

Now you can generate a hidden Markov model (HMM) from this MSA:

hhmake -i query.a3m -o query.hhm

The default output file is query.hhm. By default, the option -M first will be used. This means that exactly those columns of the MSAs

which contain a residue in the query sequence will be assigned to Match

/ Delete states, the others will be assigned to Insert states. (The

query sequence is the first sequence not containing secondary structure

information.) Alternatively, you may want to apply the 50%-gap rule by

typing -M 50, which assigns only those columns to Insert states which

contain more than 50% gaps. The -M first option makes sense if your

alignment can best be viewed as a seed sequence plus aligned homologs to

reinforce it with evolutionary information. This is the case in the SCOP

and PDB versions of our HMM databases, since here MSAs are built around

a single seed sequence (the one with known structure). On the contrary,

when your alignment represents an entire family of homologs and no

sequence in particular, it is best to use the 50% gap rule. This is the

case for Pfam or SMART MSAs, for instance. Despite its simplicity, the

50% gap rule has been shown to perform well in practice.
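The two match-state assignment rules described above can be illustrated with a minimal Python sketch. It is not the hhmake implementation: the function name `match_columns` is hypothetical, and gap fractions are counted without sequence weighting, which is an assumption made here for simplicity.

```python
def match_columns(msa, rule="first", gap_percent=50):
    """Return indices of alignment columns assigned to Match/Delete states.

    msa: list of equal-length aligned sequences, '-' marks a gap;
         msa[0] plays the role of the query (seed) sequence.
    rule: "first" mimics -M first, "gap" mimics -M <gap_percent>.
    Assumption: gaps are counted without sequence weighting.
    """
    ncols = len(msa[0])
    if any(len(seq) != ncols for seq in msa):
        raise ValueError("all sequences must have the same aligned length")
    if rule == "first":
        # Columns with a residue in the query become Match/Delete states.
        return [c for c in range(ncols) if msa[0][c] != "-"]
    if rule == "gap":
        keep = []
        for c in range(ncols):
            gaps = sum(1 for seq in msa if seq[c] == "-")
            # Columns with more than gap_percent % gaps become Insert states.
            if 100.0 * gaps / len(msa) <= gap_percent:
                keep.append(c)
        return keep
    raise ValueError("rule must be 'first' or 'gap'")


example_msa = [
    "MKAL-V",  # query / seed sequence
    "MK-LGV",
    "M--LGV",
    "MR-LGI",
]
print(match_columns(example_msa, rule="first"))
print(match_columns(example_msa, rule="gap", gap_percent=50))
```

In this toy alignment the third column is kept by the seed-based rule (the query has a residue there) but dropped by the 50% gap rule (three of four sequences have a gap), while the fifth column behaves the other way round.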

When calling hhmake, you may also apply several filters, such as

maximum pairwise sequence identity (-id <int>), minimum sequence

identity with query sequence (-qid <int>), or minimum coverage with

query (-cov <int>). But beware of reducing the diversity of your MSAs

too much, as this will lower the sensitivity to detect remote homologs.

A three-dimensional (3D) structure greatly facilitates the functional characterization of proteins. However, for many proteins there are no experimental structures available, and thus, comparative modeling to known protein structures may provide useful insights. In this method, a 3D structure of a given protein sequence (target) is predicted based on alignments to one or more proteins of known structures (templates). In the following, we demonstrate how to create alignments for an unresolved protein with HHblits and the PDB70 database. We then convert search results from HHblits to build a comparative model using MODELLER (v9.16).

In 1999, Wu et al. reported the genomic sequence and evolutionary analysis of lactate dehydrogenase genes from Trichomonas vaginalis (TvLDH). Surprisingly, the corresponding protein sequence was most similar to the malate dehydrogenase (TvMDH) of the same organism, implying that TvLDH arose from TvMDH by convergent evolution. The structure of TvLDH has since been resolved. For instructional purposes, however, suppose that no 3D structure is available for the protein.

To get started we obtain the protein sequence of TvLDH from GenBank

(accession number

AF060233.1). We first

copy-paste the protein sequence into a new file named query.seq. The

content of this file should look similar to this:

>TvLDH

MSEAAHVLITGAAGQIGYILSHWIASGELYGDRQVYLHLLDIPPAMNRLTALTMELEDCAFPHLAGFVATTDP

KAAFKDIDCAFLVASMPLKPGQVRADLISSNSVIFKNTGEYLSKWAKPSVKVLVIGNPDNTNCEIAMLHAKNL

KPENFSSLSMLDQNRAYYEVASKLGVDVKDVHDIIVWGNHGESMVADLTQATFTKEGKTQKVVDVLDHDYVFD

TFFKKIGHRAWDILEHRGFTSAASPTKAAIQHMKAWLFGTAPGEVLSMGIPVPEGNPYGIKPGVVFSFPCNVD

KEGKIHVVEGFKVNDWLREKLDFTEKDLFHEKEIALNHLAQ

The search results obtained by querying the TvLDH sequence against the PDB70 will be significantly better if we use an MSA instead of a single sequence. For this reason, we first query the protein sequence of TvLDH against the Uniclust30 database, which covers the whole protein sequence space. By executing

hhblits -i query.seq -d databases/uniclust30_2018_08/uniclust30_2018_08 -oa3m query.a3m -cpu 4 -n 1

we obtain an MSA in a3m format which contains several sequences that are

similar to TvLDH. Now we can use the query.a3m and search the PDB70

database for similar protein structures.

hhblits -i query.a3m -o results.hhr -d databases/pdb70 -cpu 4 -n 1

Note that we now output an hhr file results.hhr instead of an a3m file.

Before we convert the search results to a format that is readable by

MODELLER, let us quickly inspect results.hhr.

Query TvLDH

Match_columns 333

No_of_seqs 2547 out of 8557

Neff 11.6151

Searched_HMMs 1566

Date Tue Aug 16 11:35:02 2016

Command hhblits -i query.a3m -o results.hhr -d pdb70 -cpu 32 -n 2

No Hit Prob E-value P-value Score SS Cols Query HMM Template HMM

1 7MDH_C MALATE DEHYDROGENASE; C 100.0 1.5E-39 1.3E-43 270.1 0.0 326 3-333 31-358 (375)

2 4UUL_A L-LACTATE DEHYDROGENASE 100.0 1.8E-35 1.6E-39 244.5 0.0 332 1-332 1-332 (341)

3 4UUP_A MALATE DEHYDROGENASE (E 100.0 2.2E-35 1.9E-39 243.8 0.0 332 1-332 1-332 (341)

4 4UUM_B L-LACTATE DEHYDROGENASE 100.0 5.7E-35 5.1E-39 241.5 0.0 333 1-333 1-333 (341)

5 1CIV_A NADP-MALATE DEHYDROGENA 100.0 1.6E-34 1.5E-38 241.3 0.0 326 2-332 40-367 (385)

6 1Y7T_A Malate dehydrogenase(E. 100.0 3.4E-34 3.1E-38 235.3 0.0 324 1-331 1-325 (327)

7 4I1I_A Malate dehydrogenase (E 100.0 3.8E-34 3.4E-38 236.4 0.0 319 2-327 22-343 (345)

8 1BMD_A MALATE DEHYDROGENASE (E 99.9 5.9E-34 5.3E-38 233.9 0.0 324 1-331 1-325 (327)

9 4H7P_B Malate dehydrogenase (E 99.9 9.9E-34 8.9E-38 233.9 0.0 319 2-327 22-343 (345)

10 2EWD_B lactate dehydrogenase, 99.9 1.1E-33 9.5E-38 231.1 0.0 306 1-328 1-314 (317)

(...)
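For scripted post-processing, the hit list can be parsed with a short Python sketch such as the one below. It assumes whitespace-separated hit lines exactly as shown in the listing above; the names `HIT_LINE` and `parse_hit_line` are hypothetical and not part of HH-suite, and lines with an empty description are not handled.

```python
import re

# One hit line: No, template id, free-text description, then the numeric
# columns Prob, E-value, P-value, Score, SS, Cols, the query range,
# the template range and the template length in parentheses.
HIT_LINE = re.compile(
    r"^\s*(?P<no>\d+)\s+(?P<id>\S+)\s+(?P<desc>.*?)\s+"
    r"(?P<prob>\d+\.\d+)\s+(?P<evalue>\S+)\s+(?P<pvalue>\S+)\s+"
    r"(?P<score>-?\d+\.\d+)\s+(?P<ss>-?\d+\.\d+)\s+(?P<cols>\d+)\s+"
    r"(?P<q_start>\d+)-(?P<q_end>\d+)\s+"
    r"(?P<t_start>\d+)-(?P<t_end>\d+)\s*\((?P<t_len>\d+)\)\s*$"
)


def parse_hit_line(line):
    """Return a dict for one hit-list line, or None if the line is not a hit."""
    match = HIT_LINE.match(line)
    if match is None:
        return None
    hit = match.groupdict()
    for key in ("no", "cols", "q_start", "q_end", "t_start", "t_end", "t_len"):
        hit[key] = int(hit[key])
    for key in ("prob", "evalue", "pvalue", "score", "ss"):
        hit[key] = float(hit[key])
    return hit


top = parse_hit_line(
    "1 7MDH_C MALATE DEHYDROGENASE; C 100.0 1.5E-39 1.3E-43 "
    "270.1 0.0 326 3-333 31-358 (375)"
)
print(top["id"], top["prob"], top["evalue"])
```

Anchoring the pattern on the numeric columns at the end of the line lets the description contain spaces and punctuation.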

We find that there are several templates that have a high similarity to our query. Interestingly, the hit with the most significant E-value is also a malate dehydrogenase. We will use this structure as a basis for our comparative model. In order to build the model we first have to obtain the template structure(s). We can get 7MDH by typing the following commands:

mkdir templates

cd templates

wget http://files.rcsb.org/download/7MDH.cif

cd ..

To convert our search results results.hhr into an alignment that is

readable by MODELLER we use hhmakemodel.py.

python3 hhmakemodel.py results.hhr templates/ TvLDH.pir ./ -m 1

This script takes four positional arguments: the results file in hhr

format, the path to the folder containing all templates in cif format,

the output pir file, and the folder where the processed cif files should be

written to. The -m flag tells hhmakemodel.py to only include the

first hit in the pir alignment. The pir file together with processed

cifs can be used as an input for MODELLER (please refer to the MODELLER

documentation for further help).

Iterative search methods such as PSI-BLAST and HHblits may generate alignments containing non-homologous sequence stretches or even large fractions of non-homologous sequences. The cause is almost always the overextension of homologous alignments into non-homologous regions, an effect that has been termed homologous overextension. It occurs particularly in multidomain and repeat proteins. A single overextended alignment in the search results leads to more such sequences being included in the profile of the next iteration, usually all homologous to the first problematic sequence but with even longer non-homologous stretches. Thus, after three or more iterations, large sections of the resulting MSA may be non-homologous to the original query sequence. This risk of homologous overextension is greatly reduced in HHblits in comparison to PSI-BLAST, because fewer iterations are usually necessary for HHblits and because HHblits uses the Maximum Accuracy alignment algorithm (see What does the maximum accuracy alignment algorithm do?), which is much less prone to overextend alignments than the Smith-Waterman/Viterbi algorithm used by most other programs including PSI-BLAST. Still, in important cases it is worth visually checking the MSAs of the query and the matched database protein for corrupted regions containing non-homologous sequence stretches.

The recommended procedure to visually check an MSA is the following. We first reduce the MSA to a small set of sequences that could still fit into a single window of an alignment viewer:

hhfilter -i query.a3m -o query.fil.a3m -diff 30

The option -diff causes hhfilter to select a representative set of at

least 30 sequences that best represent the full diversity of the MSA.

Sequences that contain non-homologous stretches are therefore usually

retained, as they tend to be the most dissimilar to the main sequence

cluster.

Next, we remove all inserts (option -r) with respect to the first,

master sequence in the MSA. The resulting MSA is sometimes called a

master-slave alignment:

reformat.pl -r query.fil.a3m query.fil.fas

This alignment is now very easy to inspect for problematic regions in

any viewer that can color the residues according to their

physico-chemical properties. We can recommend alnedit

or jalview, for example:

java -jar alnedit.jar query.fil.fas .
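To make the insert-removal step above more concrete, here is a minimal Python sketch of what it amounts to for an A3M file, in which insert residues are written in lowercase and gaps aligned to inserts as '.'. This is only an illustration of the idea, not a replacement for reformat.pl; the function names are hypothetical.

```python
def read_a3m(text):
    """Parse A3M/FASTA text into a list of (header, sequence) tuples."""
    records, header, chunks = [], None, []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and the optional '#' name line
        if line.startswith(">"):
            if header is not None:
                records.append((header, "".join(chunks)))
            header, chunks = line[1:], []
        else:
            chunks.append(line)
    if header is not None:
        records.append((header, "".join(chunks)))
    return records


def remove_inserts(sequence):
    """Drop insert states: lowercase residues and '.' gap characters."""
    return "".join(ch for ch in sequence if not ch.islower() and ch != ".")


a3m_text = ">query\nMKLV\n>hit1\nMKaLV\n>hit2\nM-LV\n"
for header, seq in read_a3m(a3m_text):
    print(">" + header)
    print(remove_inserts(seq))
```

After removing inserts, every sequence has the same length as the master sequence, which is what makes the alignment easy to inspect column by column.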

It is possible to build custom HH-suite databases using the same tools we use to build the standard HH-suite databases (except Uniclust30). An example application is to search for homologs among all proteins of an organism. To build your own HH-suite database from a set of sequences, you first need to generate an MSA with predicted secondary structure for every sequence in the set.

🗒 The following calls assume you have compiled the HH-suite with MPI support. You can substitute hhblits_mpi with hhblits_omp, ffindex_apply_mpi with ffindex_apply and cstranslate_mpi with cstranslate -f to use the OpenMP (single compute node) parallelization instead.

First of all we convert the input FASTA file to a FFindex database with

ffindex_from_fasta:

ffindex_from_fasta -s <db>_fas.ff{data,index} <db.fas>

Now, to build an MSA with HHblits for each sequence in <db>_fas.ff{data,index}, run

mpirun -np <number_threads> \

hhblits_mpi -i <db>_fas -d <path_to/uniclust30> -oa3m <db>_a3m_wo_ss -n 2 -cpu 1 -v 0

The MSAs are written to the ffindex <db>_a3m_wo_ss.ff{data,index}. To

be sure that everything went smoothly, check that the number of lines in

<db>_a3m_wo_ss.ffindex is the same as the number of lines in <db>_fas.ffindex.

The number of HHblits search iterations and the HMM inclusion E-value

threshold for HHblits can be changed from their default values (2 and

0.001, respectively) using the -n <int> and -e <float> options. A

higher number of iterations such as -n 3 will result in very high

sensitivity to discover remotely homologous relationships, but the

ranking among homologous proteins will often not reflect their degree of

relationship to the query. The reason is that any similarities that are

higher than the similarities among the sequences in the query and

database MSAs cannot be resolved.
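The consistency check between the input and output index files suggested above can be scripted. The following Python sketch assumes the usual FFindex index layout of one entry per line with tab-separated name, offset and length; the function names are hypothetical.

```python
def index_names(lines):
    """Return the entry names from the lines of an .ffindex file."""
    names = []
    for line in lines:
        line = line.rstrip("\n")
        if line:
            names.append(line.split("\t")[0])
    return names


def compare_indexes(input_lines, output_lines):
    """Return (n_input, n_output, names present in input but missing in output)."""
    inputs = index_names(input_lines)
    outputs = index_names(output_lines)
    produced = set(outputs)
    missing = [name for name in inputs if name not in produced]
    return len(inputs), len(outputs), missing


# Toy data standing in for the fasta and a3m index files.
fas_index = ["seq1\t0\t120\n", "seq2\t120\t300\n", "seq3\t420\t45\n"]
a3m_index = ["seq1\t0\t5000\n", "seq3\t5000\t900\n"]
print(compare_indexes(fas_index, a3m_index))
```

In practice the two line lists would come from opening the fasta and a3m index files and iterating over them; any name reported as missing points to a sequence for which no MSA was written.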

If you already have MSAs in aligned FASTA format, place all of them first in a single folder that does not contain any other files to create a single FFindex database:

cd msa/

ffindex_build -s ../<db>_msa.ff{data,index} .

cd ..

Now you can use hhconsensus to add a consensus sequence and do the format conversion to the more space efficient A3M format:

OMP_NUM_THREADS=1 mpirun -np <number_threads> ffindex_apply_mpi <db>_msa.ff{data,index} \

-i <db>_a3m_wo_ss.ffindex -d <db>_a3m_wo_ss.ffdata \

-- hhconsensus -M 50 -maxres 65535 -i stdin -oa3m stdout -v 0

rm <db>_msa.ff{data,index}

Now, add PSIPRED-predicted secondary structure and if possible DSSP secondary structure annotation to all MSAs:

❗️ While secondary structure scoring does improve homology detection a bit, this step is extremely time consuming and currently hard to set up due to hardcoded old versions of tools. We recommend to skip this step. Rename _a3m_wo_ss.ff{data,index} to _a3m.ff{data,index} instead to proceed with the next step.

mpirun -np <number_threads> ffindex_apply_mpi <db>_a3m_wo_ss.ff{data,index} \

-i <db>_a3m.ffindex -d <db>_a3m.ffdata -- addss.pl -v 0 stdin stdout

rm <db>_a3m_wo_ss.ff{data,index}

We also need to generate an HHM model for each MSA:

mpirun -np <number_threads> ffindex_apply_mpi <db>_a3m.ff{data,index} \

-i <db>_hhm.ffindex -d <db>_hhm.ffdata -- hhmake -i stdin -o stdout -v 0

In order to build the FFindex database containing the column state sequences for prefiltering for each a3m we run:

mpirun -np <number_threads> cstranslate_mpi -f -x 0.3 -c 4 -I a3m -i <db>_a3m -o <db>_cs219

❗️ Be careful with the parameters. Leaving anything out might result in a database that looks superficially correct but performs very badly.

❗️ Before version 3.2 you would need to specify the -b parameter to cstranslate to produce a HHblits3 compatible database, without it you would produce a database that could be still post-processed to be readable by HHblits2.x. We have since dropped support for HHblits2.x and the -b parameter.

Next we want to reorder the entries in the hmm and a3m databases. For this purpose we

sort the files in the data files according to the number of columns in

the MSAs (i.e. the sequence length of the consensus sequence). The number of columns can be retrieved from the third column of <db>_cs219.ffindex.

sort -k3 -n -r <db>_cs219.ffindex | cut -f1 > sorting.dat

ffindex_order sorting.dat <db>_hhm.ff{data,index} <db>_hhm_ordered.ff{data,index}

mv <db>_hhm_ordered.ffindex <db>_hhm.ffindex

mv <db>_hhm_ordered.ffdata <db>_hhm.ffdata

ffindex_order sorting.dat <db>_a3m.ff{data,index} <db>_a3m_ordered.ff{data,index}

mv <db>_a3m_ordered.ffindex <db>_a3m.ffindex

mv <db>_a3m_ordered.ffdata <db>_a3m.ffdata
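For readers who want to see what the sort step computes, the following Python sketch derives the same kind of ordering from the lines of the cs219 index, assuming tab-separated name, offset and length fields. The function name is hypothetical, and the order of entries with equal length may differ from what the sort command produces.

```python
def order_by_length(index_lines):
    """Return entry names sorted by the third index column, longest first."""
    entries = []
    for line in index_lines:
        fields = line.rstrip("\n").split("\t")
        if len(fields) < 3:
            continue  # ignore blank or malformed lines
        entries.append((fields[0], int(fields[2])))
    # Stable sort: entries of equal length keep their original relative order.
    entries.sort(key=lambda entry: entry[1], reverse=True)
    return [name for name, _ in entries]


cs219_index = ["a\t0\t120\n", "b\t120\t300\n", "c\t420\t45\n"]
print("\n".join(order_by_length(cs219_index)))
```

The resulting list of names plays the role of sorting.dat in the commands above.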

To make efficient sequence searches in the PDB we provide a precompiled PDB70 database containing PDB sequences clustered at 70% sequence identity. However, to find a larger variety of PDB templates, larger databases with more redundancy might be required. In this tutorial, we outline the steps required to build a custom PDB database. See the PDB70 GitHub repository for the actual scripts used to build this database.

First, download the entire PDB database from

RCSB in

cif file format (this is the successor of the pdb file format) by

executing

rsync --progress -rlpt -v -z --port=33444 rsync.wwpdb.org::ftp/data/structures/divided/mmCIF <cif_dir>

and unzip the files into a single directory <all_cifs>. Then, run

cif2fasta.py by typing

python3 cif2fasta.py -i <all_cifs> -o pdb100.fas -c <num_cores> -p pdb_filter.dat

The script scans the folder <all_cifs> for files with the suffix

*.cif and writes each sequence and its associated chain identifier

annotated in the pdbx_seq_one_letter_code entry of the entity_poly

table to the fasta file pdb100.fas. By specifying the optional -p

flag, cif2fasta.py creates an additional file pdb_filter.dat which

is required by pdbfilter.py in a later step. Note that cif2fasta.py

by default removes sequences which are shorter than 30 residues and/or

comprise only the residue ’X’.

If you wish to exhaustively search the PDB, skip the following steps and

continue with the instructions described in section

Building customized databases. However, to increase the speed of database

searches, e.g. on systems with limited resources, you can reduce the

number of sequences by clustering them with MMseqs2 and selecting

representative sequences with pdbfilter.py. To cluster the sequences

of pdb100.fas at a sequence identity of X and a coverage of Y run

MMseqs2 using these options.

mmseqs createdb pdb100.fas <clu_dir>/pdb100

mmseqs cluster <clu_dir>/pdb100 <clu_dir>/pdbXX_clu /tmp/clustering -c Y --min-seq-id X

mmseqs createtsv <clu_dir>/pdb100 <clu_dir>/pdb100 <clu_dir>/pdbXX_clu <clu_dir>/pdbXX_clu.tsv

MMseqs2 yields a tab separated file pdbXX_clu.tsv which contains cluster

assignments for all sequences. Representative sequences are selected by

pdbfilter.py which chooses up to three sequences for each cluster by

identifying the ones having either the highest resolution

(in Å), the largest R-free value or the largest "completeness".

We compute the completeness of a protein structure by dividing the number of residues that are found in the ATOM section by the total number of residues declared in the pdbx_seq_one_letter_code entry of the entity_poly table.
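As an illustration of the completeness criterion, the Python sketch below groups a two-column MMseqs2 cluster table (representative, member) and picks the most complete member of each cluster. It covers only this one criterion, not resolution or R-free, and it is not the pdbfilter.py implementation; the function names and the `residue_counts` dictionary are hypothetical.

```python
from collections import defaultdict


def completeness(n_atom_residues, n_declared_residues):
    """Fraction of declared residues that are observed in the ATOM section."""
    if n_declared_residues <= 0:
        raise ValueError("the declared sequence must contain residues")
    return n_atom_residues / n_declared_residues


def read_clusters(tsv_lines):
    """Map each cluster representative to the list of its members."""
    clusters = defaultdict(list)
    for line in tsv_lines:
        line = line.strip()
        if not line:
            continue
        representative, member = line.split("\t")[:2]
        clusters[representative].append(member)
    return dict(clusters)


def most_complete(clusters, residue_counts):
    """Pick, per cluster, the member with the highest completeness.

    residue_counts maps a chain id to (observed, declared) residue counts.
    """
    return {
        rep: max(members, key=lambda m: completeness(*residue_counts[m]))
        for rep, members in clusters.items()
    }


tsv = ["1ABC_A\t1ABC_A", "1ABC_A\t2XYZ_B", "3DEF_A\t3DEF_A"]
counts = {"1ABC_A": (90, 100), "2XYZ_B": (99, 100), "3DEF_A": (50, 60)}
print(most_complete(read_clusters(tsv), counts))
```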

python3 pdbfilter.py pdb100.fas pdbXX_clu.tsv pdb_filter.dat pdbXX.fas -i pdb70_to_include.dat -r pdb70_to_remove.dat

pdbfilter.py takes the original fasta file (pdb100.fas) and the

annotation file pdb_filter.dat which both were created by

cif2fasta.py, and the cluster assignments from MMseqs2

(pdbXX_clu.tsv) as input and outputs the final pdbXX.fas. Use this

fasta file to complete the creation of your database (see section

Building customized databases).

Assume you have a list of filenames in files.dat you want to remove

from an HHsuite database.

ffindex_modify -s -u -f files.dat <db>_a3m.ffindex

ffindex_modify -s -u -f files.dat <db>_hhm.ffindex

ffindex_modify -s -u -f files.dat <db>_cs219.ffindex

This deletes the file entries from the ffindex files; however, the files are still present in the ffdata file. HHblits and HHsearch will no longer be able to use them. If you also want to remove them from the ffdata file, you can rebuild the databases:

ffindex_build -as <db>_cs219_ordered.ff{data,index} -i <db>_cs219.ffindex -d <db>_cs219.ffdata

mv <db>_cs219_ordered.ffindex <db>_cs219.ffindex

mv <db>_cs219_ordered.ffdata <db>_cs219.ffdata

sort -k3 -n <db>_cs219.ffindex | cut -f1 > sorting.dat

ffindex_order sorting.dat <db>_hhm.ff{data,index} <db>_hhm_ordered.ff{data,index}

mv <db>_hhm_ordered.ffindex <db>_hhm.ffindex

mv <db>_hhm_ordered.ffdata <db>_hhm.ffdata

ffindex_order sorting.dat <db>_a3m.ff{data,index} <db>_a3m_ordered.ff{data,index}

mv <db>_a3m_ordered.ffindex <db>_a3m.ffindex

mv <db>_a3m_ordered.ffdata <db>_a3m.ffdata

If you want to check your HHsuite database you may run:

hhsuitedb.py -o <db> --cpu 1

hhsuitedb.py supports the flag --force that tries to fix the

database. This may lead to the deletion of MSAs from the database.

Additionally, you may add a3m, hhm and cs219 files to an existing database using glob expressions. Please keep in mind that related a3m, hhm and cs219 files should have the same name. To make it easier, you may add just the a3m files, and the corresponding hhm and cs219 files will be calculated by the script.

hhsuitedb.py -o <db> --cpu 1 --ia3m=<a3m_glob> --ics219=<cs219_glob> --ihhm=<hhm_glob>

If you found a bug, please report it to us using GitHub issues.

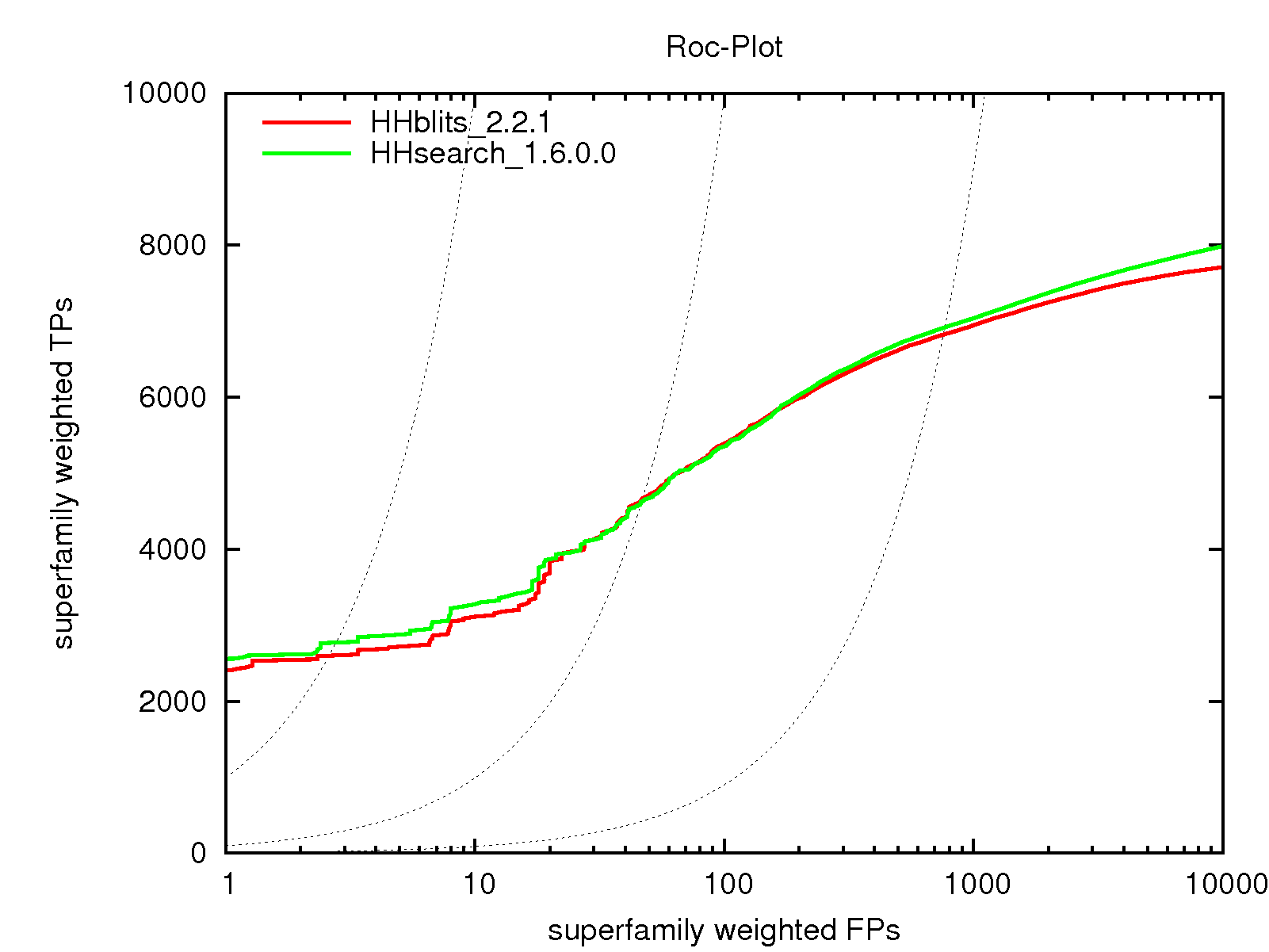

When searching for remote homologs, it is wise to make use of as much information about the query and database proteins as possible in order to better distinguish true from false positives and to produce optimal alignments. This is the reason why sequence-sequence comparison is inferior to profile-sequence comparison. Sequence profiles contain for each column of a multiple alignment the frequencies of the 20 amino acids. They therefore contain detailed information about the conservation of each residue position, i.e., how important each position is for defining other members of the protein family, and about the preferred amino acids. Profile Hidden Markov Models (HMMs) are similar to simple sequence profiles, but in addition to the amino acid frequencies in the columns of a multiple sequence alignment they contain information about the frequency of inserts and deletions at each column. Using profile HMMs in place of simple sequence profiles should therefore further improve sensitivity. Using HMMs both on the query and the database side greatly enhances the sensitivity/selectivity and alignment quality over sequence-profile based methods such as PSI-BLAST. HHsearch is the first software to employ HMM-HMM comparison and HHblits is the first profile-profile comparison method that is fast enough to do iterative searches to build MSAs.

Sequence search methods such as BLAST, FASTA, or PSI-BLAST are of prime importance for biological research because functional information of a protein or gene can be inferred from homologous proteins or genes identified in a sequence search. But quite often no significant relationship to a protein of known function can be established. This is certainly the case for the most interesting group of proteins, those for which no ortholog has yet been studied. In cases where conventional sequence search methods fail, HHblits and HHsearch quite often allow inferences to be made from more remotely homologous relationships. HHblits builds better MSAs, with which more remote homologs can then be found using HHsearch or HHblits, e.g. by searching the PDB or domain databases such as Pfam. If the relationship is so remote that no common function can be assumed, one can often still derive hypotheses about possible mechanisms, active site positions and residues, or the class of substrate bound. When a homologous protein with known structure can be identified, its structure can be used as a template to model the 3D structure of the protein of interest, since even protein domains that shared a common ancestor some 3 billion years ago mostly have similar 3D structures. The 3D model may then help to generate hypotheses to guide experiments.

Two protein sequences are homologous to each other if they descended from a common ancestor sequence. Generally, homologous proteins (or protein fragments) have similar structure because structures diverge much more slowly than their sequences. Depending on the degree of divergence between the sequences, the proteins may also have similar cellular functions, ligands, protein interaction partners, or enzymatic mechanisms. In contrast, proteins that have a similar structure by convergence (i.e., by chance) are said to be analogous. They don’t generally share similar functions or biochemical mechanisms and are therefore much less helpful for making inferences. HHsearch and HHblits are tools for homology detection and as such do not normally detect analogous relationships.

Here is a list of things to check if a database match really is at least locally homologous.

Check probability and E-value: HHsearch and HHblits can detect homologous relationships far beyond the twilight zone, i.e., below 20% sequence identity. Sequence identity is therefore no longer an appropriate measure of relatedness. The estimated probability of the template to be (at least partly) homologous to your query sequence is the most important criterion to decide whether a template HMM is actually homologous or just a high-scoring chance hit. When it is larger than 95%, say, the homology is nearly certain. Roughly speaking, one should give a hit serious consideration (i.e., check the other points in this list) whenever (1) the hit has >50% probability, or (2) it has >30% probability and is among the top three hits. The E-value is an alternative measure of statistical significance. It tells you how many chance hits with a score better than this would be expected if the database contained only hits unrelated to the query. At E-values below one, matches start to get marginally significant. Contrary to the probability, when calculating the E-value HHsearch and HHblits do not take into account the secondary structure similarity. Therefore, the probability is a more sensitive measure than the E-value.

Check if homology is biologically suggestive or at least reasonable: Does the database hit have a function you would expect also for your query? Does it come from an organism that is likely to contain a homolog of your query protein?

Check secondary structure similarity: If the secondary structure of query and template is very different or you can’t see how they could fit together in 3D, then this is a reason to distrust the hit. Note however that if the query alignment contains only a single sequence, the secondary structure prediction is quite unreliable and confidence values are overestimated.

Check relationship among top hits: If several of the top hits are homologous to each other (e.g. when they are members of the same SCOP superfamily), then this will considerably reduce the chances of all of them being chance hits, especially if these related hits are themselves not very similar to each other. Searching the SCOP database is very useful precisely for this reason, since the SCOP family identifier (e.g. a.118.8.2) allows you to tell immediately if two templates are likely homologs.

Check for possible conserved motifs: Most homologous

pairs of alignments will have at least one (semi-)conserved motif in

common. You can identify such putative (semi-)conserved motifs by the

agglomeration of three or more well-matching columns (marked with a

| sign between the aligned HMMs) occurring within a few

residues, as well as by matching consensus sequences. Some false

positive hits have decent scores due to a similar amino acid composition

of the template. In these cases, the alignments tend to be long and to

lack conserved motifs.

Check residues and role of conserved motifs: If you can identify possible conserved motifs, are the corresponding conserved template residues involved in binding or enzymatic function?

Check query and template alignments: A corrupted query or template alignment is the main source of high-scoring false positives. The two most common sources of corruption in an alignment are (1) non-homologous sequences, especially repetitive or low-complexity sequences in the alignment, and (2) non-homologous fragments at the ends of the aligned database sequences. Check the query and template MSAs in an alignment viewer such as Jalview.

Realign with other parameters: change the alignment parameters. Choose global instead of local mode, for instance, if you expect your query to be globally homologous to the putative homolog. Try to improve the probability by changing the values for minimum coverage or minimum sequence identity. You can also run the query HMM against other databases.

Build the query and/or database MSAs more aggressively: If your query (or template) MSA is not diverse enough, you could increase sensitivity substantially by trying to include more remotely homologous sequences into the MSA. Have non-homologous sequences or sequence segments been accidentally included? You can also try to build a more diverse MSA manually: Inspect the HHblits results after the first iteration and consider including hits above the E-value inclusion threshold of 0.001, based on biological plausibility, relatedness of the organism, a reasonable looking alignment, or just guessing. Then start the second HHblits search iteration with this manually enriched alignment.

Verify predictions experimentally: The ultimate confirmation of a homologous relationship or structural model is, of course, the experimental verification of some of its key predictions, such as validating the binding to certain ligands by binding assays, measuring biochemical activity, or comparing the knock-out phenotype with the one obtained when the putative functional residues are mutated.
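The rule of thumb from the first check in this list (probability and rank) is easy to encode when triaging many hits. The Python sketch below is a literal transcription of the thresholds given above; the function name and the returned labels are hypothetical, and the outcome only decides which hits deserve the remaining checks, not whether they are homologous.

```python
def triage_hit(probability, rank):
    """Classify a hit by probability (in percent) and 1-based rank in the hit list."""
    if probability > 95.0:
        return "nearly certain"
    if probability > 50.0 or (probability > 30.0 and rank <= 3):
        return "check further"
    return "low priority"


for prob, rank in [(99.9, 1), (62.0, 12), (35.0, 2), (35.0, 7)]:
    print(prob, rank, triage_hit(prob, rank))
```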

HHblits and HHsearch use a better alignment algorithm than the quick and

standard Viterbi method to generate the final HMM-HMM alignments. Both

realign all displayed alignments in a second stage using the more

accurate Maximum Accuracy (MAC) algorithm. The Viterbi algorithm is

employed for searching and ranking the matches. The realignment step is

parallelized (-cpu <int>) and typically takes a few seconds only.

Please note: Using different alignment algorithms for scoring and

aligning has the disadvantage that the pairwise alignments that are

displayed are not always very similar to those that are used to

calculate the scores. This can lead to confusing results where

alignments of only one or a few residues length may have obtained

significant E-values. In such cases, run the search again with the

-norealign option, which will skip the MAC-realignment step. This will

allow you to check if the Viterbi alignments are valid at all, which

they will probably not be. The length of the MAC alignments can

therefore give you additional information to decide if a match is valid.

In order to avoid confusion for users of our HHpred server, the

-norealign option is the default there, whereas for you pros who dare

to use the command line package, realigning is done by default.

The posterior probability threshold is controlled with the -mact

option. This parameter controls the alignment algorithm’s greediness.

More precisely, the MAC algorithm finds the alignment that maximizes the

sum of posterior probabilities minus mact for each aligned pair. Global

alignments are generated with -mact 0, whereas -mact 0.5 will produce

quite conservative local alignments.
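The effect of mact can be illustrated with a strongly simplified Python sketch of a maximum accuracy alignment. It maximizes the sum of (posterior probability minus mact) over aligned pairs by dynamic programming, with gaps costing nothing. This is an assumption made to keep the example short and it is not the recursion used by HH-suite. The function name is hypothetical and the posterior matrix is made up.

```python
def mac_align(posteriors, mact):
    """Return (score, pairs) maximizing sum(posteriors[i][j] - mact) over pairs.

    posteriors[i][j] is the posterior probability that query column i is
    aligned to template column j. Simplification: gaps are free.
    """
    n, m = len(posteriors), len(posteriors[0])
    score = [[0.0] * (m + 1) for _ in range(n + 1)]
    move = [[""] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            gain = posteriors[i - 1][j - 1] - mact
            candidates = [
                (score[i - 1][j - 1] + gain, "pair"),
                (score[i - 1][j], "skip_query"),
                (score[i][j - 1], "skip_template"),
            ]
            score[i][j], move[i][j] = max(candidates, key=lambda c: c[0])
    # Trace back from the bottom-right corner to collect the aligned pairs.
    pairs = []
    i, j = n, m
    while i > 0 and j > 0:
        if move[i][j] == "pair":
            pairs.append((i - 1, j - 1))
            i, j = i - 1, j - 1
        elif move[i][j] == "skip_query":
            i -= 1
        else:
            j -= 1
    pairs.reverse()
    return score[n][m], pairs


posteriors = [
    [0.9, 0.1, 0.0],
    [0.1, 0.6, 0.1],
    [0.0, 0.2, 0.3],
]
print(mac_align(posteriors, 0.0)[1])  # greedy: aligns all three diagonal pairs
print(mac_align(posteriors, 0.5)[1])  # conservative: drops the weak last pair
```

With mact set to 0 every pair with a positive posterior can only add to the score, so the alignment extends as far as possible. With mact set to 0.5 only pairs whose posterior exceeds 0.5 are worth aligning.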

The -global and -local options now refer to both the Viterbi search stage and the MAC realignment stage. With -global (-local), the posterior probability matrix will be calculated for global (local) alignment. When -global is used in conjunction with -realign, the mact parameter is automatically set to 0 in order to produce global alignments. In other words, both of the following commands will give global alignments:

hhsearch -i <query> -d <db> -realign -mact 0

hhsearch -i <query> -d <db> -realign -global

The first version uses local Viterbi to search and then uses MAC to realign the proteins globally (since mact is 0) on a local posterior probability matrix. The second version uses global Viterbi to search and then realigns globally (since mact is automatically set to 0) on a global posterior matrix. To detect and align remote homologs, for which sometimes only parts of the sequence are conserved, the first version is clearly better. It is also more robust. If you expect to find globally alignable sequence homologs, the second option might be preferable. In that case, it is recommended to run both versions and compare the results.

The number of effective sequences of the full alignment, which appears

as NEFF in the header of each hhm file, is the average of local values

Neff_M(i) over all alignment positions i. The values Neff_M(i)

are given in the main model section of the hhm model files (subsection

HHsearch/HHblits model format). They quantify the local diversity of the alignment

in a region around position i. More precisely, Neff_M(i) measures

the diversity of subalignment Ali_M(i) that contains all sequences

that have a residue at column i of the full alignment. The

subalignment contains all columns for which at least 90% of these

sequences have no end gap. End gaps are gaps to the left of the first

residue or to the right of the last residue. The latter condition

ensures that the sequences in the subalignment Ali_M(i) cover most

of the columns in it. The number of effective sequences in the

subalignment Ali_M(i) is exp of the average sequence entropy over

all columns of the subalignment. Hence, Neff_M is bounded by 1 from

below and 20 from above. In practice, it is bounded by the exponentiated entropy of a

column with background amino acid distribution f_a:

$$N_\mathrm{eff} < \exp\left(-\sum_{a=1}^{20} f_a \log f_a\right) \approx 16$$

Similarly, Neff_I(i) gives the diversity of the subalignment Ali_I(i) of all

sequences that have an insert at position i, and Neff_D(i) refers

to the diversity of subalignment Ali_D(i) of all sequences that have

a Delete (a gap) at position i of the full alignment.
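The core of this definition, the exponential of the average column entropy, can be written down in a few lines of Python. The sketch below computes it for a whole toy alignment and deliberately leaves out what HH-suite does in addition: the per-position subalignments described above and any sequence weighting are not modeled, and the function name is hypothetical.

```python
import math
from collections import Counter


def neff(msa):
    """Number of effective sequences: exp of the mean column entropy.

    msa: list of equal-length aligned sequences, '-' marks a gap.
    Assumptions: unweighted residue counts, gaps ignored, natural logarithm.
    """
    entropies = []
    for col in range(len(msa[0])):
        residues = [seq[col] for seq in msa if seq[col] != "-"]
        if not residues:
            continue  # skip columns that contain only gaps
        total = len(residues)
        counts = Counter(residues)
        entropy = -sum((n / total) * math.log(n / total) for n in counts.values())
        entropies.append(entropy)
    if not entropies:
        raise ValueError("alignment contains no residues")
    return math.exp(sum(entropies) / len(entropies))


print(neff(["ACD", "ACD"]))  # identical sequences: one effective sequence
print(neff(["AC", "GT"]))    # two equally frequent residues per column
```

Identical sequences give a value of 1, and an alignment in which every column contains k equally frequent residues gives k, which is why the value can be read as a number of effective sequences.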

HHblits and HHsearch have been designed to work with multi-domain queries. However, the chance of false positives entering the query alignment during the HHblits iterations is greater for multi-domain proteins. For long sequences, it may therefore be advantageous to first search the PDB or the SCOP domain database and then to cut the query sequence into smaller parts on the basis of the identified structural domains. Pfam or CDD are, in our opinion, less suitable for determining domain boundaries.

Despite the biased amino acid composition in their integral membrane helices, membrane proteins behave very well in HMM-HMM comparisons. In many benchmark tests and evolutionary studies we have not noticed any higher rates of false positives than with cytosolic proteins.

For example when domain A is predicted from

residues 2-50 with 98% probability and domain B from 2-200 with 95%

probability? The probability that a pair of residues is correctly

aligned is the product of the probability for the database match to be

homologous (given by the values in the Probab column of the hit list)

times the posterior probability of the residue pair to be correctly

aligned given the database match is correct in the first place. The

posterior probabilities are specified by the confidence numbers in the

last line of the alignment blocks (0 corresponds approximately to 0-10%,

9 to 90-100%). Therefore, an obvious solution would be to prune the

alignments in the overlapping region such that the sum of total

probabilities is maximized. There is no script yet that does this

automatically.

I would use a similarity measure like the raw score per alignment length. You might also add the secondary structure score to the raw score with some weight. Whereas probabilities, E-values, and P-values are useful for deciding whether a match is a reliable homolog or not, they are not suitable for measuring similarities because they strongly depend on the length of the alignment, roughly like:

$$P\text{-value} \propto \exp(-\lambda \times \mathrm{average\_similarity} \times \mathrm{length}),$$

with some constant λ of order 1. The probability has an even more complex dependence on length. Also, the “Similarity” given above the alignment blocks is a simple substitution matrix score per column between the query and template master sequences and does not capture the evolutionary information contained in the MSAs (see HMM-HMM pairwise alignments). One note of caution: large, diverse MSAs are usually more sensitive in finding homologs than narrower ones. Therefore, I would limit the diversity of all HMMs to some reasonable number (perhaps around 5 or 7, depending on how far diverged your HMMs are). This filtering can be done using:

hhfilter -i <MSA.a3m> -o <MSA_filt.a3m> -neff 5
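The length-normalized similarity suggested above could be computed as in the following sketch. The function name and the `ss_weight` parameter are hypothetical, and the raw score, secondary structure score, and number of aligned columns are assumed to have been read from the hit list beforehand.

```python
def similarity_per_column(raw_score, aligned_cols, ss_score=0.0, ss_weight=0.0):
    """Raw score per aligned column, optionally including the SS score.

    raw_score    : raw alignment score of the hit
    aligned_cols : number of aligned columns of the hit
    ss_score     : secondary structure score of the hit
    ss_weight    : weight given to ss_score (hypothetical tuning parameter)
    """
    if aligned_cols <= 0:
        raise ValueError("aligned_cols must be positive")
    return (raw_score + ss_weight * ss_score) / aligned_cols


# Two hits with the same raw score but different alignment lengths.
short_hit = similarity_per_column(120.0, 100)
long_hit = similarity_per_column(120.0, 300)
```

Because the score is divided by the alignment length, the shorter alignment ranks as the more similar one here, which a P-value or E-value would not reflect in the same way.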

Can I weight some residues more than others during the HMM-HMM alignment?

For example, to select templates for homology modeling, it could make sense to weight residues around the known functional site more strongly. This might also positively impact the query-template alignment itself. Well, directly weighting query or template match states is not possible. What you can do with a bit of file fiddling, however, may even be better: You can modify the amount of pseudocounts that hhblits, hhsearch, or hhalign will automatically add to the various match state columns in the query or template profile HMM. Reducing the pseudocounts is equivalent to assuming a higher conservation for these positions, since pseudocounts are basically mutations that are added to the observed counts in the training sequences to generalize to more remotely related sequences. In HHsuite, the more diverse the sequence neighborhood around a match state column i is, as measured by the number of effective sequences Neff_M(i) (see How is the MSA diversity Neff calculated?), the fewer pseudocounts are added. By manually increasing Neff_M(i) for a match state column you increase the assumed conservation of this position in the protein. The Neff_M(i) values are found in the hhm-formatted HMM model files in the eighth column of the second line of each match state block in units of 0.001 (see HHsearch/HHblits model format). You could, for example, simply increase Neff_M(i) values by a factor of two for important, conserved positions. Setting Neff_M(i) to 99999 will reduce pseudocounts to effectively zero. A word of caution is advised: HMMs built from a diverse set of training sequences already contain quantitative information about the degree of conservation for each position.
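The file fiddling described above might look like the following sketch. It edits a single line and relies only on the statement that Neff_M(i) is the eighth whitespace-separated column of the second line of a match state block. The function name and the example line are made up for illustration. Locating the right lines in a real hhm file is left out.

```python
import re


def scale_neff_m(transition_line, factor, cap=99999):
    """Scale the eighth column (Neff_M, in units of 0.001) of one line.

    transition_line : second line of a match state block in an hhm file
    factor          : multiplier for Neff_M(i), e.g. 2 for conserved positions
    cap             : upper limit; 99999 reduces pseudocounts to effectively zero
    """
    tokens = list(re.finditer(r"\S+", transition_line))
    if len(tokens) < 8:
        raise ValueError("expected at least eight columns")
    neff = tokens[7]
    new_value = min(cap, round(int(neff.group()) * factor))
    # Replace only the eighth token so that the original spacing is preserved.
    return transition_line[:neff.start()] + str(new_value) + transition_line[neff.end():]


# Made-up example line; the eighth column holds Neff_M = 4.500.
line = "  0\t*\t*\t0\t*\t0\t*\t4500\t0\t0"
doubled = scale_neff_m(line, 2)
```

Working on a copy of the hhm file is advisable, so that the original model remains available for comparison.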

Don’t I need to calibrate my query or database HMM?

No. If you don’t specify otherwise, the two parameters of the extreme-value distribution for the query are estimated by a neural network from the lengths and diversities N_eff of query and database HMMs. The network was trained on a large set of example query-template pairs, in an approach similar to the one used in . However, the old calibration is still available as an option in HHsearch.

Should I use the -global option to build MSAs if I am interested in global alignments to these database HMMs?

Never use -global when building MSAs by iterative searches with HHblits. Remember that global alignments are very greedy alignments, where alignments stretch to the ends of either query or database HMM, no matter what. Global alignments will therefore often lead to non-homologous segments getting included in the MSA, which is catastrophic, as these false segments will lead to many false positive matches when searching with this MSA. But you may use -global when searching (with a single iteration) for global matches of your query HMMs through your customized HHsuite database, for example.

How many iterations of hhblits should I use to search my customized genome database?

One iteration! Doing more than one search iteration in general only makes sense in order to build up a diverse MSA of homologous sequences by searching through the Uniclust30 or a clustered NR database.

For reasons of efficiency, the MSA A3M and HHM model files are packed into two files, <db>_a3m.ffdata and <db>_hhm.ffdata, each with its own index file. The contained files are easy to extract from the packed files. For example, to dump d1aa7a_.a3m in scop70 to standard output, type

ffindex_get scop70_a3m.ffdata scop70_a3m.ffindex d1aa7a_.a3m

You may write the extracted file to a separate file by appending '> d1aa7a_.a3m' to the above command. If you want to extract all files in the ffindex data structures to directory tmp/scop70, type

ffindex_unpack scop70_a3m.ffdata scop70_a3m.ffindex tmp/scop70/
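If calling the ffindex tools is inconvenient, a single entry can also be read directly, as in the following sketch. It rests on an assumption about the file layout that you should verify against your own database: each index line holds name, byte offset, and length separated by tabs, and each entry in the data file ends with a null byte that is counted in its length. The function name is hypothetical.

```python
def read_ffindex_entry(data_path, index_path, name):
    """Return the text of one entry from an ffindex data/index file pair.

    Assumed layout: index lines are "name<TAB>offset<TAB>length", and the
    stored entry is terminated by a null byte that is included in length.
    """
    with open(index_path) as index:
        for line in index:
            entry, offset, length = line.rstrip("\n").split("\t")
            if entry == name:
                with open(data_path, "rb") as data:
                    data.seek(int(offset))
                    payload = data.read(int(length))
                # Drop the trailing null terminator before decoding.
                return payload.rstrip(b"\0").decode()
    raise KeyError(name)
```

For example, `read_ffindex_entry("scop70_a3m.ffdata", "scop70_a3m.ffindex", "d1aa7a_.a3m")` would correspond to the ffindex_get call shown above, provided the layout assumption holds.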

The procedure to cluster the UniProt databases is more complicated than building a database for a genome or for the sequences in the PDB. As its first step, it involves clustering these huge databases down to 20%-30% sequence identity. The clustering is done in our lab using MMseqs2. We provide this database, the Uniclust30, including all scripts to build it. These scripts generate A3M files, HHM files, and consensus sequences. Because of the large number of files to generate, these scripts need to be run on a computer cluster, which requires considerable computing expertise.

Currently we do not provide environmental databases for the HH-suite. Please use MMseqs2 to search through such datasets. MMseqs2 can easily search through the largest datasets, such as assembled proteins from environmental metagenomes. We offer a Soil Reference Catalog (SRC) and a Marine Eukaryotic Reference Catalog, with billions of environmental proteins.

The pdb70 database is clustered to 70% maximum pairwise sequence identity to reduce redundancy. The PDB identifiers at the end of the description line of sequence X list the sequences with lower experimental resolution that were removed for having higher than 70% sequence identity with PDB sequence X. The asterisks indicate that a multi-atom ligand is bound in the structure.

When taking A as the query, the pairwise alignment and significance scores with template B in the database can differ from those obtained when B is the query and A the template. By default, hhblits, hhsearch, and hhalign add context-specific pseudocounts to the query MSA or HMM, whereas the much faster substitution matrix pseudocounts are added to the database HMMs/MSAs for reasons of speed. If you want to combine the significance estimates from the forward and reverse comparisons, we recommend taking the geometric mean of Probability, P-value and E-value. Since the less diverse HMM or MSA will profit more from the context-specific pseudocounts than the more diverse one, it might be even better to take the estimate from the comparison in which the HMM/MSA with the smaller diversity (measured as the number of effective sequences, NEFF) is used as the query.
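Both ways of combining the forward and reverse comparisons can be written down in a few lines. The following is a minimal sketch with hypothetical function names. The values are assumed to have been read from the two hit lists by hand or by your own parser.

```python
import math


def geometric_mean(forward, reverse):
    """Geometric mean of one significance value from both search directions.

    Computed in log space so that very small E-values or P-values do not
    underflow when multiplied.
    """
    if forward <= 0 or reverse <= 0:
        raise ValueError("values must be positive")
    return math.exp(0.5 * (math.log(forward) + math.log(reverse)))


def preferred_estimate(neff_a, neff_b, a_as_query, b_as_query):
    """Pick the estimate from the run whose query had the smaller diversity.

    neff_a, neff_b : number of effective sequences of HMM/MSA A and B
    a_as_query     : estimate from the comparison with A as the query
    b_as_query     : estimate from the comparison with B as the query
    """
    return a_as_query if neff_a <= neff_b else b_as_query


# E-value 1e-10 with A as query, 1e-6 with B as query.
combined = geometric_mean(1e-10, 1e-6)
chosen = preferred_estimate(3.2, 7.5, 1e-10, 1e-6)
```

Here the geometric mean is about 1e-8, while the second rule simply keeps the value from the run in which the less diverse HMM A was the query.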

Each line in the summary hit list refers to an alignment with an HMM in the database, not to the database HMM itself. Sometimes, alternative (suboptimal) alignments covering a different part of either the query or the database HMM may appear in the hit list. Usually, both the optimal and the alternative alignments are correct, in particular when the query or the database HMMs represent repeat proteins.

Why do I get different results using HHblits and HHsearch when I search with the same query through the same database?

There are two reasons. First, some hits that hhsearch shows might not have passed the prefilter in hhblits. The option -prepre_smax_thresh <bits> lets you modify the minimum score threshold of the first, gapless alignment prefilter (default is 10 bits). Option -pre_evalue_thresh <E-value> sets the maximum E-value threshold for the second, gapped alignment prefilter (default is 1000). Second, while the probabilities and P-values of hhblits and hhsearch should be identical for the same matches, the E-values are only similar. The reason is that hhblits heuristically combines the E-values of the second prefilter with the E-values from the full HMM-HMM Viterbi alignment into total E-values. These E-values are slightly better at distinguishing true from false hits, because they combine the partly independent information from two comparisons.

When you do a single iteration and start a second iteration with the A3M output file from the first iteration, the alignments should be nearly the same as if you do two iterations (-n 2). Why not exactly the same? Because when doing multiple iterations in a single hhblits run, alignments for each hit are only calculated once. When the same database HMM is found in later search iterations, the “frozen” first alignment is used instead of recomputing it. This increases robustness with respect to homologous overextension. However, the significance values can be slightly different between the cases with 2 and 1+1 iterations. The reason is that the HHblits P-value is calculated by a weighted combination of the P-value of the Viterbi pairwise HMM alignment and the prefilter E-value. The prefilter E-values differ in the two cases described, since in the first case the E-value is calculated for the first prefilter search (before the first full search) and in the second case it is calculated for the second prefilter search, performed with the query MSA obtained after the first search iteration.

Segmentation faults are often caused by too little available memory. HHblits needs to read the entire column state file into memory for prefiltering. For Viterbi HMM-HMM alignment it needs about:

Memory Viterbi = Query_length * max_db_seq_length * (num_threads+1) * 5 bytes

Here, num_threads is the number of threads specified with option -cpu <int>. For realignment, HHblits needs in addition:

Memory Realign = Query_length * max_db_seq_length * (num_threads+1) * 8 bytes

But the amount of memory for realignment can be limited using the -maxmem <GB> option, which will cause the realignment to skip the longest sequences.
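To get a feeling for the numbers, the two formulas above can be evaluated directly. The following sketch simply transcribes them. The function names and the example values are chosen for illustration, and the memory for the column state file is not included.

```python
def viterbi_memory_bytes(query_length, max_db_seq_length, num_threads):
    """Approximate memory for Viterbi HMM-HMM alignment (5 bytes per cell)."""
    return query_length * max_db_seq_length * (num_threads + 1) * 5


def realign_memory_bytes(query_length, max_db_seq_length, num_threads):
    """Approximate additional memory for realignment (8 bytes per cell)."""
    return query_length * max_db_seq_length * (num_threads + 1) * 8


# Query of 500 residues, longest database sequence 20000 residues, -cpu 4.
viterbi = viterbi_memory_bytes(500, 20000, 4)
realign = realign_memory_bytes(500, 20000, 4)
total_gb = (viterbi + realign) / 1e9
```

With these example values the estimate is 0.25 GB for the Viterbi step plus 0.4 GB for realignment, and both terms grow linearly with the number of threads.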

Make sure to enable memory overcommitment on your machine. On Linux, either add vm.overcommit_memory=1 to /etc/sysctl.conf and then reboot, or run the command sysctl vm.overcommit_memory=1.

Under Linux, you can check your memory limit settings using ulimit -a. Make sure you have at least 4 GB per thread. Try

ulimit -m 4000000 -v 4000000 -s 8192 -f 4000000

Also try using -cpu 1 -maxmem 1 to reduce memory requirements. If it still does not work, follow the tips in subsection How do I report a bug? to report your problems to us.

I obtain different results for the same query sequence on the web server and on my local version using HHblits and HHsearch.

- Check the exact command line calls for HHblits and HHsearch from the log file accessible on the results page of the server. If any of the commands differ, rerun your command line version with the same options.

- Check whether the query multiple sequence alignments on the server and in your local version contain the same number of sequences and the same diversity. If not, try to find out why (see the previous point, for example).

- For some of the alignments with differing scores, check whether the database alignment on the server is the same as the one in your local database. You can download the database MSA by clicking the alignment logo above the query-template pairwise profile-profile alignment.

I find an alignment of my query and a template with the exact same sequence, but they are aligned incorrectly.

I find a gap inserted at residue position 92 in query and position 99 in template:

Q ss_pred CcEEEeeCCCC-CCCeEEEEEECCeeEEEEEEECCCCcEEEEEEeeCCHHHHHHHHhhCCCccceeEEEecccCCCCCCe

Q Pr_1 81 GAFLIRESESA-PGDFSLSVKFGNDVQHFKVLRDGAGKYFLWVVKFNSLNELVDYHRSTSVSRNQQIFLRDIEQVPQQPT 159 (217)

Q Consensus 81 ~~~~~~~~~~~-~~~~~~s~~~~~~~~~~~i~~~~~~~~~~~~~~f~s~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ 159 (217)

|.+++|.+... ++.+.+ +..++.++|+++.....+.|+.+...|.++..++.++...+....................

T Consensus 81 G~flvR~s~~~~~~~~~l-v~~~~~v~h~~i~~~~~g~~~~~~~~f~sl~~Lv~~y~~~~~~~~~~~~l~~~~~~~~~~~ 159 (217)

T 1gri_A 81 GAFLIRESESAPGDFSLS-VKFGNDVQHFKVLRDGAGKYFLWVVKFNSLNELVDYHRSTSVSRNQQIFLRDIEQVPQQPT 159 (217)

T ss_dssp TCEEEEECSSSTTCEEEE-EEETTEEEEEEEEECSSSCEESSSCEESSHHHHHHHHHHSCSSSSTTCCCCBCCCCCCCCC

T ss_pred CeEEEEECCCCCCCcEEE-EECCCceeEEEEEECCCCcEEEeeEecCCHHHHHHHhhcCCCccccceeccccccccCCce