This repository has been archived by the owner on Jan 24, 2024. It is now read-only.


This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

Fix KoP will cause Kafka Errors.REQUEST_TIMED_OUT when consume multi …

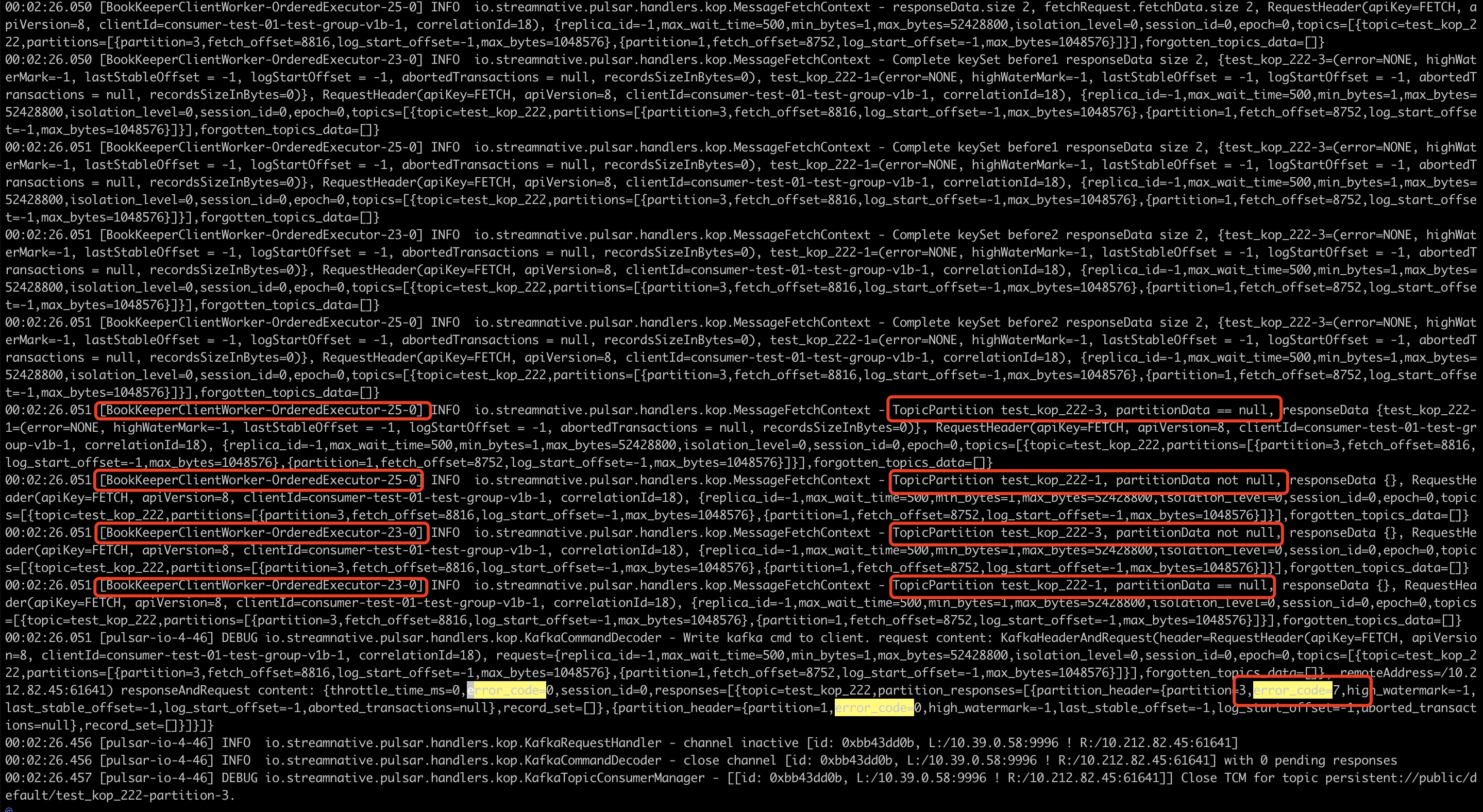

…TopicPartition in one consumer request (#654) Fixes #604 ### Motivation When consume multi TopicPartition in one request, in MessageFetchContext.handleFetch(), tryComplete() may enter race condition When one topicPartition removed from responseData, maybe removed again, which will cause Errors.REQUEST_TIMED_OUT ReadEntries and CompletableFuture.complete operations for each partition are all performed by BookKeeperClientWorker- Different threads in the OrderedExecutor thread pool are executed. When the partition can read data, because the read data and decode operations will take uncertain time, the competition in this case is relatively weak; and when the partition has no data to write, and the consumer After all the data has been consumed, I have been making empty fetch requests, which can be reproduced stably at this time. Stable steps to reproduce: A single broker has two partition leaders for one topic; The topic is not writing data, and consumers have consumed the old data; At this time, the consumer client continues to send Fetch requests to broker; Basically, you will soon see that the server returns error_code=7, and the client will down。 1、One fetch request, two partitions, and two threads. The data obtained is an empty set without any protocol conversion operation. 2、When the BookKeeperClientWorker-OrderedExecutor-25-0 thread adds test_kop_222-1 to the responseData, BookKeeperClientWorker-OrderedExecutor-23- 0 thread adds test_kop_222-3 to responseData, 3、at this time responseData.size() >= fetchRequest.fetchData().size(), because tryComplete has no synchronization operation, two threads enter at the same time, 4、fetchRequest.fetchData().keySet() .forEach two threads traverse at the same time, resulting in the same partition multiple times responseData.remove(topicPartition), partitionData is null and cause the REQUEST_TIMED_OUT error.  ### Modifications `MessageFetchContext.tryComplete` add synchronization lock
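Step 4 can be replayed deterministically. The minimal sketch below models `responseData` as a plain `HashMap` keyed by partition name and runs one possible interleaving of the two executor threads sequentially, so the outcome does not depend on timing. `DoubleRemoveReplay`, `replay`, and `drain` are hypothetical names; the model assumes only what the commit message describes (a size check followed by one `remove` per requested partition), not KoP's actual code.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class DoubleRemoveReplay {

    // Hypothetical model of the unsynchronized tryComplete() path.
    // Returns the partitions whose remove() came back null, prefixed by the
    // simulated thread ("A" or "B") that saw the null.
    static List<String> replay() {
        List<String> requested = List.of("test_kop_222-1", "test_kop_222-3");
        Map<String, String> responseData = new HashMap<>();

        // Step 2: each executor thread has added an empty result for its partition.
        responseData.put("test_kop_222-1", "empty");
        responseData.put("test_kop_222-3", "empty");

        // Step 3: without a lock, both threads can pass the completion check
        // before either of them starts draining responseData.
        boolean threadAPasses = responseData.size() >= requested.size();
        boolean threadBPasses = responseData.size() >= requested.size();

        // Step 4: both threads drain the same map. One possible interleaving is
        // replayed sequentially here: thread A drains first, then thread B.
        List<String> nullRemovals = new ArrayList<>();
        if (threadAPasses) {
            drain("A", requested, responseData, nullRemovals);
        }
        if (threadBPasses) {
            drain("B", requested, responseData, nullRemovals);
        }
        return nullRemovals;
    }

    private static void drain(String thread, List<String> requested,
                              Map<String, String> responseData, List<String> nullRemovals) {
        for (String tp : requested) {
            // Per the commit message, a null partitionData here leads to REQUEST_TIMED_OUT.
            if (responseData.remove(tp) == null) {
                nullRemovals.add(thread + ":" + tp);
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(replay()); // prints [B:test_kop_222-1, B:test_kop_222-3]
    }
}
```

Every removal made by the second thread returns null, because the first thread has already drained the map. A lock around the check-and-drain sequence rules out this interleaving.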


1 parent 2274524, commit df436e3

Showing 2 changed files with 137 additions and 1 deletion.


`kafka-impl/src/test/java/io/streamnative/pulsar/handlers/kop/MessageFetchContextTest.java` (100 additions, 0 deletions)


```java
/**
 * Licensed under the Apache License, Version 2.0 (the "License");
 * you may not use this file except in compliance with the License.
 * You may obtain a copy of the License at
 *
 *     http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */
package io.streamnative.pulsar.handlers.kop;

import static org.testng.Assert.assertFalse;

import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.BiConsumer;
import org.apache.kafka.common.TopicPartition;
import org.apache.kafka.common.protocol.Errors;
import org.apache.kafka.common.requests.AbstractResponse;
import org.apache.kafka.common.requests.FetchRequest;
import org.apache.kafka.common.requests.ResponseCallbackWrapper;
import org.testng.annotations.BeforeMethod;
import org.testng.annotations.Test;
import org.testng.collections.Maps;

public class MessageFetchContextTest {

    private static final Map<TopicPartition, FetchRequest.PartitionData> fetchData = Maps.newHashMap();

    private static CompletableFuture<AbstractResponse> resultFuture = null;

    FetchRequest fetchRequest = FetchRequest.Builder
            .forConsumer(0, 0, fetchData).build();

    private static final String topicName = "test-fetch";
    private static final TopicPartition tp1 = new TopicPartition(topicName, 1);
    private static final TopicPartition tp2 = new TopicPartition(topicName, 2);

    private static volatile MessageFetchContext messageFetchContext = null;

    @BeforeMethod
    protected void setup() throws Exception {
        fetchData.put(tp1, null);
        fetchData.put(tp2, null);
        resultFuture = new CompletableFuture<>();
        messageFetchContext = MessageFetchContext.getForTest(fetchRequest, resultFuture);
    }

    private void startThreads() throws Exception {
        Thread run1 = new Thread(() -> {
            // With tryComplete synchronized, checking once per run is enough:
            // if a problem remains, this will eventually show up as a flaky test.
            // If it never becomes flaky, we can keep this check as is.
            messageFetchContext.addErrorPartitionResponseForTest(tp1, Errors.NONE);
        });

        Thread run2 = new Thread(() -> {
            // As described in the comment in run1, checking once is enough.
            messageFetchContext.addErrorPartitionResponseForTest(tp2, Errors.NONE);
        });

        run1.start();
        run2.start();
        run1.join();
        run2.join();
    }

    private void startAndGetResult(AtomicReference<Set<Errors>> errorsSet)
            throws Exception {

        BiConsumer<AbstractResponse, Throwable> action = (response, exception) -> {
            ResponseCallbackWrapper responseCallbackWrapper = (ResponseCallbackWrapper) response;
            Set<Errors> currentErrors = errorsSet.get();
            currentErrors.addAll(responseCallbackWrapper.errorCounts().keySet());
            errorsSet.set(currentErrors);
        };

        startThreads();
        resultFuture.whenComplete(action);
    }

    // Run the actual modified code logic in MessageFetchContext, so that if
    // MessageFetchContext changes in the future, possible errors are still caught.
    // We need to ensure that resultFuture.complete has no REQUEST_TIMED_OUT error.
    @Test
    public void testHandleFetchSafe() throws Exception {
        AtomicReference<Set<Errors>> errorsSet = new AtomicReference<>(new HashSet<>());
        startAndGetResult(errorsSet);
        assertFalse(errorsSet.get().contains(Errors.REQUEST_TIMED_OUT));
    }

}
```
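The test above runs the two partition callbacks once per run and, as its comments note, relies on repeated runs to surface any remaining flakiness. A stress variant can instead release both callbacks together with a `CyclicBarrier` and repeat many rounds. The sketch below does this against a hypothetical, simplified stand-in for `MessageFetchContext` whose `tryComplete` is `synchronized`, mirroring the commit's modification. `FetchContext`, `addPartitionResponse`, and `countBadRounds` are illustrative names, not KoP APIs, and the commit's own change to `MessageFetchContext` is not shown in this section and may differ.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.atomic.AtomicInteger;

public class SynchronizedTryCompleteStress {

    // Hypothetical, simplified stand-in for MessageFetchContext (not the KoP class).
    static final class FetchContext {
        private final List<String> requested;
        private final Map<String, String> responseData = new ConcurrentHashMap<>();
        final CompletableFuture<Set<String>> result = new CompletableFuture<>();
        final AtomicInteger completions = new AtomicInteger();

        FetchContext(List<String> requested) {
            this.requested = requested;
        }

        // Called from each partition's executor thread once its read finishes.
        void addPartitionResponse(String tp) {
            responseData.put(tp, "empty");
            tryComplete();
        }

        // The lock ensures only one thread checks and drains responseData at a time,
        // and that a later caller sees the future already completed.
        synchronized void tryComplete() {
            if (result.isDone() || responseData.size() < requested.size()) {
                return;
            }
            Set<String> errors = new HashSet<>();
            for (String tp : requested) {
                // A null here would be the double-remove symptom from the commit message.
                errors.add(responseData.remove(tp) == null ? "REQUEST_TIMED_OUT" : "NONE");
            }
            completions.incrementAndGet();
            result.complete(errors);
        }
    }

    // Waits until the barrier releases all parties, then runs the action.
    private static void awaitThen(CyclicBarrier barrier, Runnable action) {
        try {
            barrier.await();
        } catch (Exception e) {
            throw new RuntimeException(e);
        }
        action.run();
    }

    // Returns how many rounds did not end with exactly one completion whose
    // only error code is NONE.
    static int countBadRounds(int rounds) {
        List<String> tps = List.of("test-fetch-1", "test-fetch-2");
        int bad = 0;
        for (int i = 0; i < rounds; i++) {
            FetchContext ctx = new FetchContext(tps);
            CyclicBarrier start = new CyclicBarrier(2); // release both threads together
            Thread t1 = new Thread(() -> awaitThen(start, () -> ctx.addPartitionResponse(tps.get(0))));
            Thread t2 = new Thread(() -> awaitThen(start, () -> ctx.addPartitionResponse(tps.get(1))));
            t1.start();
            t2.start();
            try {
                t1.join();
                t2.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new RuntimeException(e);
            }
            Set<String> errors = ctx.result.getNow(Set.of("NOT_COMPLETED"));
            if (ctx.completions.get() != 1 || !errors.equals(Set.of("NONE"))) {
                bad++;
            }
        }
        return bad;
    }

    public static void main(String[] args) {
        System.out.println("bad rounds: " + countBadRounds(2_000)); // expected: 0
    }
}
```

Because the size check and the drain happen under the same monitor, whichever thread enters second either finds the future already completed or sees both partitions present, so each round completes exactly once with only `NONE`. Removing `synchronized` from `tryComplete` reopens the window, though whether a given run observes it depends on scheduling.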