Retrieval-Augmented Image Sequence Colorization

Authors: Junhao Zhuang, Xuan Ju, Zhaoyang Zhang, Yong Liu, Shiyi Zhang, Chun Yuan, Ying Shan

Your star means a lot to us as we develop this project! ⭐

This README provides a guide on how to prepare a manga dataset and use the ColorFlow tool for processing.

Before you begin, ensure you have the following installed:

- Python 3.x

- Git

- FFmpeg
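A quick way to confirm that the command-line prerequisites are available is sketched below. The helper name `missing_tools` is illustrative and not part of ColorFlow; it only checks that the executables can be found on `PATH`, not which versions are installed.

```python
import shutil
import sys


def missing_tools(tools):
    """Return the entries of `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    # Python is running this script, so just report its version.
    print(f"Python {sys.version_info.major}.{sys.version_info.minor}")
    missing = missing_tools(["git", "ffmpeg"])
    if missing:
        print("Missing from PATH:", ", ".join(missing))
    else:
        print("git and ffmpeg found.")
```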

- Obtain Manga Images:

- Visit the Genshin Impact manga website to download or view manga images.

- Example links:
  - zh-tw: https://sg-public-api-static.hoyoverse.com/content_v2_user/app/a1b1f9d3315447cc/getContentList?iAppId=32&iChanId=401&iPageSize=1000&iPage=1&sLangKey=zh-tw
  - en-us: https://sg-public-api-static.hoyoverse.com/content_v2_user/app/a1b1f9d3315447cc/getContentList?iAppId=32&iChanId=401&iPageSize=1000&iPage=1&sLangKey=en-us
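The two example links differ only in the `sLangKey` query parameter, so the URL can be assembled from its parts. The sketch below assumes the remaining parameters stay fixed as in the links above; the function name `build_manga_list_url` is hypothetical, and whether the endpoint accepts other language keys or page sizes is not confirmed here.

```python
from urllib.parse import urlencode

BASE_URL = (
    "https://sg-public-api-static.hoyoverse.com/content_v2_user/app/"
    "a1b1f9d3315447cc/getContentList"
)


def build_manga_list_url(lang_key, page=1, page_size=1000):
    """Build the content-list URL for one language key, e.g. "en-us"."""
    params = {
        "iAppId": 32,
        "iChanId": 401,
        "iPageSize": page_size,
        "iPage": page,
        "sLangKey": lang_key,
    }
    return f"{BASE_URL}?{urlencode(params)}"


for lang in ("zh-tw", "en-us"):
    print(build_manga_list_url(lang))
```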

```python
import json

import requests

# Target URL
url = "https://sg-public-api-static.hoyoverse.com/content_v2_user/app/a1b1f9d3315447cc/getContentList?iAppId=32&iChanId=401&iPageSize=1000&iPage=1&sLangKey=en-us"

# Send the GET request
response = requests.get(url)

# Check whether the request succeeded
if response.status_code == 200:
    # Parse the JSON payload
    data = response.json()

    # Path of the output file
    file_path = "genshin_impact_manga.json"

    # Write the data to a .json file
    with open(file_path, "w", encoding="utf-8") as json_file:
        json.dump(data, json_file, ensure_ascii=False, indent=4)

    print(f"Data saved to {file_path}")
else:
    print(f"Request failed, status code: {response.status_code}")
```

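```python
import ast
import json

import pandas as pd

with open("genshin_impact_manga.json", "r", encoding="utf-8") as f:
    j_obj = json.load(f)

df = pd.DataFrame(j_obj["data"]["list"])

# Keep the title, intro, and the serialized extension field.
# .copy() avoids pandas' SettingWithCopyWarning on the assignments below.
dff = df[["sTitle", "sIntro", "sExt"]].copy()

# sExt is stored as a string; parse it into a dict.
# ast.literal_eval replaces the original eval so no arbitrary code can run.
dff["sExt"] = dff["sExt"].map(ast.literal_eval)
dff["sExt_401_0"] = dff["sExt"].map(lambda x: x["401_0"])
dff["sExt_401_1"] = dff["sExt"].map(lambda x: x["401_1"])

# Reverse the row order.
dff = dff.iloc[::-1, :]

# One row per page: explode the list stored in sExt_401_0.
dfff = dff[["sTitle", "sIntro", "sExt_401_0"]].explode("sExt_401_0")
dfff["name"] = dfff["sExt_401_0"].map(lambda x: x["name"])
dfff["url"] = dfff["sExt_401_0"].map(lambda x: x["url"])
dfff = dfff[["sTitle", "sIntro", "name", "url"]].reset_index(drop=True)
dfff
```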
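To see what the reshaping above produces without downloading anything, here is a dependency-free sketch on a made-up record. The record contents are invented for illustration; only the field names (`sTitle`, `sIntro`, `sExt`, `401_0`, `401_1`, `name`, `url`) are taken from the code above, and the helper `flatten_pages` is hypothetical.

```python
import ast

# Made-up record that mimics the fields used above; real values differ.
sample_list = [
    {
        "sTitle": "Chapter 1",
        "sIntro": "Intro text",
        "sExt": str({
            "401_0": [
                {"name": "001.jpg", "url": "https://example.com/001.jpg"},
                {"name": "002.jpg", "url": "https://example.com/002.jpg"},
            ],
            "401_1": [],
        }),
    },
]


def flatten_pages(records):
    """Return one row per page, mirroring the pandas explode step."""
    rows = []
    # reversed() mirrors the iloc[::-1] row reversal.
    for record in reversed(records):
        ext = ast.literal_eval(record["sExt"])
        for page in ext["401_0"]:
            rows.append({
                "sTitle": record["sTitle"],
                "sIntro": record["sIntro"],
                "name": page["name"],
                "url": page["url"],
            })
    return rows


for row in flatten_pages(sample_list):
    print(row)
```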

```python
from io import BytesIO

import requests
from datasets import Dataset
from PIL import Image


def url_to_image(url):
    # Send an HTTP GET request for the image data
    response = requests.get(url)
    response.raise_for_status()  # Raise an exception if the request failed
    # Convert the downloaded bytes into a PIL image
    image = Image.open(BytesIO(response.content))
    return image


ds = Dataset.from_pandas(dfff.reset_index(drop=True))
dss = ds.map(
    lambda x: {"image": url_to_image(x["url"])}, num_proc=4
)
dss.save_to_disk("im_local")
# dss.push_to_hub("svjack/Genshin-Impact-Manga")
dss.push_to_hub("svjack/Genshin-Impact-Manga-EN-US")
```
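Downloading many pages in a row can hit transient network errors. One possible safeguard, not part of the original script, is a small retry wrapper like the sketch below. The name `fetch_with_retries` and its parameters are assumptions; it takes the download function as an argument so it can wrap `url_to_image` without changing it.

```python
import time


def fetch_with_retries(fetch, url, attempts=3, delay_seconds=1.0):
    """Call fetch(url), retrying after a failure up to `attempts` times."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return fetch(url)
        except Exception as error:  # broad on purpose: network errors vary
            last_error = error
            if attempt < attempts:
                # Wait a little longer after each failed attempt.
                time.sleep(delay_seconds * attempt)
    raise last_error
```

In the `ds.map` call this could be used as `fetch_with_retries(url_to_image, x["url"])` in place of the direct `url_to_image(x["url"])` call.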

- Related links:
  - https://huggingface.co/spaces/ragavsachdeva/Magiv2-Demo
  - https://huggingface.co/spaces/ragavsachdeva/the-manga-whisperer
  - https://github.com/ragavsachdeva/magi

- Prepare Reference Image:

- Ensure you have at least one image with two characters for reference. This image will be used for colorization or other processing tasks.

- Update and Install Dependencies:

```bash
sudo apt-get update && sudo apt-get install cbm ffmpeg
```

- Clone the ColorFlow Repository:

```bash
git clone https://huggingface.co/spaces/svjack/ColorFlow && cd ColorFlow
```

- Install Python Requirements:

```bash
pip install -r requirements.txt
```

- Start the Application:

```bash
python app.py
```

- API Method

```python
# Using the API
from gradio_client import Client, handle_file
from PIL import Image
import pandas as pd

# client = Client("https://a9b5f5cb5cf97de36e.gradio.live/")
client = Client("http://127.0.0.1:7860")

# Run 1: "Sketch" input style
result = client.predict(
    query_image_=handle_file("nlu.png"),
    input_style="Sketch",
    resolution="640x640",
    api_name="/extract_line_image",
)
print(result)

final_result = client.predict(
    reference_images=[handle_file("nlutree.jpg")],
    resolution="640x640",
    seed=0,
    input_style="Sketch",
    num_inference_steps=30,
    api_name="/colorize_image",
)
print(final_result)

# Open the first and third returned images
Image.open(pd.DataFrame(final_result)["image"][0])
Image.open(pd.DataFrame(final_result)["image"][2])

# Run 2: "GrayImage(ScreenStyle)" input style
result = client.predict(
    query_image_=handle_file("nlu.png"),
    input_style="GrayImage(ScreenStyle)",
    resolution="640x640",
    api_name="/extract_line_image",
)
print(result)

final_result = client.predict(
    reference_images=[handle_file("nlutree.jpg")],
    resolution="640x640",
    seed=0,
    input_style="GrayImage(ScreenStyle)",
    num_inference_steps=30,
    api_name="/colorize_image",
)
print(final_result)

Image.open(pd.DataFrame(final_result)["image"][0])
Image.open(pd.DataFrame(final_result)["image"][2])
```
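The two runs above differ only in `input_style`, so the keyword arguments can be built once per style. The sketch below is one way to do that; the helper name `build_calls` and its `wrap` parameter are hypothetical, while the argument names and endpoint names are copied from the calls above. It runs without a server because it only builds dictionaries.

```python
def build_calls(query_image, reference_images, input_style,
                resolution="640x640", seed=0, num_inference_steps=30,
                wrap=lambda path: path):
    """Return keyword arguments for the two endpoints used above.

    `wrap` converts a path into whatever the client expects; with
    gradio_client, pass `handle_file`.
    """
    extract_kwargs = {
        "query_image_": wrap(query_image),
        "input_style": input_style,
        "resolution": resolution,
        "api_name": "/extract_line_image",
    }
    colorize_kwargs = {
        "reference_images": [wrap(path) for path in reference_images],
        "resolution": resolution,
        "seed": seed,
        "input_style": input_style,
        "num_inference_steps": num_inference_steps,
        "api_name": "/colorize_image",
    }
    return extract_kwargs, colorize_kwargs


for style in ("Sketch", "GrayImage(ScreenStyle)"):
    extract_kwargs, colorize_kwargs = build_calls("nlu.png", ["nlutree.jpg"], style)
    print(style, extract_kwargs["api_name"], colorize_kwargs["api_name"])
```

With a running app, each pair would be passed on as `client.predict(**extract_kwargs)` followed by `client.predict(**colorize_kwargs)`.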

#### Sketch output is brighter and more vivid

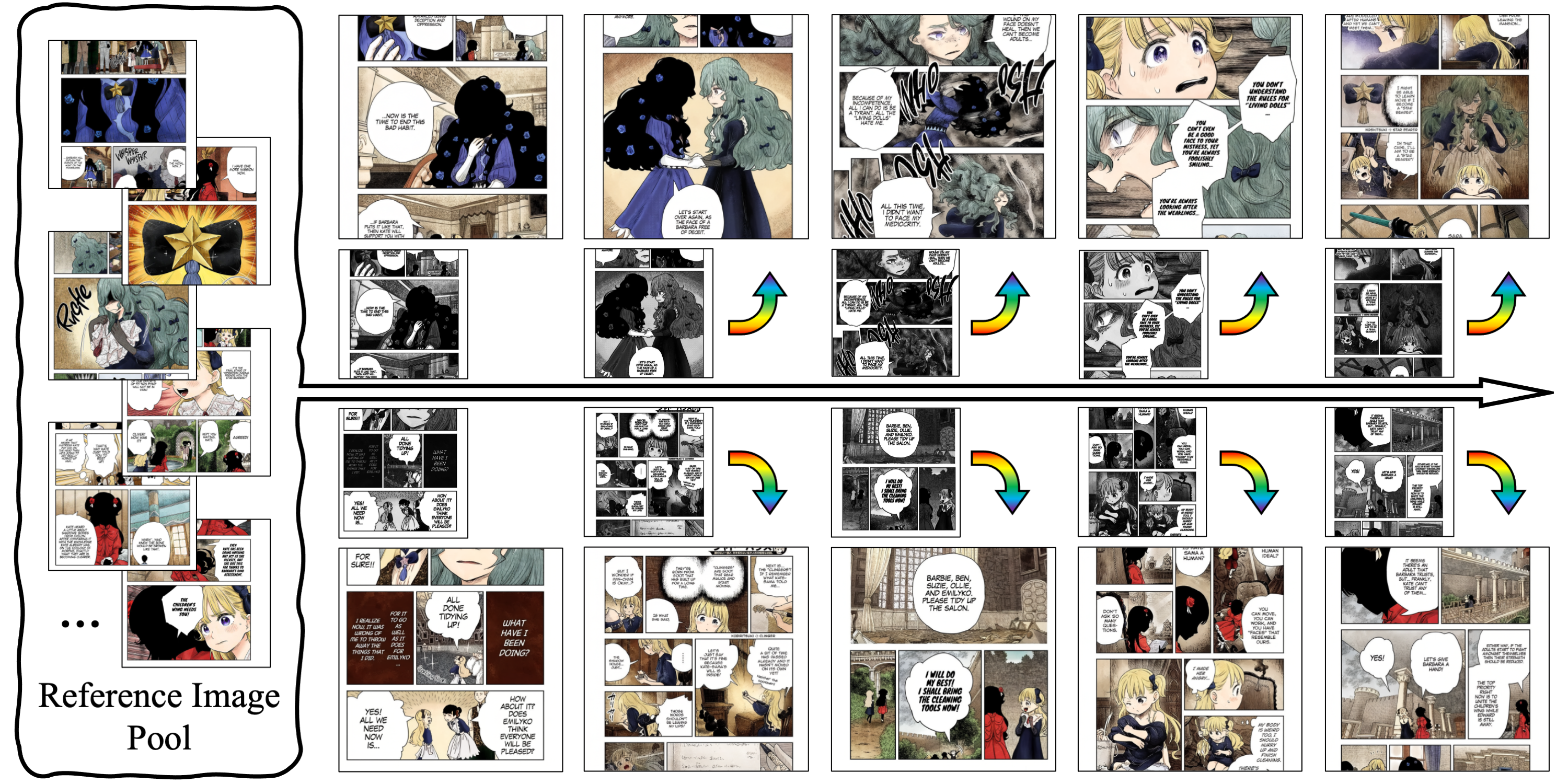

#### https://github.com/TencentARC/ColorFlow/issues/3

Automatic black-and-white image sequence colorization while preserving character and object identity (ID) is a complex task with significant market demand, such as in cartoon or comic series colorization. Despite advancements in visual colorization using large-scale generative models like diffusion models, challenges with controllability and identity consistency persist, making current solutions unsuitable for industrial application.

To address this, we propose ColorFlow, a three-stage diffusion-based framework tailored for image sequence colorization in industrial applications. Unlike existing methods that require per-ID finetuning or explicit ID embedding extraction, we propose a novel robust and generalizable Retrieval Augmented Colorization pipeline for colorizing images with relevant color references.

Our pipeline also features a dual-branch design: one branch for color identity extraction and the other for colorization, leveraging the strengths of diffusion models. We utilize the self-attention mechanism in diffusion models for strong in-context learning and color identity matching.

To evaluate our model, we introduce ColorFlow-Bench, a comprehensive benchmark for reference-based colorization. Results show that ColorFlow outperforms existing models across multiple metrics, setting a new standard in sequential image colorization and potentially benefiting the art industry.
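To make the retrieval idea described above more concrete, here is a toy sketch that picks the most relevant references for a query by cosine similarity between feature vectors. This is not the authors' implementation: the feature vectors, the helper names `cosine_similarity` and `top_k_references`, and the choice of cosine similarity are all assumptions made for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_references(query_vec, reference_vecs, k=2):
    """Return the names of the k references most similar to the query."""
    scored = [
        (cosine_similarity(query_vec, vec), name)
        for name, vec in reference_vecs.items()
    ]
    scored.sort(reverse=True)
    return [name for _, name in scored[:k]]


# Hypothetical 2-D features for three reference patches.
references = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
print(top_k_references([1.0, 0.0], references, k=2))
```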

- Release Date: 2024.12.17 - Inference code and model weights have been released! 🎉

- ✅ Release inference code and model weights

- ⬜️ Release training code

Follow these steps to set up and run ColorFlow on your local machine:

- Clone the Repository

  Download the code from our GitHub repository:

```bash
git clone https://github.com/TencentARC/ColorFlow
cd ColorFlow
```

- Set Up the Python Environment

  Ensure you have Anaconda or Miniconda installed, then create and activate a Python environment and install the required dependencies:

```bash
conda create -n colorflow python=3.8.5
conda activate colorflow
pip install -r requirements.txt
```

- Run the Application

  Launch the Gradio interface for ColorFlow by running the following command:

```bash
python app.py
```

- Access ColorFlow in Your Browser

  Open your browser and go to http://localhost:7860. If you're running the app on a remote server, replace `localhost` with your server's IP address or domain name. To use a custom port, update the `server_port` parameter in the `demo.launch()` function of app.py.
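If the page does not load, it can help to check whether anything is listening on the port at all. The sketch below does that with a plain TCP connection; the helper name `is_port_open` is hypothetical, and the defaults assume the host and port given above.

```python
import socket


def is_port_open(host="localhost", port=7860, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print("reachable" if is_port_open() else "not reachable")
```

For a remote server or a custom port, pass the server's address and port instead of the defaults.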

You can try the demo of ColorFlow on Hugging Face Space.

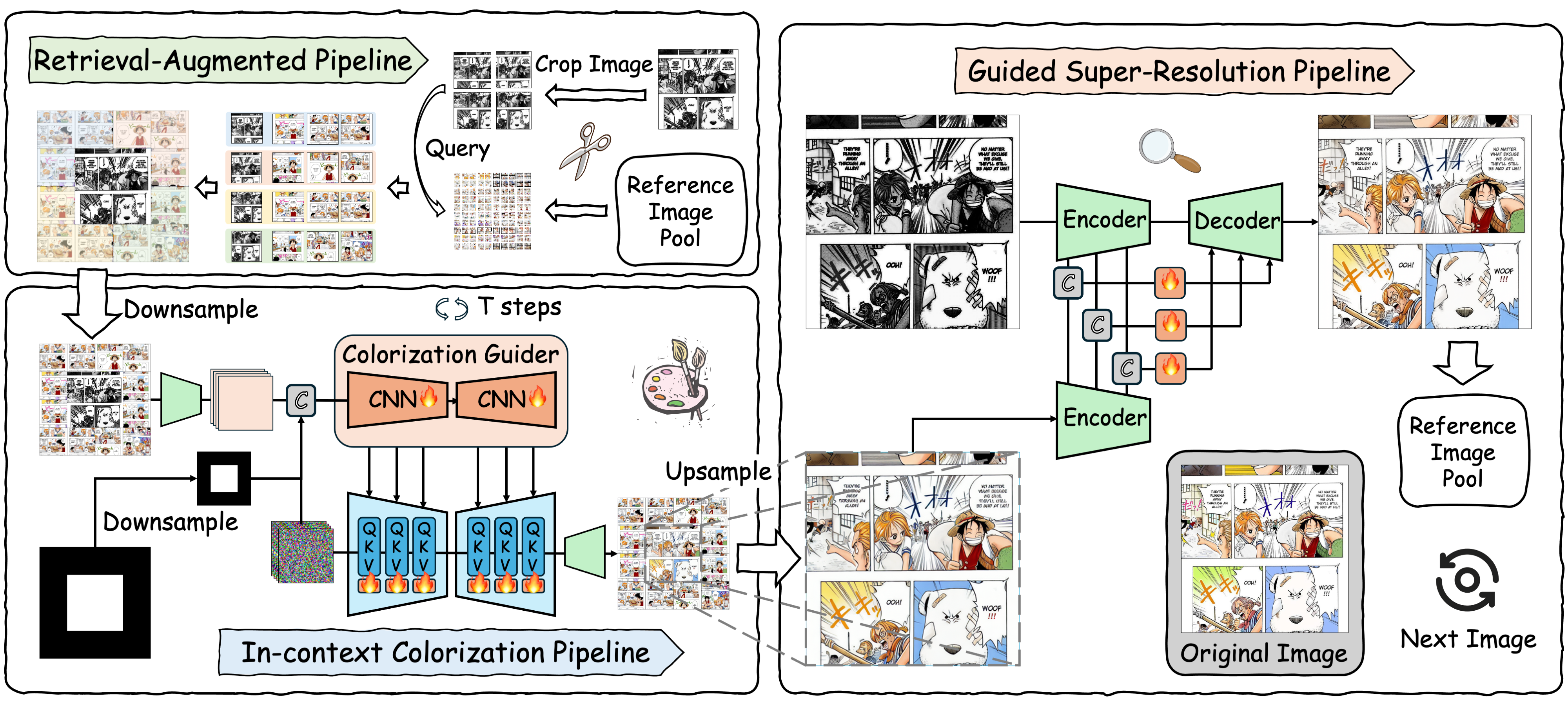

The overview of ColorFlow. This figure presents the three primary components of our framework: the Retrieval-Augmented Pipeline (RAP), the In-context Colorization Pipeline (ICP), and the Guided Super-Resolution Pipeline (GSRP). Each component is essential for maintaining the color identity of instances across black-and-white image sequences while ensuring high-quality colorization.

🤗 We welcome your feedback, questions, or collaboration opportunities. Thank you for trying ColorFlow!

We would like to acknowledge the following open-source projects that have inspired and contributed to the development of ColorFlow:

- ScreenStyle: https://github.com/msxie92/ScreenStyle

- MangaLineExtraction_PyTorch: https://github.com/ljsabc/MangaLineExtraction_PyTorch

We are grateful for the valuable resources and insights provided by these projects.

- Junhao Zhuang

Email: zhuangjh23@mails.tsinghua.edu.cn

```bibtex
@misc{zhuang2024colorflow,
  title={ColorFlow: Retrieval-Augmented Image Sequence Colorization},
  author={Junhao Zhuang and Xuan Ju and Zhaoyang Zhang and Yong Liu and Shiyi Zhang and Chun Yuan and Ying Shan},
  year={2024},
  eprint={2412.11815},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2412.11815},
}
```

Please refer to our license file for more details.