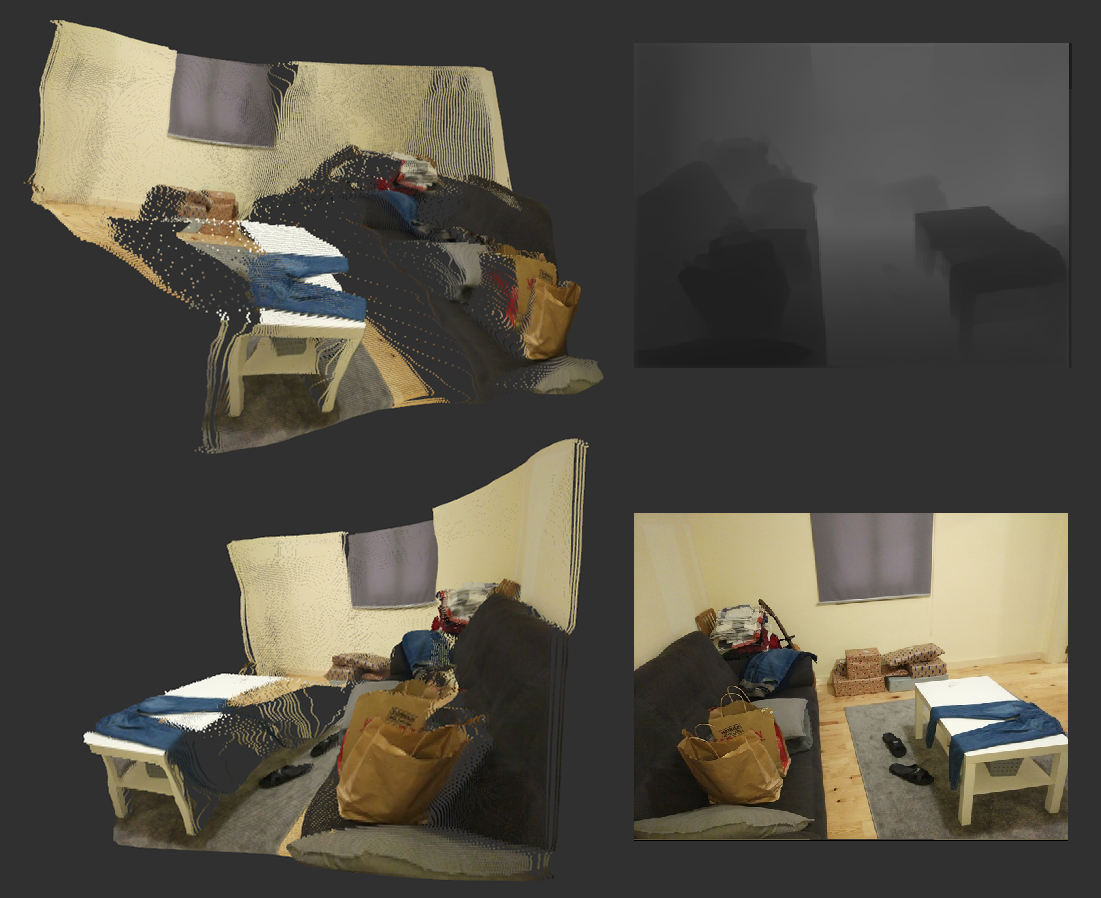

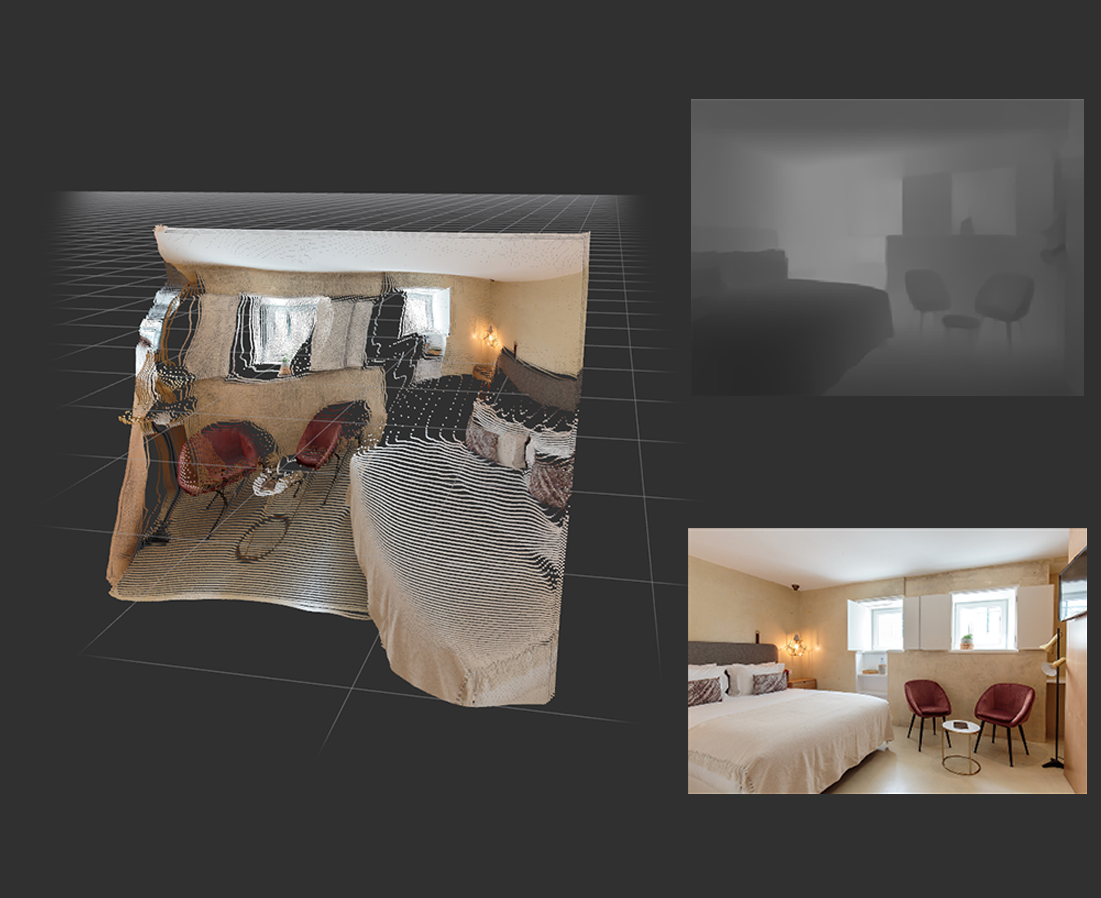

- ROS node used to estimate depth from monocular RGB data.

- Intended for use with Python 2.x and ROS.

- The original code is available in the Dense Depth Original Code repository.

- High Quality Monocular Depth Estimation via Transfer Learning by Ibraheem Alhashim and Peter Wonka

- Topics subscribed to by the ROS node

- /image/camera_raw - Input image from the camera (can be changed with the parameter topic_color).

- Topics published by the ROS node, containing the generated depth and point cloud data.

- /image/depth - Image message containing the estimated depth image (can be changed with the parameter topic_depth).

- /pointcloud - PointCloud2 message containing an estimated point cloud (can be changed with the parameter topic_pointcloud).
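The point cloud is derived from the estimated depth image. The exact method used by the node is not described here; a common approach is to back-project every pixel through a pinhole camera model. The sketch below shows that idea with NumPy, assuming depth in metres and hypothetical intrinsics `fx`, `fy`, `cx`, `cy` (these are not parameters exposed by the node).

```python
import numpy as np


def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project an HxW depth map into an (N, 3) array of XYZ points.

    Uses the standard pinhole model. fx, fy, cx, cy are hypothetical camera
    intrinsics chosen for illustration, not monodepth node parameters.
    """
    height, width = depth.shape
    # Pixel grid: u runs along columns, v along rows (both shaped HxW).
    u, v = np.meshgrid(np.arange(width), np.arange(height))
    z = depth.astype(np.float32)
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    # Row-major flattening: point index = v * width + u.
    points = np.stack((x, y, z), axis=-1).reshape(-1, 3)
    # Drop pixels without a valid (finite, positive) depth.
    valid = np.isfinite(points[:, 2]) & (points[:, 2] > 0)
    return points[valid]


if __name__ == "__main__":
    depth = np.full((4, 6), 2.0, dtype=np.float32)
    pts = depth_to_points(depth, fx=500.0, fy=500.0, cx=3.0, cy=2.0)
    print(pts.shape)  # (24, 3)
```

In a ROS 1 node, an array like this could then be packed into a PointCloud2 message, for example with `sensor_msgs.point_cloud2.create_cloud_xyz32(header, points)`, using `frame_id` in the header.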

- Configurable parameters

- frame_id - TF Frame id to be published in the output messages.

- debug - If set to true, a window displaying the output result is shown.

- min_depth, max_depth - Minimum and maximum depth values used for scaling.

- batch_size - Batch size used when predicting the depth image using the model provided.

- model_file - Keras model file to use, relative to the monodepth package.
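As a rough illustration of how `batch_size`, `min_depth` and `max_depth` could interact, the sketch below runs a model over images in chunks and rescales the output. It assumes the network predicts inverse-normalised depth (depth = `max_depth / output`), as in the DenseDepth reference code; this node's actual post-processing may differ. `predict_fn` is a hypothetical stand-in for the loaded Keras model.

```python
import numpy as np


def predict_depth(predict_fn, images, min_depth=10.0, max_depth=1000.0, batch_size=2):
    """Run a depth network over RGB images and rescale its output.

    predict_fn is a hypothetical callable mapping a (B, H, W, 3) float array
    to (B, H', W', 1) raw network outputs (e.g. a Keras model's predict).
    """
    # Promote a single HxWx3 image to a batch of one.
    if images.ndim == 3:
        images = images[np.newaxis, ...]
    outputs = []
    # Feed the network batch_size images at a time.
    for start in range(0, images.shape[0], batch_size):
        outputs.append(predict_fn(images[start:start + batch_size]))
    raw = np.concatenate(outputs, axis=0)
    # Assumed DenseDepth convention: output = max_depth / depth.
    # np.maximum guards against division by zero.
    depth = max_depth / np.maximum(raw, 1e-6)
    # Clip to [min_depth, max_depth], then scale so max_depth maps to 1.0.
    return np.clip(depth, min_depth, max_depth) / max_depth
```

With a loaded Keras model, `predict_fn` could simply be `model.predict`; note that Keras' `predict` also accepts a `batch_size` argument itself, so the manual chunking here is only to make the role of the parameter explicit.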

- Install Python 2 and ROS dependencies

apt-get install python python-pip curl

pip install rosdep rospkg rosinstall_generator rosinstall wstool vcstools catkin_tools catkin_pkg

- Install project dependencies

pip install tensorflow keras pillow matplotlib scikit-learn scikit-image opencv-python pydot GraphViz tk

- Clone the project into your ROS workspace and download pretrained models

git clone https://github.com/tentone/monodepth.git

cd monodepth/models

curl -o nyu.h5 https://s3-eu-west-1.amazonaws.com/densedepth/nyu.h5

- An example ROS launch entry is provided below for easier integration into your existing ROS launch pipeline.

<node pkg="monodepth" type="monodepth.py" name="monodepth" output="screen" respawn="true">

<param name="topic_color" value="/camera/image_raw"/>

<param name="topic_depth" value="/camera/depth"/>

</node>

- Pre-trained Keras models can be downloaded from the following links and placed in the /models folder:

- NYU Depth V2 (50K)

- The NYU-Depth V2 data set consists of video sequences from a variety of indoor scenes, recorded by both the RGB and depth cameras of the Microsoft Kinect.

- Download dataset (4.1 GB)

- KITTI Dataset (80K)

- Datasets captured by driving around the mid-size city of Karlsruhe, in rural areas and on highways. Up to 15 cars and 30 pedestrians are visible per image.