Welcome to the LLM Response Analysis Framework! This tool is designed to dive deep into Large Language Models (LLMs) and their intriguing responses. Built for researchers, developers, and LLM enthusiasts, the framework offers a way to examine the consistency of LLMs and the agents built on them.


- Dynamic LLM Integration: Seamlessly connect with various LLM providers and models to fetch responses using a flexible architecture. The following integrations are available:

  - OpenAI
  - Groq
  - Ollama

-

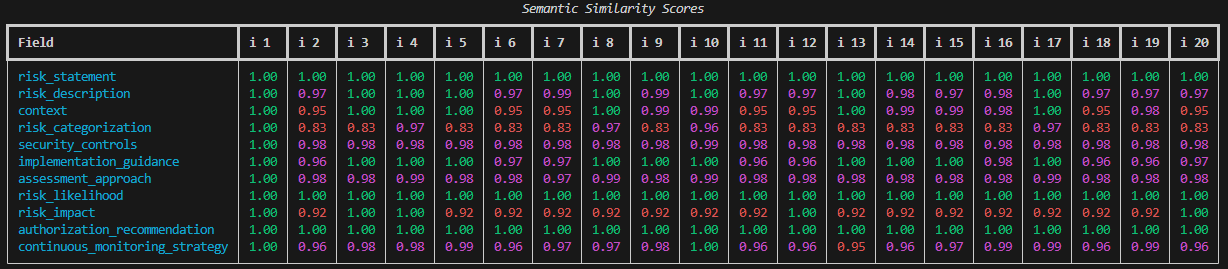

LangChain Structured Output Chain Analysis Seamlessly connect with a LangChain Structured Output and check for the consistency of responses. See this documentation for further information.

- Semantic Similarity Calculation: Understand the nuanced differences between responses by calculating their semantic distances.

- Diverse Response Analysis: Group, count, and analyze responses to highlight both their uniqueness and redundancy.

- Rich Presentation: Use beautiful tables and text differences to present analysis results in an understandable and visually appealing manner.
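To make the grouping and semantic-distance ideas concrete, here is a minimal sketch in plain Python. It is not det's implementation: the function names are hypothetical, the embeddings are made-up three-dimensional vectors rather than output from a real embeddings provider, and cosine distance is an assumed choice of metric.

```python
import math
from collections import Counter


def cosine_distance(a, b):
    """Return 1 - cosine similarity for two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for a zero vector")
    return 1.0 - dot / (norm_a * norm_b)


def summarize(responses, embeddings):
    """Count identical responses and measure each unique one against the most common."""
    counts = Counter(responses)
    baseline, _ = counts.most_common(1)[0]
    # Keep the first embedding seen for each unique response text.
    first_vec = {}
    for text, vec in zip(responses, embeddings):
        first_vec.setdefault(text, vec)
    return [
        (text, n, cosine_distance(first_vec[baseline], first_vec[text]))
        for text, n in counts.most_common()
    ]


# Toy data: hypothetical responses with made-up "embeddings".
responses = ["Paris", "Paris", "The capital is Paris", "Paris"]
embeddings = [[1.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.8, 0.6, 0.0], [1.0, 0.0, 0.0]]

for text, n, dist in summarize(responses, embeddings):
    print(f"{n}x  distance={dist:.3f}  {text}")
```

A distance near zero means a response is semantically close to the most common one; larger values flag responses that drift from it.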

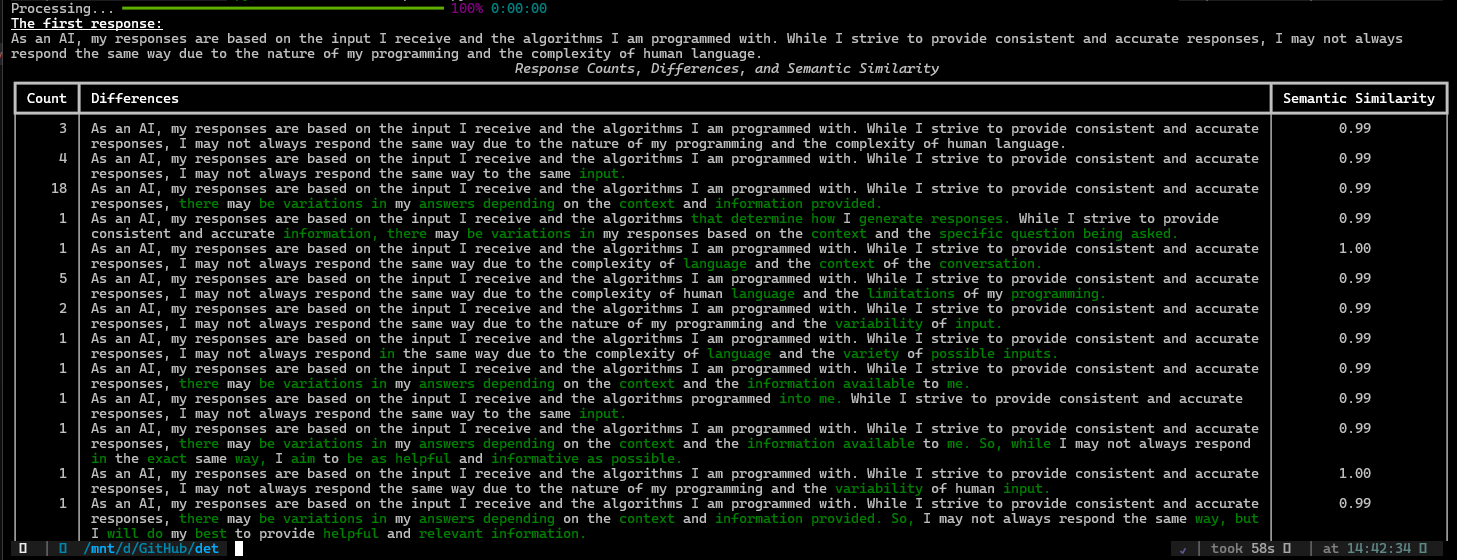

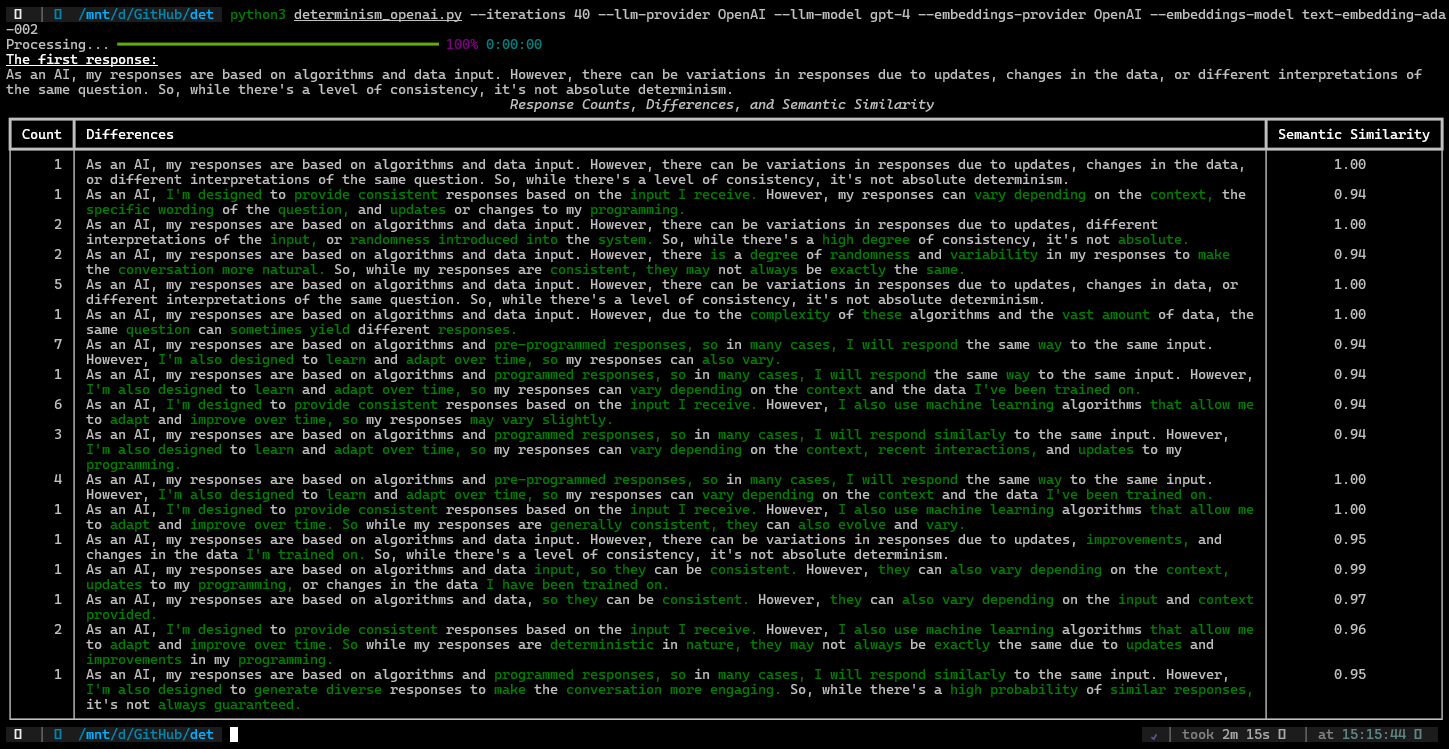

Below are some screenshots showcasing the framework in action:

These visuals provide a glimpse into how the framework processes and presents data from different LLM versions, highlighting the flexibility and depth of analysis possible with this tool.

- Ensure you have Python 3.10 or higher installed on your system.
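If you are unsure which interpreter will be used, a quick check along these lines may help. This is only a sketch; the helper name `python_is_supported` is hypothetical and not part of det.

```python
import sys

MIN_VERSION = (3, 10)  # minimum version stated in this README


def python_is_supported(version_info=sys.version_info, minimum=MIN_VERSION):
    """Return True when the interpreter's (major, minor) meets the minimum."""
    return tuple(version_info[:2]) >= minimum


if python_is_supported():
    print("Python version OK")
else:
    print(f"Python {MIN_VERSION[0]}.{MIN_VERSION[1]}+ is required")
```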

Install det with pipx rather than into your base Python installation. pipx is awesome for installing and running Python applications in isolated environments.

Get pipx here: https://pipx.pypa.io/stable/

Install det using pipx:

pipx install det

I understand that this approach heavily depends on the state of my system, it may not work, and may result in Pythonic module-hell-induced headaches.

Are you sure you want to do this?

I really know what I'm doing or plan on throwing away my computer.

OK, no more checking - fill your boots.

Install det using pip:

pip install det

Before using det, configure your LLM and embeddings provider API keys:

export OPENAI_API_KEY=sk-makeSureThisIsaRealKey

For Groq, configure the Groq client API key:

export GROQ_API_KEY=gsk-DUMMYKEYISTHIS
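A missing key usually only shows up once a request fails, so it can be handy to check the environment first. The sketch below assumes the two variable names exported above; the `REQUIRED_KEYS` mapping and the `missing_keys` helper are hypothetical and not part of det.

```python
import os

# Variable names are the ones exported above; the provider labels are illustrative.
REQUIRED_KEYS = {"OpenAI": "OPENAI_API_KEY", "Groq": "GROQ_API_KEY"}


def missing_keys(providers, env=None):
    """Return the environment variable names that are unset or empty."""
    env = os.environ if env is None else env
    return [REQUIRED_KEYS[p] for p in providers if not env.get(REQUIRED_KEYS[p])]


missing = missing_keys(["OpenAI"])
if missing:
    print("Missing API keys:", ", ".join(missing))
else:
    print("All required API keys are set")
```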

To get a list of all the arguments and their descriptions, use:

det --help

A basic analysis of OpenAI's gpt-4o-mini model:

det check-responses \
  --iterations 2 \
  --llm-provider OpenAI \
  --llm-model gpt-4o-mini \
  --embeddings-provider OpenAI \
  --embeddings-model text-embedding-ada-002

For Groq, set --llm-provider to Groq.

For Ollama, set --llm-provider to Ollama.
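If you want to script runs across providers, the same invocation can be assembled from Python. The sketch below uses only the flags shown above; the helper `build_check_responses_cmd` is hypothetical, and it only prints the command rather than running it.

```python
import shlex


def build_check_responses_cmd(llm_provider, llm_model, iterations=2,
                              embeddings_provider="OpenAI",
                              embeddings_model="text-embedding-ada-002"):
    """Assemble the argument list for `det check-responses`."""
    return [
        "det", "check-responses",
        "--iterations", str(iterations),
        "--llm-provider", llm_provider,
        "--llm-model", llm_model,
        "--embeddings-provider", embeddings_provider,
        "--embeddings-model", embeddings_model,
    ]


cmd = build_check_responses_cmd("OpenAI", "gpt-4o-mini")
print(shlex.join(cmd))
# To execute it, pass the list to subprocess.run(cmd, check=True).
```

Building the command as a list avoids shell quoting problems when a value contains spaces.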

A LangChain Structured Output example:

Note: this requires the prompt details in /resources/prompts.json and a Pydantic output class in /resources/risk_definition.py.

det check-chain \
  --iterations 20 \
  --embeddings-provider OpenAI \
  --embeddings-model text-embedding-ada-002 \
  --prompt-config ./resources/prompts.json \
  --prompt-group RiskDefinition \
  --input-variables-str "risk_statement=There is a risk that failure to enforce multi-factor authentication can cause unauthorized access to user accounts to occur, leading to account takeover that could lead to financial fraud and identity theft issues for customers."
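The `--input-variables-str` value above has the shape `name=value`. As a rough illustration of how such a string could map to a prompt variable, here is a sketch that splits on the first `=`. This is an assumption about the format, not det's actual parser, which may behave differently (for example, by accepting several variables); `parse_input_variables` is a hypothetical name, and the risk statement is shortened for the example.

```python
def parse_input_variables(raw):
    """Split a 'name=value' string on the first '=' into a one-entry dict."""
    name, sep, value = raw.partition("=")
    if not sep or not name.strip():
        raise ValueError("expected input in the form name=value")
    return {name.strip(): value.strip()}


variables = parse_input_variables(
    "risk_statement=There is a risk that failure to enforce multi-factor "
    "authentication can cause unauthorized access to user accounts."
)
print(variables["risk_statement"])
```

Splitting only on the first `=` keeps any later `=` characters inside the value intact.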

- Clone the repository:

git clone https://github.com/thompsonson/det.git
cd det

- Set up the Poetry environment:

poetry install

- Activate the Poetry shell:

poetry shell

You're now ready to start development on the det project!

The documentation is in the module headings. I'll probably move it out at some point but that's good for now :)

For support, please open an issue on the GitHub repository. Contributions are welcome.

This project is licensed under the MIT License - see the LICENSE file for details.