This project performs pose estimation on iOS with Core ML.

If you are interested in iOS + Machine Learning, visit here to see various demos.

| Jointed Keypoints | Heatmaps | Still Image |
|---|---|---|
| | | |

Video source:

| Pose Capturing | Pose Matching |
|---|---|
| | |

- Estimate body pose on an image

- Run inference on the camera's pixel buffer in real time

- Run inference on an image from the photo library

- Visualize as heatmaps

- Visualize as lines and points

- Pose capturing and pose matching
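Drawing keypoints as points and lines, as listed above, requires mapping each heatmap cell to view coordinates. The sketch below shows one way to do that, assuming the input image is stretched to fill the view with no letterboxing and that a keypoint sits at the center of its heatmap cell. `Point2D` and `viewPoint` are hypothetical names used for illustration and are not part of this project.

```swift
// Hypothetical value type for illustration; a real app would likely use CGPoint.
struct Point2D {
    let x: Double
    let y: Double
}

/// Maps a heatmap cell (column, row) to view coordinates.
/// Assumption: the image is stretched to the view, so normalized
/// coordinates scale linearly along each axis.
func viewPoint(column: Int, row: Int,
               gridWidth: Int, gridHeight: Int,
               viewWidth: Double, viewHeight: Double) -> Point2D {
    // Use the cell center (+0.5) rather than its top-left corner.
    let normalizedX = (Double(column) + 0.5) / Double(gridWidth)
    let normalizedY = (Double(row) + 0.5) / Double(gridHeight)
    return Point2D(x: normalizedX * viewWidth, y: normalizedY * viewHeight)
}
```

For example, `viewPoint(column: 3, row: 0, gridWidth: 4, gridHeight: 4, viewWidth: 8, viewHeight: 8)` places the point at the center of the top-right cell of a 4 × 4 grid.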

- Xcode 9.2+

- iOS 11.0+

- Swift 4

Download the temporary models from the following link.

Or

☞ Download the Core ML model model_cpm.mlmodel or hourglass.mlmodel.

Parameters used in https://github.com/tucan9389/pose-estimation-for-mobile:

- `input_name_shape_dict = {"image:0": [1, 192, 192, 3]}`
- `image_input_names = ["image:0"]`
- `output_feature_names = ['Convolutional_Pose_Machine/stage_5_out:0']`

| Model | Size (MB) | Minimum iOS Version | Input Shape | Output Shape |
|---|---|---|---|---|
| cpm | 2.6 | iOS11 | [1, 192, 192, 3] | [1, 96, 96, 14] |
| hourglass | 2 | iOS11 | [1, 192, 192, 3] | [1, 48, 48, 14] |
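The output shapes above describe one heatmap per keypoint (14 channels). A common way to turn such heatmaps into keypoints is to take the highest-scoring cell of each channel. The sketch below illustrates that idea under explicit assumptions: the output has been copied into a flat `[Float]` in row, column, channel order with the batch dimension dropped. The converted Core ML model may expose a different memory layout, so check it before reusing the indexing. `Keypoint` and `decodeKeypoints` are hypothetical names, not part of this project.

```swift
// Hypothetical result type for illustration.
struct Keypoint {
    let x: Int            // column in the heatmap grid
    let y: Int            // row in the heatmap grid
    let confidence: Float // heatmap value at that cell
}

/// Returns the highest-scoring cell of each keypoint channel.
/// Assumption: `heatmaps` is flattened in [row, column, channel] order.
func decodeKeypoints(heatmaps: [Float], height: Int, width: Int, channels: Int) -> [Keypoint] {
    precondition(heatmaps.count == height * width * channels, "unexpected buffer size")
    var result: [Keypoint] = []
    result.reserveCapacity(channels)
    for c in 0..<channels {
        var best = Keypoint(x: 0, y: 0, confidence: -Float.greatestFiniteMagnitude)
        for y in 0..<height {
            for x in 0..<width {
                let value = heatmaps[(y * width + x) * channels + c]
                if value > best.confidence {
                    best = Keypoint(x: x, y: y, confidence: value)
                }
            }
        }
        result.append(best)
    }
    return result
}
```

For the cpm model this would be called with `height: 96, width: 96, channels: 14`, and for hourglass with `height: 48, width: 48, channels: 14`. Cells with a low confidence can be filtered out before drawing.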

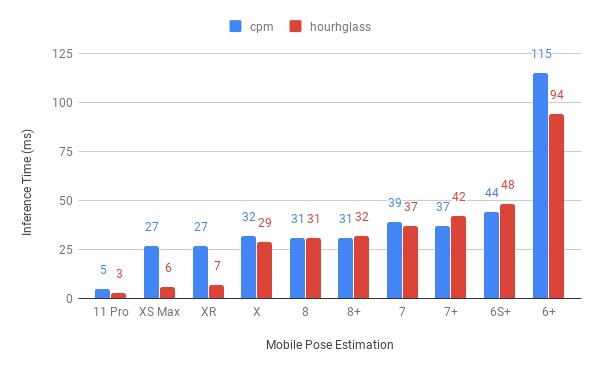

| Model vs. Device | 11 Pro | XS Max | XR | X | 8 | 8+ | 7 | 7+ | 6S+ | 6+ |
|---|---|---|---|---|---|---|---|---|---|---|
| cpm | 5 | 27 | 27 | 32 | 31 | 31 | 39 | 37 | 44 | 115 |
| hourglass | 3 | 6 | 7 | 29 | 31 | 32 | 37 | 42 | 48 | 94 |

| Model vs. Device | 11 Pro | XS Max | XR | X | 8 | 8+ | 7 | 7+ | 6S+ | 6+ |
|---|---|---|---|---|---|---|---|---|---|---|
| cpm | 23 | 39 | 40 | 46 | 47 | 45 | 55 | 58 | 56 | 139 |
| hourglass | 23 | 15 | 15 | 38 | 40 | 40 | 48 | 55 | 58 | 106 |

| Model vs. Device | 11 Pro | XS Max | XR | X | 8 | 8+ | 7 | 7+ | 6S+ | 6+ |
|---|---|---|---|---|---|---|---|---|---|---|
| cpm | 15 | 23 | 23 | 20 | 20 | 21 | 17 | 16 | 16 | 6 |
| hourglass | 15 | 23 | 23 | 24 | 23 | 23 | 19 | 16 | 15 | 8 |

Or you can use your own PoseEstimation model

Once you import the model, Xcode automatically generates a model helper class in the build path. Access the model by creating an instance of that helper class rather than through the build path.

No external library yet.

```swift
import UIKit
import Vision

class ViewController: UIViewController {
    // The typealias target (model_cpm) must match the .mlmodel file name.
    typealias EstimationModel = model_cpm

    var request: VNCoreMLRequest!
    var visionModel: VNCoreMLModel!

    override func viewDidLoad() {
        super.viewDidLoad()

        // Wrap the generated Core ML model for use with Vision.
        guard let model = try? VNCoreMLModel(for: EstimationModel().model) else {
            fatalError("Failed to load the Core ML model")
        }
        visionModel = model
        request = VNCoreMLRequest(model: visionModel, completionHandler: visionRequestDidComplete)
        // Stretch the input to the model's input size, ignoring aspect ratio.
        request.imageCropAndScaleOption = .scaleFill
    }

    func visionRequestDidComplete(request: VNRequest, error: Error?) {
        // Post-process the inference results here.
    }
}
```

```swift
// At the point of inference (pixelBuffer is a CVPixelBuffer, e.g. a camera frame)
let handler = VNImageRequestHandler(cvPixelBuffer: pixelBuffer)
try? handler.perform([request])
```

1. Download the cpm or hourglass model for Core ML from the tucan9389/pose-estimation-for-mobile repo.

2. Fix the model name in PoseEstimation_CoreMLTests.swift.

3. Press ⌘ + U or click the Build for Testing icon.

- motlabs/iOS-Proejcts-with-ML-Models: The challenge using machine learning model created from tensorflow on iOS

- tucan9389/PoseEstimation-TFLiteSwift: The pose estimation with TensorFlowLiteSwift pod for iOS (Preparing...)

- edvardHua/PoseEstimationForMobile: TensorFlow project for pose estimation for mobile

- tucan9389/pose-estimation-for-mobile: forked from edvardHua/PoseEstimationForMobile

- tucan9389/FingertipEstimation-CoreML: iOS project for fingertip estimation using CoreML.