Rembg is a tool to remove image backgrounds.

If this project has helped you, please consider making a donation.

PhotoRoom Remove Background API

https://photoroom.com/api

Fast and accurate background remover API.

python: >3.7, <3.13

CPU support:

pip install rembg # for library

pip install rembg[cli] # for library + cli

GPU support:

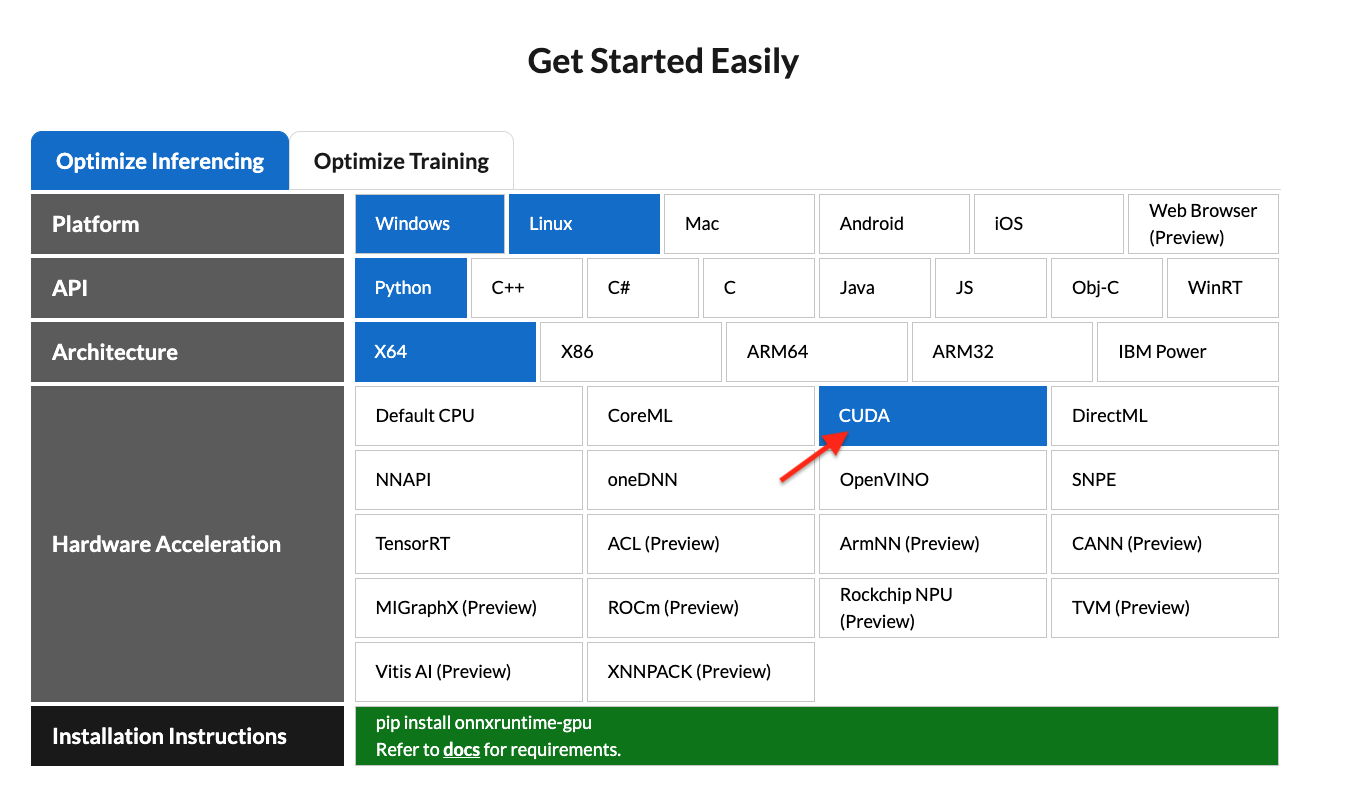

First, check whether your system supports onnxruntime-gpu.

Go to https://onnxruntime.ai and check the installation matrix.

If it does, run:

pip install rembg[gpu] # for library

pip install rembg[gpu,cli] # for library + cli

After installation, you can use rembg by typing rembg in your terminal window.
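If you installed the GPU variant, you can check that the onnxruntime Python is actually importing exposes a GPU execution provider. The sketch below is a hypothetical helper, not part of rembg; it assumes a CUDA build, which reports CUDAExecutionProvider. Other GPU builds report different provider names, so pass the one that matches your platform.

```python
def gpu_provider_available(provider: str = "CUDAExecutionProvider") -> bool:
    """Return True if onnxruntime is importable and exposes `provider`.

    Hypothetical helper; defaults to the CUDA provider name (an assumption
    about your GPU build).
    """
    try:
        # Both onnxruntime and onnxruntime-gpu install the `onnxruntime` module
        import onnxruntime as ort
    except ImportError:
        # onnxruntime is not installed in this environment
        return False
    return provider in ort.get_available_providers()


if __name__ == "__main__":
    print("GPU provider available:", gpu_provider_available())
```

If this prints False after installing rembg[gpu], a CPU-only onnxruntime may be shadowing the GPU build in the same environment.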

The rembg command has 4 subcommands, one for each input type:

- i for files

- p for folders

- s for HTTP server

- b for RGB24 pixel binary stream

You can get help about the main command using:

rembg --help

You can also get help about each subcommand using:

rembg <COMMAND> --help

The i subcommand is used when input and output are files.

Remove the background from a remote image

curl -s http://input.png | rembg i > output.png

Remove the background from a local file

rembg i path/to/input.png path/to/output.png

Remove the background using a specific model

rembg i -m u2netp path/to/input.png path/to/output.png

Remove the background and return only the mask

rembg i -om path/to/input.png path/to/output.png

Remove the background and apply alpha matting

rembg i -a path/to/input.png path/to/output.png

Passing extra parameters
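The -x value must reach rembg as a single JSON-encoded argument. When you call the CLI from a script, letting json.dumps produce that string avoids hand-quoting mistakes. The sketch below rebuilds the SAM command from the example that follows as an argument list suitable for subprocess; build_rembg_command is a hypothetical helper, and the prompt values are copied from that example.

```python
import json
import shlex


def build_rembg_command(model, extras, input_path, output_path):
    """Build an argv list for `rembg i` with a JSON-encoded -x value.

    Hypothetical helper for illustration; it is not part of rembg.
    """
    return [
        "rembg", "i",
        "-m", model,
        "-x", json.dumps(extras),  # one argv entry, so no shell quoting is needed
        input_path, output_path,
    ]


sam_extras = {
    "sam_prompt": [{"type": "point", "data": [724, 740], "label": 1}],
}
cmd = build_rembg_command(
    "sam", sam_extras, "examples/plants-1.jpg", "examples/plants-1.out.png"
)

# Show the equivalent shell command; pass `cmd` to subprocess.run(cmd, check=True) to run it
print(shlex.join(cmd))
```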

SAM example

rembg i -m sam -x '{ "sam_prompt": [{"type": "point", "data": [724, 740], "label": 1}] }' examples/plants-1.jpg examples/plants-1.out.png

Custom model example

rembg i -m u2net_custom -x '{"model_path": "~/.u2net/u2net.onnx"}' path/to/input.png path/to/output.png

The p subcommand is used when input and output are folders.

Remove the background from all images in a folder

rembg p path/to/input path/to/output

Same as before, but watch the input folder for new or changed files to process

rembg p -w path/to/input path/to/output

The s subcommand is used to start an HTTP server.

rembg s --host 0.0.0.0 --port 7000 --log_level info

For the complete endpoint documentation, go to http://localhost:7000/api.
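With the server running, you can call the same /api/remove endpoint that the curl examples use from Python with only the standard library. This is a minimal sketch that assumes the host and port from the command above and the url query parameter from the curl example; build_remove_url and remove_remote_image are hypothetical names. Using urlencode percent-encodes the image URL, so characters such as & or ? inside it cannot break the query string.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumes the server was started with --port 7000 on this machine
BASE_URL = "http://localhost:7000/api/remove"


def build_remove_url(image_url, base_url=BASE_URL):
    """Return the GET URL that asks the server to process `image_url`."""
    # urlencode percent-encodes the value, so '&' or '?' in image_url stay intact
    return f"{base_url}?{urlencode({'url': image_url})}"


def remove_remote_image(image_url, output_path, base_url=BASE_URL):
    """Fetch the processed image from the running server and save it to disk."""
    with urlopen(build_remove_url(image_url, base_url)) as response:
        data = response.read()
    with open(output_path, "wb") as f:
        f.write(data)


if __name__ == "__main__":
    # Only builds the URL; call remove_remote_image(...) to actually hit the server
    print(build_remove_url("http://input.png"))
```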

Remove the background from an image url

curl -s "http://localhost:7000/api/remove?url=http://input.png" -o output.png

Remove the background from an uploaded image

curl -s -F file=@/path/to/input.jpg "http://localhost:7000/api/remove" -o output.png

The b subcommand processes a sequence of RGB24 images from stdin. It is intended to be used with another program, such as FFMPEG, that writes RGB24 pixel data to stdout, which is then piped into the stdin of rembg, although nothing prevents you from typing images into stdin manually.

rembg b image_width image_height -o output_specifier

Arguments:

- image_width : width of input image(s)

- image_height : height of input image(s)

- output_specifier: printf-style specifier for output filenames. For example, with output-%03u.png the output files will be named output-000.png, output-001.png, output-002.png, etc. Output files are saved in PNG format regardless of the extension specified. You can omit it to write results to stdout.
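Judging from the output-000.png, output-001.png example, the specifier is applied to a zero-based frame counter. Python's %-formatting accepts the same %03u conversion, so you can preview the file names a run will produce. expected_output_names is a hypothetical helper for illustration, not part of rembg.

```python
def expected_output_names(specifier, count):
    """Expand a printf-style specifier for the first `count` frames (0-based)."""
    # In Python's %-formatting, %u is an obsolete alias for %d, so %03u zero-pads to 3 digits
    return [specifier % index for index in range(count)]


print(expected_output_names("output-%03u.png", 3))
# ['output-000.png', 'output-001.png', 'output-002.png']
```

The width in %03u is a minimum, so frame 1000 becomes output-1000.png rather than being truncated.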

Example usage with FFMPEG:

ffmpeg -i input.mp4 -ss 10 -an -f rawvideo -pix_fmt rgb24 pipe:1 | rembg b 1280 720 -o folder/output-%03u.png

The width and height values must match the dimensions of the output images from FFMPEG. Note that for FFMPEG, the "-an -f rawvideo -pix_fmt rgb24 pipe:1" part is required for this to work.
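The dimensions matter because a raw RGB24 stream has no headers: each frame is exactly width * height * 3 bytes (one byte each for red, green, and blue), and the only way to find frame boundaries is to count bytes. The sketch below illustrates that framing with the standard library; it is not rembg's own reader, and iter_rgb24_frames is a hypothetical name.

```python
import io
from typing import BinaryIO, Iterator


def iter_rgb24_frames(stream: BinaryIO, width: int, height: int) -> Iterator[bytes]:
    """Yield consecutive raw RGB24 frames (width * height * 3 bytes each)."""
    frame_size = width * height * 3
    while True:
        # A buffered binary stream keeps reading until frame_size bytes or EOF
        frame = stream.read(frame_size)
        if not frame:
            return  # clean end of stream
        if len(frame) < frame_size:
            # A short final frame usually means width/height do not match the stream
            raise ValueError(f"incomplete frame: got {len(frame)} of {frame_size} bytes")
        yield frame


# Two 2x1 frames: 2 * 1 * 3 = 6 bytes each
data = io.BytesIO(bytes(range(12)))
frames = list(iter_rgb24_frames(data, width=2, height=1))
print(len(frames))  # 2
```

To read FFMPEG output from a pipe you would pass sys.stdin.buffer with the same width and height given to ffmpeg; a mismatch shows up as misaligned frames or an incomplete final frame.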

Input and output as bytes

from rembg import remove

input_path = 'input.png'
output_path = 'output.png'

# Read the raw image bytes, remove the background, and write the PNG result
with open(input_path, 'rb') as i:
    with open(output_path, 'wb') as o:
        input_data = i.read()
        output = remove(input_data)
        o.write(output)

Input and output as a PIL image

from rembg import remove
from PIL import Image

input_path = 'input.png'
output_path = 'output.png'

# remove() accepts a PIL image and returns a PIL image with transparency
input_image = Image.open(input_path)
output = remove(input_image)
output.save(output_path)

Input and output as a numpy array

from rembg import remove
import cv2

input_path = 'input.png'
output_path = 'output.png'

# remove() accepts a numpy array and returns a numpy array with an alpha channel
input_array = cv2.imread(input_path)
output = remove(input_array)
cv2.imwrite(output_path, output)

How to iterate over files in a performant way

from pathlib import Path
from rembg import remove, new_session

# Create the session once and reuse it, so the model is loaded only one time
session = new_session()

for file in Path('path/to/folder').glob('*.png'):
    # Skip results written by this or a previous run (they also match *.png)
    if file.stem.endswith('.out'):
        continue

    input_path = str(file)
    output_path = str(file.parent / (file.stem + ".out.png"))

    with open(input_path, 'rb') as i:
        with open(output_path, 'wb') as o:
            input_data = i.read()
            output = remove(input_data, session=session)
            o.write(output)

To see a full list of examples on how to use rembg, go to the examples page.

To use rembg with Docker, replace the rembg command with docker run danielgatis/rembg.

Try this:

docker run -v path/to/input:/rembg danielgatis/rembg i input.png path/to/output/output.png

All models are downloaded and saved in the .u2net directory inside the user's home folder.
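To see which models are already cached, you can list the .onnx files in that directory. This sketch assumes the default ~/.u2net location and that models are stored as .onnx files, as the ~/.u2net/u2net.onnx path in the custom model example suggests. File names may not map one-to-one to model names (SAM, for instance, has separate encoder and decoder downloads). downloaded_models is a hypothetical helper.

```python
from pathlib import Path
from typing import List, Optional


def downloaded_models(model_dir: Optional[Path] = None) -> List[str]:
    """Return the stems of the .onnx files in the model folder.

    Assumes the default folder ~/.u2net; pass model_dir to inspect another one.
    """
    folder = Path(model_dir) if model_dir is not None else Path.home() / ".u2net"
    if not folder.is_dir():
        return []  # nothing has been downloaded yet
    return sorted(p.stem for p in folder.glob("*.onnx"))


if __name__ == "__main__":
    print(downloaded_models())
```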

The available models are:

- u2net (download, source): A pre-trained model for general use cases.

- u2netp (download, source): A lightweight version of u2net model.

- u2net_human_seg (download, source): A pre-trained model for human segmentation.

- u2net_cloth_seg (download, source): A pre-trained model for clothes parsing from a human portrait. Clothes are parsed into 3 categories: upper body, lower body, and full body.

- silueta (download, source): Same as u2net but the size is reduced to 43 MB.

- isnet-general-use (download, source): A new pre-trained model for general use cases.

- isnet-anime (download, source): A high-accuracy segmentation model for anime characters.

- sam (download encoder, download decoder, source): A pre-trained model for any use case.

If you need more fine-tuned models, try this: danielgatis#193 (comment)

- https://www.youtube.com/watch?v=3xqwpXjxyMQ

- https://www.youtube.com/watch?v=dFKRGXdkGJU

- https://www.youtube.com/watch?v=Ai-BS_T7yjE

- https://www.youtube.com/watch?v=D7W-C0urVcQ

- https://arxiv.org/pdf/2005.09007.pdf

- https://github.com/NathanUA/U-2-Net

- https://github.com/pymatting/pymatting

This library depends directly on onnxruntime. Therefore, support for a new Python version can only be added once onnxruntime supports that version.

Liked some of my work? Buy me a coffee (or more likely a beer)

Copyright (c) 2020-present Daniel Gatis

Licensed under MIT License