This repository implements teleoperation of the Unitree humanoid robot using Apple Vision Pro.

The following robots are supported or planned:

| 🤖 Robot | ⚪ Status | 📝 Remarks |
|---|---|---|
| G1 (29DoF) + Dex3-1 | ✅ Completed | main branch |
| H1 (Arm 4DoF) | ⏱ In Progress | Refer to this branch's ik temporarily |
| H1_2 (Arm 7DoF) + Inspire | ✅ Completed | The original h1_2 branch has become stale, and the original g1 branch has been renamed to the main branch. The main branch now supports both g1 and h1_2. |
| ··· | ··· | ··· |

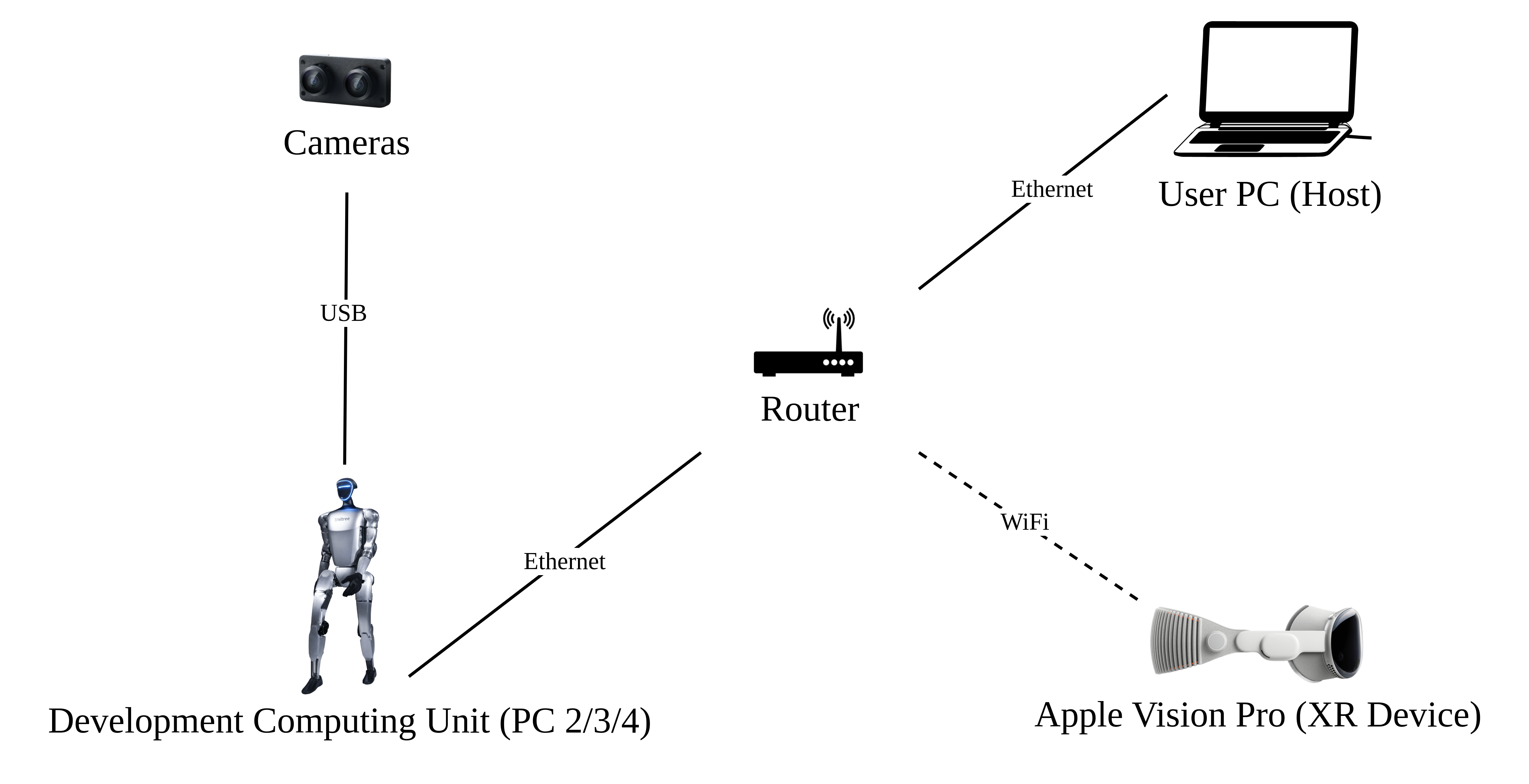

Here are the required devices and wiring diagram:

We tested our code on Ubuntu 20.04 and Ubuntu 22.04; other operating systems may need to be configured differently.

For more information, refer to the Official Documentation and OpenTeleVision.

unitree@Host:~$ conda create -n tv python=3.8

unitree@Host:~$ conda activate tv

# If you use `pip install`, make sure the pinocchio version is 3.1.0

(tv) unitree@Host:~$ conda install pinocchio -c conda-forge

(tv) unitree@Host:~$ pip install meshcat

(tv) unitree@Host:~$ pip install casadi

p.s. The identifiers in front of each command are the shell prompt. They indicate which device and directory the command should be executed on.

In the Ubuntu system's ~/.bashrc file, the default configuration is: PS1='${debian_chroot:+($debian_chroot)}\u@\h:\w\$ '

Taking the command `(tv) unitree@Host:~$ pip install meshcat` as an example:

- `(tv)` indicates that the shell is in the conda environment named `tv`.

- `unitree@Host:~` shows that the user (`\u`) `unitree` is logged into the device (`\h`) `Host`, with the current working directory (`\w`) being `$HOME`.

- `$` shows that the current shell is Bash (for non-root users).

- `pip install meshcat` is the command that `unitree` wants to execute on `Host`.

You can refer to Harley Hahn's Guide to Unix and Linux and the Conda User Guide to learn more.
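To make the prompt convention above concrete, here is a minimal sketch that splits a line such as `(tv) unitree@Host:~$ pip install meshcat` into its parts. The regular expression and the function name `split_prompt` are illustrative assumptions, not part of this repository, and the pattern only covers the default Ubuntu `PS1` layout described above.

```python
import re

# Matches lines of the form "(env) user@host:cwd$ command".
# The "(env)" prefix is optional because prompts on PC2 are shown without it.
PROMPT_RE = re.compile(
    r"^(?:\((?P<env>[^)]+)\)\s*)?"  # optional conda environment, e.g. (tv)
    r"(?P<user>[^@\s]+)@"           # user name (\u)
    r"(?P<host>[^:\s]+):"           # host name (\h)
    r"(?P<cwd>[^$#]+)"              # working directory (\w)
    r"(?P<sigil>[$#])\s*"           # $ for regular users, # for root
    r"(?P<command>.*)$"             # the command to run
)


def split_prompt(line):
    """Return a dict with env, user, host, cwd, sigil and command."""
    match = PROMPT_RE.match(line.strip())
    if match is None:
        raise ValueError(f"not a recognised prompt line: {line!r}")
    return match.groupdict()


if __name__ == "__main__":
    parts = split_prompt("(tv) unitree@Host:~$ pip install meshcat")
    for name, value in parts.items():
        print(f"{name}: {value}")
```

Only the `command` part is meant to be typed; the other fields tell you where to type it.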

# Install unitree_sdk2_python.

(tv) unitree@Host:~$ git clone https://github.com/unitreerobotics/unitree_sdk2_python.git

(tv) unitree@Host:~$ cd unitree_sdk2_python

(tv) unitree@Host:~/unitree_sdk2_python$ pip install -e .

p.s. The unitree_dds_wrapper in the original h1_2 branch was a temporary version. It has now been fully migrated to the official Python-based control and communication library: unitree_sdk2_python.

(tv) unitree@Host:~$ cd ~

(tv) unitree@Host:~$ git clone https://github.com/unitreerobotics/avp_teleoperate.git

(tv) unitree@Host:~$ cd ~/avp_teleoperate

(tv) unitree@Host:~/avp_teleoperate$ pip install -r requirements.txt

Apple does not allow WebXR on non-HTTPS connections. To test the application locally, we need to create a self-signed certificate and install it on the client. You need an Ubuntu machine and a router. Connect the Apple Vision Pro and the Ubuntu Host machine to the same router.

- install mkcert: https://github.com/FiloSottile/mkcert

- check Host machine local ip address:

(tv) unitree@Host:~/avp_teleoperate$ ifconfig | grep inet

Suppose the local IP address of the Host machine is 192.168.123.2.

p.s. You can use the `ifconfig` command to check your Host machine's IP address.

- create certificate:

(tv) unitree@Host:~/avp_teleoperate$ mkcert -install && mkcert -cert-file cert.pem -key-file key.pem 192.168.123.2 localhost 127.0.0.1

Place the generated cert.pem and key.pem files in teleop:

(tv) unitree@Host:~/avp_teleoperate$ cp cert.pem key.pem ~/avp_teleoperate/teleop/

- open firewall on server:

(tv) unitree@Host:~/avp_teleoperate$ sudo ufw allow 8012

- install ca-certificates on Apple Vision Pro:

(tv) unitree@Host:~/avp_teleoperate$ mkcert -CAROOT

Copy the rootCA.pem via AirDrop to Apple Vision Pro and install it.

Settings > General > About > Certificate Trust Settings. Under "Enable full trust for root certificates", turn on trust for the certificate.

In visionOS 2, this step is different: after copying the certificate to the Apple Vision Pro device via AirDrop, a certificate-related information section will appear below the account bar in the top left corner of the Settings app. Tap it to enable trust for the certificate.

Settings > Apps > Safari > Advanced > Feature Flags > Enable WebXR Related Features.
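As a sanity check on the certificate steps above, it can help to confirm that the cert.pem and key.pem files generated by mkcert load as a matching pair before putting on the headset. The following is a minimal sketch using Python's standard `ssl` module; the function name `cert_pair_loads` is hypothetical and not part of this repository, and the file paths in the usage line assume the files were copied to `teleop` as described.

```python
import ssl


def cert_pair_loads(cert_file, key_file):
    """Return True if the certificate and private key load as a TLS server identity."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    try:
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    except OSError:
        # Covers missing files (FileNotFoundError) as well as ssl.SSLError,
        # which is raised for malformed PEM data or a key that does not match.
        return False
    return True


if __name__ == "__main__":
    # Assumed location, following the cp command above.
    ok = cert_pair_loads("teleop/cert.pem", "teleop/key.pem")
    print("certificate pair OK" if ok else "certificate pair could not be loaded")
```

This only checks that the files are readable and consistent with each other. It does not check that the Apple Vision Pro trusts the root CA, which still has to be verified on the device.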

This step is to verify that the environment is installed correctly.

Coming soon.

Please read the Official Documentation at least once before starting this program.

Copy image_server.py from the avp_teleoperate/teleop/image_server directory to the Development Computing Unit PC2 of the Unitree robot (G1/H1/H1_2/etc.), and execute the following commands on PC2:

# p.s.1 You can transfer image_server.py to PC2 via the scp command and then use ssh to remotely login to PC2 to execute it.

# Assuming the IP address of the development computing unit PC2 is 192.168.123.164, the transmission process is as follows:

# log in to PC2 via SSH and create the folder for the image server

(tv) unitree@Host:~$ ssh unitree@192.168.123.164 "mkdir -p ~/image_server"

# Copy the local image_server.py to the ~/image_server directory on PC2

(tv) unitree@Host:~$ scp ~/avp_teleoperate/teleop/image_server/image_server.py unitree@192.168.123.164:~/image_server/

# p.s.2 Currently, this image transmission program supports two methods for reading images: OpenCV and Realsense SDK. Please refer to the comments in the `ImageServer` class within `image_server.py` to configure your image transmission service according to your camera hardware.

# Now located in Unitree Robot PC2 terminal

unitree@PC2:~/image_server$ python image_server.py

# You can see the terminal output as follows:

# {'fps': 30, 'head_camera_type': 'opencv', 'head_camera_image_shape': [480, 1280], 'head_camera_id_numbers': [0]}

# [Image Server] Head camera 0 resolution: 480.0 x 1280.0

# [Image Server] Image server has started, waiting for client connections...

After the image service has started, you can use image_client.py in the Host terminal to test whether the communication is successful:

(tv) unitree@Host:~/avp_teleoperate/teleop/image_server$ python image_client.py

Note: If the selected robot configuration does not use the Inspire dexterous hand (Gen1), please ignore this section.

You can refer to Dexterous Hand Development to configure related environments and compile control programs. First, use this URL to download the dexterous hand control interface program. Copy it to PC2 of Unitree robots.

On Unitree robot's PC2, execute command:

unitree@PC2:~$ sudo apt install libboost-all-dev libspdlog-dev

# Build project

unitree@PC2:~$ cd h1_inspire_service && mkdir build && cd build

unitree@PC2:~/h1_inspire_service/build$ cmake .. -DCMAKE_BUILD_TYPE=Release

unitree@PC2:~/h1_inspire_service/build$ make

# Terminal 1. Run h1 inspire hand service

unitree@PC2:~/h1_inspire_service/build$ sudo ./inspire_hand -s /dev/ttyUSB0

# Terminal 2. Run example

unitree@PC2:~/h1_inspire_service/build$ ./h1_hand_example

If both hands open and close continuously, it indicates success. Once successful, close the ./h1_hand_example program in Terminal 2.

Everyone must keep a safe distance from the robot to prevent any potential danger!

Please make sure to read the Official Documentation at least once before running this program.

Always make sure that the robot has entered debug mode (L2+R2) to stop the motion control program; this avoids potential command conflicts.

It's best to have two operators to run this program, referred to as Operator A and Operator B.

First, Operator B needs to perform the following steps:

- Modify the `img_config` image client configuration under the `if __name__ == '__main__':` section in `~/avp_teleoperate/teleop/teleop_hand_and_arm.py`. It should match the image server parameters you configured on PC2 in Section 3.1.

- Choose different launch parameters based on your robot configuration:

# 1. G1 (29DoF) Robot + Dex3-1 Dexterous Hand (Note: G1_29 is the default value for --arm, so it can be omitted)

(tv) unitree@Host:~/avp_teleoperate/teleop$ python teleop_hand_and_arm.py --arm=G1_29 --hand=dex3

# 2. G1 (29DoF) Robot only

(tv) unitree@Host:~/avp_teleoperate/teleop$ python teleop_hand_and_arm.py

# 3. H1_2 Robot (Note: The first-generation Inspire Dexterous Hand is currently only supported in the H1_2 branch. Support for the Main branch will be added later.)

(tv) unitree@Host:~/avp_teleoperate/teleop$ python teleop_hand_and_arm.py --arm=H1_2

# 4. If you want to enable data visualization + recording, you can add the --record option

(tv) unitree@Host:~/avp_teleoperate/teleop$ python teleop_hand_and_arm.py --record

- If the program starts successfully, the terminal will pause at the final line displaying the message: "Please enter the start signal (enter 'r' to start the subsequent program):"
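For the first step above, where the client's `img_config` has to agree with the image server parameters, a small comparison can catch mismatches before launch. This is a minimal sketch under assumptions: the server dictionary is copied from the example terminal output shown earlier, the client dictionary is a made-up example with a deliberate error, and `config_mismatches` is a hypothetical helper rather than a function from this repository. Only keys present on both sides are compared, since the two configurations may not carry exactly the same set of keys.

```python
def config_mismatches(server_cfg, client_cfg):
    """Return {key: (server_value, client_value)} for shared keys whose values differ."""
    shared_keys = set(server_cfg) & set(client_cfg)
    return {
        key: (server_cfg[key], client_cfg[key])
        for key in sorted(shared_keys)
        if server_cfg[key] != client_cfg[key]
    }


# Values taken from the example image server output.
server_cfg = {
    "fps": 30,
    "head_camera_type": "opencv",
    "head_camera_image_shape": [480, 1280],
    "head_camera_id_numbers": [0],
}

# Hypothetical client configuration with a deliberately wrong image shape.
client_cfg = {
    "head_camera_type": "opencv",
    "head_camera_image_shape": [480, 640],
    "head_camera_id_numbers": [0],
}

if __name__ == "__main__":
    for key, (server_value, client_value) in config_mismatches(server_cfg, client_cfg).items():
        print(f"{key}: server={server_value!r} client={client_value!r}")
```

An empty result means the shared settings agree; any printed key should be corrected in `img_config` before starting the teleoperation program.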

And then, Operator A needs to perform the following steps:

- Wear your Apple Vision Pro device.

- Open Safari on Apple Vision Pro and visit: https://192.168.123.2:8012?ws=wss://192.168.123.2:8012

p.s. This IP address should match the IP address of your Host machine.

- Click `Enter VR` and `Allow` to start the VR session.

- You will see the robot's first-person perspective in the Apple Vision Pro.
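Because the address opened in Safari repeats the Host IP and port twice, it is easy to update one half and forget the other. The sketch below builds the URL from a single IP address; `teleop_url` is a hypothetical helper, and the default port 8012 is taken from the firewall step and the example URL above.

```python
import ipaddress


def teleop_url(host_ip, port=8012):
    """Build the Safari URL for the given Host machine IP address."""
    # Raises ValueError if host_ip is not a valid IPv4 or IPv6 address.
    ipaddress.ip_address(host_ip)
    return f"https://{host_ip}:{port}?ws=wss://{host_ip}:{port}"


if __name__ == "__main__":
    print(teleop_url("192.168.123.2"))
```

The IP address passed in should be one of the addresses the certificate was created for in the mkcert step.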

Next, Operator B can start the teleoperation program by pressing the r key in the terminal.

At this time, Operator A can remotely control the robot's arms (and dexterous hands).

If the --record parameter is used, Operator B can press the s key in the opened "record image" window to start recording data, and press s again to stop. This can be repeated as needed for multiple recordings.

p.s.1 Recorded data is stored in `avp_teleoperate/teleop/utils/data` by default, with usage instructions at this repo: unitree_IL_lerobot.

p.s.2 Please pay attention to your disk space during data recording.
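Regarding disk space, one option is to check the free space on the recording drive before starting a session. The following is a minimal sketch using the standard library; the helper names and the 10 GiB threshold are arbitrary assumptions for illustration and are not values from this repository.

```python
import shutil


def free_gib(path="."):
    """Return the free space, in GiB, on the filesystem that contains path."""
    return shutil.disk_usage(path).free / 2**30


def enough_space(path=".", min_free_gib=10.0):
    """Return True if at least min_free_gib GiB are free (the threshold is an assumption)."""
    return free_gib(path) >= min_free_gib


if __name__ == "__main__":
    # Point this at the data directory, e.g. avp_teleoperate/teleop/utils/data.
    target = "."
    print(f"{free_gib(target):.1f} GiB free")
    if not enough_space(target):
        print("Warning: low disk space, consider freeing space before recording.")
```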

To exit the program, Operator B can press the q key in the 'record image' window.

To avoid damaging the robot, it's best to ensure that Operator A positions the robot's arms in a naturally lowered or appropriate position before Operator B presses q to exit.

avp_teleoperate/

│

├── assets [Storage of robot URDF-related files]

│

├── teleop

│ ├── image_server

│ │ ├── image_client.py [Used to receive image data from the robot image server]

│ │ ├── image_server.py [Capture images from cameras and send via network (Running on robot's on-board computer)]

│ │

│ ├── open_television

│ │ ├── television.py [Using Vuer to capture wrist and hand data from apple vision pro]

│ │ ├── tv_wrapper.py [Post-processing of captured data]

│ │

│ ├── robot_control

│ │ ├── robot_arm_ik.py [Inverse kinematics of the arm]

│ │ ├── robot_arm.py [Control dual arm joints and lock the others]

│ │ ├── robot_hand_inspire.py [Control inspire hand joints]

│ │ ├── robot_hand_unitree.py [Control unitree hand joints]

│ │

│ ├── utils

│ │ ├── episode_writer.py [Used to record data for imitation learning]

│ │ ├── mat_tool.py [Some small math tools]

│ │ ├── weighted_moving_filter.py [For filtering joint data]

│ │

│ └── teleop_hand_and_arm.py [Startup execution code for teleoperation]

| Item | Quantity | Link | Remarks |
|---|---|---|---|
| Unitree Robot G1 | 1 | https://www.unitree.com/g1 | With development computing unit |
| Apple Vision Pro | 1 | https://www.apple.com/apple-vision-pro/ | |
| Router | 1 | | |
| User PC | 1 | | Recommended graphics card performance at RTX 4080 and above |
| Head Stereo Camera | 1 | [For reference only] http://e.tb.cn/h.TaZxgkpfWkNCakg?tk=KKz03Kyu04u | For head |
| Head Camera Mount | 1 | https://github.com/unitreerobotics/avp_teleoperate/blob/g1/hardware/head_stereo_camera_mount.STEP | For mounting head stereo camera, FOV 130° |
| Intel RealSense D405 | 2 | https://www.intelrealsense.com/depth-camera-d405/ | For wrist |
| Wrist Ring Mount | 2 | https://github.com/unitreerobotics/avp_teleoperate/blob/g1/hardware/wrist_ring_mount.STEP | Used with wrist camera mount |
| Left Wrist Camera Mount | 1 | https://github.com/unitreerobotics/avp_teleoperate/blob/g1/hardware/left_wrist_D405_camera_mount.STEP | For mounting left wrist RealSense D405 camera |
| Right Wrist Camera Mount | 1 | https://github.com/unitreerobotics/avp_teleoperate/blob/g1/hardware/right_wrist_D405_camera_mount.STEP | For mounting right wrist RealSense D405 camera |
| M3 hex nuts | 4 | [For reference only] https://a.co/d/1opqtOr | For wrist fastener |
| M3x12 screws | 4 | [For reference only] https://amzn.asia/d/aU9NHSf | For wrist fastener |
| M3x6 screws | 4 | [For reference only] https://amzn.asia/d/0nEz5dJ | For wrist fastener |
| M4x14 screws | 2 | [For reference only] https://amzn.asia/d/cfta55x | For head fastener |
| M2x4 self-tapping screws | 4 | [For reference only] https://amzn.asia/d/1msRa5B | For head fastener |

Note: The bolded items are essential equipment for teleoperation tasks, while the other items are optional equipment for recording datasets.

| Item | Simulation | Real | |
|---|---|---|---|
| Head | Head Mount | Side View of Assembly | Front View of Assembly |
| Wrist | Wrist Ring and Camera Mount | Left Hand Assembly | Right Hand Assembly |

Note: The wrist ring mount should align with the seam of the robot's wrist, as shown by the red circle in the image.

This code builds upon the following open-source codebases. Please visit the URLs to see the respective licenses:

- https://github.com/OpenTeleVision/TeleVision

- https://github.com/dexsuite/dex-retargeting

- https://github.com/vuer-ai/vuer

- https://github.com/stack-of-tasks/pinocchio

- https://github.com/casadi/casadi

- https://github.com/meshcat-dev/meshcat-python

- https://github.com/zeromq/pyzmq

- https://github.com/unitreerobotics/unitree_dds_wrapper

- https://github.com/tonyzhaozh/act

- https://github.com/facebookresearch/detr

- https://github.com/Dingry/BunnyVisionPro

- https://github.com/unitreerobotics/unitree_sdk2_python