
signatures should be on quoted graphs #1248

Comments

|

I also had a look at Example 2: A simple example of a verifiable presentation.

@prefix cred: <https://www.w3.org/2018/credentials#> .

@prefix credEx: <http://example.edu/credentials/> .

@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .

@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .

@prefix sec: <https://w3id.org/security#> .

@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> .

@prefix sch: <http://schema.org/> .

@prefix dc: <http://purl.org/dc/terms/> .

credEx:1872 sec:proof {

[] rdf:type sec:RsaSignature2018 ;

dc:created "2017-06-18T21:19:10Z"^^xsd:dateTime ;

sec:jws "eyJhbGciOiJSUzI1NiIsImI2NCI6ZmFsc2UsImNyaXQiOlsiYjY0Il19..TCYt5XsITJX1CxPCT8yAV-TVkIEq_PbChOMqsLfRoPsnsgw5WEuts01mq-pQy7UJiN5mgRxD-WUcX16dUEMGlv50aqzpqh4Qktb3rk-BuQy72IFLOqV0G_zS245-kronKb78cPN25DGlcTwLtjPAYuNzVBAh4vGHSrQyHUdBBPM" ;

sec:proofPurpose sec:assertionMethod ;

sec:verificationMethod

<https://example.edu/issuers/565049#key-1> .

} .

[] a cred:VerifiablePresentation ;

cred:verifiableCredential {

<did:example:ebfeb1f712ebc6f1c276e12ec21>

sch:alumniOf [ id <did:example:c276e12ec21ebfeb1f712ebc6f1>

sch:name "Example University"@en, "Exemple d'Université"@fr

] .

credEx:1872 a cred:VerifiableCredential,

<https://example.org/examples#AlumniCredential>;

cred:credentialSubject

<did:example:ebfeb1f712ebc6f1c276e12ec21> .

credEx:1872

cred:issuanceDate "2010-01-01T19:23:24Z"^^xsd:dateTime ;

cred:issuer <https://example.edu/issuers/565049> .

} ;

sec:proof {

[] a sec:RsaSignature2018 ;

dc:created "2018-09-14T21:19:10Z"^^xsd:dateTime ;

sec:challenge "1f44d55f-f161-4938-a659-f8026467f126" ;

sec:domain "4jt78h47fh47" ;

sec:jws "eyJhbGciOiJSUzI1NiIsImI2NCI6ZmFsc2UsImNyaXQiOlsiYjY0Il19..kTCYt5XsITJX1CxPCT8yAV-TVIw5WEuts01mq-pQy7UJiN5mgREEMGlv50aqzpqh4Qq_PbChOMqsLfRoPsnsgxD-WUcX16dUOqV0G_zS245-kronKb78cPktb3rk-BuQy72IFLN25DYuNzVBAh4vGHSrQyHUGlcTwLtjPAnKb78" ;

sec:proofPurpose sec:authenticationMethod ;

sec:verificationMethod

<did:example:ebfeb1f712ebc6f1c276e12ec21#keys-1> .

} .

This makes more sense because we are linking a graph of verifiable credentials and a signature. But it seems like the signature need not be placed in a graph. It is an acknowledged statement of fact. And indeed, it is a bit weird because the signature is a blank node, so the proof is a statement in which one has to find the only blank node and continue from there to find the proof. The context could have been avoided and a link made directly to the blank node.

|

The issue was discussed in a meeting on 2023-08-23

3.2. N3 rendering of Verifiable Claims Examples problems (issue vc-data-model#1248)

See github issue vc-data-model#1248.

Manu Sporny: This has to do with the 'verifiable credential graph' language that some would like, and also relates to the diagrams. I think Henry got it right, and suggested some post-CR modifications we could make.

Brent Zundel: I'm not hearing any objections to this being post-CR.

|

@iherman reported

Thanks for letting me know about that discussion. |

|

|

|

Just as an addendum note to @iherman, @brentzundel and @msporny: more importantly, I argue that the JsonLd that is currently produced won't do. It is related to how graphs get merged, which is an essential property of RDF: merging true graphs should lead to true graphs. Consider the graph reached by the current jsonld conversion. Now consider merging this graph with another true graph. This could quickly render the signature meaningless. Take, for example, the true graph

<did:example:ebfeb1f712ebc6f1c276e12ec21> foaf:name "Pat Hayes";

foaf:publications <https://scholar.google.com/citations?sortby=pubdate&hl=en&user=VTNr4NMAAAAJ&view_op=list_works> .

If we merged that to the representation where only the signature is a surface, the new graph would consist of the intact green surface, the other data and those three triples on the same default surface. I.e. we have the following graph, and it would no longer be possible to know what was signed. Compare this to the merging of the simplified model of §4 with the true Pat Hayes graph. Here we can still verify the Credential Graph using the Proof object, and if we still believe it, then we can merge the content of the pink surface and the rest without problem. So that provides a simple, pragmatic reason for why the current translation is not good.
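The merging argument can be sketched in a few lines of Python. This is only an illustration under stated assumptions: a graph is modelled as a frozenset of (subject, predicate, object) tuples, a quoted graph is a frozenset used as a node, and the predicate `ex:signs` and the helper `recover_signed` are hypothetical names that appear in no specification.

```python
# Hypothetical model: a triple is a (subject, predicate, object) tuple and a
# graph is a frozenset of triples. A quoted graph is a frozenset used as a node.
SUBJECT = "did:example:ebfeb1f712ebc6f1c276e12ec21"

credential = frozenset({
    ("credEx:1872", "rdf:type", "cred:VerifiableCredential"),
    ("credEx:1872", "cred:credentialSubject", SUBJECT),
    (SUBJECT, "sch:alumniOf", "did:example:c276e12ec21ebfeb1f712ebc6f1"),
})

other_true_graph = frozenset({
    (SUBJECT, "foaf:name", "Pat Hayes"),
    (SUBJECT, "foaf:publications", "https://tinyurl.com/patHayesScholar"),
})

# Case 1: the signed triples sit in the default graph, so a merge is a plain
# set union and the boundary of what was signed disappears.
flat_merge = credential | other_true_graph

# Case 2: the signed triples are quoted as one node ("ex:signs" is made up).
quoted_merge = frozenset({("_:proof", "ex:signs", credential)}) | other_true_graph


def recover_signed(graph):
    """Return every quoted graph that appears in object position."""
    return [o for (_, _, o) in graph if isinstance(o, frozenset)]
```

In the first case nothing distinguishes the five merged triples from one another; in the second, the quoted graph survives the merge intact and can still be handed to a verifier.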

|

@bblfish thanks for the very detailed issue report; the diagrams were very helpful.

Yes, exactly. My initial response to you (which I deleted, but you still responded to) was incorrect, please discard that one and read this one instead. :) I had to read through your issue multiple times in an attempt to understand the content at depth. I'm fairly convinced I'm still missing something, so apologies for the overly verbose reply. Just to establish some other content that might help you navigate this: For securing a Verifiable Credential using Data Integrity (what you delve into above), you might want to familiarize yourself with the Data Integrity specification and at least one of the cryptosuite specifications, such as an EdDSA Cryptosuite that uses RDF Dataset Canonicalization. Namely, the following are further detailed across these specifications:

What I'm trying to elaborate upon above is that we have put a lot of thought and time into ensuring that the proper boundaries are created around the default RDF Dataset, the There is a boundary to the information that is contained in a VP or a VC, and that boundary ends at the document you receive. Since we're using RDF Datasets, you /can/ make inferences across those boundaries if objects are identified using URLs, but merging RDF Datasets isn't something one should do without careful consideration (such as, there are no labeled blank nodes in either RDF Dataset that might conflict). I do expect that we could do better to explain the above, but at the same time, remember that we have a number of people in the ecosystem that neither care about these formal modelling details or want to know about them. The more RDF we put into the specification, the more agitated a subset of the community becomes... so we've always struggled to provide just the right level of information, especially at the beginning of the specification, without opening up the flood gates into graph theory and formal semantics... which, people using this technology, don't need to know about... just like how someone operating a vehicle doesn't need to understand the physics behind it all... you just press one pedal to go and another pedal to stop. All that said, we do have to do at least the following:

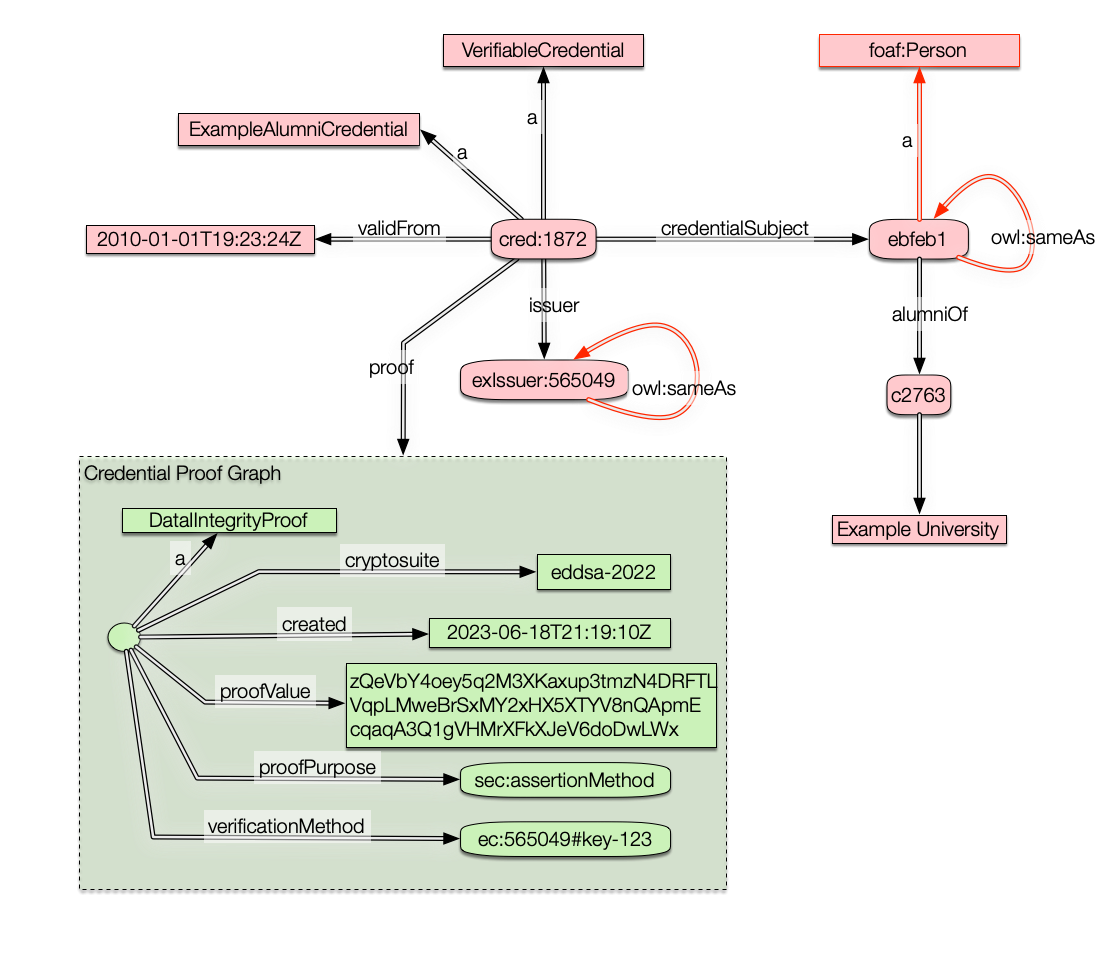

In the meantime, try this example instead, which is from the latest spec (EXAMPLE 1): {

"@context": [

"https://www.w3.org/ns/credentials/v2",

"https://www.w3.org/ns/credentials/examples/v2"

],

"id": "http://university.example/credentials/1872",

"type": ["VerifiableCredential", "ExampleAlumniCredential"],

"issuer": "https://university.example/issuers/565049",

"validFrom": "2010-01-01T19:23:24Z",

"credentialSubject": {

"id": "did:example:ebfeb1f712ebc6f1c276e12ec21",

"alumniOf": {

"id": "did:example:c276e12ec21ebfeb1f712ebc6f1",

"name": "Example University"

}

},

"proof": {

"type": "DataIntegrityProof",

"cryptosuite": "eddsa-2022",

"created": "2023-06-18T21:19:10Z",

"proofPurpose": "assertionMethod",

"verificationMethod": "https://university.example/issuers/565049#key-123",

"proofValue": "zQeVbY4oey5q2M3XKaxup3tmzN4DRFTLVqpLMweBrSxMY2xHX5XTYV8nQApmEcqaqA3Q1gVHMrXFkXJeV6doDwLWx"

}

}

I'll stop there and see if you have more concrete guidance on what we could do with the above.

|

Thanks, Manu, for the detailed response. Let me start with your final suggestion to look at examples 1 and 2 from the 2.0 spec (I was looking at the 1.1 data model before).

So, I started with the Example 1 JsonLD and, using the JSLd Playground, transformed it into NQuads and then by hand to Example 1 N3. (It definitely would save a huge amount of time if @jeswr added his JS N3 lib to the JS playground as per comment.) The resulting RDF was structurally no different than the 1.1 spec. Within a node of the default RDF graph, we have a Credential Proof Graph. We colored that node green here to match the spec colors. When a node contains a graph, we can call it a surface. (The default graph is on the default surface, too, if you think of every RDF graph as located on a single hidden node, which is just another way to say that it could be quoted in a larger context.)

I then translated the Json from Example 2 using the same procedure (and using Jen3) to an n3 file, which makes it easier to see the semantic structure. I drew that out as a diagram. The problem here is less serious than above because both the credential graph and the presentation proof graph are wrapped in a node. (The proof does not need to be wrapped as above.) Still, once the presentation proof is verified, we can unwrap the verifiable credential graph and pass it on to another agent who may want to verify the credential: but it will be stumped again because it won't be able to tell what is signed.

That is just like with cryptography: we sign a stringlike object. In RDF1.0, these graphs-in-nodes would have been rdf:XMLLiteral with an RDF/XML type. All we are doing here is showing more clearly the internal structure of such nodes.

Agree. My argument above works because I start with the assumption that I fully trust two graphs that I wanted to merge. I used this graph as an example:

<did:example:ebfeb1f712ebc6f1c276e12ec21> foaf:name "Pat Hayes";

foaf:publications <https://tinyurl.com/patHayesScholar> .

We assume those two triples are true. It is a law of RDF that when true graphs are merged the new conjoined graph will also be true. So given those two essential rules of RDF that are millions of times easier to understand than cryptographic algorithms, if we were to merge the Example 1 graph with the two triples above, we would get the following graph:

But there is an even better example. Every graph with a node But the answer to this is very simple:

Here 1 is essential. 2 would just simplify your data structure. I say that, betting that you can't find a good reason not just to use a blank node. Given those points, what you want is the RDF or JSON/LD for this diagram: Is that problematic to express in JSON-LD? |

|

@bblfish @msporny (and others) -- Please note that color coding will not be sufficient to mark elements of diagrams in our published documents, for a11y reasons. Dashed or otherwise differentiated lines and borders, and different kinds of B&W (or other high contrast) shading of interiors, is preferred. Specifically regarding the draft diagrams above, @bblfish, I would like to have some border around each entire dataset. (The "page color" background is fine.) |

|

Btw, I put a lot of energy into making the diagrams also work in Dark Mode so that people can read them at night and still see the arrows. Someone who reads the above diagrams and does not see color would not lose out on the information presented. A color-blind person would see it more like the computer sees it: the only thing of import are graphs composed of arrows and nodes, with values on the nodes, which can be strings, integers, and other literals, blank nodes, URIs, or graphs recursively. So the yellow-colored nodes that belong to the claim graph in Figure 6 of the spec don't belong to the claim graph if they are not themselves inside a node that distinguishes them from the rest of the graph, as in many of the examples above. What colors a node is the context in which it is located. I just kept the color of the nodes as a visual reminder of their intended role... (a debatable decision, I agree). As for placing the whole graph inside a default node, i.e., having a border around the whole node, I think that would be better left to the document portraying the diagram: the outer context is there to be drawn out by information that is outside the context of the graph: i.e., another graph that mentions these graph, by placing them in a node. In the language of RDF Surfaces all current RDF graphs are on a positive surface, and a positive surface on a positive surface makes a positive surface, i.e., the border can be erased. Now for the moment, these diagrams are here not to be placed inside a spec but to explain the problem with the current encoding of VCs, which I hope will be fixed :-) |

|

The charter limits us to securing VCs and similar documents which put data in the default graph and has worked this way for a decade; and that data must be signed. This informs the design. We were specifically asked not to try and create a general solution for all linked data, but rather this specific profile of "well-bound documents". When previously signed data (like VCs) is attached to other graphs, we do put it into a graph container, but we expect it to be removed and put back into a default graph for independent verification. Future work could extend things further, but that's not what was on the table for this WG. |

|

@dlongley, charter interpretation is a fine art that requires many different contexts to be brought together. A key criterion is that what you come up with has to be coherent with the existing specs, such as JSON, JWK, JsonLd, and therefore, RDF and RDF semantics. My impression is that you have been working very closely with Json and the cryptography community, who don't care at all about the semantic web side. In your time working on these specs, you learned from them and produced something that makes them happy. That is a great feat. But clearly, people have not been seriously looking at the semantic side at all. First, in the 1.1 Data Model spec, there were a lot of basic mistakes in the RDF generated from the examples, which shows that the spec was not tested in depth. (For the IETF Signing HTTP Messages Spec, I ran tests on all the examples (of a version ~17), to make sure the signatures were correct). A charter cannot order you to do what breaks existing specs, nor can it order you to build logically incoherent systems. When push comes to shove on an issue like this, the charter would have to give ground to RDF semantics. But, in any case, I could not find the text in the charter which supports your statement. I think you may want to keep the JSON format stable if possible. So one should search for a solution as far as possible to see if JsonLD provides tools to give the right semantics and make the Json folks happy too. Otherwise one may have to make a slight adjustment at the syntax level. Currently from the RDF perspective, it is not possible to really tell what is being signed. As a result, it is not really possible to verify a signature if one is serious about the Semantic side. I really don't think it can be a big step to get these things right. |

Indeed. And it only gets accepted once it has specific text that certain parties, for whatever reason, agree to -- in the face of potential objections. It's quite true that all of the various concerns raised are not always easily gleaned from the charter text directly. Often these concerns can then only be more deeply understood from reading long conversations on various public mailing lists or meeting minutes. Even then, the potential objections aren't necessarily understood by all parties but if the text is agreed to, the group moves on without dwelling on them or trying to fully understand all facets.

I would not say that. It may be true that other portions have received proportionally more attention. And a number of mistakes were made in areas that were known to not have been well tested, especially with RSA signatures. This work has been dropped. As you know, producing work items in working groups can be very challenging as a number of different competing concerns must be reconciled. And no work item output is ever perfect. But this is why work continues on in future revisions and with new iterations of working groups -- each with their own hard timelines and hard fought outputs.

This is not true: for well-bound datasets (such as VCs), for proof sets, everything in the default graph is signed over as is the contents of each individual proof (separately). This does imply a constraint, as mentioned above and that you also reference, that you cannot merge data into a singular default graph without knowing where the boundaries are unless they are tracked independently, such as they are, for example, with externalized JSON structure. But improving on this further is a different issue for another WG if anyone is able to create a charter that would allow working on that problem without objections. Whether such work can get sufficient support and consensus from competing communities with goals that are not perfectly aligned -- in order to produce acceptable output -- is also an open question. |
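The statement that the default graph and the contents of each proof are signed over separately can be sketched as follows. This is a rough illustration only: it assumes a single proof object, and it uses sorted-key JSON as a stand-in for RDF Dataset Canonicalization, which it is not. The function names `canonical_bytes` and `hash_inputs` are hypothetical, and the exact inputs a real cryptosuite hashes are defined by the Data Integrity and cryptosuite specifications, not by this sketch.

```python
import hashlib
import json


def canonical_bytes(value):
    # Stand-in only: sorted-key JSON is NOT RDF Dataset Canonicalization.
    return json.dumps(value, sort_keys=True, separators=(",", ":")).encode("utf-8")


def hash_inputs(secured_document):
    """Return (document hash, proof options hash) for a document with one proof."""
    # Everything except the proof is treated as the signed document.
    document = {k: v for k, v in secured_document.items() if k != "proof"}
    # The proof is hashed on its own, without the signature value itself.
    proof_options = {
        k: v for k, v in secured_document["proof"].items() if k != "proofValue"
    }
    return (
        hashlib.sha256(canonical_bytes(document)).hexdigest(),
        hashlib.sha256(canonical_bytes(proof_options)).hexdigest(),
    )


# Abbreviated credential based on EXAMPLE 1; the proofValue is a placeholder.
vc = {
    "id": "http://university.example/credentials/1872",
    "issuer": "https://university.example/issuers/565049",
    "credentialSubject": {"id": "did:example:ebfeb1f712ebc6f1c276e12ec21"},
    "proof": {
        "type": "DataIntegrityProof",
        "proofPurpose": "assertionMethod",
        "proofValue": "z-placeholder",
    },
}
document_hash, proof_hash = hash_inputs(vc)
```

Any extra statement merged into the document part changes the first hash, which is the constraint described above: the boundary of what was signed has to be tracked by whoever stores the data.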

|

Let's do this a step at a time @dlongley . What text in the Verifiable Credentials WG charter do you believe supports your position that the signature must be of the default graph. |

|

I don't think any text requires it directly. I think the charter that was going to be created to pursue security of RDF outside of the context of specifically signing VCs, which do use the default graph, was strenuously objected to and instead the work was limited to securing VCs and similar well-bound documents. |

|

@dlongley "I don't think any text requires it directly." I looked at example 1 Verifiable Credential from the 1.1 spec and the 2.0 spec, and both map to RDF graphs which are logically equivalent to one with an extra You have no problem using "named" graphs since you use one for the signature - the surface in green above. We can solve all your problems by moving the named graph around so that the triples to be signed are in the context, as shown here So our question should be:

|

For the reasons I stated in the rest of my comment: #1248 (comment)

No, I do not believe so.

I don't think there is interest in taking on this kind of change -- especially given what I mentioned in my comment -- at this time. The feeling is that we will have to make more incremental progress instead and take on this sort of thing, if it's desirable (gets to consensus), in a future WG. |

|

A particular JSON-LD document is well bound. They presume everything is signed according to the securing mechanism's documentation. This works and there are implementations that use it today without issue. Extending the wrappers around what is signed into the information space as you have recommended would be great. We just can't do it right now, there are a number of practical (but not strictly technical) limitations that are in the way. |

Yes, because by doing the merge in the way that you've done it, you've violated the "well bound dataset" requirement that this WG is under. IOW, you shouldn't do the merge BEFORE checking signatures, you should do it AFTER checking signatures (where you're fine if the data is co-mingled)... and if you're not fine w/ co-mingling the data, you should put it into a separate graph (as you say). Processing rules could then take the quads and put them into a default graph to check the signature (if you wanted the proof to live on w/ the data). A future WG, more focused on RDF, could tackle the concern you raised but, as @dlongley stated above, doing so in this WG is 1) out of scope for this WG to address, and 2) using the VC Data Model and Data Integrity in a way that it was not intended to be used, 3) entirely preventable if you don't process it in the way that you are, and 4) achievable if you do some post-processing on the RDF Dataset to add/remove the boundaries that you'd like. Does the above make sense, @bblfish? |
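The workflow described here (keep signed data in its own graph while it is stored next to other data, then move it back to a default graph to check the signature) might look like the sketch below. It assumes quads are plain (subject, predicate, object, graph) tuples with `None` standing for the default graph; the helpers `wrap`, `unwrap` and `default_graph` and the graph label `_:vc1` are hypothetical.

```python
# A quad is (subject, predicate, object, graph); graph None is the default graph.
SUBJECT = "did:example:ebfeb1f712ebc6f1c276e12ec21"


def default_graph(quads):
    """Return the triples that sit in the default graph."""
    return {(s, p, o) for (s, p, o, g) in quads if g is None}


def wrap(triples, graph_name):
    """Place triples in a named graph so they can be stored next to other data."""
    return {(s, p, o, graph_name) for (s, p, o) in triples}


def unwrap(quads, graph_name):
    """Move one named graph back to the default graph for verification."""
    return {(s, p, o, None) for (s, p, o, g) in quads if g == graph_name}


signed = {
    ("credEx:1872", "cred:issuer", "https://example.edu/issuers/565049"),
    ("credEx:1872", "cred:credentialSubject", SUBJECT),
}
other = {(SUBJECT, "foaf:name", "Pat Hayes", None)}

# Store the signed triples under a label instead of co-mingling them.
store = wrap(signed, "_:vc1") | other

# Before checking the signature, rebuild the default graph that was signed.
to_verify = default_graph(unwrap(store, "_:vc1"))
```

Here `to_verify` holds exactly the triples that were signed, while the unrelated triple stays in the store's default graph.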

|

@msporny I don't see a definition of "well bound dataset" in the VC Data Model spec 2.0. We should have a brainstorming session because we are confronted here with a clear problem and a reasonable solution and yet a strong rejection on your part that is mostly procedurally based. I feel that behind that lies a technical problem that is being kept quiet. I am left having to guess what the real underlying technical problem is. My guess is informed by discussions some ten years ago, where, if I remember correctly, a few people warned about the problem you were getting yourself into here. But at the time, if I remember correctly, the answer was that JSON-LD would solve the problem. And perhaps it can... So here is my guess - tell me if I am right. You want to be able to add signatures to JSON without changing the JSON parsers so that they can see the content without having to read the signature, perhaps first. (One can glean that from how you build up your examples in the VC Data Integrity spec, where you start with

{

"title": "Hello world!"

};

and then add the "proof" next to the title.) Is that right?

Political, social, and procedural issues are in the way here. The only technical issues have to do with what has already been mentioned right here in this issue, which is that VCs use JSON-LD in a JSON-idiomatic way and those always use the default graph. You could express what you're looking for in a non-JSON-idiomatic way (using As mentioned many times, the biggest hurdle is not technical. Rather, it is more complex; it is related to those parties that very strenuously objected to doing anything around RDF security that wasn't strictly tied to VCs, which again, are expressed in JSON-LD format and always use the default graph to ensure idiomatic JSON, which is, again partly for political and social reasons, and not strictly technical in nature. We simply cannot do what you're asking at this time for procedural, social, and political reasons. Technical solutions alone are not possible. A number of different WGs with members that are able to come to consensus on a variety of issues all within the same timeline is what is required to do what you want. Unfortunately, that's not where we are today. A technical solution can always be invented to just about any of these problems if those other issues fade away. |

|

I understand what you are saying, @dlongley, but my point is that your RDF model is so clearly wrong that it is the equivalent of pushing people to use broken cryptographic systems. No Charter can be used to support such an abuse of a standard you are basing your work on. |

|

I disagree with your assessment.

Not forever, just now. An attempt was already made to do something different that could have included what you are interested in, but a pivot had to be made to focus on the work that is now quickly approaching CR. These things do not happen in a political and social vacuum, getting to consensus is hard, standards are never perfect, and procedural timelines are real. |

|

I would say this issue is close to going on forever now. I brought it up in a January 2016 email "Signature in Wrong Position", and I know this type of problem was brought up years before on various occasions. I just thought that day I should summarise it:

There was a long discussion see thread, but your answer the next day was that the graph could be signed:

I did not want to continue the discussion at that point, as there were too many moving parts. When the Verifiable Credentials Model 1.1 came along, I thought I should check how you had solved the problem, and on seeing Figure 6 with a Credential Graph was pleased that this had been done correctly. I did not have time to check carefully as I did in the last week what the JsonLD corresponded to. The argument put forward most succinctly a few comments up is closely related to what I brought up 7.5 years ago. But it is of course, much clearer now, since I can work from documents you have published, rather than promises of things that were to happen. |

|

Alas, I think this issue should be closed because we cannot properly finish the discussion with Henry 😢. The essence of his message, i.e., that the current diagrams are incorrect, has been taken up in #1318; the rest of the discussion involving N3 and possibly RDF surfaces is to be followed up elsewhere, if needed.

|

Agree, marking pending close. |

This comment was marked as off-topic.


|

Henry's issue was:

...

Diagrams are only one part of it. It would be better if the JSON-LD was written out together with the diagram. Henry has put a lot of work into pointing out a major bug. I think it would be a good one to fix. If it's going to be a WONTFIX, I think it would be good to say that in this issue. |


|

Oops. I have just realized that I have put comments to the wrong issue!! #1248 (comment) and #1248 (comment) and, I suppose, #1248 (comment), should have been added to #1318 |

There is disagreement on whether or not this is a bug. To put it more clearly -- it's not a bug. Here's the answer to the question that Henry posed:

The agent would have to use the same bound document that was used to generate the signature. That is, you can't just take the document and throw it into a quad-store w/o ensuring that you keep all the triples/graphs in some sort of bound structure. Doing that is outside of the scope of the WG, which is why the WG was limited to addressing the problem in bound documents (not arbitrary graph soup databases). So, there is a simple answer to the question posed: If you want to verify the signature, keep the information together, such as just storing the JSON-LD document in a document-oriented database. |

|

@melvincarvalho: Henry himself said, in #1248 (comment):

See also #1248 (comment). We do not have a separate "WONTFIX" label in our repository, but I think that, semantically, this issue falls into this category: at this stage of the WG's work this will not be fixed. Hence, the proposal is to close it. |

|

@iherman I don't believe Henry's use of the word "forever" is to be taken literally. He has been objecting to this since 2016, and the objection dates back even further than that.

I think he is objecting to the issue that he has worked hard to describe, for a very long time, being ignored or swept under the rug, or kicked into the "long grass".

|

|

You may be right, @melvincarvalho, but I believe my comment

reflects the WG's position. |

|

Henry indeed has a point. @uvdsl and I saw the issue too when looking at the verifiable credentials data model with our RDF glasses on. In [1,2], we discuss possible solutions for the issue of how to represent the triples to be signed, with the contestants:

None of the above was without drawbacks (reified triples - referential opacity, named graphs - what are the semantics - are the signed triples asserted?, RDF lists - imposed ordering on the triples, no RDF list - open set of triples ...). N3 quoted graph would also have been a contestant, but also not without problems. We thus agree with Henry that the current state of the standard is iffy from an RDF perspective, thus we hope that the issue gets more attention than a WONTFIX and the standard receives some update, even if it is minor. [1] Christoph Braun, Tobias Käfer "Quantifiable Integrity for Linked Data on the Web", Semantic Web Journal, 2023. https://semantic-web-journal.net/content/quantifiable-integrity-linked-data-web-0 |

Compare this with Henry's comment:

It seems more than saying: "that the current diagrams are incorrect". IMHO it's worth looking at possible solutions, as suggested by @bblfish and @kaefer3000. |

|

The new PR #1326, which has just been raised, is very much relevant for this discussion. Beyond improving the diagrams, it also gives a proper definition of which graphs, i.e., which quads, are signed through the mechanism defined by the proof property. This was indeed missing (or unclear) from the spec so far and, in my view, this is the major issue raised by Henry. The PR does this using the RDF Dataset terminology, which is our only choice, because other solutions, like N3, are not formal standards, nor are they expressible in JSON-LD today. The definition is VC specific, and relies on "out of band" knowledge of the structure of Verifiable Credentials. This is the approach that has been around for a long time, which has been adopted by the Working Group (previous and current), and has already been deployed in products. In other words, the PR does not provide a generic solution for securing linked data, nor does it aim to do so. In an ideal world, a generic solution would have to include, I presume, some sort of "back" relationships from the proof to the original data in some way or other to specify "what" is being signed. But such a generic approach is not what this WG is meant to do, nor is it chartered to do. |

|

Admin: imho this issue should be closed if and when #1326 is finalized and merged to the main spec. To avoid misunderstandings, I have removed the 'pending close' flag, which could be misunderstood. |

I do not believe this is correct Henry's statement:

Would it be correct to say that #1326 are non normative changes (for example to the diagrams)? |

... and I respectfully disagree with this for the specific VC case. It may be correct if the aim is the general solution. But it isn't. Also

is simply not an option for the Working Group.

As I hinted in #1326 (comment), it is actually a borderline. The diagram changes are indeed non-normative. But the changes or, rather, additions in the specification on the usage |

|

PR #1326 has been merged, which was raised to address this issue. Closing. |

In issue 204 on the N3 CG, I tried to translate 2 VC model examples. This revealed a few minor errors and some deeper conceptual problems, which are much easier to see in N3 than in Json-LD. These conceptual problems revolve around what is in a graph context and what is not. The JsonLD seems to place the contexts incorrectly, leading to unclarity about what is and what is not signed.

Update: the problem is perhaps most concisely expressed in a comment below in response to @msporny's feedback.

The N3 notation is to NQuads what Turtle is to NTriples: it makes it easy to read and reduces repetition for the author and the reader so that one can easily get an overview of what is being said. N3 makes it easy to read and write contexts. It also adds many other things, such as the ability to write rules for logical inferences... Where JsonLD makes it easy for developers happy with JSON to use the result, N3 makes it easy to see the data structure and reason about it. It especially makes it easy to see contexts, which are very difficult to see in jsonld.

1. Mapping the JSonLD of example 1 to N3

Take example 1, which I placed at https://bblfish.net/tmp/2023/08/vcdm.ex1.jsonld. After fixing a few errors with namespaces, explained in detail in N3 issue 204, it gives me the following N3:

The problem is that when signing data, one has to be very clear about which triples are signed. They, therefore, belong into a context which in n3 is placed inside the squiggly brackets

{ ... }.In this N3 we have the signature in the context, but the claim that should be signed is outside of the context, so exactly what triples are being signed is unclear.

This can be made clearer by representing the above N3 in the diagram below, which shows graphs on rectangular surfaces when they appear inside `{...}` in the N3 notation.

Here we see that we can't tell clearly what is signed. We see that the credential links to the credential subject `did:example:ebfeb1...`, but what after that is included in the data signed? Is it also the `alumniOf` relation? Should one also add the literals? Which of the credential attributes has been signed? The data does not tell us that, but it should.

The problem is that one does not sign an object but a graph. Signatures are always applied to documents. Indeed, we can think of graphs as semantically structured documents. Graphs are a type of object, of course, but we have a special syntax in N3 to relate them to other nodes by surrounding a set of triples with `{ ... }`. Diagrammatically we represent this as nodes with graphs on the nodes.

As we will see next, we can have graphs in the nodes recursively: that is, graphs within nodes can also have graphs at their nodes. In the diagram below, we see this with the yellow rectangle surrounded by a green rectangle which itself is a node in a larger graph.
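To make the ambiguity concrete, here is a minimal Python sketch (not taken from the issue) that models the shape of the translated example as quads, i.e. triples tagged with the graph they sit in. The graph label `_:proofGraph`, the shortened identifiers such as `ex:pat` and `ex:university`, and the helper `triples_in` are all illustrative assumptions. It only shows that the one graph with an explicit boundary holds signature metadata, while the claim sits in the default graph with nothing marking whether the signature covers it.

```python
# Sketch only: a dataset as (graph, subject, predicate, object) quads.
# All identifiers are shortened placeholders, not the values from the spec example.
DEFAULT = None  # marker for the default graph

quads = [
    # Credential metadata and the claim land in the default graph.
    (DEFAULT, "credEx:1872", "rdf:type", "cred:VerifiableCredential"),
    (DEFAULT, "credEx:1872", "cred:issuer", "ex:university"),
    (DEFAULT, "credEx:1872", "cred:credentialSubject", "ex:pat"),
    (DEFAULT, "ex:pat", "sch:alumniOf", "ex:university"),
    (DEFAULT, "credEx:1872", "sec:proof", "_:proofGraph"),
    # Only the signature itself is enclosed in a graph context.
    ("_:proofGraph", "_:sig", "rdf:type", "sec:RsaSignature2018"),
    ("_:proofGraph", "_:sig", "sec:verificationMethod", "ex:universityKey"),
]


def triples_in(graph, dataset):
    """Return the set of triples that sit inside the given graph."""
    return {(s, p, o) for (g, s, p, o) in dataset if g == graph}


proof_graph = triples_in("_:proofGraph", quads)
default_graph = triples_in(DEFAULT, quads)

claim = ("ex:pat", "sch:alumniOf", "ex:university")
print(claim in proof_graph)    # False: the delimited graph holds no claim
print(claim in default_graph)  # True: the claim is outside any context
```

Nothing in this structure says which subset of the default graph the signature is meant to cover, which is the question raised above.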

2. N3 corresponding to the VC Credentials diagram

The intuitions were clearly understood by the authors of diagram 6 from §3.2 of the VC-data-model spec, which uses surfaces to distinguish different claims.

This diagram represents the signature, the graph signed, and the claim, each in its own graph. This seems like the right model, aligning us with the well-known Says logic for decentralized access control.

We can interpret the colored surfaces as telling us who is making a claim.

Our trust in the claim being made depends on our trust in the agent making the claim, and our ability to verify that it did make that claim.

Anyway, the official Verifiable Claims interpretation of the JsonLD is that it should consist of 3 claims, i.e., sets of relations that we can think of as being placed on surfaces, following an idea from Pat Hayes, that he took from Peirce and which is now being worked on in the RDF Surfaces CG.

As shown in the diagram, a VC is data about a claim (the Yellow surface to the right) stating that Pat is an alumnus of "Example University". That claim is made or "said" by the issuer - the University in this case - at a certain date. The data about that is on the pink surface. Finally, the University key signs the above claim with the statement on the green surface.

In the `says` logic, this would be expressed as something like this:

where $\text{PaU} \equiv \text{Pat alumnusOf Uni}$

This suggests that the pink and yellow surfaces are placed onto the green surface, which follows by associativity of the `says` relation:

To be precise, we should perhaps start with the Guard initially receiving the following claim from some `connAgent`, i.e. the agent at the other end of a connection.

From the Guard's point of view, the only fact that exists at that point in time is that the `connAgent` said that thing (that the `UniKey` said...).

None of the embedded statements are to be taken as true or false at that point by the Guard.

They are quoted sayings, and their truth value is yet to be decided.
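In says-logic notation, the message just described might be written as below. This is a reconstruction from the surrounding prose, not the original formula: the agent names $\text{connAgent}$, $\text{UniKey}$ and $\text{Uni}$ come from the text, $\text{PaU}$ is as defined above, and the exact nesting is an assumption.

$$
\text{connAgent says } \big( \text{UniKey says } ( \text{Uni says PaU} ) \big)
$$

Under this reading, the Guard holds only the outermost statement as a fact; everything inside the parentheses is quoted.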

Yet, without needing to trust the `connAgent` at all, the Guard can verify the embedded claim made by the `UniKey` using cryptographic signatures, so that it can conclude that the pink surface is true.

That is because the Guard trusts the University key to speak for the University.

So he concludes:

Again here, the Guard has a fact that does not assert or deny that `Pat` is an alumnus of `Uni`, only that the `Uni` said so.

So we are now on the pink surface where the Guard does not yet know if the yellow surface is true or false.
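The Guard's reasoning up to this point can be sketched in Python. This is an illustration under stated assumptions, not an implementation of any VC library: the `Says` class, the `SPEAKS_FOR` table and the `signature_is_valid` stub are hypothetical names, and real signature verification is replaced by a placeholder check.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Says:
    """A quoted statement: `agent` says `statement`. Quoting asserts nothing."""
    agent: str
    statement: Union["Says", str]


# Hypothetical trust configuration: which key speaks for which agent.
SPEAKS_FOR = {"UniKey": "Uni"}


def signature_is_valid(signed: Says) -> bool:
    """Placeholder for real cryptographic verification of the signed layer."""
    return signed.agent in SPEAKS_FOR


def guard_step(received: Says) -> Says:
    """Reduce `connAgent says (key says (issuer says X))` to `issuer says X`."""
    # The relaying agent is not trusted; only the quoted content is inspected.
    signed = received.statement
    if not isinstance(signed, Says) or not signature_is_valid(signed):
        raise ValueError("no verifiable signed statement in the message")
    attributed = signed.statement
    # The key may only vouch for statements by the agent it speaks for.
    if not isinstance(attributed, Says) or attributed.agent != SPEAKS_FOR[signed.agent]:
        raise ValueError("key does not speak for the stated issuer")
    return attributed


message = Says("connAgent", Says("UniKey", Says("Uni", "PaU")))
conclusion = guard_step(message)
print(conclusion)  # Says(agent='Uni', statement='PaU')
```

The result is still a `Says` value: the Guard has established that `Uni` said `PaU`, not that `PaU` holds. Unwrapping that last layer is a separate trust decision about the issuer.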

If the Guard trusts the issuer about claims it makes regarding its own members - which seems like a reasonable assumption - then the Guard can proceed to believe that `Pat` is an alumnus of that university. Perhaps that is what is needed to be proven to satisfy an access control rule.

All that makes logical sense. If we wanted to write the above official diagram in N3, we would have something like the following N3.

(Note: the diagram has two links (`credentialSubject` and `proof`) that go from an object into a node in a graph context (a box). That requires an extra relation to point into the graph, it seems, but I'll ask the N3 group if it could be done if it were really needed.)

Here the VC signed the information about who made the claim and the claim together.

Now we see that it is odd that the university's name is in the claim. Should it perhaps be outside the claim? It is not clear from the graph what is intended there, but both would be fine.

3. Slight Simplification of N3 translation of Diagram

We have two arguments for removing the green surface:

`key says ...` we can think of the green surface as containing the pink one, and so the green surface being the base one. In that case we can see that the green surface would be the default graph and we can get rid of it altogether.

Removing the green surface simplifies the N3 quite a lot, giving us:

Graphically this can be represented as

Would it be possible, with minor tweaking of the JSON-LD contexts, to get the above interpretation to work?

4. Proposal for the N3 intended by the JSON-LD

The above seems right but is not what the JSON-LD mapping to RDF shows, if only because it is missing the yellow surface and has placed the data to be signed on the default surface.

The closest we could get to the RDF that the current JSON-LD translates to is to flip the contexts. Instead of the signature being in the context, we get something more reasonable by having the signed data be in a context.

Perhaps this is what the VC data model intended the original JSON-LD to represent?

That is, here we only use a graph context to enclose the signed triples, which in this case, I guess, encloses the metadata about the issuer too. Graphically represented, this is what we have:

Is that correct? I don't know. It would require a very close analysis of the spec to understand what is intended to be signed. Update: See a detailed reasoning for why this follows from the previous two models.

(todo: We could also imagine that only the claim was intended to be signed.)
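The two readings can be compared with a small Python sketch in which a quoted graph is a `frozenset` of triples, so that the graph itself can be the subject of `sec:proof`. The identifiers, the two variant names and the helper `covered_by_proof` are illustrative assumptions, not the N3 from this issue; the point is only that once the proof is attached to a quoted graph, the set of signed triples can be read off directly.

```python
# Sketch only: a quoted graph is a frozenset of triples, so it can itself be a node.
claim = ("ex:pat", "sch:alumniOf", "ex:university")
metadata = {
    ("credEx:1872", "rdf:type", "cred:VerifiableCredential"),
    ("credEx:1872", "cred:issuer", "ex:university"),
    ("credEx:1872", "cred:credentialSubject", "ex:pat"),
}

# Variant A: the signed graph encloses the issuer metadata and the claim.
signed_all = frozenset(metadata | {claim})
# Variant B (the "todo" reading): only the claim is enclosed.
signed_claim_only = frozenset({claim})


def covered_by_proof(statement):
    """Given a (graph, 'sec:proof', signature) statement, return the signed triples."""
    graph, predicate, _signature = statement
    if predicate != "sec:proof" or not isinstance(graph, frozenset):
        raise ValueError("proof must be attached to a quoted graph")
    return graph


issuer_triple = ("credEx:1872", "cred:issuer", "ex:university")
for label, graph in [("A", signed_all), ("B", signed_claim_only)]:
    signed = covered_by_proof((graph, "sec:proof", "_:sig"))
    print(label, len(signed), issuer_triple in signed)
# prints "A 4 True" then "B 1 False"
```

Either variant is unambiguous about what is signed; which one the spec intends is the open question.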

In issue 204 on the N3 repo I show the same problem with Example 6 in the spec.

Conclusion

Perhaps I missed something. Is there a mistake in the JSON-LD or its `@context` statements? I have not written a JSON-LD parser, so I don't know the capabilities of contexts inside out. Perhaps it is easy to fix? What is the intended N3 corresponding to the JSON-LD?