Instructing Retrieval-Augmented Generation via Self-Synthesized Rationales


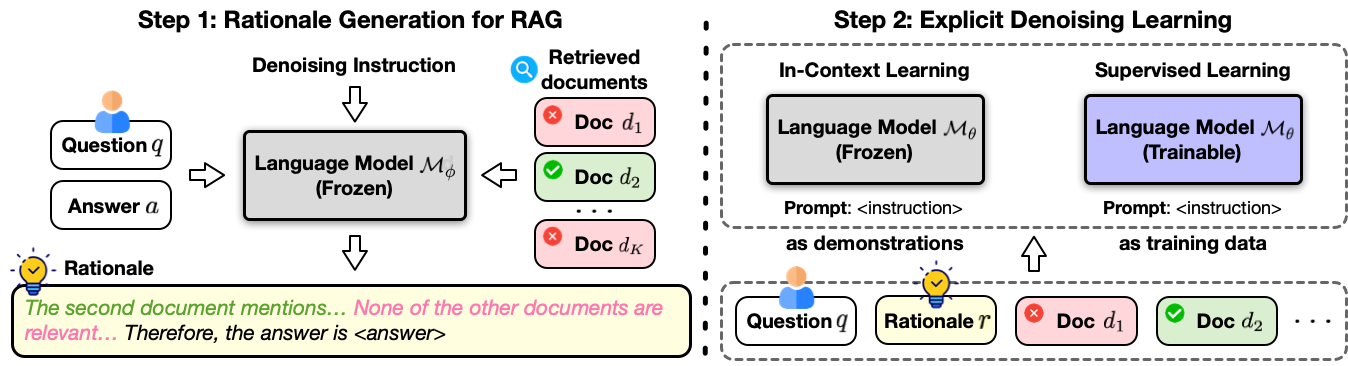

InstructRAG is a simple yet effective RAG framework that allows LMs to explicitly denoise retrieved contents by generating rationales for better verifiability and trustworthiness.

- 🤖 Self-Synthesis: Leverage instruction-tuned LMs to generate their OWN supervision for denoising.

- 🔌 Easy-to-Use: Support both in-context learning (ICL) and supervised fine-tuning (SFT).

- 🚀 Effectiveness: Up to 8.3% better results across 5 benchmarks (Table 3).

- 💪 Noise Robustness: Robust to increased noise ratios in various scenarios (Figure 3).

- 🔁 Task Transferability: InstructRAG can also solve out-of-domain unseen tasks (Figure 4).

Please see also our paper and X summary for more details.
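To make the idea of denoising through rationales more concrete, the sketch below assembles a prompt that asks a model to explain which retrieved documents are useful before it answers. The helper name `build_rationale_prompt` and the instruction wording are illustrative assumptions; they are not the prompt template used in the paper or shipped in this repo.

```python
from typing import Sequence


def build_rationale_prompt(question: str, documents: Sequence[str]) -> str:
    """Assemble a prompt that asks for a rationale before the answer.

    Hypothetical helper: the wording is an illustration only and is NOT
    the prompt template shipped with InstructRAG.
    """
    # Number each retrieved document so the rationale can refer to it.
    numbered = [f"Document {i}: {doc}" for i, doc in enumerate(documents, start=1)]
    instruction = (
        "Some of the documents above may be irrelevant or inaccurate. "
        "First explain which documents help answer the question and why, "
        "then give the final answer."
    )
    return "\n".join([*numbered, "", f"Question: {question}", instruction])


if __name__ == "__main__":
    docs = [
        "Paris is the capital and largest city of France.",
        "The Eiffel Tower was completed in 1889.",
    ]
    print(build_rationale_prompt("What is the capital of France?", docs))
```

The resulting string can be sent to any instruction-tuned LM; the point is only that the model is asked to justify which documents it relies on, which is what makes the final answer easier to verify.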

Run the following script to create a Python virtual environment and install all required packages.

bash setup.sh

Alternatively, you can also directly create a conda environment using the provided configuration file.

conda env create -f environment.yml

To train the model (i.e., InstructRAG-FT), just activate the environment and run the following training script. The training config is set for 4xH100 80G GPUs. You may need to adjust NUM_DEVICE and PER_DEVICE_BATCH_SIZE based on your computation environment.
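When moving from the 4xH100 setup to fewer or smaller GPUs, one common approach is to keep the effective batch size fixed by trading per-device batch size for gradient accumulation. The sketch below shows that arithmetic. The helper `grad_accum_steps` and the global batch size of 128 are assumptions for illustration; check the training script for the variables and defaults it actually exposes.

```python
def grad_accum_steps(
    global_batch_size: int, num_device: int, per_device_batch_size: int
) -> int:
    """Return the accumulation steps needed so that
    num_device * per_device_batch_size * steps == global_batch_size.

    Hypothetical helper, not part of the InstructRAG codebase.
    """
    # Samples processed in one forward/backward pass across all devices.
    per_step = num_device * per_device_batch_size
    if per_step <= 0:
        raise ValueError("num_device and per_device_batch_size must be positive")
    if global_batch_size % per_step != 0:
        raise ValueError(
            f"global batch size {global_batch_size} is not divisible by {per_step}"
        )
    return global_batch_size // per_step


if __name__ == "__main__":
    # Assumed global batch size of 128 (illustrative value only).
    print(grad_accum_steps(128, num_device=4, per_device_batch_size=4))  # 8
    print(grad_accum_steps(128, num_device=2, per_device_batch_size=2))  # 32
```

Halving NUM_DEVICE or PER_DEVICE_BATCH_SIZE doubles the number of accumulation steps needed to match the same effective batch size, at the cost of longer wall-clock time per optimizer step.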

conda activate instrag

bash train.sh

There are two instantiations of our framework:

- InstructRAG-ICL: training-free & easy-to-adapt

- InstructRAG-FT: trainable & better performance

Use the following script to evaluate InstructRAG in both training-free and trainable settings. You can specify the task and model by adjusting DATASET and MODEL in eval.sh.

conda activate instrag

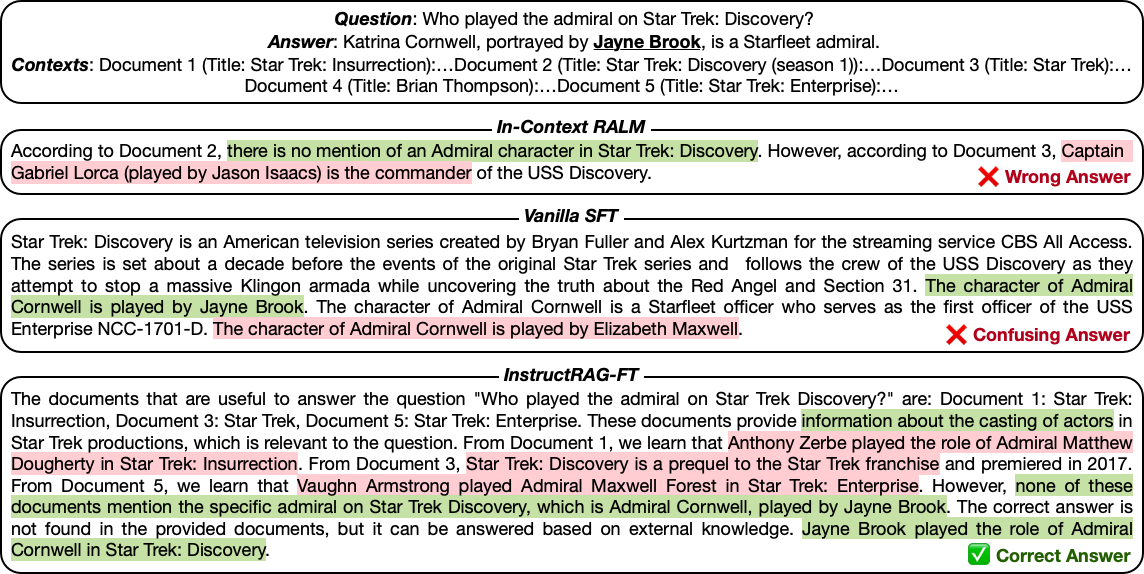

bash eval.shThe following case study shows that InstructRAG can effectively identify relevant information from noisy input and leverage its own knowledge to correctly answer questions when required. The red texts denote irrelevant or inaccurate model generations, while the green texts denote contents relevant to the question.

Below is the full list of InstructRAG models fine-tuned on each dataset in our work.

| Dataset | HF Model Repo | Retriever |
|---|---|---|
| PopQA | meng-lab/PopQA-InstructRAG-FT | Contriever |
| TriviaQA | meng-lab/TriviaQA-InstructRAG-FT | Contriever |
| Natural Questions | meng-lab/NaturalQuestions-InstructRAG-FT | DPR |
| ASQA | meng-lab/ASQA-InstructRAG-FT | GTR |
| 2WikiMultiHopQA | meng-lab/2WikiMultiHopQA-InstructRAG-FT | BM25 |
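As a minimal sketch of how one of the checkpoints above might be loaded, the snippet below maps each dataset name to its repo ID and loads it with Hugging Face `transformers`. It assumes the checkpoints are standard causal LM checkpoints compatible with `AutoModelForCausalLM`, which the table itself does not state; `MODEL_REPOS`, `repo_for`, and `load_model` are hypothetical names introduced here.

```python
# Repo IDs copied from the table above.
MODEL_REPOS = {
    "PopQA": "meng-lab/PopQA-InstructRAG-FT",
    "TriviaQA": "meng-lab/TriviaQA-InstructRAG-FT",
    "Natural Questions": "meng-lab/NaturalQuestions-InstructRAG-FT",
    "ASQA": "meng-lab/ASQA-InstructRAG-FT",
    "2WikiMultiHopQA": "meng-lab/2WikiMultiHopQA-InstructRAG-FT",
}


def repo_for(dataset: str) -> str:
    """Return the Hugging Face repo ID for a dataset name (hypothetical helper)."""
    try:
        return MODEL_REPOS[dataset]
    except KeyError:
        known = ", ".join(MODEL_REPOS)
        raise ValueError(f"unknown dataset {dataset!r}; expected one of: {known}") from None


def load_model(dataset: str):
    """Download and load the tokenizer and model for a dataset.

    Assumes a causal LM checkpoint; requires `transformers` and network access.
    """
    # Imported lazily so repo_for() works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    repo = repo_for(dataset)
    tokenizer = AutoTokenizer.from_pretrained(repo)
    model = AutoModelForCausalLM.from_pretrained(repo)
    return tokenizer, model
```

Calling `load_model("PopQA")` would download the full checkpoint, so make sure enough disk space and memory are available first. Each model was fine-tuned with the retriever listed in its row, so pairing it with a different retriever may change results.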

If you have any questions about the code or the paper, feel free to email Zhepei (zhepei.wei@virginia.edu). If you run into problems using the code or want to report a bug, feel free to open an issue! Please describe the problem in detail so we can help you better and faster.

Please cite our paper if you find the repo helpful in your work:

@inproceedings{

wei2025instructrag,

title={Instruct{RAG}: Instructing Retrieval-Augmented Generation via Self-Synthesized Rationales},

author={Zhepei Wei and Wei-Lin Chen and Yu Meng},

booktitle={The Thirteenth International Conference on Learning Representations},

year={2025},

url={https://openreview.net/forum?id=P1qhkp8gQT}

}