abstract technique that aids information retention : instance inheritance system

... allows us to describe, through inheritance, mappings of transformations from predecessor structured data types to their successors, as if it were math: f(x) => y ... and we will use the this keyword as a persistent data structure to which we apply those transformations.

- ?. : type : asynchronous monad descriptor => this

- ?. : prod ready : we wonder about

- ?. : example : git clone && npm run example

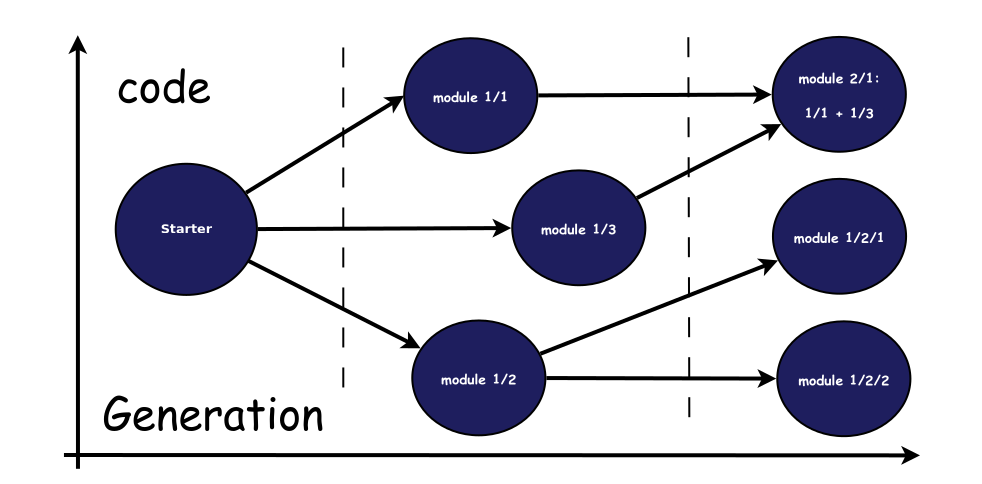

This lib might help to create some sort of order, sequence, or precedence in how we modify data inside our code. It uses the concept of a tree (or Trie) by combining both Object Instances and Inheritance through the Prototype Chain, so we are able to create a new instance inherited from an existing one as many times as we need. It might look obvious, but... we are talking about Instances, not Classes, meaning Plain Objects crafted from real Constructors before we start inheriting them one from another. To describe this approach, let me suggest these articles:

- Inheritance in JavaScript : Factory of Constructors with Prototype Chain

- Architecture of Prototype Inheritance in JavaScript

- Dead Simple type checker for JavaScript

The define function now fully supports TypeScript definitions.

For easier type writing, nested sub-constructors can be applied directly using the apply, call, or bind functions.

As we discovered from those articles, we need some tooling that gives us the best experience with the Prototype Chain Inheritance pattern. First of all, it must be reproducible and maintainable. So, as the first step, we have to define some sort of Factory Constructor to start crafting our Instances. It might look like this:

const { define } = require('mnemonica');

const TypeModificationProcedure = function (opts) {
  Object.assign(this, opts);
};

// prototype definition is NOT obligatory
const TypeModificationPrototypeProperties = {
  description : 'SomeType Constructor'
};

// SomeTypeConstructor -- is a constructor.name
const SomeType = define('SomeTypeConstructor',
  TypeModificationProcedure,
  TypeModificationPrototypeProperties);

Or, we can define SomeType like this:

const TypeModificationConstructorFactory = () => {
  // SomeTypeConstructor -- is a constructor.name
  const SomeTypeConstructor = function (opts) {
    // this is exactly the same behaviour
    // we described above in TypeModificationProcedure,
    // so we just repeat it here
    // to keep this example short
    Object.assign(this, opts);
  };
  // prototype definition is NOT obligatory
  SomeTypeConstructor.prototype
    .description = 'SomeType Constructor';
  return SomeTypeConstructor;
};

const SomeType = define(TypeModificationConstructorFactory);

Or using Classes:

const TypeModificationConstructorFactory = () => {
  // SomeTypeConstructor -- is a constructor.name
  class SomeTypeConstructor {
    constructor (opts) {
      // all these definitions are here
      // just to show how it works
      const {
        some,
        data,
        // we will re-define
        // the "inside" property later
        // using a nested sub-type
        inside
      } = opts;
      this.some = some;
      this.data = data;
      this.inside = inside;
    }
  }
  return SomeTypeConstructor;
};

const SomeType = define(TypeModificationConstructorFactory);

Then we can define some nested type, using our crafted SomeType definition:

SomeType.define('SomeSubType', function (opts) {
  const {
    other,
    inside // again
  } = opts;
  this.other = other;
  // here we re-define
  // our previously defined property
  // with the new value
  this.inside = inside;
}, {
  description : 'SomeSubType Constructor'
});

// or, exactly equivalent
SomeType.SomeSubType = function (opts) {
  const {
    other,
    inside // again
  } = opts;
  this.other = other;
  // here we re-define
  // our previously defined property
  // with the new value
  this.inside = inside;
};

SomeType.SomeSubType.prototype = {
  description : 'SomeSubType Constructor'
};

Now our type modification chain looks like this:

// 1.
SomeType
  // 1.1
  .SomeSubType;

And we can continue nesting sub-types as deep as our code and our software architecture need... :^)

Let's create an instance using the SomeType constructor we defined earlier.

const someTypeInstance = new SomeType({
  some : 'arguments',
  data : 'necessary',
  inside : 'of SomeType definition'
});

Then there might be a situation where we reach a place in the code where we have to use the next, nested SomeSubType constructor, to turn our someTypeInstance into the next, Inherited and Sub-Typed Instance.

const someSubTypeInstance =

// someTypeInstance is an instance

// we did before, through the referenced

// SomeType constructor we made using

// define at the first step

// of this fabulous adventure

someTypeInstance

// we defined SomeSubType

// as a nested constructor

// so we have to use it

// utilising instance

// crafted from it's parent

.SomeSubType({

other : 'data needed',

// and this is -re-definition

// of "inside" property

// as we promised before

inside : ' of ... etc ...'

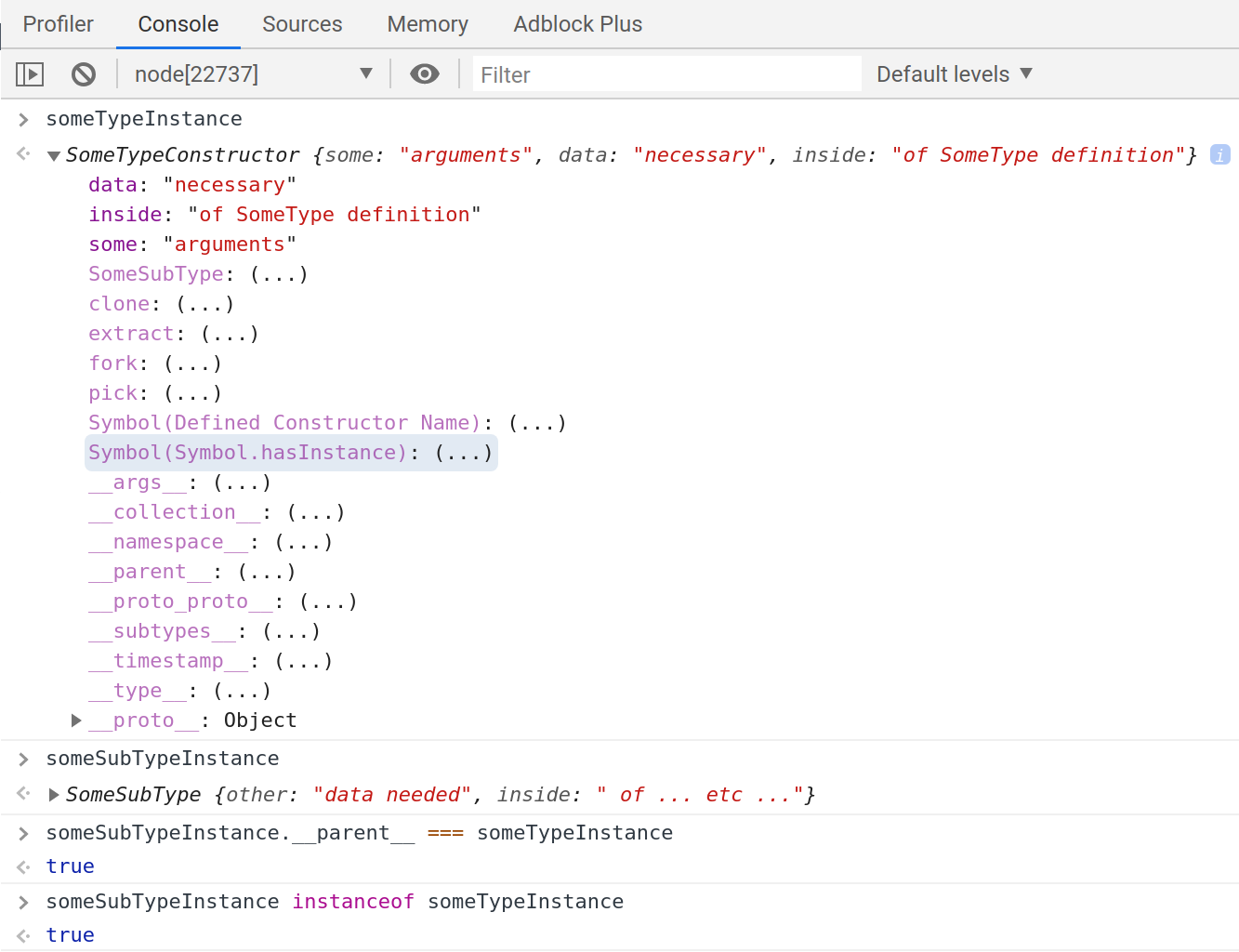

});At this moment all stored data will inherit from someTypeInstance to someSubTypeInstance. Moreover, someSubTypeInstance become instanceof SomeType and SomeSubType and, let's go deeper, instance of someTypeInstance.
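To make the idea concrete without relying on mnemonica itself, here is a minimal plain-JavaScript sketch of one instance inheriting from another through the prototype chain. The craft() helper is hypothetical and is not how mnemonica is implemented; it only shows why the sub-instance can read everything stored in its parent instance while its own props shadow the parent's.

```javascript
// Plain-JS sketch, NOT mnemonica's implementation.
function SomeType(opts) {
  Object.assign(this, opts);
}
SomeType.prototype.description = 'SomeType Constructor';

// Hypothetical helper: run `constructor` on a fresh object whose prototype
// chain goes through `parentInstance`, so every parent prop is inherited.
function craft(parentInstance, constructor, protoProps, ...args) {
  // per-instance prototype: inherits from the parent instance
  const proto = Object.assign(Object.create(parentInstance), protoProps);
  const instance = Object.create(proto);
  constructor.apply(instance, args);
  return instance;
}

const someTypeInstance = new SomeType({
  some: 'arguments',
  data: 'necessary',
  inside: 'of SomeType definition',
});

const someSubTypeInstance = craft(
  someTypeInstance,
  function (opts) {
    this.other = opts.other;
    this.inside = opts.inside; // shadows the parent's "inside"
  },
  { description: 'SomeSubType Constructor' },
  { other: 'data needed', inside: ' of ... etc ...' }
);

console.log(someSubTypeInstance.some); // arguments (inherited)
console.log(someSubTypeInstance.inside); //  of ... etc ... (own)
console.log(someSubTypeInstance instanceof SomeType); // true
console.log(someTypeInstance.isPrototypeOf(someSubTypeInstance)); // true
```

The parent instance is never modified: someTypeInstance.inside still holds its original value, which is what keeps every step of the chain inspectable.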

And now let's ponder on our Instances:

console.log(someTypeInstance);
// some : 'arguments'
// data : 'necessary'
// inside : 'of SomeType definition'

console.log(someSubTypeInstance);
// other : 'data needed'
// inside : ' of ... etc ...'

So, this might look discouraging... but it is not the end of the story, because we have the magic of the built-in ...

So, here is a situation where we need all previously defined props and all the nested props collected into one and the same object:

const extracted = someSubTypeInstance.extract();
console.log(extracted);
// data : "necessary"
// description : "SomeSubType Constructor"
// inside : " of ... etc ..."
// other : "data needed"
// some : "arguments"

or, with the same behaviour:

const { extract } = require('mnemonica').utils;

const extracted = extract(someSubTypeInstance);
// data : "necessary"
// description : "SomeSubType Constructor"
// inside : " of ... etc ..."
// other : "data needed"
// some : "arguments"

console.log(extract(someTypeInstance));
// data : "necessary"
// description : "SomeType Constructor"
// inside : "of SomeType definition"
// some : "arguments"

Here the extracted object will contain all enumerable props of someSubTypeInstance, including inherited ones, i.e. the props a for...in loop visits. So if you define some hidden (non-enumerable) props, extract will not collect them. This technique lets us concentrate only on the meaningful parts of the Data Flow Definition. All this might help to close the gap between the declared data flow and the flow actually written in code, by describing the flow itself in this simple way. Of course, you are free to write your own .extractor() functions on top of the resulting multiply-inherited data object (storage).
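A rough plain-JavaScript approximation of that behaviour, assuming extract collects exactly what a for...in loop visits: extractSketch() below is hypothetical and is not mnemonica.utils.extract. It also shows why console.log printed only own props, while an extract-like copy picks up the inherited ones too.

```javascript
// Hypothetical extract-like helper, NOT mnemonica.utils.extract:
// copies every enumerable prop, own or inherited (what for...in visits).
function extractSketch(instance) {
  const result = {};
  for (const key in instance) {
    result[key] = instance[key];
  }
  return result;
}

const parent = {
  some: 'arguments',
  data: 'necessary',
  inside: 'of SomeType definition',
};

// child inherits from parent through the prototype chain
const child = Object.create(parent);
child.other = 'data needed';
child.inside = ' of ... etc ...'; // shadows parent.inside

// a hidden (non-enumerable) prop is skipped by for...in
Object.defineProperty(child, 'hidden', { value: 'secret', enumerable: false });

console.log(Object.keys(child)); // [ 'other', 'inside' ]
console.log(extractSketch(child));
```

The shadowed parent value of inside is not copied, because for...in visits each key name only once, starting from the own props.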

And back to definitions: of course, all of the following is true:

console.log(someTypeInstance instanceof SomeType); // true
console.log(someSubTypeInstance instanceof SomeType); // true
console.log(someSubTypeInstance instanceof SomeType.SomeSubType); // true

// who cares... but yes, again: true
console.log(someSubTypeInstance instanceof someTypeInstance);

But this is not the end... it might be discouraging, but this is only the beginning.
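A note on the last instanceof check above: in plain JavaScript, x instanceof obj throws a TypeError unless obj is callable or defines Symbol.hasInstance. The sketch below shows one way an ordinary object can act as the right-hand side of instanceof; it illustrates the language mechanism under that assumption and is not a description of how mnemonica implements it.

```javascript
const someTypeInstance = {
  some: 'arguments',
  // invoked by `candidate instanceof someTypeInstance`
  [Symbol.hasInstance](candidate) {
    // true when this object sits anywhere in candidate's prototype chain
    return Object.prototype.isPrototypeOf.call(this, candidate);
  },
};

const someSubTypeInstance = Object.create(someTypeInstance);
someSubTypeInstance.other = 'data needed';

console.log(someSubTypeInstance instanceof someTypeInstance); // true
console.log({} instanceof someTypeInstance); // false

// without Symbol.hasInstance, a plain object cannot be used with instanceof
const plainObject = {};
const checkPlain = () => someSubTypeInstance instanceof plainObject;
try {
  checkPlain();
} catch (error) {
  console.log(error instanceof TypeError); // true
}
```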

Suppose we have to handle request.data somewhere in our code and consume it somewhere else. Let's imagine we are inside an ETL process.

Let's create a Constructor for this sort of data.

// FactoryOfRequestHandlerConstructor is your own constructor factory
const RequestDataTypeModificator =
  define('RequestData',
    FactoryOfRequestHandlerConstructor);

Then we will use it in our code like this:

(req, res) => {
  const requestInstance =
    new RequestDataTypeModificator(req.body);
};

And then it might be necessary to jump to the next part of the code, which cooperates with some Storage or DataBase.

const GoneToTheDataBase =
  RequestDataTypeModificator
    // jumped data definition
    .define('GoneToTheDataBase',
      DataBaseRequestHandlerConstructor);

Here we can choose what to do: inspect some of the collected data, or extract it for tests or logs, or even hand it over to another consumer. And yes, we already saw the .extract() method, but there are also two other methods that could be much more helpful...

// callback will be called Before requestInstance creation
const preCreationCallback = (hookData) => {
  const {
    // { string }
    // equals TypeModificatorConstructor.name
    TypeName,
    // 1. [ array ]
    // ...args of TypeModificator
    // for instance creation
    argumentsOfTypeModificator,
    // 2. { object }
    // instance we will inherit from
    // using our TypeModificator
    instanceUsedForInheritance
  } = hookData;
  // some necessary pre-investigations
};

RequestDataTypeModificator
  .registerHook(
    'preCreation',
    preCreationCallback);

const postCreationCallback = (hookData) => {
  const {
    // { string }
    // equals TypeModificatorConstructor.name
    TypeName,
    // 1. [ array ]
    argumentsOfTypeModificator,
    // 2. { object }
    instanceUsedForInheritance,
    // 3. { object }
    // instance we just crafted
    // from instanceUsedForInheritance
    // using our TypeModificator
    // with argumentsOfTypeModificator;
    // inheritedInstance.constructor.name
    // is TypeName
    inheritedInstance
  } = hookData;
  // some necessary post-investigations
};

// callback will be called After requestInstance creation
RequestDataTypeModificator
  .registerHook(
    'postCreation',
    postCreationCallback);

Once registered, those hooks will be invoked for each instance you craft using RequestDataTypeModificator. So if you wish to investigate instances of the GoneToTheDataBase type modificator, you have to register separate hooks for it:

GoneToTheDataBase
  .registerHook(
    'preCreation', /* ... */);

GoneToTheDataBase
  .registerHook(
    'postCreation', /* ... */);
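To illustrate what per-type hooks do, here is a self-contained sketch built on a hypothetical defineSketch() helper. Its registerHook() and create() are made up for this example and only mimic the idea described above (pre hooks before construction, post hooks after, each receiving some hookData); they are not mnemonica's API.

```javascript
// Hypothetical mini "type" with creation hooks, NOT mnemonica's code.
function defineSketch(TypeName, constructor) {
  const hooks = { preCreation: [], postCreation: [] };
  return {
    TypeName,
    registerHook(kind, callback) {
      hooks[kind].push(callback);
    },
    create(parentInstance, ...args) {
      const hookData = { TypeName, args, parentInstance };
      // pre hooks see the arguments and the parent, not the new instance
      hooks.preCreation.forEach((cb) => cb(hookData));
      const inheritedInstance = Object.create(parentInstance);
      constructor.apply(inheritedInstance, args);
      // post hooks additionally receive the crafted instance
      hooks.postCreation.forEach((cb) => cb({ ...hookData, inheritedInstance }));
      return inheritedInstance;
    },
  };
}

const log = [];
const RequestData = defineSketch('RequestData', function (body) {
  Object.assign(this, body);
});

RequestData.registerHook('preCreation', ({ TypeName }) => {
  log.push(`pre ${TypeName}`);
});
RequestData.registerHook('postCreation', ({ inheritedInstance }) => {
  log.push(`post ${inheritedInstance.id}`);
});

RequestData.create({}, { id: 42 });
console.log(log); // [ 'pre RequestData', 'post 42' ]
```

Because hooks live on the type object, a second type built with defineSketch() would need its own registrations, which mirrors the GoneToTheDataBase situation above.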

Of course, registering hooks for each defined type is boring, and this is where Namespaces for Instances come in.

By default, define uses the services of the Default Namespace, which is hidden but always exists. You can get hold of it using the following technique:

const {
  namespaces,
  SymbolDefaultNamespace
} = require('mnemonica');

const defaultNamespace = namespaces.get(SymbolDefaultNamespace);

Silly joke, isn't it? ... sure, you can just use:

const {
  defaultNamespace
} = require('mnemonica');

And here you can play a much more joyful game with hooks:

defaultNamespace
  .registerHook(
    'preCreation', preCreationNamespaceCallback);

defaultNamespace
  .registerHook(
    'postCreation', postCreationNamespaceCallback);

So now all instances crafted within this namespace will invoke these hooks. The difference between hooks registered on a type modificator and hooks registered on a namespace is the order in which they are invoked:

// 1.
namespace.invokeHook('preCreation', /* ... */);
// 2.
type.invokeHook('preCreation', /* ... */);
// 3. instance creation is here
// 4.
type.invokeHook('postCreation', /* ... */);
// 5.
namespace.invokeHook('postCreation', /* ... */);

It is important, yes, but not as much as you might expect... because of ... never mind.
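The ordering rule above can be sketched in plain JavaScript. createWithHooks() and the level objects are hypothetical stand-ins for a namespace and a type, not mnemonica internals; the point is only that pre hooks run outermost first and post hooks run innermost first.

```javascript
// Hypothetical sketch of the documented order, NOT mnemonica's code.
// `levels` goes from the outermost (namespace) to the innermost (type).
function createWithHooks(levels, construct) {
  // pre hooks: outermost first
  levels.forEach((level) => level.invokeHook('preCreation'));
  const instance = construct();
  // post hooks: innermost first (reverse a copy, keep `levels` intact)
  [...levels].reverse().forEach((level) => level.invokeHook('postCreation'));
  return instance;
}

const order = [];
const makeLevel = (name) => ({
  invokeHook(kind) {
    order.push(`${name}.${kind}`);
  },
});

createWithHooks([makeLevel('namespace'), makeLevel('type')], () => {
  order.push('instance creation');
  return {};
});

// order now holds: namespace.preCreation, type.preCreation,
// instance creation, type.postCreation, namespace.postCreation
console.log(order);
```

Putting a third level between the two entries of levels yields the same nested, onion-like pattern with seven steps.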

Finally, you can craft your own namespaces:

const {
  createNamespace
} = require('mnemonica');

const anotherNamespace =
  createNamespace('Another Namespace Name');

And there is one more important thing. Suppose you have to build a type modificator with a name that is already in use. You can do this, because you can craft as many type modificator collections as you need, even inside the same namespace:

const {
  createTypesCollection
} = require('mnemonica');

const otherOneTypesCollectionOfDefaultNamespace =
  createTypesCollection(defaultNamespace);

const defineOfAnother =
  otherOneTypesCollectionOfDefaultNamespace
    // another define method -> another ref
    .define;

const anotherTypesCollectionOfAnotherNamespace =
  createTypesCollection(anotherNamespace);

const defineOfAnotherAnother =
  anotherTypesCollectionOfAnotherNamespace
    // and another define method -> another ref
    .define;

And even more: starting from v0.3.1, you can also use Hooks with Types Collections. To do this, just grab a reference to a collection somewhere, for example:

const {
  defaultTypes
} = require('mnemonica');

defaultTypes
  .registerHook(
    'preCreation', preCreationTypesCollectionCallback);

defaultTypes
  .registerHook(
    'postCreation', postCreationTypesCollectionCallback);

'Pre' hooks for Types Collections are invoked after the Namespace hooks but before the Type hooks. 'Post' hooks for Types Collections are invoked after the Type hooks but before the Namespace hooks. So, the full order looks like this:

// 1.
namespace.invokeHook('preCreation', /* ... */);
// 2.
typecollection.invokeHook('preCreation', /* ... */);
// 3.
type.invokeHook('preCreation', /* ... */);
// 4. instance creation is here
// 5.
type.invokeHook('postCreation', /* ... */);
// 6.
typecollection.invokeHook('postCreation', /* ... */);
// 7.
namespace.invokeHook('postCreation', /* ... */);

As we can see, type hooks are the closest ones to the type itself. Of course, there can be situations where you have to register some common hooks, but not for a whole types collection or namespace. Assume you have some related types, possibly from different collections, and you have to register the same hook definitions for them, in a file other than the files where you defined those types. There you can use lookup:

const {
  lookup
} = require('mnemonica');

const SomeType = lookup('SomeType');
const SomeNestedType = lookup('SomeType.SomeNestedType');

And now it is probably easier to see why nested definitions can be useful. You can also create them by path, using the following technique:

define('SomeExistentType.SomeExistentNestedType.NewType', function () {
  // operators
});

// or from a type modificator descriptor,
// if you have a reference to it
SomeExistentType.define('SomeExistentNestedType.NewType', function () {
  // operators
});

// you can also use
SomeExistentType.define('SomeExistentNestedType', () => {
  // the name "NewType" is here,
  // nested inside of the declaration
  return class NewType {
    constructor (str) {
      // operators
    }
  };
});

Let's assume our instance has a really deep prototype chain:

EvenMore
  .MoreOver
  .OverMore
  // ...
  // ...
  .InitialType

And we wish to get a reference to one of the predecessors, though we only have a reference to the instance itself. Here we can use the built-in instance.parent() method:

// first parent,
// simply equal to instance.__parent__
const parent = instance.parent();

// deep parent from the chain
const deepParent = instance
  .parent( 'DeepParentName' );

You can combine an existing TypeConstructor with any instance, even with Singletons:

// Singletoned is assumed to be a type made earlier with define
const usingProcessAsProto = Singletoned.call(process, {
  some : 'arguments',
  data : 'necessary',
  inside : 'for definition'
});

console.log(typeof usingProcessAsProto.on); // function

or you can combine it with window, or document, or...

// Windowed, Documented and JQueried are assumed
// to be types made earlier with define
const usingWindowAsProto = Windowed.call(window);
const usingDocumentAsProto = Documented.call(document);
const usingJQueryAsProto = JQueried.call(jQuery);

// or even ReactDOM
import ReactDOM from "react-dom";
import { define } from "mnemonica";

const ReactDOOMed = define("ReactDOOMed", function() {});
const usingReactAsProto = ReactDOOMed.call(ReactDOM);
const root = document.getElementById("root");
usingReactAsProto.render("just works", root);

Mostly for TypeScript purposes, you may do this:

const { define, call, apply, bind } = require('mnemonica');

const SomeType = define('SomeType', function () {
  // ...
});

const SomeSubType = SomeType
  .define('SomeSubType', function (...args) {
    // ...
  });

// arguments for the SomeSubType constructor
const args = [ /* ... */ ];

const someInstance = new SomeType();

const someSubInstance = call(
  someInstance, SomeSubType, ...args);

// or for an array of args
const someSubInstanceFromApply = apply(
  someInstance, SomeSubType, args);

// or for delayed construction
const someSubInstanceConstructor =
  bind(someInstance, SomeSubType);

const someSubInstanceFromBind = someSubInstanceConstructor(...args);

First of all, you should understand what you are doing! Then you should understand what you want. And only after that should you use this technique:

const AsyncType = define('AsyncType', async function (data) {
  return Object.assign(this, {
    data
  });
});

const asyncCall = async function () {
  const asyncConstructedInstance = await new AsyncType('tada');
  console.log(asyncConstructedInstance); // { data: "tada" }
  console.log(asyncConstructedInstance instanceof AsyncType); // true
};

asyncCall();

Also, nothing will stop you from doing this for SubTypes:

const NestedAsyncType = AsyncType
  .define('NestedAsyncType', async function (data) {
    return Object.assign(this, {
      data
    });
  }, {
    description: 'async of nested'
  });

// inside an async function, where
// asyncConstructedInstance is available
const nestedAsyncTypeInstance = await new
  asyncConstructedInstance.NestedAsyncType('boom');

console.log(nestedAsyncTypeInstance instanceof AsyncType); // true
console.log(nestedAsyncTypeInstance instanceof NestedAsyncType); // true
console.log(nestedAsyncTypeInstance.description); // 'async of nested'

Also, for the first instance in a chain you can, for example, inherit from a singleton:

// inside an async function
const asyncCalledInstance = await AsyncType
  .call(process, 'wat');

// or
const asyncAppliedInstance = await AsyncType
  .apply(process, ['wat']);

console.log(asyncCalledInstance); // { data: "wat" }
console.log(asyncCalledInstance instanceof AsyncType); // true

Let's suppose, for example, that you need the following code:

async (req, res) => {
  const {
    email, // : 'async@check.mail',
    password // : 'some password'
  } = req.body;

  const user = await
    new UserTypeConstructor({
      email,
      password
    })
      .UserEntityValidate('valid sign') // sync
      .WithoutPassword(); // sync, rid of password

  const storagePushResult =
    await user.AsyncPushToStorage();

  const storageGetResult =
    await storagePushResult.AsyncGetStorageResponse();

  const storageValidateResult =
    storageGetResult.SyncValidateStorageData();

  const requestReplyResult =
    await storageValidateResult.AsyncReplyToRequest(res);

  return requestReplyResult;
};

Here we have a lot of unnecessary variables. We could combine our chain using .then() or simply brackets (await ...), but it would definitely look weird:

async (req, res) => {
  const {
    email, // : 'async@check.mail',
    password // : 'some password'
  } = req.body;

  const user =
    await (
      (
        await (
          await (
            new UserTypeConstructor({
              email,
              password
            })
              .UserEntityValidate('valid sign')
              .WithoutPassword()
          ).AsyncPushToStorage()
        ).AsyncGetStorageResponse()
      ).SyncValidateStorageData()
    ).AsyncReplyToRequest(res);
};

And using .then() of ordinary promises would look even worse, worse even than "callback hell".

So, starting from v0.5.8, we are able to use async chains for async constructors:

async (req, res) => {
  const {
    email, // : 'async@check.mail',
    password // : 'some password'
  } = req.body;

  const user =
    await new UserTypeConstructor({
      email,
      password
    })
      .UserEntityValidate('valid sign')
      .WithoutPassword()
      .AsyncPushToStorage()
      .AsyncGetStorageResponse()
      .SyncValidateStorageData()
      .AsyncReplyToRequest(res);
};

So now you don't have to care whether a constructor is async or sync, or where to put brackets and how many of them. It is enough to type the constructor name after the dot and pass the necessary arguments. That's it.
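Here is a rough idea of how such a flat chain can work at all: a sketch with a hypothetical chain() helper that wraps a value or a promise in a Proxy, so every method call is queued on the underlying promise whether the method is sync or async. It illustrates the general technique under that assumption and is not mnemonica's actual implementation.

```javascript
// Hypothetical helper, NOT mnemonica's implementation.
function chain(valueOrPromise) {
  const promise = Promise.resolve(valueOrPromise);
  return new Proxy({}, {
    get(_target, prop) {
      // exposing `then` makes the proxy awaitable
      if (prop === 'then') {
        return promise.then.bind(promise);
      }
      // any other prop is treated as a method call on the resolved value;
      // promise.then flattens async results, so sync and async look the same
      return (...args) => chain(promise.then((value) => value[prop](...args)));
    },
  });
}

// Toy steps: some sync, some async
const step = (n) => ({
  n,
  syncInc() {
    return step(n + 1);
  },
  async asyncDouble() {
    return step(n * 2);
  },
});

async function main() {
  const result = await chain(step(1)).syncInc().asyncDouble().syncInc();
  console.log(result.n); // 5
}

main();
```

The design choice is that the proxy never inspects the method: a plain return value and a resolved promise both come out of promise.then the same way, which is why the caller does not need to care which one it is.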

Important: if we wrap an instance's async creation method with util.callbackify, we then have to invoke the resulting function with .call, passing the instance as this.
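A small Node.js sketch of why .call is needed: util.callbackify forwards whatever this the callbackified function is invoked with, so a detached async method loses its instance. The UserInstance class and its AsyncCreate method are hypothetical stand-ins for an instance and its async creation method; a class is used so the method runs in strict mode and this really is undefined when it is not passed.

```javascript
const util = require('node:util');

// Hypothetical instance whose async "creation" method relies on `this`
class UserInstance {
  constructor() {
    this.prefix = 'created:';
  }

  async AsyncCreate(name) {
    return `${this.prefix}${name}`;
  }
}

const instance = new UserInstance();

// callbackify returns a new, unbound function...
const createWithCallback = util.callbackify(instance.AsyncCreate);

// ...so the instance has to be passed explicitly as `this` via .call
createWithCallback.call(instance, 'user', (err, result) => {
  if (err) throw err;
  console.log(result); // created:user
});

// called without .call, `this` is undefined and the method rejects
createWithCallback('user', (err) => {
  console.log(err instanceof TypeError); // true
});
```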

Also, starting from v0.3.8, the following non-enumerable props are available on the instance itself (instance.hasOwnProperty(__prop__)):

Arguments used for calling the instance creation constructor.

The definition of the instance type (__type__).

If the instance is nested, a reference to its parent (__parent__).

What you can craft from this instance according to its defined Type, the same as __type__.subtypes.

The collection of types where __type__ was defined (__collection__).

The namespace where __collection__ was defined.

instance.clone returns a cloned instance, so that instance !== instance.clone. Cloning replays the whole inheritance, including hook invocations and so on. Therefore the cloned instance is not the same as the instance, but both are made from the same .__parent__ instance.

Note: if you are cloning an instance that has an async Constructor, you should await it.

instance.fork() returns a forked instance. The behaviour is the same as for a cloned instance: both are made from the same .__parent__. But fork is a method, not a property, so you can pass other arguments to the constructor.

Note: if you are forking an instance that has an async Constructor, you should await it.

Let's assume you need a Directed Acyclic Graph. Then you have to be able to construct it somehow. Starting from v0.6.1, you can use fork.call or fork.apply to do this:

// the following are equivalent
A.fork.call(B, ...args);
A.fork.apply(B, args);
A.fork.bind(B)(...args);

Note: if you are using fork.call on an instance that has an async Constructor, you should await it.

The same as fork.call, but providing the instances directly.

Note: if you are merging instances where A.constructor is an async Constructor, you should await the result.
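The clone, fork, and fork.call semantics can be pictured with a self-contained sketch: each crafted instance remembers its parent, constructor, and arguments, so it can be rebuilt from the same parent (like clone and fork) or from another parent (like fork.call), which is what lets you grow a graph rather than a strict tree. The helpers craft(), clone(), fork(), and forkOnto() and the parentRef, ctorRef, and argsRef props are hypothetical; mnemonica's own props and methods are the ones described above.

```javascript
// Hypothetical helpers, NOT mnemonica's implementation.
// craft() builds an instance on top of `parent` and remembers how it was
// made in non-enumerable props, so it can be rebuilt later.
function craft(parent, constructor, ...args) {
  const instance = Object.create(parent);
  constructor.apply(instance, args);
  Object.defineProperties(instance, {
    parentRef: { value: parent },
    ctorRef: { value: constructor },
    argsRef: { value: args },
  });
  return instance;
}

// like instance.clone: same parent, same arguments, a brand-new object
const clone = (instance) =>
  craft(instance.parentRef, instance.ctorRef, ...instance.argsRef);

// like instance.fork(...args): same parent, new arguments
const fork = (instance, ...args) =>
  craft(instance.parentRef, instance.ctorRef, ...args);

// like A.fork.call(B, ...args): same constructor, another parent
const forkOnto = (instance, newParent, ...args) =>
  craft(newParent, instance.ctorRef, ...args);

function GraphNode(label) {
  this.label = label;
}

const rootA = { graph: 'A' };
const rootB = { graph: 'B' };

const a1 = craft(rootA, GraphNode, 'first');
const a1Clone = clone(a1);
const a1Fork = fork(a1, 'second');
const b1 = forkOnto(a1, rootB, 'first');

console.log(a1Clone !== a1, a1Clone.label); // true first
console.log(a1Fork.label, Object.getPrototypeOf(a1Fork) === rootA); // second true
console.log(b1.label, b1.graph); // first B
```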

Starting from v0.6.8, you can try importing ESM using the following scenario:

import { define, lookup } from 'mnemonica/module';

When calling define, we can provide some config data about how the instance should be created. Default values and descriptions are below:

define('SomeType', function () {}, {}, {
  // whether to use strict checking:
  // create sub-instances Only from the current type,
  // or also allow sub-types from further up the chain
  strictChain: true,
  // whether to use forced error checking:
  // make all inherited types errored
  // if there is an error somewhere in the chain,
  // and disallow instance construction
  // if there is an error in the prototype chain
  blockErrors: true,
  // whether to collect the stack
  // as a __stack__ prototype property
  // during instance creation
  submitStack: false,
  // await new Constructor()
  // must return a value
  // optional ./issues/106
  awaitReturn: true,
});

Also, you can override the default config options for a Types Collection or a Namespace. Keep in mind that TypesCollection options override namespace options, so if you override namespace options, previously created types collections will not be updated. For example, after doing the following, all types that have no config of their own will no longer block construction on errors:

import {
  defaultTypes,
  SymbolConfig
} from 'mnemonica';

defaultTypes[SymbolConfig].blockErrors = false;

So, now you can craft as many types as you wish, combine them, re-define them, and spend much more time playing with them:

- test : instances & arguments

- track : moments of creation

- check : if the order of creation is OK

- validate : everything, 4 example use sort of TS in runtime

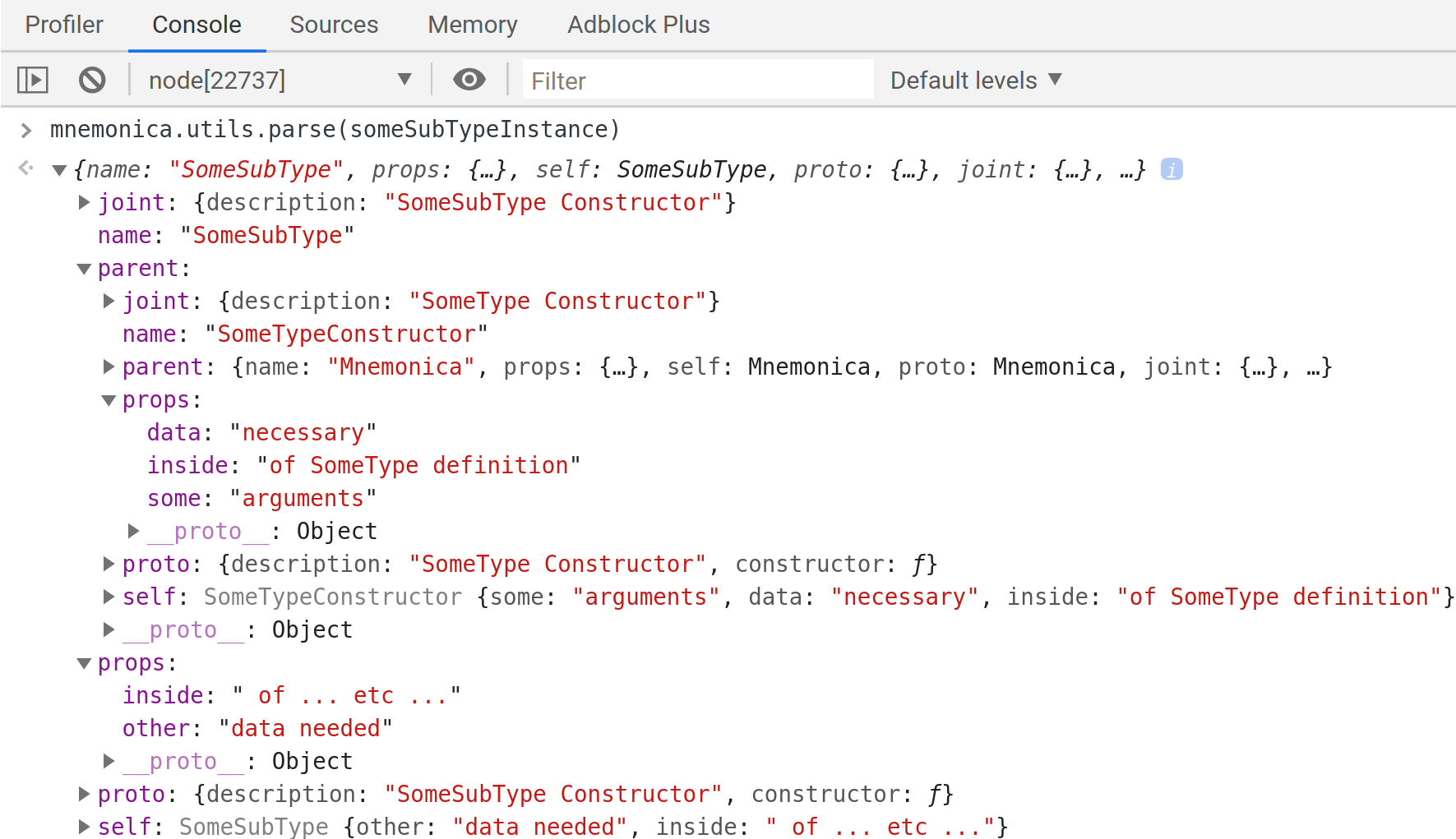

- and even .parse them using

mnemonica.utils.parse:

Good Luck!