
Tutorial 5: Congestion Control in Multi-tenant DC

This tutorial guides you through the use and evaluation of performance isolation policies for multiple Tenants in Datacenters. The experiment makes use of the iMinds testbed no. 1 (the Virtual Wall), which allows users to deploy experiments in an isolated network on real hardware (not simulations). Once swapped in, the experiment must be set up to run the IPCM with the bootstrapping configuration, which ultimately creates a DC-wide DIF. Policies are introduced at this level, leaving each Tenant free to arrange its own network as desired.

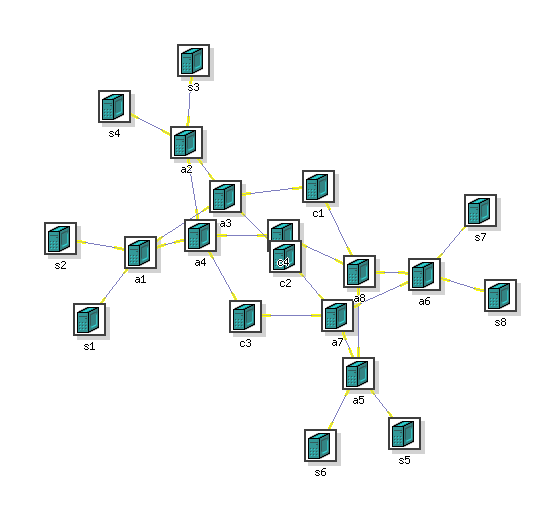

Picture 1. Configuration of the experiment network, organized as two layers of switches (nodes 'a') interconnected with core routers (nodes 'c'), which provide full bisection bandwidth to the servers (nodes 's').

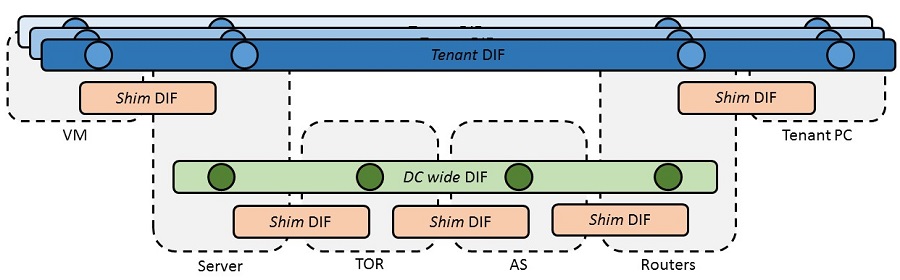

Picture 2. Configuration of the RINA layers within the DC. Multiple shim DIFs abstracting the Ethernet links provide connectivity to the DC-wide DIF, which then offers its services to the Tenants.

We assume that the machines swapped in for this scenario already have a working pristine-1.5 IRATI stack installed and tested. See the 'Getting started' tutorial for more information on how to clone, set up, compile and install the stack on a Linux machine.

In addition, the vWall allows you to create OS images of a whole machine, so experiments can be swapped out and in without reinstalling the software on the Linux distribution every time. I personally advise installing the stack, testing it to make sure that it works, and then creating a backup image that can be used to bootstrap all the other nodes as ready-to-use machines.

Using this testbed is not mandatory: you can use your own machines on your desk and still try this configuration. What is ultimately necessary is the ability to log in to each machine through SSH.

While describing the experiment I will use scripts that have been built to simplify all the operations. Some of these scripts are vWall-specific, since they query the testbed to obtain the information that is later used to craft the .dif files needed to run the experiment. If you use a system other than the Virtual Wall, you will need to adjust these files by hand, which will take some time. The other scripts are testbed-independent and can be used on any machine with SSH access.

The scripts are organized in two groups: one group (the remote scripts) must be shipped to every node to provide local support there, while the other (the local scripts) must reside on the controller machine, from which you issue commands to all the nodes in the network under your control.

Using the scripts

The steps needed to use the scripts are:

- Copy the local scripts into a folder on your machine. The local scripts must know where you saved the remote ones, so adjust their internal variables accordingly.

- Copy the remote scripts into a folder on each node of your testbed.

- Edit the `access.txt` file on your machine to match the IP addresses of the remote nodes; the syntax is `<node_name> <ip_address> <user_name>`. This allows the scripts to reach your machines.

- If the machines are not already configured for it, use the `ssh-copy-id` utility to allow access to your machines without typing the password every time. After doing this, run `./run.sh "uname -r"` to check that the configuration is correct; you should see the `uname` command being invoked on every node under your control.

If you have executed these steps correctly, you will be able to command your machines remotely with the ./run.sh [machine_name] "command" script, which optionally accepts a node name (as saved in access.txt) to execute the command on one machine only. If this works, you are ready for the next steps.
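The following is a minimal Python sketch of what a helper like ./run.sh has to do with access.txt, assuming one `<node_name> <ip_address> <user_name>` entry per line. Skipping blank lines and `#` comments is an assumption of this sketch, not necessarily of the real script, and the sketch only builds the `ssh` command lines rather than reproducing the script shipped with the tutorial. The node names, addresses and user are hypothetical.

```python
from typing import Dict, List, NamedTuple, Optional


class Node(NamedTuple):
    """One access.txt entry: <node_name> <ip_address> <user_name>."""
    name: str
    ip: str
    user: str


def parse_access(text: str) -> Dict[str, Node]:
    """Parse access.txt content into a dict keyed by node name."""
    nodes: Dict[str, Node] = {}
    for lineno, line in enumerate(text.splitlines(), start=1):
        line = line.strip()
        if not line or line.startswith("#"):  # assumption: comments are allowed
            continue
        fields = line.split()
        if len(fields) != 3:
            raise ValueError(f"access.txt:{lineno}: expected 3 fields, got {len(fields)}")
        node = Node(*fields)
        nodes[node.name] = node
    return nodes


def ssh_commands(nodes: Dict[str, Node], command: str,
                 target: Optional[str] = None) -> List[List[str]]:
    """Build one ssh argv per node, or only for `target` (like ./run.sh [machine_name] "command")."""
    selected = [nodes[target]] if target is not None else list(nodes.values())
    return [["ssh", f"{n.user}@{n.ip}", command] for n in selected]


if __name__ == "__main__":
    # Hypothetical nodes; pass each argv to subprocess.run() to actually execute it.
    demo = parse_access("s1 10.0.0.1 alice\na1 10.0.0.2 alice\n")
    for argv in ssh_commands(demo, "uname -r"):
        print(" ".join(argv))
```

Returning argv lists instead of shell strings keeps the remote command as a single argument, so quoting such as "uname -r" survives unchanged.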

Configuration on the vWall

If you go with the vWall configuration, you can use the net-resolution.sh <node_name> script, which queries a node and retrieves the configuration of your network. The results are saved in the intermediate file network.txt, which is used by the next steps.

NOT using the vWall

If you don't want to use the Virtual Wall testbed, you will need to adjust the network.txt text file with information about the network you are going to use. This is mainly a list of the interfaces and their configuration, which the configuration generation scripts later use to create the files the IPC Manager needs to run.

The syntax of the network.txt file is:

<node_name> <interface_name> <vlan_id> <ip_address>
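As an illustration of how a generation step can consume this format, here is a small Python sketch that groups the network.txt entries by node. The field order is taken from the syntax above; parsing the VLAN id as an integer, the helper names and the sample values are assumptions of the sketch.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class Interface(NamedTuple):
    """One network.txt entry: <node_name> <interface_name> <vlan_id> <ip_address>."""
    node: str
    name: str
    vlan: int
    ip: str


def parse_network(text: str) -> Dict[str, List[Interface]]:
    """Group the interfaces listed in network.txt by node name."""
    by_node: Dict[str, List[Interface]] = defaultdict(list)
    for lineno, line in enumerate(text.splitlines(), start=1):
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        if len(fields) != 4:
            raise ValueError(f"network.txt:{lineno}: expected 4 fields, got {len(fields)}")
        node, iface, vlan, ip = fields
        by_node[node].append(Interface(node, iface, int(vlan), ip))
    return dict(by_node)


if __name__ == "__main__":
    # Hypothetical entries: node s1 reaches VLANs 102 and 101.
    sample = "s1 eth61 102 10.1.2.1\ns1 eth62 101 10.1.1.1\n"
    for node, ifaces in parse_network(sample).items():
        print(node, [i.vlan for i in ifaces])
```

The per-node VLAN lists are exactly what a node needs to know to decide which shim DIFs it joins.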

Generating the IPCM configuration

This is done by the conf-generation.sh script, which reads the information in network.txt and uses it to create all the .conf and .dif files needed for the setup. The generator only works for a layer configuration made of multiple shims over Ethernet plus one large DIF connecting all of them, which is the configuration covered by this experiment. When it finishes, the directory you reserved for the configuration (see the CDIR variable at the beginning of the script) contains a set of .dif files for the shims and a .conf file for the IPCM of each node.

An example of the shim-over-ethernet files generated is:

```json
{
  "difType" : "shim-eth-vlan",
  "configParameters" : {
    "interface-name" : "eth61"
  }
}
```
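A minimal sketch, assuming the shim template needs only the two keys shown above, of how one such file could be produced per interface. The `<vlan>.<machine_name>.dif` file name follows the convention used by the IPCM configuration in this tutorial (for example 102.s1.dif); the helper name `shim_dif` and the pairing of eth61 with VLAN 102 are hypothetical, and this is not the code of conf-generation.sh.

```python
import json
from typing import Tuple


def shim_dif(node: str, interface: str, vlan: int) -> Tuple[str, str]:
    """Return (file name, JSON text) for one shim-eth-vlan DIF template.

    The file name follows the <vlan>.<machine_name>.dif convention, e.g. 102.s1.dif.
    """
    template = {
        "difType": "shim-eth-vlan",
        "configParameters": {"interface-name": interface},
    }
    return f"{vlan}.{node}.dif", json.dumps(template, indent=2)


if __name__ == "__main__":
    name, text = shim_dif("s1", "eth61", 102)
    print(name)
    print(text)
```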

An example of a generated IPCM configuration file is:

```json
{
  "configFileVersion" : "1.4.1",
  "localConfiguration" : {
    "installationPath" : "/pristine/userspace/bin",
    "libraryPath" : "/pristine/userspace/lib",
    "logPath" : "/pristine/userspace/log",
    "consoleSocket" : "/pristine/userspace/var/ipcm-console.sock",
    "pluginsPaths": [
      "/pristine/userspace/lib/rinad/ipcp",
      "/lib/modules/4.1.24/extra"
    ]
  },
  "ipcProcessesToCreate" : [
    {
      "type" : "shim-eth-vlan",
      "apName" : "s1.102",
      "apInstance" : "1",
      "difName" : "102"
    },
    {
      "type" : "shim-eth-vlan",
      "apName" : "s1.101",
      "apInstance" : "1",
      "difName" : "101"
    },
    {
      "type" : "normal-ipc",
      "apName" : "s1.dc",
      "apInstance" : "1",
      "difName" : "dc",
      "difsToRegisterAt" : [ "102", "101" ]
    }
  ],
  "difConfigurations" : [
    {
      "name" : "102",
      "template" : "102.s1.dif"
    },
    {
      "name" : "101",
      "template" : "101.s1.dif"
    },
    {
      "name" : "dc",
      "template" : "dc.dif"
    }
  ]
}
```
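The example above can be reproduced from a node name and its list of VLANs. The hypothetical Python helper `ipcm_conf` below is only a sketch of that mapping, not the generator itself; it assumes the `<node_name>.<dif_name>` IPCP naming, the /pristine/userspace prefix and the 4.1.24 module path from the example. The module path depends on the kernel you installed, so adjust it.

```python
import json
from typing import Any, Dict, List

PREFIX = "/pristine/userspace"  # installation prefix taken from the example above
MODULES = "/lib/modules/4.1.24/extra"  # kernel-specific: adjust to your kernel version


def ipcm_conf(node: str, vlans: List[int], dc_dif: str = "dc") -> Dict[str, Any]:
    """Build the IPCM configuration of `node`, naming IPCPs <node_name>.<dif_name>."""
    shims = [str(v) for v in vlans]  # shim DIF names are the VLAN ids
    ipcps: List[Dict[str, Any]] = [
        {"type": "shim-eth-vlan", "apName": f"{node}.{v}", "apInstance": "1", "difName": v}
        for v in shims
    ]
    # The DC-wide normal IPCP registers in every shim DIF of this node.
    ipcps.append({
        "type": "normal-ipc",
        "apName": f"{node}.{dc_dif}",
        "apInstance": "1",
        "difName": dc_dif,
        "difsToRegisterAt": shims,
    })
    difs = [{"name": v, "template": f"{v}.{node}.dif"} for v in shims]
    difs.append({"name": dc_dif, "template": f"{dc_dif}.dif"})
    return {
        "configFileVersion": "1.4.1",
        "localConfiguration": {
            "installationPath": f"{PREFIX}/bin",
            "libraryPath": f"{PREFIX}/lib",
            "logPath": f"{PREFIX}/log",
            "consoleSocket": f"{PREFIX}/var/ipcm-console.sock",
            "pluginsPaths": [f"{PREFIX}/lib/rinad/ipcp", MODULES],
        },
        "ipcProcessesToCreate": ipcps,
        "difConfigurations": difs,
    }


if __name__ == "__main__":
    print(json.dumps(ipcm_conf("s1", [102, 101]), indent=2))
```

Because every name is derived from the node and DIF names, changing the naming scheme in one place (as discussed in the naming notes) means changing it everywhere the names are referenced.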

I don't want to use the scripts

Then you will have to do all the changes by hand. :-)

There are a lot of useful tutorials that explain how to write a configuration file for a DIF or for the IPC Manager. Follow them to match your setup, and arm yourself with a lot of patience.

About naming

IPCP naming during configuration generation follows a predictable pattern: <node_name>.<dif_name> (for example s1.102 or s1.dc). These names are referenced throughout the configuration files, so if you need to change the scheme you will have to modify the configuration generation scripts and the dc.dif file.

Summary

After all these steps, you should have remote access to your nodes and the configuration for your experiment ready to be copied to them. What is missing is the DC DIF file, which you have to configure as needed to enable the right policies and the supported QoS. For this experiment you can use the dc.dif file in the local scripts folder by copying it into the same directory as all the other configuration files.

This DIF file is already set up to use the Congestion Control policies, along with ECMP routing. It defines two QoS cubes, reliable and unreliable: the unreliable one is configured to use the Congestion Control policies, while the reliable one keeps the default behavior. This means that, to use the CC policies, you must request an unreliable flow between the two hosts. You can change this behavior at any time by editing the QoS settings; the unreliable QoS cube is defined as follows:

```json
{
  "name" : "unreliablewithflowcontrol",
  "id" : 1,
  "partialDelivery" : false,
  "orderedDelivery" : false,
  "efcpPolicies" : {
    "dtpPolicySet" : {
      "name" : "default",
      "version" : "0"
    },
    "initialATimer" : 0,
    "dtcpPresent" : true,
    "dtcpConfiguration" : {
      "dtcpPolicySet" : {
        "name" : "cdrr",
        "version" : "1"
      },
      "rtxControl" : false,
      "flowControl" : true,
      "flowControlConfig" : {
        "rateBased" : true,
        "rateBasedConfig" : {
          "sendingRate" : 95000,
          "timePeriod" : 10
        },
        "windowBased" : false
      }
    }
  }
},
```

To use the ECN marking policy required by the CDRR flow control policy, set up your RMT as follows (note that I also selected a custom ECMP forwarding policy, pffb):

```json
"rmtConfiguration" : {
  "pffConfiguration" : {
    "policySet" : {
      "name" : "pffb",
      "version" : "1"
    }
  },
  "policySet" : {
    "name" : "ecn",
    "version" : "1"
  }
},
```

The next modification of the DIF profile adopts a custom flow allocation policy (fare), which allows applications to specify the Minimum Granted Bandwidth (MGB) required for their communication.

```json
"flowAllocatorConfiguration" : {
  "policySet" : {
    "name" : "fare",
    "version" : "1"
  }
},
```

Finally, to use the ECMP forwarding policy you also have to change the routing policy: instead of the classic Dijkstra, select the ECMPDijkstra algorithm, which is available among the default ones.

```json
{
  "name" : "routingAlgorithm",
  "value" : "ECMPDijkstra"
}]
```
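Before copying dc.dif to the nodes, it can be useful to check that all the changes above are in place. The Python sketch below assumes a dc.dif layout where the QoS cubes live in a `qosCubes` list, the RMT and flow allocator sections are top-level keys, and the routing parameters sit in `routingConfiguration.policySet.parameters`. These key paths are assumptions based on the fragments shown here, so verify them against your own file; the function names are hypothetical.

```python
from typing import Any, Dict, List


def policy_name(cfg: Dict[str, Any], *path: str) -> str:
    """Follow `path` through nested dicts and return the "name" found there ('' if absent)."""
    node: Any = cfg
    for key in path:
        node = node.get(key, {}) if isinstance(node, dict) else {}
    return node.get("name", "") if isinstance(node, dict) else ""


def check_dc_dif(dif: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the expected policies are set."""
    problems: List[str] = []
    # Assumed key paths, based on the fragments in this tutorial.
    expected = {
        ("rmtConfiguration", "policySet"): "ecn",
        ("rmtConfiguration", "pffConfiguration", "policySet"): "pffb",
        ("flowAllocatorConfiguration", "policySet"): "fare",
    }
    for path, name in expected.items():
        found = policy_name(dif, *path)
        if found != name:
            problems.append(f"{'.'.join(path)}: expected {name!r}, found {found!r}")

    # Assumed location of the routing policy parameters.
    routing = dif.get("routingConfiguration", {}).get("policySet", {})
    params = {p.get("name"): p.get("value") for p in routing.get("parameters", [])}
    if params.get("routingAlgorithm") != "ECMPDijkstra":
        problems.append("routingAlgorithm is not ECMPDijkstra")

    # At least one QoS cube should use the cdrr DTCP policy set.
    if not any(policy_name(q, "efcpPolicies", "dtcpConfiguration", "dtcpPolicySet") == "cdrr"
               for q in dif.get("qosCubes", [])):
        problems.append("no QoS cube uses the cdrr DTCP policy set")
    return problems
```

Load the file with `json.load` and print the returned list; an empty list means every expected policy was found at the assumed location.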

Summary

If you have followed the instructions correctly so far, you should have a set of .dif files used by the shim IPC Processes that abstract over Ethernet, and one dc.dif file that sets the behavior of the DC DIF. What is left is to copy all this information to the remote nodes using the ./conf-to-node.sh script. Since the script copies the folder containing these files to the remote nodes, remember to put your dc.dif file there as well.
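A quick pre-flight check can catch a forgotten dc.dif before running ./conf-to-node.sh. The Python sketch below (function names are hypothetical) reads every `*.conf` file in the configuration folder and reports any `difConfigurations` template that is not present in the same folder. It assumes, as in this tutorial, that the templates are referenced by bare file names and that the IPCM configuration files use the .conf extension.

```python
import json
from pathlib import Path
from typing import Dict, List


def missing_templates(files: Dict[str, str]) -> List[str]:
    """Given {file name: content}, list templates referenced by *.conf files but absent."""
    missing: List[str] = []
    for name in sorted(files):
        if not name.endswith(".conf"):
            continue
        conf = json.loads(files[name])
        for dif in conf.get("difConfigurations", []):
            template = dif.get("template")
            if template and template not in files:
                missing.append(f"{name}: {template}")
    return missing


def missing_in_folder(conf_dir: str) -> List[str]:
    """Read every regular file in `conf_dir` and run missing_templates on them."""
    folder = Path(conf_dir)
    files = {p.name: p.read_text() for p in folder.iterdir() if p.is_file()}
    return missing_templates(files)
```

Keeping the check itself as a pure function over file names and contents makes it easy to test without touching the disk; `missing_in_folder` is the thin wrapper you would call on the directory set in CDIR.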

Bootstrapping the system is now quite quick:

- Run `./prepare-interfaces.sh` to perform any operations the network interfaces need before the IPC Processes run on them. This prepares the Ethernet interfaces to work with the shim IPC Process.

- Run `./prepare-nodes.sh` to start the IPCM with the given configuration file. For shims the DIF file is named `<vlan>.<machine_name>.dif`, while for the DC DIF the file name is fixed to `dc.dif`.

- Run `./enroll-nodes.sh` to perform the necessary enrollment operations. Note that the enrollment orders are listed in the `enroll.txt` file in the local scripts folder. The syntax of this file is `<machine_name> <ipcp id> <dif_name> <supporting_dif_name> <target_ipcp_name> <target_ipcp_instance>`. If you kept the original IPCP names, you can use the file as it is; otherwise you will have to update the enrollment operations.
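The mapping from enroll.txt entries to enrollment operations can be sketched as follows. This Python sketch assumes one six-field entry per line, processed in file order, and that each entry corresponds to an IPCM console command of the form `enroll-to-dif <ipcp-id> <dif-name> <supporting-dif-name> <neighbor-name> <neighbor-instance>`. Check the console help of your IRATI version, since ./enroll-nodes.sh may issue the operation differently; the sample entry (node a1, IPCP id 3) is hypothetical.

```python
from typing import List, NamedTuple


class Enrollment(NamedTuple):
    """One enroll.txt entry, in the field order given by the tutorial."""
    machine: str
    ipcp_id: int
    dif: str
    supporting_dif: str
    target_name: str
    target_instance: str


def parse_enroll(text: str) -> List[Enrollment]:
    """Parse enroll.txt, keeping the file order (enrollments run in this order)."""
    orders: List[Enrollment] = []
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        if len(fields) != 6:
            raise ValueError(f"bad enroll.txt line: {line!r}")
        machine, ipcp, dif, sup, tname, tinst = fields
        orders.append(Enrollment(machine, int(ipcp), dif, sup, tname, tinst))
    return orders


def console_command(e: Enrollment) -> str:
    """Assumed IPCM console syntax for one enrollment (see the lead-in)."""
    return (f"enroll-to-dif {e.ipcp_id} {e.dif} {e.supporting_dif} "
            f"{e.target_name} {e.target_instance}")


if __name__ == "__main__":
    # Hypothetical entry: a1 enrolls its DC IPCP (id 3) to s1.dc through shim DIF 102.
    for order in parse_enroll("a1 3 dc 102 s1.dc 1\n"):
        print(order.machine, "->", console_command(order))
```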

When these three operations finish successfully, your experiment is enrolled and ready to go. Test it with one of the utilities offered by IRATI to make sure that communication has been established. If errors occur, investigate the IPCM log (saved by the scripts in the same folder where the IPCM binary is located) or the kern.log file to trace the error messages.

Again, if you decided to skip the provided scripts, open them and read the operations they perform, because you will need to do them yourself by hand. Since these scripts just invoke a set of standard operations on the system, performing them yourself should let you run the scenario without any problem... it will just take a lot of time.

You can use the tools provided with the IRATI stack to perform operations on this network, but only for Reliable flows; this is because of the specialized policies bound to the unreliable QoS we set up earlier. The Flow Allocation Rate Enforcement (FARE) FA policy translates the avgBandwidth field of the QoS into the Minimum Granted Bandwidth, and since this field is zero for the standard tools, your flow will not let any byte pass through.

To overcome this behavior you have two choices:

- Modify tools such as `rina-tgen` (the traffic generator) to accept an additional parameter that is put in the QoS average bandwidth field.

- Use the rping software provided by Create-Net, which has been crafted specifically for this setup. Invoke it without any arguments to display the help and see the available options.

Trivial tenant

To simulate a flow coming from a Tenant, you have to run a server and a client instance of the desired software.

To run a server instance of the rping software, for example, you should run:

./rping t1_srv 1 dc --listen

This creates an application named t1_srv on that node, which registers in the DC DIF and waits for incoming connections. What is left is to run the client instance that will connect to this server.

To run the client instance, which will also require a certain MGB, you need to run:

./rping t1_clt 1 dc t1_srv 1 1 30 125000

which runs a flood test (continuous transmission of data) for 30 seconds while requiring an MGB of 125,000 bytes for every time frame of the policy (in dc.dif the default is 10 ms, set by the timePeriod field in the rate-based flow control section of the QoS cube).
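Since the MGB passed to rping is expressed per time frame, it can help to convert it into a more familiar bit rate. Assuming the 10 ms timePeriod from dc.dif and decimal megabits (1 Mbit = 10^6 bits), 125,000 bytes every 10 ms is 12.5 MB/s, that is 100 Mbit/s. A small Python helper for both directions (the function names are hypothetical):

```python
def mgb_to_mbps(bytes_per_frame: float, frame_ms: float) -> float:
    """Convert an MGB given in bytes per time frame into Mbit/s (1 Mbit = 10**6 bits)."""
    frames_per_second = 1000.0 / frame_ms
    return bytes_per_frame * frames_per_second * 8 / 1e6


def mbps_to_mgb(mbps: float, frame_ms: float) -> int:
    """Bytes per time frame needed to guarantee `mbps` Mbit/s."""
    return round(mbps * 1e6 / 8 * frame_ms / 1000.0)


if __name__ == "__main__":
    print(mgb_to_mbps(125000, 10))  # 100.0
    print(mbps_to_mgb(100, 10))     # 125000
```

If you change timePeriod in dc.dif, the same MGB value corresponds to a different bit rate, so recompute the client argument accordingly.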

What happens now is that the client reaches full speed, because it is not competing with any other tenant. If you run another similar client instance (with a different MGB) under a different application name but targeting the same server, you will notice that the flows begin to split the resources, while never going below the requested MGB. This happens because both flows try to go at full rate at the beginning and are pushed back by congestion at one of the switches on the path to the server. The congestion generates ECN-marked PDUs, which are received by the flow at the destination node; the flow then lowers its rate while maintaining the Minimum Granted Bandwidth.
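The dynamics described above can be illustrated with a toy model. The Python sketch below is not the CDRR policy: it swaps in a plain AIMD rule (multiplicative decrease on an ECN mark, additive increase otherwise) with each flow's rate clamped at its MGB, and it assumes every flow is marked whenever the sum of rates exceeds the link capacity. Units, parameters and the `simulate` name are arbitrary illustrations.

```python
from typing import Dict, List


def simulate(capacity: float, mgb: Dict[str, float], frames: int,
             increase: float = 1.0, decrease: float = 0.5) -> List[Dict[str, float]]:
    """Toy AIMD-with-floor model of flows sharing one congested switch port.

    Every flow starts at full rate. In each time frame, if the total rate exceeds
    `capacity`, all flows are "ECN-marked" and multiply their rate by `decrease`,
    but never below their MGB; otherwise each flow adds `increase` to its rate.
    """
    rates = {flow: capacity for flow in mgb}  # all flows start at full rate
    history: List[Dict[str, float]] = []
    for _ in range(frames):
        congested = sum(rates.values()) > capacity
        for flow in rates:
            if congested:
                rates[flow] = max(mgb[flow], rates[flow] * decrease)
            else:
                rates[flow] += increase
        history.append(dict(rates))
    return history


if __name__ == "__main__":
    for frame, state in enumerate(simulate(100.0, {"t1": 30.0, "t2": 10.0}, 6), start=1):
        print(frame, state)
```

With two flows sharing a capacity of 100 and MGBs of 30 and 10, both start at full rate, are pushed back after the first congested frame, and neither ever drops below its MGB.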
