
unlock notifies _all_ waiting tasks

#56182

Comments

Simple example from the original issue report in July 2023 (would have been an older Julia version):

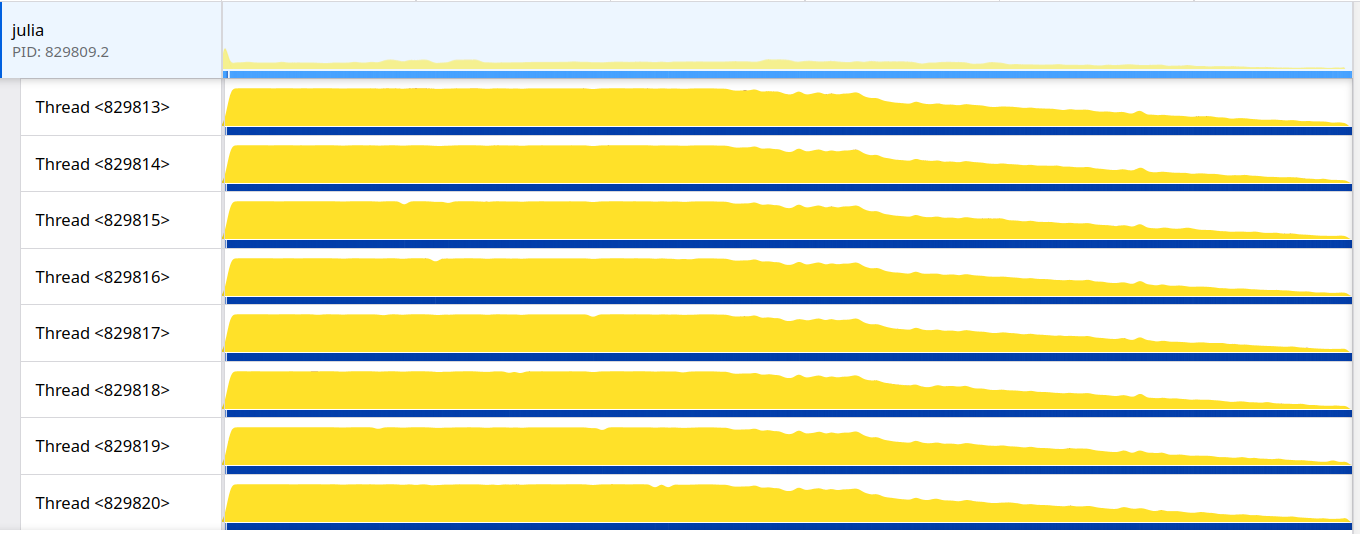

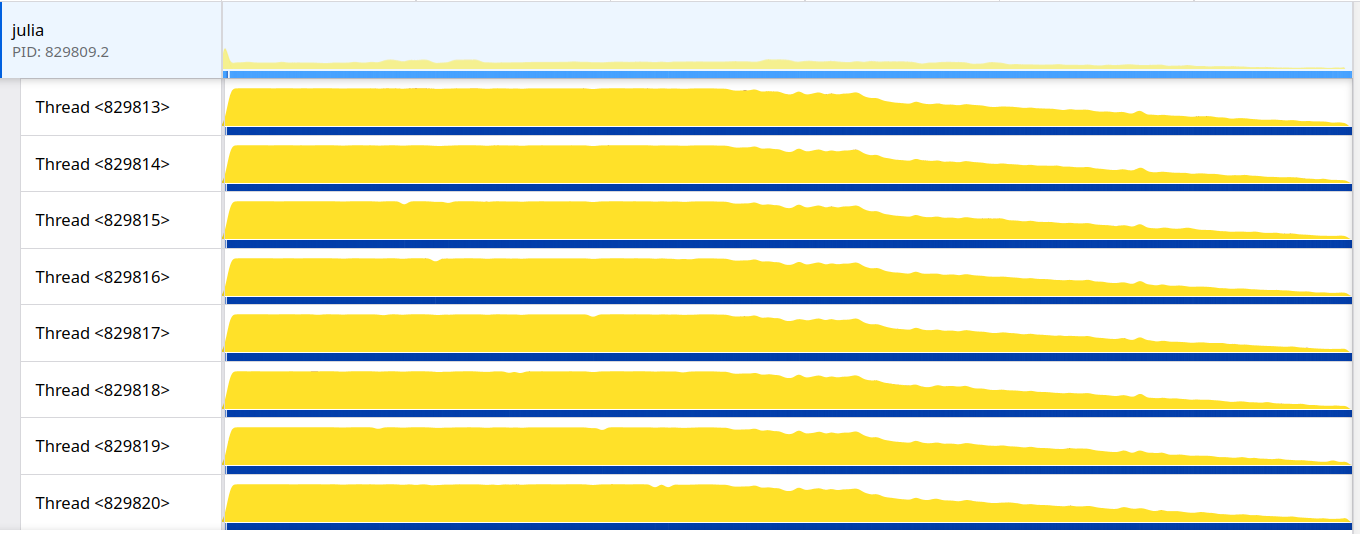

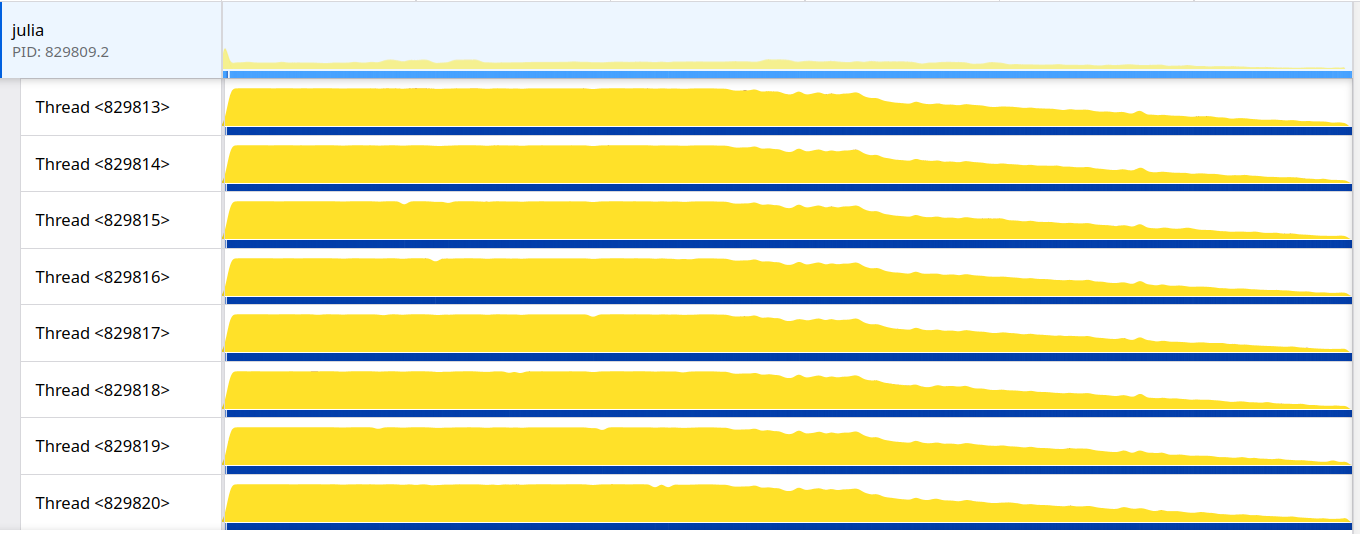

…56814)

I propose a change in the implementation of the `ReentrantLock` to improve its overall throughput for short critical sections and fix the quadratic wake-up behavior where each unlock schedules **all** waiting tasks on the lock's wait queue.

This implementation follows the same principles of the `Mutex` in the [parking_lot](https://github.com/Amanieu/parking_lot/tree/master) Rust crate which is based on the Webkit [WTF::ParkingLot](https://webkit.org/blog/6161/locking-in-webkit/) class. Only the basic working principle is implemented here, further improvements such as eventual fairness will be proposed separately.

The gist of the change is that we add one extra state to the lock, essentially going from:

```
0x0 => The lock is not locked
0x1 => The lock is locked by exactly one task. No other task is waiting for it.
0x2 => The lock is locked and some other task tried to lock but failed (conflict)
```

To:

```
# PARKED_BIT | LOCKED_BIT | Description
#     0      |     0      | The lock is not locked, nor is anyone waiting for it.
# -----------+------------+------------------------------------------------------------------
#     0      |     1      | The lock is locked by exactly one task. No other task is
#            |            | waiting for it.
# -----------+------------+------------------------------------------------------------------
#     1      |     0      | The lock is not locked. One or more tasks are parked.
# -----------+------------+------------------------------------------------------------------
#     1      |     1      | The lock is locked by exactly one task. One or more tasks are
#            |            | parked waiting for the lock to become available.
#            |            | In this state, PARKED_BIT is only ever cleared when the cond_wait lock
#            |            | is held (i.e. on unlock). This ensures that
#            |            | we never end up in a situation where there are parked tasks but
#            |            | PARKED_BIT is not set (which would result in those tasks
#            |            | potentially never getting woken up).
```

In the current implementation we must schedule all tasks to cause a conflict (state 0x2) because on unlock we only notify any task if the lock is in the conflict state. This behavior means that with high contention and a short critical section the tasks will be effectively spinning in the scheduler queue.

With the extra state the proposed implementation has enough information to know if there are other tasks to be notified or not, which means we can always notify one task at a time while preserving the optimized path of not notifying if there are no tasks waiting. To improve throughput for short critical sections we also introduce a bounded amount of spinning before attempting to park.

### Results

Not spinning on the scheduler queue greatly reduces the CPU utilization of the following example:

```julia
function example()
    lock = ReentrantLock()
    @sync begin
        for i in 1:10000
            Threads.@spawn begin
                @lock lock begin
                    sleep(0.001)
                end
            end
        end
    end
end

@time example()
```

Current:

```
28.890623 seconds (101.65 k allocations: 7.646 MiB, 0.25% compilation time)
```

Proposed:

```
22.806669 seconds (101.65 k allocations: 7.814 MiB, 0.35% compilation time)
```

In a micro-benchmark where 8 threads contend for a single lock with a very short critical section we see a ~2x improvement.

Current:

```
8-element Vector{Int64}:
 6258688
 5373952
 6651904
 6389760
 6586368
 3899392
 5177344
 5505024

Total iterations: 45842432
```

Proposed:

```
8-element Vector{Int64}:
 12320768
 12976128
 10354688
 12845056
 7503872
 13598720
 13860864
 11993088

Total iterations: 95453184
```

~~In the uncontended scenario the extra bookkeeping causes a 10% throughput reduction:~~

EDIT: I reverted _trylock to the simple case to recover the uncontended throughput and now both implementations are on the same ballpark (without hurting the above numbers).

In the uncontended scenario:

Current:

```
Total iterations: 236748800
```

Proposed:

```
Total iterations: 237699072
```

Closes #56182
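The unlock decision described in the commit message can be sketched as a small pure function. This is only a toy model under stated assumptions: the bit values, the name `unlock_step`, and the `nparked` argument (standing in for the length of the wait queue) are hypothetical and are not taken from the code in Base.

```julia
# Hypothetical model of the two-bit lock state from the table above.
# Bit values are assumed for illustration; this is not the code in Base.
const LOCKED_BIT = 0x01
const PARKED_BIT = 0x02

# Decide what an unlock does in this model.
# `nparked` stands in for the number of tasks currently parked on the lock.
# Returns (new_state, number_of_tasks_to_notify).
function unlock_step(state::UInt8, nparked::Integer)
    @assert (state & LOCKED_BIT) != 0 "unlock called on an unlocked lock"
    if (state & PARKED_BIT) == 0
        # Fast path: nobody is parked, so there is nothing to notify.
        return (0x00, 0)
    end
    # Slow path: wake exactly one task and keep PARKED_BIT set
    # only if other tasks remain parked afterwards.
    new_state = nparked > 1 ? PARKED_BIT : 0x00
    return (new_state, 1)
end

# Walk through the three interesting cases.
println(unlock_step(LOCKED_BIT, 0))               # locked, no waiters
println(unlock_step(LOCKED_BIT | PARKED_BIT, 3))  # locked, three parked tasks
println(unlock_step(LOCKED_BIT | PARKED_BIT, 1))  # locked, one parked task
```

In this model an unlock never notifies more than one task, and the uncontended path does no notification at all, which are the two properties the commit message emphasizes.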


`unlock(rl::ReentrantLock)` does a `notify(cond)` which wakes all tasks waiting on the lock. As this is a lock, only one of these tasks can succeed, so it is unnecessary to schedule all of them. When there are a large number of tasks, the current approach wastes CPU time and can cause a quadratic amount of allocations for waitlist nodes.
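To make the quadratic cost concrete, here is a back-of-the-envelope model rather than a measurement of the real scheduler. It assumes `n` tasks are already waiting, that each unlock lets exactly one of them take the lock, and that under wake-all every task that loses the race goes back to waiting. The function names are hypothetical.

```julia
# Toy model, not Base's implementation: count how many task wake-ups are
# scheduled while `n` waiting tasks acquire the lock one after another.

# Wake-all: each unlock wakes every task still waiting; one wins, the rest re-wait.
function wakeups_notify_all(n::Integer)
    total = 0
    waiting = n
    while waiting > 0
        total += waiting   # every remaining waiter is scheduled
        waiting -= 1       # exactly one of them gets the lock
    end
    return total
end

# Wake-one: each unlock wakes a single waiter, which then takes the lock.
wakeups_notify_one(n::Integer) = n

for n in (10, 100, 10_000)
    println("n = ", n,
            ": wake-all = ", wakeups_notify_all(n),
            ", wake-one = ", wakeups_notify_one(n))
end
```

Under these assumptions wake-all schedules n(n+1)/2 wake-ups in total, while wake-one schedules n.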

It isn't straightforward to fix this by changing the `notify` call to set `all=false`: `unlock` will only call `notify` if `ReentrantLock.havelock` is `0x02` (see here), and that only happens after the first `notify` if all waiting tasks are woken up to race for the lock again (see here).

Broken out from #50425 as this is a separate issue.
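For reference, the `all` keyword of `notify` can be tried in isolation. The sketch below uses a plain `Base.Condition` with `@async` tasks, chosen here purely for illustration, so it does not exercise `ReentrantLock` or its `havelock` state. The helper name `count_woken` and its `wake_all` keyword are hypothetical.

```julia
# Minimal sketch: compare notify(c; all=false) with the default all=true.
# Uses Base.Condition and @async tasks for illustration only; this does not
# touch ReentrantLock internals.
function count_woken(nwaiters::Integer; wake_all::Bool)
    c = Condition()
    tasks = [@async(wait(c)) for _ in 1:nwaiters]
    # Yield until every task has started; each one then blocks in wait(c).
    while !all(istaskstarted, tasks)
        yield()
    end
    woken = notify(c; all=wake_all)  # notify returns the number of tasks woken
    notify(c)                        # release any remaining waiters
    foreach(wait, tasks)             # let every task finish before returning
    return woken
end

println("all=false woke ", count_woken(3; wake_all=false), " task(s)")
println("all=true  woke ", count_woken(3; wake_all=true), " task(s)")
```

This only shows what the keyword does on a bare condition. As the paragraph above explains, flipping it inside `unlock` is not enough on its own, because of how the `havelock` state gates the `notify` call.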
