Commit

[Hackathon 5th No.83] PaddleMIX ppdiffusers models module: feature upgrade to sync with HF (#322)

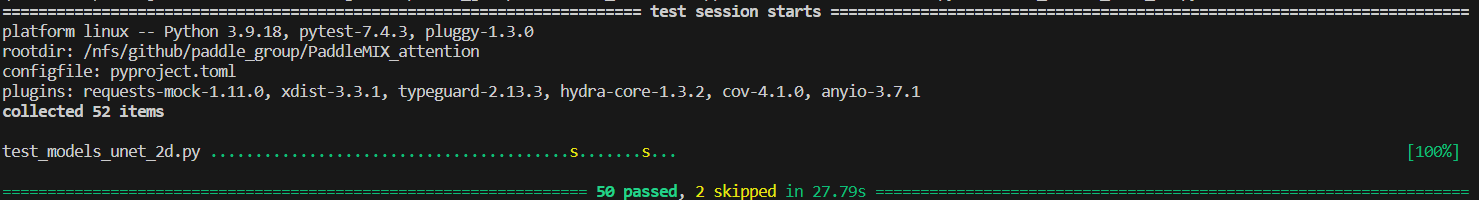

PaddleMIX ppdiffusers models module: feature upgrade to sync with HF

https://github.com/PaddlePaddle/community/blob/master/hackathon/hackathon_5th/%E3%80%90PaddlePaddle%20Hackathon%205th%E3%80%91%E5%BC%80%E6%BA%90%E8%B4%A1%E7%8C%AE%E4%B8%AA%E4%BA%BA%E6%8C%91%E6%88%98%E8%B5%9B%E5%A5%97%E4%BB%B6%E5%BC%80%E5%8F%91%E4%BB%BB%E5%8A%A1%E5%90%88%E9%9B%86.md#no83paddlemix-ppdiffusers-models%E6%A8%A1%E5%9D%97%E5%8A%9F%E8%83%BD%E5%8D%87%E7%BA%A7%E5%90%8C%E6%AD%A5hf

#259 #260 #262 #264 #265

Upgrade models:

- Add scale.
- Add get_processor.
- The LoRA classes are being phased out in diffusers; use lora_layer instead.
- The error in some tests has increased; the code before this change shows the same error when tested.
- Add the tests/lora directory.
- The Flax models are not used with Paddle, so they were not migrated.

test_attention_processor.py  test_models_unet_2d.py  test_lora_layers.py  test_models_unet_motion.py
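The scale and lora_layer items in the description above can be illustrated with a small sketch. It assumes the general pattern in which a base linear layer optionally carries a low-rank adapter and the forward pass accepts a scale factor that weights the adapter's contribution. The class names `LoRALayer` and `LoRACompatibleLinearSketch` are hypothetical and written in pure Python for illustration; this is not the ppdiffusers implementation from this commit.

```python
from typing import List, Optional

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALayer:
    """Hypothetical low-rank adapter computing up(down(x))."""

    def __init__(self, down: Matrix, up: Matrix) -> None:
        self.down = down  # shape (rank, in_features)
        self.up = up      # shape (out_features, rank)

    def __call__(self, x: Vector) -> Vector:
        return matvec(self.up, matvec(self.down, x))


class LoRACompatibleLinearSketch:
    """Hypothetical linear layer with an optional attached adapter."""

    def __init__(self, weight: Matrix) -> None:
        self.weight = weight  # shape (out_features, in_features)
        self.lora_layer: Optional[LoRALayer] = None

    def set_lora_layer(self, lora_layer: Optional[LoRALayer]) -> None:
        # Attach (or detach, with None) the adapter instead of
        # swapping the whole layer for a dedicated LoRA class.
        self.lora_layer = lora_layer

    def forward(self, x: Vector, scale: float = 1.0) -> Vector:
        base = matvec(self.weight, x)
        if self.lora_layer is None:
            return base
        delta = self.lora_layer(x)
        # scale weights the adapter output before it is added to the base output.
        return [b + scale * d for b, d in zip(base, delta)]


layer = LoRACompatibleLinearSketch([[1.0, 0.0], [0.0, 1.0]])
x = [1.0, 2.0]
without_lora = layer.forward(x)

layer.set_lora_layer(LoRALayer(down=[[1.0, 1.0]], up=[[1.0], [0.0]]))
with_lora = layer.forward(x, scale=0.5)
print(without_lora, with_lora)
```

With no adapter attached, the layer behaves like a plain linear layer; once an adapter is set, scale=0.0 reproduces the base output and larger values blend in more of the adapter.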

Showing 46 changed files with 8,799 additions and 2,365 deletions.
