
Upgrade attention-related code in PaddleMIX ppdiffusers #262

Comments


In ppdiffusers, the GEGLU module in attention.py is missing:
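For reference, GEGLU is the gated-GELU feed-forward projection used in the attention blocks. In diffusers it applies one linear layer that outputs twice the target width, splits the result into a hidden half and a gate half, and returns hidden * gelu(gate). The sketch below reproduces only that computation in plain Python, assuming lists of floats in place of Paddle tensors. The helper names gelu, linear, and geglu are hypothetical, and the sketch omits the LoRA scale argument that recent diffusers versions pass to the projection.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def gelu(x: float) -> float:
    # Exact (erf-based) GELU, i.e. the non-approximate default mode
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def linear(x: Vector, weight: Matrix, bias: Vector) -> Vector:
    # weight has shape [out_features][in_features]; one dot product per output row
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def geglu(x: Vector, weight: Matrix, bias: Vector) -> Vector:
    # Hypothetical helper: a single projection to 2 * dim_out features ...
    projected = linear(x, weight, bias)
    half = len(projected) // 2
    # ... split into a "hidden" half and a "gate" half (like chunk(2, axis=-1))
    hidden, gate = projected[:half], projected[half:]
    # Output is hidden * GELU(gate), elementwise, with width dim_out
    return [h * gelu(g) for h, g in zip(hidden, gate)]


if __name__ == "__main__":
    # dim_in = 2, dim_out = 2, so the projection weight has 4 rows
    w = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
    print(geglu([1.0, 2.0], w, [0.0] * 4))
```

Because the gate half passes through GELU, a strongly negative gate suppresses the matching hidden feature, while a large positive gate lets it through almost unchanged.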


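There seems to be no unit test for models/attention.py.

Not needed.

If the PyTorch diffusers repository has this unit test, port it; if it does not, there is no need to add one.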


Do the PyTorch 2.0 classes in diffusers' attention_processor.py need to be implemented in ppdiffusers? For example:


Without the 2_0 classes, the newly added code below triggers warnings such as "AttnProcessor2_0" is not defined:

```python
from typing import Union

ADDED_KV_ATTENTION_PROCESSORS = (
    AttnAddedKVProcessor,
    SlicedAttnAddedKVProcessor,
    AttnAddedKVProcessor2_0,
    XFormersAttnAddedKVProcessor,
    LoRAAttnAddedKVProcessor,
)

CROSS_ATTENTION_PROCESSORS = (
    AttnProcessor,
    AttnProcessor2_0,
    XFormersAttnProcessor,
    SlicedAttnProcessor,
    LoRAAttnProcessor,
    LoRAAttnProcessor2_0,
    LoRAXFormersAttnProcessor,
)

AttentionProcessor = Union[
    AttnProcessor,
    AttnProcessor2_0,
    XFormersAttnProcessor,
    SlicedAttnProcessor,
    AttnAddedKVProcessor,
    SlicedAttnAddedKVProcessor,
    AttnAddedKVProcessor2_0,
    XFormersAttnAddedKVProcessor,
    CustomDiffusionAttnProcessor,
    CustomDiffusionXFormersAttnProcessor,
    # deprecated
    LoRAAttnProcessor,
    LoRAAttnProcessor2_0,
    LoRAXFormersAttnProcessor,
    LoRAAttnAddedKVProcessor,
]
```

If the functionality is the same, they are not needed; if the 2.0 version adds new functionality, they are needed.
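For context on this answer: in diffusers, AttnProcessor and AttnProcessor2_0 compute the same scaled dot-product attention, softmax(Q K^T / sqrt(d)) V. The 2_0 variant differs in calling PyTorch 2.0's fused F.scaled_dot_product_attention instead of materializing the attention scores explicitly. The sketch below shows that shared math for a single head with no mask, using plain lists in place of tensors; the function names are hypothetical and this is not either library's implementation.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    # q: [n_queries][d], k: [n_keys][d], v: [n_keys][d_v]
    d = len(q[0])
    scale = 1.0 / math.sqrt(d)
    out = []
    for qi in q:
        # Scaled similarity of this query with every key
        scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]
        weights = softmax(scores)
        # Attention-weighted average of the value rows
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


if __name__ == "__main__":
    print(scaled_dot_product_attention([[0.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], [[2.0, 0.0], [0.0, 2.0]]))
```

Since both processors evaluate this same formula, a fused kernel mainly changes speed and memory use, which is why the answer hinges on whether the 2.0 class adds functionality rather than on the computation itself.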

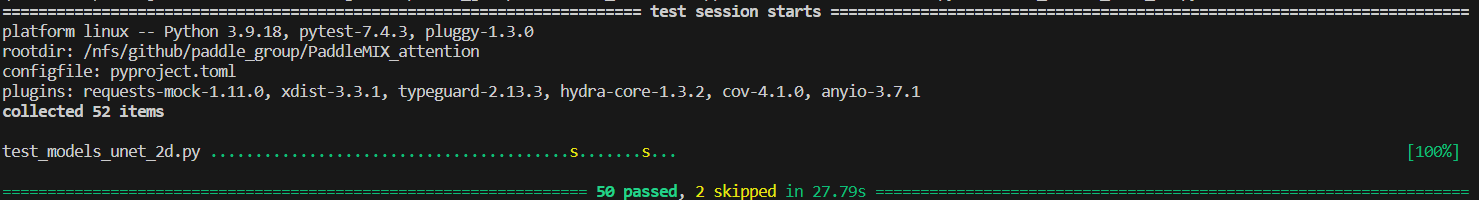

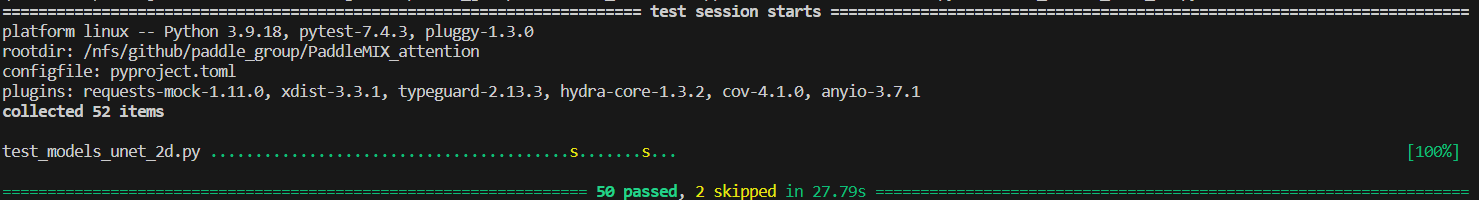

PaddleMIX ppdiffusers: upgrade the models module to sync with HF https://github.com/PaddlePaddle/community/blob/master/hackathon/hackathon_5th/%E3%80%90PaddlePaddle%20Hackathon%205th%E3%80%91%E5%BC%80%E6%BA%90%E8%B4%A1%E7%8C%AE%E4%B8%AA%E4%BA%BA%E6%8C%91%E6%88%98%E8%B5%9B%E5%A5%97%E4%BB%B6%E5%BC%80%E5%8F%91%E4%BB%BB%E5%8A%A1%E5%90%88%E9%9B%86.md#no83paddlemix-ppdiffusers-models%E6%A8%A1%E5%9D%97%E5%8A%9F%E8%83%BD%E5%8D%87%E7%BA%A7%E5%90%8C%E6%AD%A5hf #259 #260 #262 #264 #265

- Upgrade models
- Add scale
- Add get_processor
- In diffusers, the LoRA classes are being phased out in favor of lora_layer
- Numerical error increased in some tests; the code before this change shows the same error under test
- Add the tests/lora directory
- Flax models are not used by Paddle, so they were not ported
- test_attention_processor.py, test_models_unet_2d.py, test_lora_layers.py, test_models_unet_motion.py
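The "lora_layer" and "scale" items refer to a pattern in recent diffusers where a linear layer optionally holds a low-rank adapter and adds scale times its output to the base projection, instead of relying on separate LoRA processor classes. The sketch below illustrates that pattern in plain Python, loosely modeled on diffusers' LoRACompatibleLinear and LoRALinearLayer; the class names LoRALinearLayerSketch and LoRACompatibleLinearSketch, and the list-based matrices, are hypothetical stand-ins rather than the ppdiffusers API.

```python
from typing import List, Optional

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, x: Vector) -> Vector:
    # Plain matrix-vector product; m has shape [rows][len(x)]
    return [sum(a * b for a, b in zip(row, x)) for row in m]


class LoRALinearLayerSketch:
    """Hypothetical low-rank update up(down(x)) with no biases."""

    def __init__(self, down: Matrix, up: Matrix, network_alpha: Optional[float] = None):
        self.down = down  # [rank][in_features]
        self.up = up  # [out_features][rank]
        self.rank = len(down)
        self.network_alpha = network_alpha

    def __call__(self, x: Vector) -> Vector:
        out = matvec(self.up, matvec(self.down, x))
        if self.network_alpha is not None:
            # Rescale by alpha / rank when an alpha is given
            out = [v * self.network_alpha / self.rank for v in out]
        return out


class LoRACompatibleLinearSketch:
    """Hypothetical linear layer with an optional attached lora_layer."""

    def __init__(self, weight: Matrix, bias: Vector):
        self.weight = weight  # [out_features][in_features]
        self.bias = bias
        self.lora_layer: Optional[LoRALinearLayerSketch] = None

    def set_lora_layer(self, lora_layer: Optional[LoRALinearLayerSketch]) -> None:
        self.lora_layer = lora_layer

    def forward(self, x: Vector, scale: float = 1.0) -> Vector:
        base = [v + b for v, b in zip(matvec(self.weight, x), self.bias)]
        if self.lora_layer is None:
            # No adapter attached: behaves exactly like a plain linear layer
            return base
        delta = self.lora_layer(x)
        # scale controls how strongly the adapter contributes
        return [o + scale * d for o, d in zip(base, delta)]
```

With scale set to 0 the adapted layer reproduces the base layer's output, which makes a convenient sanity check when comparing outputs before and after attaching LoRA weights.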


Task completed by @co63oc; closing the issue.


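Upgrade attention-related code in PaddleMIX ppdiffusers

Task description

Task background

Steps to complete

Deliverables:

Task update:

For now, please upgrade according to the latest stable diffusers release, 0.23.1.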
