
Deploying a Docker-based Geoserver cluster solution

Here I document the steps I took, along with the issues I encountered, while deploying a Geoserver cluster with Docker.

At minimum, our Docker-based Geoserver deployment needs three main elements:

- A disk volume for the data and the relevant scripts for daily updates.

- A PostGIS server, either Docker-based or standalone. At present our AWS deployment uses a dedicated RDS instance.

- A Docker container running Geoserver (2.24.4 at the time of this writing).

The deployment steps are:

- Create an EBS volume and copy all current data onto it.

- Create a VM and install docker.io.

- Mount this volume on /mnt/cephfs (the mount point is a holdover from when we used this setup on the Plume cluster at the Firelab).

- Deploy a Postgres server with the PostGIS extension (via a container or an RDS instance).

- Load the wfas database onto the Postgis server.

- Deploy a single-node Geoserver instance via a Docker container. Example Docker Compose file, using an image built with the WFAS extensions:

```yaml
version: "3.7"

services:
  geoserver_adm:
    image: wfas/wfas-repo:geoserver-2.24.4-alpine
    # "broker" is the ActiveMQ service of the cluster stack (not shown here).
    # depends_on is ignored by `docker stack deploy` in swarm mode.
    depends_on:
      - broker
    logging:
      driver: "json-file"
      options:
        max-size: "10m"
    ports:
      - target: 8080
        published: 8081
        protocol: tcp
        mode: host
    volumes:
      - type: bind
        source: /mnt/cephfs/geoserver/data_dir
        target: /opt/geoserver/data_dir
      - type: bind
        source: /mnt/cephfs
        target: /mnt/cephfs
    environment:
      - CLUSTER_CONFIG_DIR=/opt/geoserver/data_dir/cluster/master
      - GEOSERVER_LOG_LOCATION=/opt/geoserver/logs/geoserver.log
      - COOKIE=JSESSIONID prefix
      - GWC_DISKQUOTA_DISABLED="true"
      - GWC_METASTORE_DISABLED="true"
      - instanceName=master
      - POSTGRES_ADDR=
      - POSTGRES_PORT=
      - DBNAME=
      - PROXY_NAME=
      - PROXY_HTTPS_PORT=
    deploy:
      restart_policy:
        condition: any
      mode: replicated
      replicas: 1
      placement:
        constraints: [node.hostname==]
      update_config:
        delay: 2s
```
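Several environment values in the compose file are left blank (POSTGRES_ADDR, DBNAME, PROXY_NAME and so on). A check along these lines could list any that are still empty before deploying. This is a sketch that assumes the list form of `environment:` shown above; the function name `find_empty_env` is hypothetical.

```sh
#!/bin/sh
# Sketch: list "- KEY=" entries with an empty value in a compose file.
# Assumes the list form of `environment:` used in the example above.
# Prints one variable name per line; returns 1 if any are empty, 0 otherwise.
find_empty_env() {
    compose_file="$1"
    # Match lines such as "      - DBNAME=" (nothing but spaces after "=").
    empty=$(sed -n 's/^[[:space:]]*-[[:space:]]*\([A-Za-z_][A-Za-z0-9_]*\)=[[:space:]]*$/\1/p' "$compose_file")
    if [ -n "$empty" ]; then
        printf '%s\n' "$empty"
        return 1
    fi
    return 0
}
```

For example, `find_empty_env docker-geoserver.stack.yml || echo "fill these in first"`. Entries with a value, such as `COOKIE=JSESSIONID prefix`, are not reported.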

A Docker swarm is based on a manager/worker node structure, where the nodes are either physical machines or virtual instances.

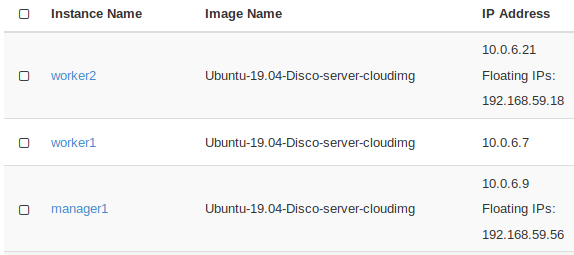

- I created 3 instances on the Plume OpenStack cluster, using the Ubuntu 19.04 (Disco Dingo) image.

- Next, I installed Docker on each of the nodes:

```sh
sudo apt update
sudo apt install docker.io
```

- Then I initialized the swarm on the manager node:

```sh
sudo docker swarm init --advertise-addr <MANAGER-IP>
```

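- Added the worker nodes by running the join command on each worker (`sudo docker swarm join-token worker` on the manager prints the full command, including the token):

```sh
sudo docker swarm join --token <WORKER-TOKEN> <MANAGER-IP>:2377
```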

- Created a local image registry:

```sh
sudo docker service create --name registry --publish 5000:5000 registry:2
```
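With more than a couple of workers, the join step can be scripted from the manager. The sketch below assumes SSH access and passwordless sudo on each worker (the worker hostnames are hypothetical). It uses `docker swarm join-token -q worker`, which prints only the worker token, and 2377, Docker's default swarm management port.

```sh
#!/bin/sh
# Sketch: join each worker to the swarm over SSH (hostnames are hypothetical).
# The token comes from SWARM_TOKEN if set, otherwise from the manager via
# `docker swarm join-token -q worker`, which prints only the token.
# Set DRY_RUN=1 to print the ssh commands instead of running them.

# Print the join command a worker must run; 2377 is the default swarm port.
join_command() {
    manager_ip="$1"
    token="${SWARM_TOKEN:-}"
    if [ -z "$token" ]; then
        token=$(sudo docker swarm join-token -q worker) || return 1
    fi
    printf 'sudo docker swarm join --token %s %s:2377\n' "$token" "$manager_ip"
}

# Usage: join_workers MANAGER_IP WORKER_HOST...
join_workers() {
    manager_ip="$1"
    shift
    cmd=$(join_command "$manager_ip") || return 1
    for worker in "$@"; do
        if [ -n "${DRY_RUN:-}" ]; then
            printf 'ssh %s %s\n' "$worker" "$cmd"
        else
            ssh "$worker" "$cmd" || return 1
        fi
    done
}
```

Running `DRY_RUN=1 join_workers <MANAGER-IP> node2 node3` first shows exactly what would be executed on each worker.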

I based this stack on the ActiveMQ Dockerfile from Gabriel Roldan's 2019 FOSS4G presentation and on the kartoza/geoserver Docker image.

- Some customizations were necessary, hence the need for the local registry created above. The most notable were:

- Modifying the path in the GEOSERVER_DATA_DIR environment variable to match the path specified in the kartoza/geoserver image:

```dockerfile
ENV GEOSERVER_DATA_DIR=/opt/geoserver/data_dir
```

- Adding the jms-clustering plugin to the list of extensions in the setup.sh script for the kartoza/geoserver image:

```sh
geoserver-${GS_VERSION:0:4}-SNAPSHOT-jms-cluster-plugin.zip
```

There were a few other changes, such as updating the Geoserver download URL after the public server was rebuilt, and creating some directories. I should include a file with my changes.
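The `${GS_VERSION:0:4}` expansion in setup.sh is a bash-only substring that takes the first four characters of the version, which only works for two-digit minor versions. A POSIX-sh sketch that keeps "major.minor" regardless of its length is below. The function name is hypothetical, and it only builds the file name using the pattern from setup.sh; it does not check that such a SNAPSHOT build exists.

```sh
#!/bin/sh
# Sketch: POSIX-sh version of the plugin name used in setup.sh,
#   geoserver-${GS_VERSION:0:4}-SNAPSHOT-jms-cluster-plugin.zip
# ${GS_VERSION:0:4} yields "2.15" for 2.15.1 but "2.9." for 2.9.1;
# cutting on dots always keeps the "major.minor" series.
jms_plugin_zip() {
    series=$(printf '%s\n' "$1" | cut -d. -f1,2)
    printf 'geoserver-%s-SNAPSHOT-jms-cluster-plugin.zip\n' "$series"
}
```

In setup.sh this would be called as `jms_plugin_zip "$GS_VERSION"`.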

- Build the Docker images, running each command from the directory that contains the corresponding Dockerfile:

```sh
sudo docker build --tag 'localhost:5000/geoserver:2.15.1' .
sudo docker build --tag 'localhost:5000/activemqbroker:2.15.1' .
```

After a successful build, these images should be listed by `sudo docker image ls`.

- Push these images to the local registry:

```sh
sudo docker push localhost:5000/geoserver:2.15.1
sudo docker push localhost:5000/activemqbroker:2.15.1
```

- Finally, deploy the stack:

```sh
sudo docker stack deploy -c docker-geoserver.stack.yml gs_stack
```

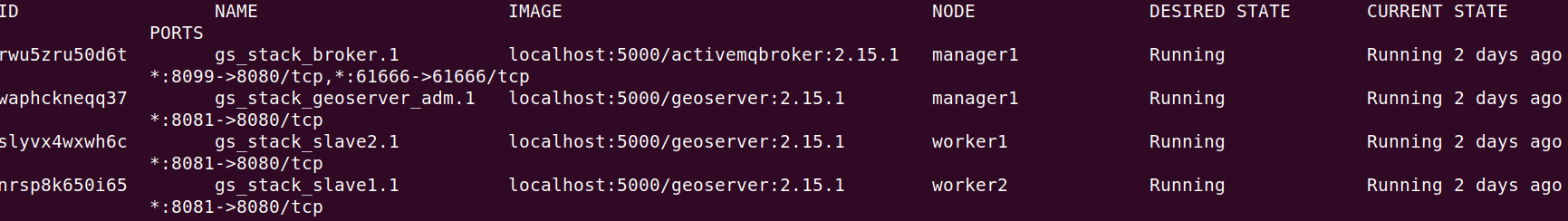

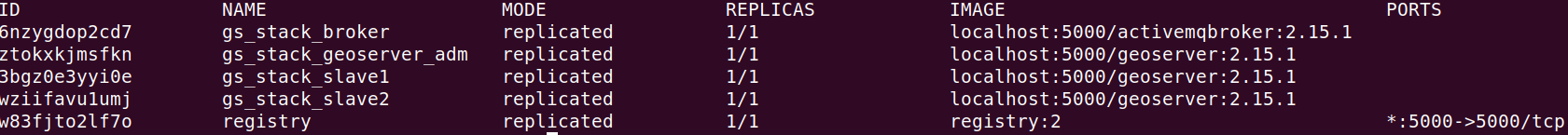

- Inspect the status of the deployment:

```sh
sudo docker stack ps gs_stack
sudo docker service ls
```
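The build, push, deploy and inspect steps above lend themselves to a small script. This sketch assumes, hypothetically, that the two Dockerfiles sit in separate directories (`./geoserver` and `./activemq`). It reads replica counts from `docker stack services --format '{{.Name}} {{.Replicas}}'` to decide when the stack has converged. The function names and the 5-second polling interval are illustrative choices.

```sh
#!/bin/sh
# Sketch: rebuild/push both images and wait for the stack to converge.
# Directory names are hypothetical; set DRY_RUN=1 to print docker commands.
REGISTRY="localhost:5000"
TAG="2.15.1"

# Print the command under DRY_RUN, otherwise execute it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Arguments have the form "image_name:build_dir", e.g. "geoserver:./geoserver".
build_and_push() {
    for spec in "$@"; do
        name=${spec%%:*}
        dir=${spec#*:}
        image="$REGISTRY/$name:$TAG"
        run sudo docker build --tag "$image" "$dir" || return 1
        run sudo docker push "$image" || return 1
    done
}

# Reads "NAME RUNNING/DESIRED" lines on stdin; returns 0 only if at least one
# service was listed and every service has all desired replicas running.
all_converged() {
    ok=0
    seen=0
    while read -r name replicas _rest; do
        seen=$((seen + 1))
        running=${replicas%%/*}
        desired=${replicas#*/}
        if [ "$running" != "$desired" ]; then
            printf 'waiting on %s (%s)\n' "$name" "$replicas"
            ok=1
        fi
    done
    [ "$seen" -gt 0 ] || ok=1
    return "$ok"
}

# Poll every 5 s until the stack converges or the attempts run out.
wait_for_stack() {
    stack="$1"
    attempts="${2:-60}"
    while [ "$attempts" -gt 0 ]; do
        if sudo docker stack services "$stack" --format '{{.Name}} {{.Replicas}}' | all_converged; then
            return 0
        fi
        attempts=$((attempts - 1))
        sleep 5
    done
    return 1
}
```

A full cycle might then be `build_and_push geoserver:./geoserver activemqbroker:./activemq && sudo docker stack deploy -c docker-geoserver.stack.yml gs_stack && wait_for_stack gs_stack`.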

- Clustering configuration:

Inspect the configuration in the cluster.properties file. From this thread: the "Slave connection" option in the "Cluster configuration" panel should be set to "enabled". It is important to note that "Slave connection" corresponds to `connection=enabled` in cluster.properties. The "URL of the broker to connect" field (at the very top) should be set to the broker:

`tcp://broker:61666`, where `broker` is the name of the service running the ActiveMQ application. This had me stumped for days. Working options in the cluster.properties file:

- in cluster/master:

```properties
CLUSTER_CONFIG_DIR=/opt/geoserver/data_dir/cluster/master
instanceName=master
readOnly=disabled
brokerURL=tcp\://broker\:61666
durable=true
embeddedBroker=disabled
toggleMaster=true
connection.retry=10
xbeanURL=./broker.xml
embeddedBrokerProperties=embedded-broker.properties
topicName=VirtualTopic.geoserver
connection=enabled
toggleSlave=false
connection.maxwait=500
group=geoserver-cluster
```

- in cluster/slave1:

```properties
CLUSTER_CONFIG_DIR=/opt/geoserver/data_dir/cluster/slave1
instanceName=slave1
readOnly=enabled
brokerURL=tcp\://broker\:61666
durable=true
embeddedBroker=disabled
toggleMaster=false
connection.retry=10
xbeanURL=./broker.xml
embeddedBrokerProperties=embedded-broker.properties
topicName=VirtualTopic.geoserver
connection=enabled
toggleSlave=true
connection.maxwait=500
group=geoserver-cluster
```
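The master and slave1 files differ only in CLUSTER_CONFIG_DIR, instanceName, readOnly, toggleMaster and toggleSlave. One possible approach to the TODO below is to generate each instance's file from a single shared template. This is a sketch, not a tested part of this deployment: the function name and its arguments are hypothetical, and every value is copied from the two files above.

```sh
#!/bin/sh
# Sketch: print cluster.properties for one instance from a shared template.
# Usage: cluster_props NAME master|slave   (function name is hypothetical)
cluster_props() {
    name="$1"
    role="$2"
    case "$role" in
        master) read_only=disabled; toggle_master=true;  toggle_slave=false ;;
        slave)  read_only=enabled;  toggle_master=false; toggle_slave=true ;;
        *) printf 'unknown role: %s\n' "$role" >&2; return 1 ;;
    esac
    # Instance-specific keys first, then the settings shared by every instance.
    # Single quotes keep the '\:' escapes required in .properties files.
    printf '%s\n' \
        "CLUSTER_CONFIG_DIR=/opt/geoserver/data_dir/cluster/$name" \
        "instanceName=$name" \
        "readOnly=$read_only" \
        "toggleMaster=$toggle_master" \
        "toggleSlave=$toggle_slave" \
        'brokerURL=tcp\://broker\:61666' \
        'durable=true' \
        'embeddedBroker=disabled' \
        'connection=enabled' \
        'connection.retry=10' \
        'connection.maxwait=500' \
        'xbeanURL=./broker.xml' \
        'embeddedBrokerProperties=embedded-broker.properties' \
        'topicName=VirtualTopic.geoserver' \
        'group=geoserver-cluster'
}
```

For a hypothetical extra instance, `cluster_props slave2 slave > /mnt/cephfs/geoserver/data_dir/cluster/slave2/cluster.properties` would write its file on the host. That container would also need CLUSTER_CONFIG_DIR set to the matching directory.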

TODO:

- How can a common configuration file be loaded for all slave instances while customizing some options, for example instanceName, for each one?

Sources:

- Gabriel Roldan's 2019 FOSS4G presentation on Geoserver clustering (in Spanish): https://groldan.github.io/2019_foss4g-ar_taller_geoserver/

- The lengthy tutorial pages on this topic from GeoSolutions: https://geoserver.geo-solutions.it/edu/en/clustering/clustering/active/index.html

- Gabriel Roldan's thread on the Geoserver mailing list: http://osgeo-org.1560.x6.nabble.com/Geoserver-and-JMS-clustering-td5403395.html

- GeoServer Clustering Revisited: Getting Your Docker On

- Trial and error

So far I haven't been able to use Docker's built-in routing mesh and load-balancing capabilities. To get clustering working, I resorted to the "host" networking mode. Perhaps the connection type to the broker needs to change on all cluster instances.

- Front-end proxy/load balancer:

HAProxy (in my case deployed on a separate VM) has to be configured manually, which is not ideal.

Traefik seems to be the most widely accepted and actively developed solution at present. It is fairly complicated to configure, but it reconfigures itself dynamically whenever a service is scaled. I also had problems getting a session to persist on the master Geoserver instance.

dockercloud/haproxy can also reconfigure itself dynamically, but it is no longer actively developed.
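With a separate HAProxy VM, the manual step can at least be reduced to regenerating the backend section from the current list of nodes. This sketch assumes HAProxy's cookie persistence in `prefix` mode on JSESSIONID, which appears to match the `COOKIE=JSESSIONID prefix` setting in the compose file. Port 8081 is the published port from that file. The backend name `geoserver`, the `gsN` server labels and the node addresses are hypothetical.

```sh
#!/bin/sh
# Sketch: print an HAProxy backend section for the Geoserver nodes.
# Usage: haproxy_backend PORT NODE_ADDR...   (names/addresses are hypothetical)
# "cookie JSESSIONID prefix" makes HAProxy prefix the application's session
# cookie with the server label, so a session sticks to one server.
haproxy_backend() {
    port="$1"
    shift
    printf 'backend geoserver\n'
    printf '    balance roundrobin\n'
    printf '    cookie JSESSIONID prefix nocache\n'
    i=1
    for host in "$@"; do
        printf '    server gs%d %s:%s check cookie gs%d\n' "$i" "$host" "$port" "$i"
        i=$((i + 1))
    done
}
```

Cookie persistence keeps each session on whichever server it first reached. Always sending admin sessions to the master instance would need an additional routing rule on top of this.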