[SPARK-25332][SQL] select broadcast join instead of sortMergeJoin for the small size table even query fired via new session/context #22758

Conversation

@srowen @cloud-fan @HyukjinKwon @felixcheung.

This is to test when the conversion is on and off. We shouldn't change it.

Right. I think there was some misunderstanding; I will recheck this. Thanks.

I don't quite understand why the table must have stats. For both file sources and Hive tables, we will estimate the data size from the files if the table doesn't have stats.

Thanks for your valuable feedback.

My observations:

- In the insert flow we always try to update the Hive stats, as per the statement below in InsertIntoHadoopFsRelationCommand:

      if (catalogTable.nonEmpty) {
        CommandUtils.updateTableStats(sparkSession, catalogTable.get)
      }

  However, when an insert command is run after the create table command within the same session, the Hive statistics are not updated, because the validation below expects the stats to be non-empty:

      def updateTableStats(sparkSession: SparkSession, table: CatalogTable): Unit = {
        if (table.stats.nonEmpty) {

  But if we relaunch spark-shell and run the insert command, the Hive statistics are saved. From then on the stats are taken from the Hive stats, and the flow never tries to estimate the data size from files.

- Currently the system does not always estimate the data size from files when we execute the insert command. As I said above, if we launch the query from a new context, the system tries to read the stats from Hive. I think the behavior is inconsistent. Also, if we can always get the stats from Hive, do we need to recalculate the stats from files every time?

I think we may need to update the flow so that it always reads the data size from files and never depends on the Hive stats,

or, if we are recording the Hive stats, so that it always reads the Hive stats.

Please let me know whether I am heading in the right direction, and let me know if any clarification is needed.
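
The guard described in this comment can be illustrated with a minimal sketch in plain Scala. It is a simplified model, not Spark's code: `Table`, `TableStats`, and the `sizeOnDisk` parameter are hypothetical stand-ins, and only the quoted `table.stats.nonEmpty` condition is taken from the discussion.

```scala
// Simplified model of the guard discussed above. These are NOT Spark's classes;
// Table, TableStats and sizeOnDisk are hypothetical stand-ins for illustration.
object InsertStatsGate {
  final case class TableStats(sizeInBytes: Long)
  final case class Table(name: String, stats: Option[TableStats])

  // Mirrors only the quoted condition: stats are refreshed only if some already exist.
  def updateTableStats(table: Table, sizeOnDisk: Long): Table =
    if (table.stats.nonEmpty) table.copy(stats = Some(TableStats(sizeOnDisk)))
    else table

  def main(args: Array[String]): Unit = {
    val fresh = Table("t1", stats = None)                  // just created, no stats yet
    val seeded = Table("t1", stats = Some(TableStats(0L))) // stats were recorded once before
    println(updateTableStats(fresh, 1024L))  // stats stay None, so nothing is recorded
    println(updateTableStats(seeded, 1024L)) // stats are refreshed to the new size
  }
}
```

Under this reading, a table that starts without stats can never acquire them through the insert path alone, which is the inconsistency the comment is pointing at.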

> but after create table command, when we do insert command within the same session Hive statistics is not getting updated

This is the thing I don't understand. Like I said before, even if the table has no stats, Spark will still get stats via the DetermineTableStats rule.

@sujith71955 What if spark.sql.statistics.size.autoUpdate.enabled=false or hive.stats.autogather=false? Does it still update the stats?

Yes, that is the default setting, which is false. But I think it should be fine to keep the default setting in this scenario.

> but after create table command, when we do insert command within the same session Hive statistics is not getting updated
>
> This is the thing I don't understand. Like I said before, even if table has no stats, Spark will still get a stats via the DetermineTableStats rule.

Right, but DefaultStatistics will return the default value for the stats.

> but after create table command, when we do insert command within the same session Hive statistics is not getting updated
>
> This is the thing I don't understand. Like I said before, even if table has no stats, Spark will still get a stats via the DetermineTableStats rule.

I think this rule will always return the default stats unless session.sessionState.conf.fallBackToHdfsForStatsEnabled is set to true. I will reconfirm this behaviour.

The table created in the current session does not have stats. In this situation, it gets sizeInBytes from

spark/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/LogicalRelation.scala

Lines 42 to 46 in 1ff4a77

    override def computeStats(): Statistics = {
      catalogTable
        .flatMap(_.stats.map(_.toPlanStats(output, conf.cboEnabled)))
        .getOrElse(Statistics(sizeInBytes = relation.sizeInBytes))
    }

and

spark/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/HadoopFsRelation.scala

Lines 88 to 91 in 25c2776

    override def sizeInBytes: Long = {
      val compressionFactor = sqlContext.conf.fileCompressionFactor
      (location.sizeInBytes * compressionFactor).toLong
    }

It's the real size; that is why it's a broadcast join. In fact, we should invalidate this table so that DetermineTableStats can take effect. I am doing that here: #22721
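
The fallback in the two quoted snippets can be sketched in plain Scala as follows. This is a simplified model rather than Spark's implementation: `CatalogStats`, `relationSizeInBytes`, and `planSizeInBytes` are hypothetical names, and the compression factor is passed in explicitly instead of being read from a session configuration.

```scala
// Simplified model of the two quoted snippets; the names here are hypothetical.
object StatsFallbackSketch {
  final case class CatalogStats(sizeInBytes: Long)

  // Models HadoopFsRelation.sizeInBytes: the on-disk size scaled by a compression factor.
  def relationSizeInBytes(filesSizeInBytes: Long, compressionFactor: Double): Long =
    (filesSizeInBytes * compressionFactor).toLong

  // Models LogicalRelation.computeStats: prefer catalog stats, else fall back to the relation size.
  def planSizeInBytes(
      catalogStats: Option[CatalogStats],
      filesSizeInBytes: Long,
      compressionFactor: Double): Long =
    catalogStats
      .map(_.sizeInBytes)
      .getOrElse(relationSizeInBytes(filesSizeInBytes, compressionFactor))

  def main(args: Array[String]): Unit = {
    // No catalog stats (table created in this session): the file-based size is used.
    println(planSizeInBytes(None, 2000L, 1.5))
    // Catalog stats present: they take precedence over the file-based size.
    println(planSizeInBytes(Some(CatalogStats(42L)), 2000L, 1.5))
  }
}
```

In this model the file-based size is only reached when no catalog stats exist, which matches the explanation that a table created in the current session is sized from its files.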

You are right, it's about considering the default size, but I am not sure whether we should invalidate the cache. I will explain my understanding below.

> but after create table command, when we do insert command within the same session Hive statistics is not getting updated
>
> This is the thing I don't understand. Like I said before, even if table has no stats, Spark will still get a stats via the DetermineTableStats rule.

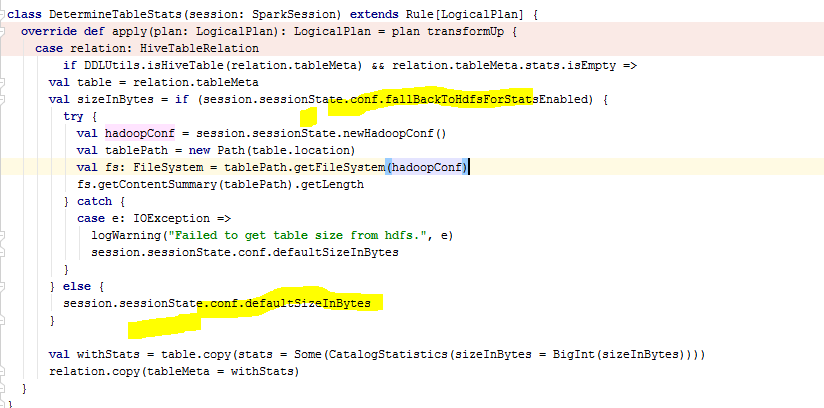

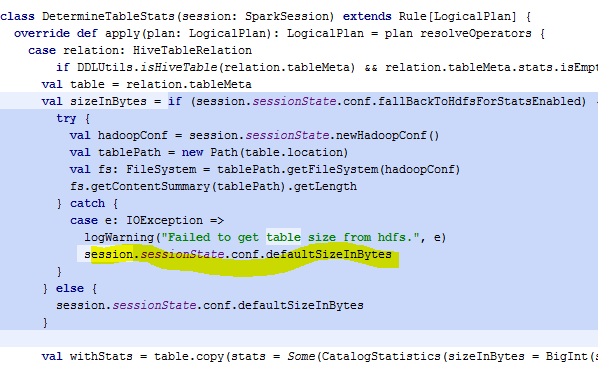

@cloud-fan DetermineTableStats only initializes the stats if they are not set. Only if session.sessionState.conf.fallBackToHdfsForStatsEnabled is true does the rule derive the stats from the file system and update them, as shown in the attached code snippet. In the insert flow this condition is never executed, so the stats will still be None.
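
The behaviour described in this comment can be modelled with a short plain-Scala sketch. It reflects only the reading given in this thread, not Spark's actual rule: `determine`, `hdfsSize`, and `defaultSize` are hypothetical names, and `Long.MaxValue` is used purely as an assumed stand-in for "a very large default size".

```scala
// Simplified model of the rule as described in this thread; all names are hypothetical.
object DetermineStatsSketch {
  // Returns the size a Hive relation would carry after the rule runs:
  //  - existing stats are kept untouched,
  //  - otherwise the file-system size is used only when the fallback flag is on,
  //  - otherwise a configured default size is used.
  def determine(
      existing: Option[Long],
      fallBackToHdfs: Boolean,
      hdfsSize: () => Long,
      defaultSize: Long): Long =
    existing.getOrElse(if (fallBackToHdfs) hdfsSize() else defaultSize)

  def main(args: Array[String]): Unit = {
    val assumedDefault = Long.MaxValue // assumption: stand-in for a very large default
    println(determine(None, fallBackToHdfs = false, () => 512L, assumedDefault))
    println(determine(None, fallBackToHdfs = true, () => 512L, assumedDefault))
    println(determine(Some(7L), fallBackToHdfs = true, () => 512L, assumedDefault))
  }
}
```

With the flag off, a table without stats is assigned the default size rather than its real size, which is the case the two sides of this thread disagree about.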

@cloud-fan

I think the cost of getting the stats from …

In order to make this flow consistent, either …

Just a suggestion; let me know your thoughts ;) Thanks all for your valuable time.

@cloud-fan Shall I update this PR based on the second approach? Would that be fine? I also tested the second approach, and the use cases mentioned in this JIRA work fine. Please let me know your view, or whether we are going to continue with the approach in #22721. If any clarification is required regarding this flow, please let me know; I will try my best to explain, as the scenarios are confusing.

…s selected when restart spark-shell/spark-JDBC for hive provider

## What changes were proposed in this pull request?

Problem: the steps below, run in sequence, reproduce the issue.

a. Create a parquet table with a stored as clause.
b. Run an insert statement => this does not update the Hive stats.
c. Run a select query that needs to calculate stats (or an explain cost select statement) => this evaluates the stats from HadoopFsRelation.
d. Since correct stats (sizeInBytes) are available, the plan selects a broadcast node if the table is joined with any other table.
e. Exit (leave the shell).
f. Run the step c query (calculate stats) again. This gives wrong stats in the plan (sizeInBytes is assigned the default value), because they are calculated from the HiveCatalogTable, which has no stats since none were recorded in step b.
g. Since incorrect stats (the default sizeInBytes) are available, the plan selects a SortMergeJoin node if the table is joined with any other table.
h. Run an insert statement => this updates the Hive stats.
i. Run the step c query (calculate stats) again. This gives correct stats (sizeInBytes) in the plan, because it can read the Hive stats that were updated in step h.
j. From now on the stats are always available, so the plan shows correct stats and always picks a broadcast join node (based on the threshold size).

Solution: The main problem is that the Hive stats are not recorded after the insert command, because of the condition "if (table.stats.nonEmpty)" in updateTableStats(), which is executed as part of InsertIntoHadoopFsRelationCommand. So, as part of the fix, we initialize a default value for the CatalogTable stats if there is no cache for the particular LogicalRelation.

It was also observed that in the test case "test statistics of LogicalRelation converted from Hive serde tables" in StatisticsSuite, both orc and parquet are convertible, but the test expects only orc, not parquet, to get/record Hive stats. Since both relations are convertible, they should have the same expectation. This is corrected in this PR.

How this patch was tested: manually tested, adding the test snapshots; the UT is corrected so that it verifies the PR scenario.

469f369 to 1d5902e

@cloud-fan @HyukjinKwon @srowen b) If we have a mechanism to read the stats from Hive, then why should we estimate the data size from files? Please let me know your suggestions; I feel there is an inconsistency in this flow.

@cloud-fan @HyukjinKwon @wangyum Any suggestions on this issue? Because of this defect we are facing some performance issues in our customer environment. I request you all to please have a look at this again and share any suggestions so that I can handle it.

I don't know this code well enough to review. I think there is skepticism from people who know this code about whether this change is correct and beneficial. If there's doubt, I think it should be closed.

Thanks for the comment, Sean. There are certain areas where I found inconsistencies. If I get some input from the experts, I think I can update the PR; if we are planning to tackle this issue via another PR, then I will close this PR.

Currently this issue is causing performance degradation: the node is converted to SortMergeJoin even though it could use a broadcast join, since the data size is less than the broadcast threshold, and this triggers an unnecessary shuffle.
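
The effect of the size estimate on the join choice can be sketched in plain Scala. This is a deliberately reduced model: Spark's planner also considers join type, hints, and both sides of the join. `choose` and `JoinKind` are hypothetical names, the 10 MB threshold is the documented default of spark.sql.autoBroadcastJoinThreshold, and `Long.MaxValue` is an assumed stand-in for the default size given to a table without stats.

```scala
// Reduced model of the size-based join choice; names are hypothetical, not Spark's API.
object JoinChoiceSketch {
  sealed trait JoinKind
  case object BroadcastHashJoin extends JoinKind
  case object SortMergeJoin extends JoinKind

  // A side is broadcast only when its estimated size fits under the threshold.
  def choose(sizeInBytes: Long, broadcastThreshold: Long): JoinKind =
    if (sizeInBytes >= 0 && sizeInBytes <= broadcastThreshold) BroadcastHashJoin
    else SortMergeJoin

  def main(args: Array[String]): Unit = {
    val threshold = 10L * 1024 * 1024 // 10 MB
    // Real size known (same session): the small table qualifies for broadcast.
    println(choose(1024L, threshold))
    // Only the default size is known (new session, no recorded stats): falls back to sort merge.
    println(choose(Long.MaxValue, threshold))
  }
}
```

The same small table therefore lands on either side of the threshold depending only on which size estimate the session happens to see.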


Do we need to handle this scenario? Do we have any PR for handling this issue?

cc @wzhfy

@srowen @cloud-fan @wangyum @dongjoon-hyun @wzhfy Any update on this? How shall we move forward to handle this scenario? The problem still exists.

Can you make the PR description concise? It's super hard to read and follow.

    // Intialize the catalogTable stats if its not defined.An intial value has to be defined
    // so that the hive statistics will be updated after each insert command.
    val withStats = {
      if (updatedTable.stats == None) {

@wangyum, so it is basically a subset of #22721? It's funny that Hive tables should set the initial stats alone here, which is supposed to be set somewhere else.

It's a bit of an old PR :). I will go through the problem once again and give you more concise details. I remember I struggled a lot with handling this issue :) Please let me know if this way of handling it is wrong.

@wangyum Please test my scenario with your fix and check whether your PR addresses this issue; I doubt it. Let me know if you have any suggestions. Thanks

Can one of the admins verify this patch?

We're closing this PR because it hasn't been updated in a while. If you'd like to revive this PR, please reopen it!

What changes were proposed in this pull request?

Problem:

Instead of a broadcast hash join, a sort merge join is selected after restarting spark-shell/spark-JDBC for the Hive provider.

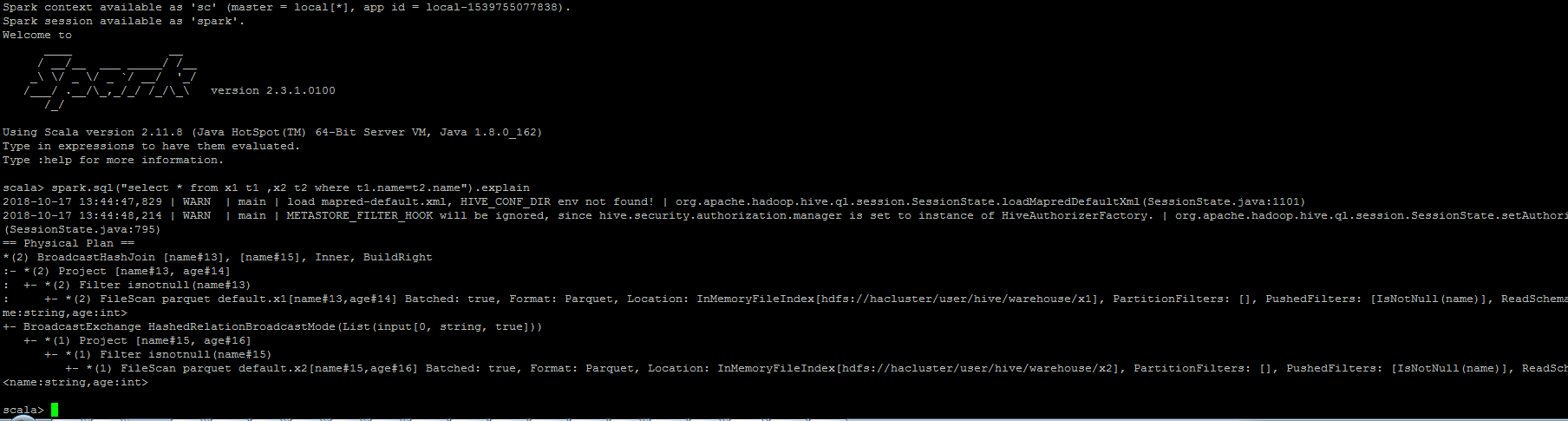

Query Plan:

Now restart spark-shell, run spark-submit, or restart the JDBCServer, and run the same select query.

The query plan will be modified as below.

What changes were proposed in this pull request?

The main problem is that the Hive stats are not recorded after the insert command, because of the condition "if (table.stats.nonEmpty)" in updateTableStats(), which is executed as part of InsertIntoHadoopFsRelationCommand. Since the CatalogTable stats are never initialized, this condition is never met. So if we fire the same query in a new context/session, the plan changes to SortMergeJoin.

So, as part of the fix, we initialize a default value for the CatalogTable stats if there is no cache for the particular LogicalRelation.

As per this statement, we expect the Hive stats to be updated after an insert; please correct me if I am missing something.

How was this patch tested?

Manually tested; snapshots attached.

After Fix

Step 1:

Log in to spark-shell => create 2 tables => run the insert command => explain and check the plan => the plan contains a broadcast join => exit

Step 2:

Relaunch spark-shell => run the explain command for the same select statement => verify the plan => the plan still contains a broadcast join, since after the fix the Hive stats are available for the table.

Step 3:

Run the insert command again => run the explain command for the same select statement => verify the plan

We can observe that the plan still retains BroadcastJoin. From now on the results are always consistent.