-

Notifications

You must be signed in to change notification settings - Fork 29k

[SPARK-42172][CONNECT] Scala Client Mima Compatibility Tests #39712

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Changes from all commits

decfdd9

b852f36

6299f9a

3f27f63

23a759c

ea30f6a

File filter

Filter by extension

Conversations

Jump to

Diff view

Diff view

There are no files selected for viewing

| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -33,6 +33,7 @@ | |

| <properties> | ||

| <sbt.project.name>connect-client-jvm</sbt.project.name> | ||

| <guava.version>31.0.1-jre</guava.version> | ||

| <mima.version>1.1.0</mima.version> | ||

| </properties> | ||

|

|

||

| <dependencies> | ||

|

|

@@ -92,6 +93,13 @@ | |

| <artifactId>mockito-core</artifactId> | ||

| <scope>test</scope> | ||

| </dependency> | ||

| <!-- Use mima to perform the compatibility check --> | ||

|

||

| <dependency> | ||

| <groupId>com.typesafe</groupId> | ||

| <artifactId>mima-core_${scala.binary.version}</artifactId> | ||

| <version>${mima.version}</version> | ||

| <scope>test</scope> | ||

| </dependency> | ||

| </dependencies> | ||

| <build> | ||

| <testOutputDirectory>target/scala-${scala.binary.version}/test-classes</testOutputDirectory> | ||

|

|

||

| Original file line number | Diff line number | Diff line change | ||

|---|---|---|---|---|

|

|

@@ -19,6 +19,7 @@ package org.apache.spark.sql | |||

| import scala.collection.JavaConverters._ | ||||

|

|

||||

| import org.apache.spark.connect.proto | ||||

| import org.apache.spark.internal.Logging | ||||

| import org.apache.spark.sql.Column.fn | ||||

| import org.apache.spark.sql.connect.client.unsupported | ||||

| import org.apache.spark.sql.functions.lit | ||||

|

|

@@ -44,7 +45,7 @@ import org.apache.spark.sql.functions.lit | |||

| * | ||||

| * @since 3.4.0 | ||||

| */ | ||||

| class Column private[sql] (private[sql] val expr: proto.Expression) { | ||||

| class Column private[sql] (private[sql] val expr: proto.Expression) extends Logging { | ||||

|

||||

| class Column(val expr: Expression) extends Logging { |

should we delete private[sql] here?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

My poor Scala knowledge indicate this only marks one constructor private. The intension is to mark the current constructor private. For more constructor methods, we will add in follow up PRs.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hmm....why is it not consistent with spark.sql.Column?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Our type is proto.Expression, it is not the same as Expression. I leave it to later PRs to decide how to support Expression.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I mean, why not

class Column(val expr: proto.Expression) extends Logging { ...

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Because I am not certain if we should expose constructor this(expr: proto.Expression) and val expr:proto.Expression.

They are not the same type as this(expr: Expression) and val expr: Expression.

Our type proto.Expression is some grpc class and Expression is in sql package. They are different types from the binary code point of view.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Let's keep it private[sql] for now.

Outdated

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Both Column and Column$ are private[sql] access scope with this pr, so this is not an API for users?

Seem users cannot create a Column in their own package with this pr, for example:

package org.apache.spark.test

import org.scalatest.funsuite.AnyFunSuite // scalastyle:ignore funsuite

import org.apache.spark.sql.Column

class MyTestSuite

extends AnyFunSuite // scalastyle:ignore funsuite

{

test("new column") {

val a = Column("a") // Symbol apply is inaccessible from this place

val b = new Column(null) // No constructor accessible from here

}There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think org.apache.spark.sql.Column#apply was a public api before. If private[sql] is added to object Column, it may require more code refactoring work

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for your inputs.

Looking the current Column class, the SQL API give two public APIs to construct the Column:

class Column(val expr: Expression) extends Logging {

def this(name: String) = this(name match {

case "*" => UnresolvedStar(None)

case _ if name.endsWith(".*") =>

val parts = UnresolvedAttribute.parseAttributeName(name.substring(0, name.length - 2))

UnresolvedStar(Some(parts))

case _ => UnresolvedAttribute.quotedString(name)

})

...

Right now the client API is very far from completion. We will add new methods in coming PRs. I am sure there will be a Column(name: String) for users to use. But it is out the scope of this PR to include all public constructors needed for the client.

The compatibility check added with this PR will grow the check coverage when more and more methods are added in the client. The current check ensures when a new method are added, the new method should be binary compatible with the existing SQL API. When the client API coverage is up (~80%) we can switch to a more aggressive check to ensure we did not miss any methods by mistake.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

OK, I see what you mean. It seems that this is just an intermediate state. So it really doesn't need to consider users' use now.

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,22 @@ | ||

| /* | ||

| * Licensed to the Apache Software Foundation (ASF) under one or more | ||

| * contributor license agreements. See the NOTICE file distributed with | ||

| * this work for additional information regarding copyright ownership. | ||

| * The ASF licenses this file to You under the Apache License, Version 2.0 | ||

| * (the "License"); you may not use this file except in compliance with | ||

| * the License. You may obtain a copy of the License at | ||

| * | ||

| * http://www.apache.org/licenses/LICENSE-2.0 | ||

| * | ||

| * Unless required by applicable law or agreed to in writing, software | ||

| * distributed under the License is distributed on an "AS IS" BASIS, | ||

| * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | ||

| * See the License for the specific language governing permissions and | ||

| * limitations under the License. | ||

| */ | ||

|

|

||

| package org.apache.spark | ||

|

|

||

| package object sql { | ||

| type DataFrame = Dataset[Row] | ||

| } |

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,153 @@ | ||

| /* | ||

| * Licensed to the Apache Software Foundation (ASF) under one or more | ||

| * contributor license agreements. See the NOTICE file distributed with | ||

| * this work for additional information regarding copyright ownership. | ||

| * The ASF licenses this file to You under the Apache License, Version 2.0 | ||

| * (the "License"); you may not use this file except in compliance with | ||

| * the License. You may obtain a copy of the License at | ||

| * | ||

| * http://www.apache.org/licenses/LICENSE-2.0 | ||

| * | ||

| * Unless required by applicable law or agreed to in writing, software | ||

| * distributed under the License is distributed on an "AS IS" BASIS, | ||

| * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | ||

| * See the License for the specific language governing permissions and | ||

| * limitations under the License. | ||

| */ | ||

| package org.apache.spark.sql.connect.client | ||

|

|

||

| import java.io.File | ||

| import java.net.URLClassLoader | ||

| import java.util.regex.Pattern | ||

|

|

||

| import com.typesafe.tools.mima.core._ | ||

| import com.typesafe.tools.mima.lib.MiMaLib | ||

| import org.scalatest.funsuite.AnyFunSuite // scalastyle:ignore funsuite | ||

| import org.apache.spark.sql.connect.client.util.IntegrationTestUtils._ | ||

|

|

||

| /** | ||

| * This test checks the binary compatibility of the connect client API against the spark SQL API | ||

| * using MiMa. We did not write this check using a SBT build rule as the rule cannot provide the | ||

| * same level of freedom as a test. With a test we can: | ||

| * 1. Specify any two jars to run the compatibility check. | ||

| * 1. Easily make the test automatically pick up all new methods added while the client is being | ||

| * built. | ||

| * | ||

| * The test requires the following artifacts built before running: | ||

| * {{{ | ||

| * spark-sql | ||

| * spark-connect-client-jvm | ||

| * }}} | ||

| * To build the above artifact, use e.g. `sbt package` or `mvn clean install -DskipTests`. | ||

| * | ||

| * When debugging this test, if any changes to the client API, the client jar need to be built | ||

| * before running the test. An example workflow with SBT for this test: | ||

| * 1. Compatibility test has reported an unexpected client API change. | ||

| * 1. Fix the wrong client API. | ||

| * 1. Build the client jar: `sbt package` | ||

| * 1. Run the test again: `sbt "testOnly | ||

| * org.apache.spark.sql.connect.client.CompatibilitySuite"` | ||

| */ | ||

| class CompatibilitySuite extends AnyFunSuite { // scalastyle:ignore funsuite | ||

|

|

||

| private lazy val clientJar: File = | ||

| findJar( | ||

| "connector/connect/client/jvm", | ||

| "spark-connect-client-jvm-assembly", | ||

| "spark-connect-client-jvm") | ||

|

|

||

| private lazy val sqlJar: File = findJar("sql/core", "spark-sql", "spark-sql") | ||

|

|

||

| /** | ||

| * MiMa takes an old jar (sql jar) and a new jar (client jar) as inputs and then reports all | ||

| * incompatibilities found in the new jar. The incompatibility result is then filtered using | ||

| * include and exclude rules. Include rules are first applied to find all client classes that | ||

| * need to be checked. Then exclude rules are applied to filter out all unsupported methods in | ||

| * the client classes. | ||

| */ | ||

| test("compatibility MiMa tests") { | ||

| val mima = new MiMaLib(Seq(clientJar, sqlJar)) | ||

|

||

| val allProblems = mima.collectProblems(sqlJar, clientJar, List.empty) | ||

| val includedRules = Seq( | ||

| IncludeByName("org.apache.spark.sql.Column"), | ||

| IncludeByName("org.apache.spark.sql.Column$"), | ||

| IncludeByName("org.apache.spark.sql.Dataset"), | ||

| // TODO(SPARK-42175) Add the Dataset object definition | ||

| // IncludeByName("org.apache.spark.sql.Dataset$"), | ||

| IncludeByName("org.apache.spark.sql.DataFrame"), | ||

| IncludeByName("org.apache.spark.sql.SparkSession"), | ||

| IncludeByName("org.apache.spark.sql.SparkSession$")) ++ includeImplementedMethods(clientJar) | ||

| val excludeRules = Seq( | ||

| // Filter unsupported rules: | ||

| // Two sql overloading methods are marked experimental in the API and skipped in the client. | ||

| ProblemFilters.exclude[Problem]("org.apache.spark.sql.SparkSession.sql"), | ||

| // Skip all shaded dependencies in the client. | ||

| ProblemFilters.exclude[Problem]("org.sparkproject.*"), | ||

| ProblemFilters.exclude[Problem]("org.apache.spark.connect.proto.*")) | ||

| val problems = allProblems | ||

| .filter { p => | ||

| includedRules.exists(rule => rule(p)) | ||

| } | ||

| .filter { p => | ||

| excludeRules.forall(rule => rule(p)) | ||

| } | ||

|

|

||

| if (problems.nonEmpty) { | ||

| fail( | ||

| s"\nComparing client jar: $clientJar\nand sql jar: $sqlJar\n" + | ||

| problems.map(p => p.description("client")).mkString("\n")) | ||

| } | ||

| } | ||

|

|

||

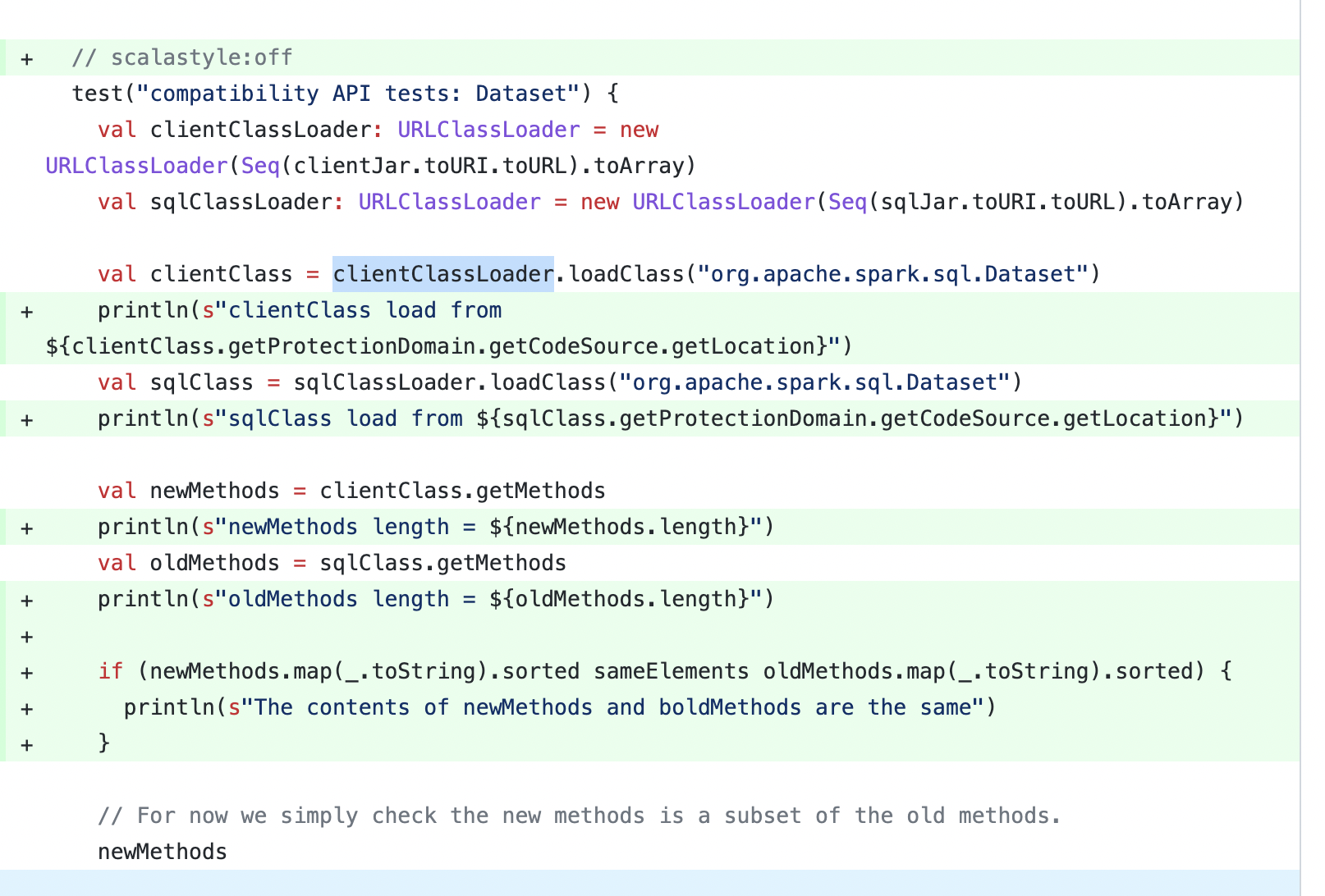

| test("compatibility API tests: Dataset") { | ||

| val clientClassLoader: URLClassLoader = new URLClassLoader(Seq(clientJar.toURI.toURL).toArray) | ||

| val sqlClassLoader: URLClassLoader = new URLClassLoader(Seq(sqlJar.toURI.toURL).toArray) | ||

|

|

||

| val clientClass = clientClassLoader.loadClass("org.apache.spark.sql.Dataset") | ||

|

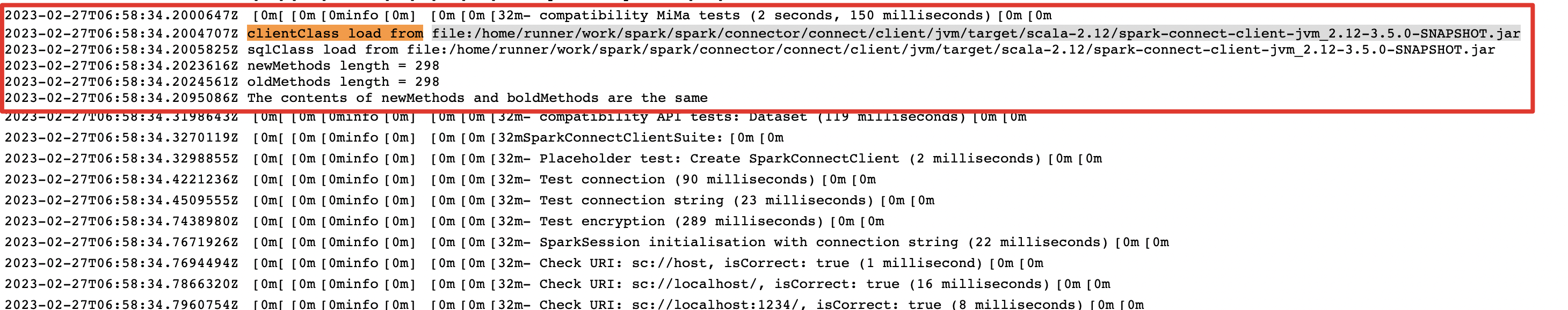

Contributor

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. HI ~ @zhenlineo @HyukjinKwon , there may be some problems with this test case, I add some logs as follows: From the log, I found that both

Contributor

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. At present, using this way to check, at least 4 apis should be incompatible: Because when using Java reflection, the above 4 methods will be identified as public apis, even though three of them are

Contributor

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. also cc @hvanhovell

Contributor

Author

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. Thanks so much for looking into this. The dataset test is not as important as Mima test. I will check if we can fix the issue you found. Otherwise it should be safe to delete as the test is already covered by mima.

Contributor

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. Thanks @zhenlineo, If it has been covered, we can delete it :)

Member

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. Thank you for the investigation, @LuciferYang . |

||

| val sqlClass = sqlClassLoader.loadClass("org.apache.spark.sql.Dataset") | ||

|

|

||

| val newMethods = clientClass.getMethods | ||

| val oldMethods = sqlClass.getMethods | ||

|

|

||

| // For now we simply check the new methods is a subset of the old methods. | ||

| newMethods | ||

| .map(m => m.toString) | ||

| .foreach(method => { | ||

| assert(oldMethods.map(m => m.toString).contains(method)) | ||

| }) | ||

| } | ||

|

|

||

| /** | ||

| * Find all methods that are implemented in the client jar. Once all major methods are | ||

| * implemented we can switch to include all methods under the class using ".*" e.g. | ||

| * "org.apache.spark.sql.Dataset.*" | ||

| */ | ||

| private def includeImplementedMethods(clientJar: File): Seq[IncludeByName] = { | ||

| val clsNames = Seq( | ||

| "org.apache.spark.sql.Column", | ||

| // TODO(SPARK-42175) Add all overloading methods. Temporarily mute compatibility check for \ | ||

| // the Dataset methods, as too many overload methods are missing. | ||

| // "org.apache.spark.sql.Dataset", | ||

| "org.apache.spark.sql.SparkSession") | ||

|

|

||

| val clientClassLoader: URLClassLoader = new URLClassLoader(Seq(clientJar.toURI.toURL).toArray) | ||

| clsNames | ||

| .flatMap { clsName => | ||

| val cls = clientClassLoader.loadClass(clsName) | ||

| // all distinct method names | ||

| cls.getMethods.map(m => s"$clsName.${m.getName}").toSet | ||

| } | ||

| .map { fullName => | ||

| IncludeByName(fullName) | ||

| } | ||

| } | ||

|

|

||

| private case class IncludeByName(name: String) extends ProblemFilter { | ||

| private[this] val pattern = | ||

| Pattern.compile(name.split("\\*", -1).map(Pattern.quote).mkString(".*")) | ||

|

|

||

| override def apply(problem: Problem): Boolean = { | ||

| pattern.matcher(problem.matchName.getOrElse("")).matches | ||

| } | ||

| } | ||

| } | ||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The latest version is 1.1.1

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, there is a bug in 1.1.1, where the MiMa will not be able to check the class methods if the object is marked

private. Thus I used the same one that our SBT build uses, which is 1.1.0.