Options for disabling all parallelism for single-threaded performance #6689

Comments

|

Strongly agree, I've wanted this for tests and parallel scientific computing workloads too. A single global setting would be perfect. |

|

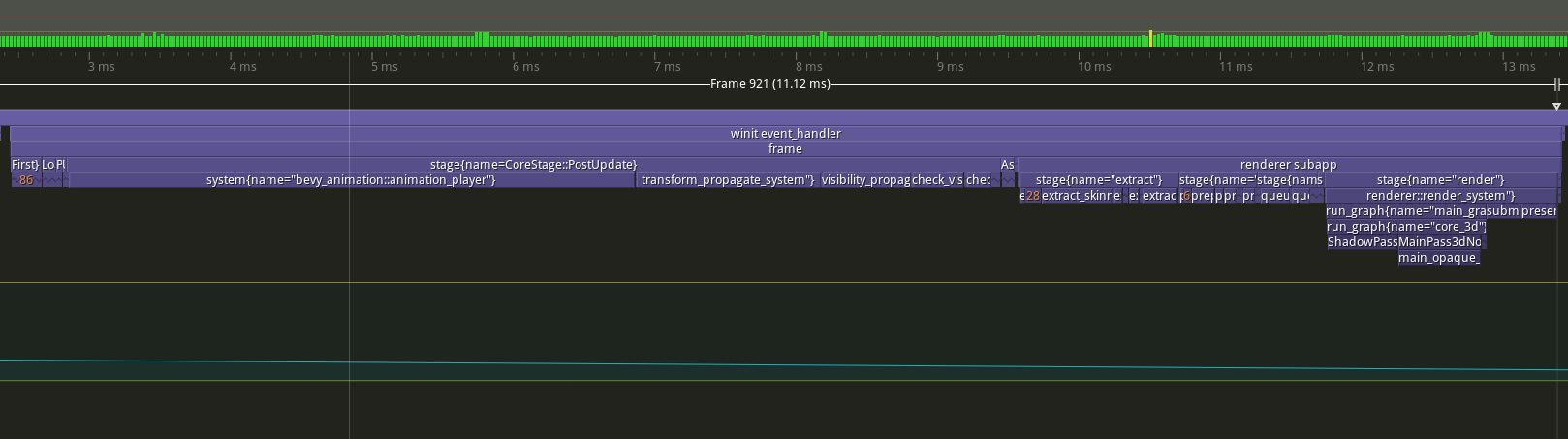

For reference, here are numbers I captured on a single vCPU $5/mo Vultr VPS. Using my fork of ecs_bench_suite here with Bevy 0.9: https://github.com/recatek/ecs_bench_suite/tree/9caa6e401e393267f0d7539eaf53c27e03d3aa88 Benchmark upgrades are a best guess, but if anyone has recommendations for more accurate results on this let me know. |

|

I saw a comment somewhere saying something like this can eliminate tracking access. While care would be taken to avoid accidentally introducing ambiguity in systems it would be neat to be able to avoid tracking access metadata everywhere since it is all single threaded anyway. |

|

Much needed feature for me running deterministic simulations, hope it works out! |

# Objective

Fixes #6689.

## Solution

Add `single-threaded` as an optional non-default feature to `bevy_ecs` and `bevy_tasks` that:

- disables the `ParallelExecutor` as a default runner
- disables the multi-threaded `TaskPool`
- internally replaces `QueryParIter::for_each` calls with `Query::for_each`.

Removed the `Mutex` and `Arc` usage in the single-threaded task pool.

## Future Work/TODO

Create type aliases for `Mutex` and `Arc` that change to single-threaded equivalents where possible.

---

## Changelog

Added: Optional default feature `multi-threaded` that enables multithreaded parallelism in the engine. Disabling it disables all multithreading in exchange for higher single-threaded performance. Does nothing on WASM targets.

---------

Co-authored-by: Carter Anderson <mcanders1@gmail.com>
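The type-alias idea from the Future Work item could look roughly like the sketch below. This is not code from the PR: the module name `sync` and the names `Shared` and `Lock` are hypothetical, and the only thing taken from the text above is the `multi-threaded` feature name. When the feature is off, the same call sites compile against `Rc` and `RefCell` instead of `Arc` and `Mutex`.

```rust
// Hypothetical sketch: `sync`, `Shared`, and `Lock` are illustrative names,
// not Bevy APIs. Only the `multi-threaded` feature name comes from the changelog.

#[cfg(feature = "multi-threaded")]
mod sync {
    use std::sync::{Arc, Mutex};

    /// Atomically reference-counted pointer when threads are in play.
    pub type Shared<T> = Arc<T>;

    /// Thin wrapper so both configurations expose the same `with` method.
    pub struct Lock<T>(Mutex<T>);

    impl<T> Lock<T> {
        pub fn new(value: T) -> Self {
            Lock(Mutex::new(value))
        }

        pub fn with<R>(&self, f: impl FnOnce(&mut T) -> R) -> R {
            let mut guard = self.0.lock().unwrap();
            f(&mut *guard)
        }
    }
}

#[cfg(not(feature = "multi-threaded"))]
mod sync {
    use std::cell::RefCell;
    use std::rc::Rc;

    /// Non-atomic reference counting: cheaper, but `!Send` and `!Sync`.
    pub type Shared<T> = Rc<T>;

    /// Same interface as the `Mutex` wrapper, backed by a `RefCell`.
    pub struct Lock<T>(RefCell<T>);

    impl<T> Lock<T> {
        pub fn new(value: T) -> Self {
            Lock(RefCell::new(value))
        }

        pub fn with<R>(&self, f: impl FnOnce(&mut T) -> R) -> R {
            let mut guard = self.0.borrow_mut();
            f(&mut *guard)
        }
    }
}

use sync::{Lock, Shared};

/// Increments a shared counter `n` times through cloned handles.
/// The body is identical under both feature configurations.
fn count_to(n: u32) -> u32 {
    let counter: Shared<Lock<u32>> = Shared::new(Lock::new(0));
    for _ in 0..n {
        let handle = Shared::clone(&counter);
        handle.with(|c| *c += 1);
    }
    counter.with(|c| *c)
}
```

Wrapping the lock in a small struct, rather than aliasing `Mutex` to `RefCell` directly, is one way around the two types having different method names (`lock` versus `borrow_mut`). Whether this is practical across the engine is the open question the issue itself raises.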

What problem does this solve or what need does it fill?

In certain environments, like low-cost VPS game hosting, it is often more efficient to host multiple single-threaded game instances than a single multi-threaded one. For these use cases, the additional synchronization overhead of many `Send`/`Sync` types can be quite high, particularly with atomics.

What solution would you like?

A feature flag on `bevy_ecs` and `bevy_tasks` to:

- Disable `ParallelExecutor` as a default runner
- Disable the multi-threaded `TaskPool`
- Internally replace `Query::par_for_each` calls with `for_each`.
- Use `!Send` or `!Sync` options of common types (i.e. `Mutex` -> `RefCell`, `Arc` -> `Rc`) (questionable if this is possible, we already avoid the use of these).

In these target environments, rendering is typically not required. Ideally this shouldn't require too much code change on the user's end other than changing some crate features, so that code can be easily shared between client and server.
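As a rough illustration of replacing parallel iteration with a plain `for_each` behind a feature flag, here is a minimal standalone sketch. It does not use Bevy at all: `for_each_item` and `sum_all` are hypothetical helpers, the `multi-threaded` feature name is an assumption, and the parallel path uses `std::thread::scope` purely as a stand-in for a task pool.

```rust
use std::sync::atomic::{AtomicU64, Ordering};

/// Parallel variant: splits the slice into chunks and runs each chunk on a
/// scoped thread. Compiled only when the assumed `multi-threaded` feature is on.
#[cfg(feature = "multi-threaded")]
fn for_each_item<T: Sync, F: Fn(&T) + Sync>(items: &[T], f: F) {
    let workers = std::thread::available_parallelism().map_or(1, |n| n.get());
    // `max(1)` keeps `chunks` from panicking on an empty slice.
    let chunk_len = items.len().div_ceil(workers).max(1);
    std::thread::scope(|scope| {
        for chunk in items.chunks(chunk_len) {
            let f = &f;
            scope.spawn(move || chunk.iter().for_each(f));
        }
    });
}

/// Serial variant: a plain loop with no thread spawning.
/// The bounds match the parallel variant so call sites compile either way.
#[cfg(not(feature = "multi-threaded"))]
fn for_each_item<T: Sync, F: Fn(&T) + Sync>(items: &[T], f: F) {
    items.iter().for_each(f);
}

/// Example caller that is unaware of which variant was compiled in.
fn sum_all(values: &[u64]) -> u64 {
    let total = AtomicU64::new(0);
    for_each_item(values, |v| {
        total.fetch_add(*v, Ordering::Relaxed);
    });
    total.load(Ordering::Relaxed)
}
```

The caller still pays for an atomic add in the serial build because the shared signature demands `Sync`. That leftover cost is the kind of overhead the last bullet (swapping in `!Send`/`!Sync` types) is aimed at.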

What alternative(s) have you considered?

Leave it as is, eat the cost of atomics in these systems.

Additional context

Original discussion: https://www.reddit.com/r/rust/comments/ytiv2a/comment/iw4q6ed/?utm_source=share&utm_medium=web2x&context=3
