

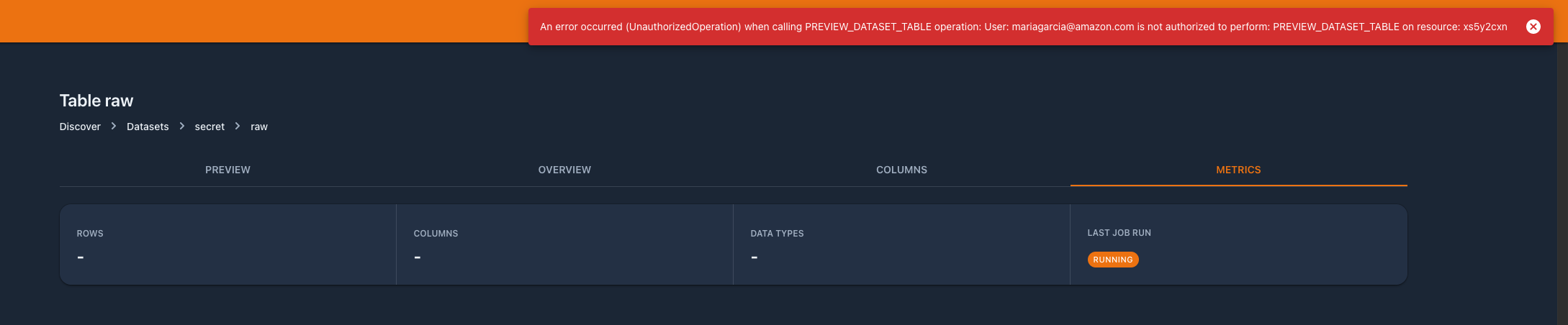

feat: Disabling profiling results from "secret" and "official" datasets (#482)

### Feature or Bugfix
- Feature

### Detail
- For datasets that are classified as "Secret", data preview is disabled. In the same way, data profiling results should also be denied.
- Added tests for profiling
- Removed unused methods

### Relates
- #478

By submitting this pull request, I confirm that my contribution is made under the terms of the Apache 2.0 license.
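The rule described in this commit, denying profiling results for restricted datasets in the same way data preview is denied, can be sketched as a single classification check placed in front of the profiling lookup. This is a minimal illustration only, not the project's implementation: the `Dataset` model, `RESTRICTED_CLASSIFICATIONS`, `ProfilingAccessDenied`, `is_restricted`, and `get_profiling_results` are all hypothetical names, and the restricted set assumes the two levels named in the commit title ("secret" and "official").

```python
from dataclasses import dataclass

# Hypothetical: classifications whose profiling results are withheld.
# Assumed from the commit title ("secret" and "official"); not the project's real constant.
RESTRICTED_CLASSIFICATIONS = frozenset({"secret", "official"})


class ProfilingAccessDenied(Exception):
    """Hypothetical error raised when profiling results are requested for a restricted dataset."""


@dataclass
class Dataset:
    # Hypothetical minimal model; a real dataset record carries many more fields.
    uri: str
    confidentiality: str


def is_restricted(dataset: Dataset) -> bool:
    """Return True if the dataset's classification blocks preview and profiling output."""
    return dataset.confidentiality.strip().lower() in RESTRICTED_CLASSIFICATIONS


def get_profiling_results(dataset: Dataset, results_store: dict) -> dict:
    """Return stored profiling results, applying the same gate that disables data preview."""
    if is_restricted(dataset):
        raise ProfilingAccessDenied(
            f"Profiling results are not available for {dataset.confidentiality} dataset {dataset.uri}"
        )
    # Unrestricted datasets get whatever results were stored (empty dict if none).
    return results_store.get(dataset.uri, {})


if __name__ == "__main__":
    store = {"ds-1": {"rowCount": 10}, "ds-2": {"rowCount": 5}}
    print(get_profiling_results(Dataset("ds-1", "Unclassified"), store))  # {'rowCount': 10}
    try:
        get_profiling_results(Dataset("ds-2", "Secret"), store)
    except ProfilingAccessDenied as err:
        print(err)  # Profiling results are not available for Secret dataset ds-2
```

Routing both preview and profiling through one predicate such as `is_restricted` is one way to keep the two features from drifting apart when the set of restricted classifications changes.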


Showing 6 changed files with 185 additions and 205 deletions.
