Realtime gesture recognition with Jnect and Esper

###Overview

This page is about a tech demo for EclipseCon Europe 2012. We connect Microsoft Kinect with Eclipse technologies using:

- the Jnect plugin for processing Kinect Data

- the Eclipse Modeling Framework as the underlying live model representation format

- EMF-IncQuery for realtime pattern matching to recognize static gestures

- the Esper complex event processor to recognize gesture sequences in the event stream generated by IncQuery.

The overall goal is to demonstrate that the combination of these technologies yields a system that is capable of recognizing gestures (both static states and dynamic sequences) in a (soft) realtime fashion with common, off-the-shelf hardware.

We are aware that gesture recognition can be implemented in many different (and more efficient) ways. However, the aim is to show that Eclipse technologies, and EMF(-IncQuery) in particular, can be embedded into high-performance applications while retaining the well-known benefits of the model-driven/model-assisted approach.

####Acknowledgements

We wish to thank István Dávid for help with Esper and Dr. Jonas Helming for providing the original idea for the "YMCA" demo.

####Presentation

The following video illustrates the system in action as it was presented at EclipseCon Europe 2012:

The Jnect-IncQuery Realtime Complex Gesture Recognition Demo presented at ECE2012 from Istvan Rath on Vimeo.

The following slideshow contains a high-level overview of the architecture:

Gesture recognition with Jnect, EMF-IncQuery, Esper and GEF3D from Istvan Rath

###Trying the demo

####Getting the code

The complete source code for this demo is available under open source licences at GitHub.

Please note that several modifications to the original Jnect source were required to make this work, so the GitHub repository contains all the sources needed to run the software. The original Jnect should not be present in your configuration.

####Additional videos

####YMCA

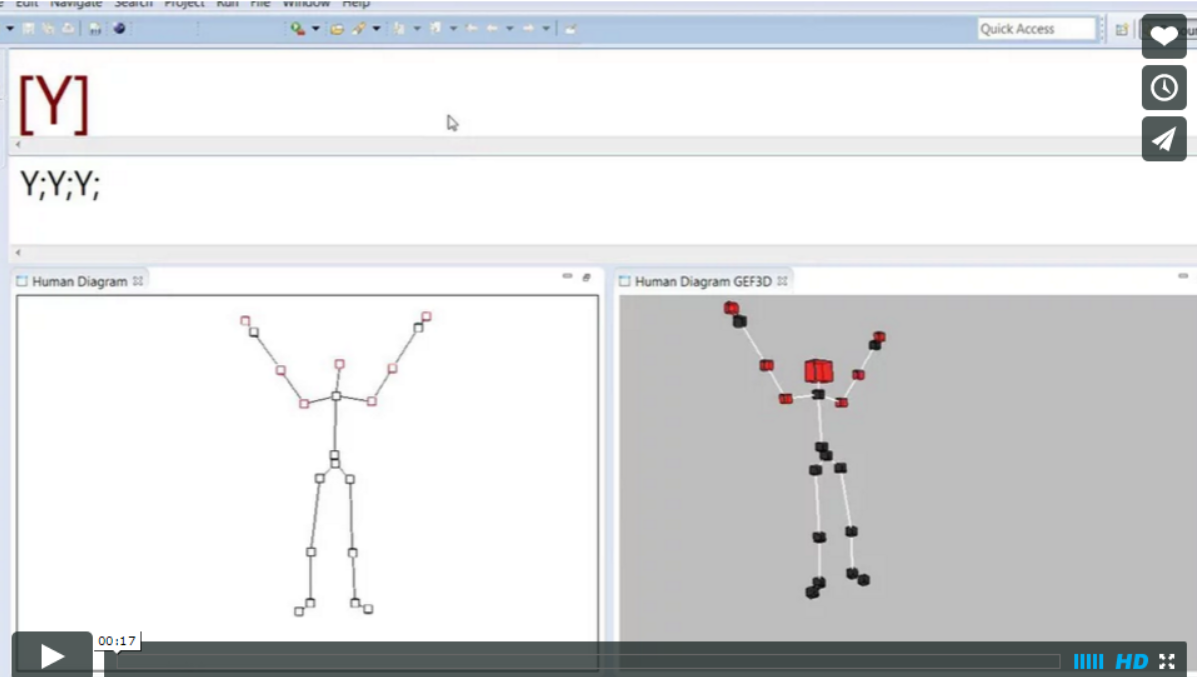

This video demonstrates the system in action. A test user performs the complete "YMCA" gesture sequence, where each character is represented by a particular body configuration as depicted here:

Each character is recognized as a graph pattern match on the live body model and converted into an "atomic recognition event" (shown as e.g. "[Y]" in the upper text field). The Esper CEP engine continuously analyzes the event stream (managing time windows while doing so), and whenever the complete "YMCA" (or "IQ") sequence is recognized, the "[ YMCA ]" character sequence is displayed.

####The Robot Demo

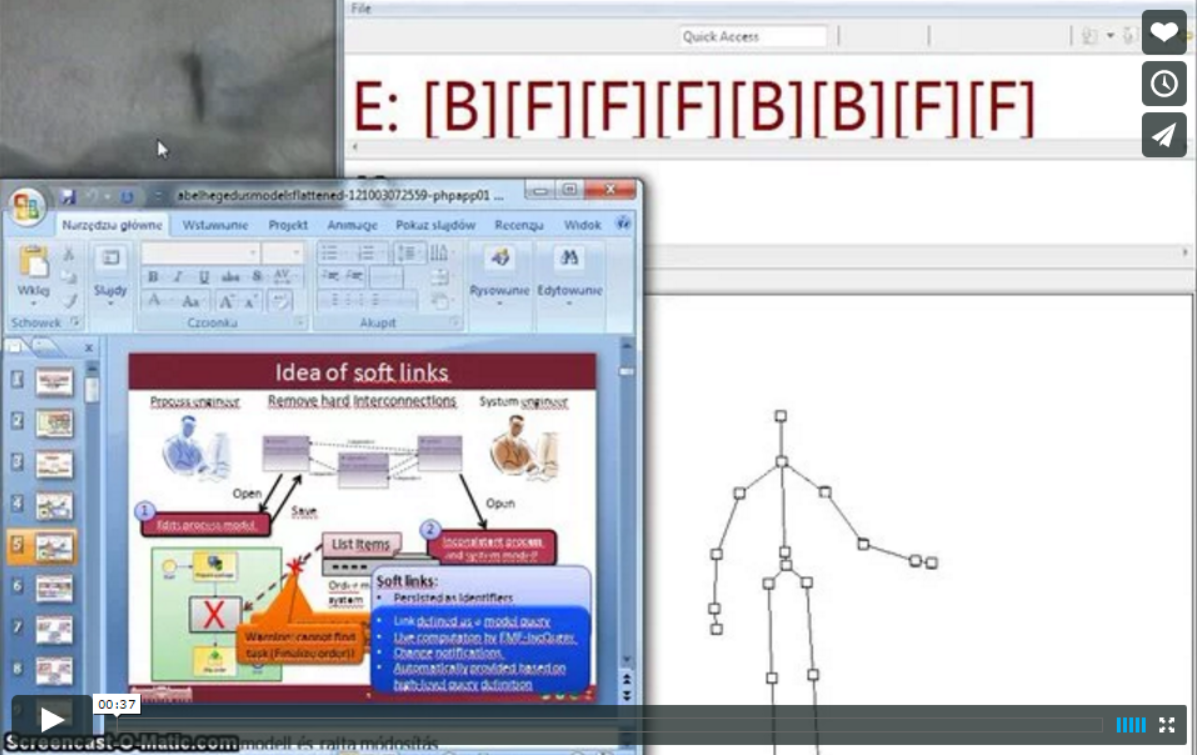

This video demonstrates two features:

- a gesture sequence (consisting of the "straight arm" as a starting state and the "bent arm" as the end state) is defined based on graph patterns and complex event patterns

- this gesture sequence is then translated to operating system-level keypresses so that an external tool (PowerPoint) can be controlled.

###How it works

The basis for this demo is the live EMF model maintained by the Jnect plugin. Essentially, Jnect converts the XML stream coming from the Microsoft Kinect SDK into an EMF instance model. In each "frame", the contents of the XML document are copied into the EMF model; this information consists mostly of the coordinates of body parts. Approximately 20 such frames are processed per second.

####Realtime pattern matching by EMF-IncQuery

Jnect's live EMF model is processed by EMF-IncQuery. EMF-IncQuery is an incremental query engine that instantiates complex graph-pattern based queries over EMF instance models, and automatically processes the model's changes (using the EMF Notification API) so that the query results are always kept up-to-date.

    /**
     * The body is in the "Y" position.
     */
    pattern Y(RH:RightHand,
              RE:RightElbow,
              RS:RightShoulder,
              LH:LeftHand,
              LE:LeftElbow,
              LS:LeftShoulder,
              H:Head
    ) {
        // both arms are stretched
        find stretchedRightArm(RH,RE,RS);
        find stretchedLeftArm(LH,LE,LS);
        // both hands are above the head
        find rightHandAboveHead(RH,H);
        find leftHandAboveHead(LH,H);
        // angle between upper arms is around PI/3
        find upperArmsInY(LE,LS,RE,RS);
    }

The "Y" pattern is an example of such a query. EMF-IncQuery queries are best read as a high-level, declarative specification (consisting of a set of constraints) that needs to be fulfilled by the model. Those model parts that fulfil the constraints of the query make up the match set.

In this example, the "match set" of the Y query will consist of a tuple defined by the RH, RE, RS, LH, LE, LS, H variables that will be bound to the EObjects corresponding to the user's body parts. The Y pattern is fulfilled if the body of the user reaches a state where all sub-queries (constraints) of the Y pattern hold.
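
The idea of a query result that stays current as the model changes can be pictured in plain Java. The following is only a minimal sketch under stated assumptions: `LiveQuerySketch`, `Joint` and `HandAboveHeadQuery` are hypothetical names, not part of Jnect, EMF or EMF-IncQuery, and the sketch assumes that a larger `y` coordinate means "higher". It simply re-evaluates one constraint on every change notification, whereas EMF-IncQuery propagates changes incrementally through its own engine.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical miniature of a live model; not the API of Jnect or EMF.
final class LiveQuerySketch {

    // A body part with a single coordinate and change listeners.
    static final class Joint {
        private double y;
        private final List<Runnable> listeners = new ArrayList<>();

        void addListener(Runnable listener) {
            listeners.add(listener);
        }

        double getY() {
            return y;
        }

        void setY(double newY) {
            y = newY;
            // Notify observers after every change, similar in spirit
            // to a model change notification.
            for (Runnable listener : listeners) {
                listener.run();
            }
        }
    }

    // Keeps the answer to "is the hand above the head?" up to date.
    static final class HandAboveHeadQuery {
        private final Joint hand;
        private final Joint head;
        private boolean matching;

        HandAboveHeadQuery(Joint hand, Joint head) {
            this.hand = hand;
            this.head = head;
            hand.addListener(this::reevaluate);
            head.addListener(this::reevaluate);
            reevaluate();
        }

        private void reevaluate() {
            // Assumption: a larger y value means a higher position.
            matching = hand.getY() > head.getY();
        }

        boolean isMatching() {
            return matching;
        }
    }
}
```

A caller never asks the query to recompute: moving a `Joint` with `setY` is enough for `isMatching()` to reflect the new body state.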

    pattern upperArmsInY(LE:LeftElbow,
                         LS:LeftShoulder,
                         RE:RightElbow,
                         RS:RightShoulder
    ) {
        // indicate coordinates to be used for check calculation
        PositionedElement.x(LE,LEX);
        PositionedElement.y(LE,LEY);
        PositionedElement.z(LE,LEZ);
        PositionedElement.x(LS,LSX);
        PositionedElement.y(LS,LSY);
        PositionedElement.z(LS,LSZ);
        PositionedElement.x(RE,REX);
        PositionedElement.y(RE,REY);
        PositionedElement.z(RE,REZ);
        PositionedElement.x(RS,RSX);
        PositionedElement.y(RS,RSY);
        PositionedElement.z(RS,RSZ);
        // check that the angle between the left upper arm (LE-LS)
        // and right upper arm (RE-RS) is around PI/3 (60 degrees)
        check({
            var float angleBetween = bodymodel::ymca::VectorMaths::angleBetween(
                bodymodel::ymca::VectorMaths::createVector(
                    bodymodel::MovingAverageCalculator::getCalculator("LEX").addValue(LEX).movingAvg,
                    bodymodel::MovingAverageCalculator::getCalculator("LEY").addValue(LEY).movingAvg,
                    bodymodel::MovingAverageCalculator::getCalculator("LEZ").addValue(LEZ).movingAvg,
                    bodymodel::MovingAverageCalculator::getCalculator("LSX").addValue(LSX).movingAvg,
                    bodymodel::MovingAverageCalculator::getCalculator("LSY").addValue(LSY).movingAvg,
                    bodymodel::MovingAverageCalculator::getCalculator("LSZ").addValue(LSZ).movingAvg
                ),
                bodymodel::ymca::VectorMaths::createVector(
                    bodymodel::MovingAverageCalculator::getCalculator("REX").addValue(REX).movingAvg,
                    bodymodel::MovingAverageCalculator::getCalculator("REY").addValue(REY).movingAvg,
                    bodymodel::MovingAverageCalculator::getCalculator("REZ").addValue(REZ).movingAvg,
                    bodymodel::MovingAverageCalculator::getCalculator("RSX").addValue(RSX).movingAvg,
                    bodymodel::MovingAverageCalculator::getCalculator("RSY").addValue(RSY).movingAvg,
                    bodymodel::MovingAverageCalculator::getCalculator("RSZ").addValue(RSZ).movingAvg
                )
            );
            // the last expression is the result of the check
            Math::abs(Math::PI/3 - angleBetween) < bodymodel::ymca::YMCAConstants::angleTolerance
        });
    }

The "upperArmsInY" pattern may be of particular interest. This pattern is used to define a geometrical constraint on the upper arms of the user. EMF-IncQuery allows the developer to include Xbase expressions (that will be compiled to Java code) and here this feature is heavily used to wire vector math calculations into the query.
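
The geometric test behind this check can be written out in plain Java. This is a minimal sketch and not the demo's `VectorMaths` class: the class name `VectorAngleSketch`, the reading of `createVector` as "vector from the first point to the second", and the tolerance value are all assumptions made for illustration. The angle is computed from the dot product, and the constraint holds when the angle is within the tolerance of PI/3.

```java
// Hypothetical stand-in for the vector helper used in the check expression;
// names and the tolerance value are assumptions, not taken from the repository.
final class VectorAngleSketch {

    // Assumed tolerance in radians (the demo reads its value from YMCAConstants).
    static final double ANGLE_TOLERANCE = 0.3;

    // Vector pointing from (x1, y1, z1) to (x2, y2, z2).
    static double[] createVector(double x1, double y1, double z1,
                                 double x2, double y2, double z2) {
        return new double[] { x2 - x1, y2 - y1, z2 - z1 };
    }

    // Angle in radians between two non-zero 3D vectors.
    static double angleBetween(double[] a, double[] b) {
        double dot = a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
        double normA = Math.sqrt(a[0] * a[0] + a[1] * a[1] + a[2] * a[2]);
        double normB = Math.sqrt(b[0] * b[0] + b[1] * b[1] + b[2] * b[2]);
        double cos = dot / (normA * normB);
        // Clamp to [-1, 1] so rounding error cannot push acos out of range.
        return Math.acos(Math.max(-1.0, Math.min(1.0, cos)));
    }

    // True if the two upper-arm vectors enclose roughly PI/3.
    static boolean upperArmsInY(double[] leftUpperArm, double[] rightUpperArm) {
        double angle = angleBetween(leftUpperArm, rightUpperArm);
        return Math.abs(Math.PI / 3 - angle) < ANGLE_TOLERANCE;
    }
}
```

The sketch leaves out the moving-average smoothing that the pattern applies to each coordinate before the vectors are built.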

####Complex event detection by Esper

EMF-IncQuery not only keeps the result/match set of queries up-to-date all the time, but it also provides event-driven deltas through its DeltaMonitor API that allows the developer to process changes in the query results in an efficient, event-driven way.

#####Feeding IncQuery pattern match events into Esper's event stream

    /**
     * Utility class to adapt IncQuery DeltaMonitor events to Esper events.
     * @author istvan rath
     *
     */
    public class EsperAdapter {

        // Field declarations are not shown in the original snippet;
        // the types below are inferred from how the fields are used.
        private final IncQueryMatcher<? extends IPatternMatch> matcher;
        private final DeltaMonitor<? extends IPatternMatch> dm;

        public EsperAdapter(IncQueryMatcher<? extends IPatternMatch> m) {
            matcher = m;
            dm = matcher.newDeltaMonitor(true);
            matcher.addCallbackAfterUpdates(new Runnable(){
                @Override
                public void run() {
                    // forward newly appeared matches
                    for (IPatternMatch pm : dm.matchFoundEvents) {
                        EsperManager.getInstance().sendEvent(
                            new PatternMatchEvent(pm, pm.patternName(), PatternMatchEventType.NEW));
                    }
                    // forward matches that have disappeared
                    for (IPatternMatch pm : dm.matchLostEvents) {
                        EsperManager.getInstance().sendEvent(
                            new PatternMatchEvent(pm, pm.patternName(), PatternMatchEventType.LOST));
                    }
                    dm.clear();
                }
            });
        }
    }

This code snippet demonstrates how the DeltaMonitor API can be used. The Runnable registered through addCallbackAfterUpdates is called only if the result set of the query has changed, that is, when the body state has changed enough that the recognized gestures need to be updated. In each such turn, the matchFoundEvents and matchLostEvents collections are processed: each newly found match and each lost match is converted into a PatternMatchEvent DTO (of type NEW or LOST, respectively) and pushed into the event stream, after which the monitor is cleared.
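
To make the found/lost bookkeeping concrete, here is a minimal plain-Java sketch of the same idea. It is not IncQuery's DeltaMonitor: `DeltaMonitorSketch` and its `update` method are hypothetical, and the sketch computes differences by comparing whole match sets, which only illustrates the contract (deltas accumulate until cleared, and the callback runs only when something changed).

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical illustration of delta tracking; not the IncQuery implementation.
final class DeltaMonitorSketch<T> {

    // Matches that appeared or disappeared since the last clear().
    final List<T> matchFoundEvents = new ArrayList<>();
    final List<T> matchLostEvents = new ArrayList<>();

    private Set<T> current = new LinkedHashSet<>();
    private final Runnable callbackAfterUpdates;

    DeltaMonitorSketch(Runnable callbackAfterUpdates) {
        this.callbackAfterUpdates = callbackAfterUpdates;
    }

    // Replaces the match set, records the differences and runs the
    // callback only if the match set actually changed.
    void update(Set<T> newMatches) {
        boolean changed = false;
        for (T match : newMatches) {
            if (!current.contains(match)) {
                matchFoundEvents.add(match);
                changed = true;
            }
        }
        for (T match : current) {
            if (!newMatches.contains(match)) {
                matchLostEvents.add(match);
                changed = true;
            }
        }
        current = new LinkedHashSet<>(newMatches);
        if (changed) {
            callbackAfterUpdates.run();
        }
    }

    // Forgets the recorded deltas once they have been processed.
    void clear() {
        matchFoundEvents.clear();
        matchLostEvents.clear();
    }
}
```

Clearing the monitor at the end of each callback, as the adapter does, keeps every delta from being reported more than once.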

#####Recognizing IncQuery pattern match events in discrete time windows

The Esper complex event processor is then used to process the stream of such events.

    public class AtomicPatternFilter extends AbstractFilter {

        public AtomicPatternFilter(EPAdministrator admin, String pName) {
            String stmt = "SELECT * FROM pattern[every-distinct(p.patternName, 1 seconds) p=PatternMatchEvent(patternName='"+pName+"' AND type='NEW')]";
            statement = admin.createEPL(stmt);
        }
    }

The AtomicPatternFilter demonstrates a basic EPL query that picks out a single ("distinct") atomic pattern match event from a 1-second time window. The listener shown next then feeds each such detection back into the event stream:

    public class EsperPatternListenerFeedback extends EsperPatternListener {

        @Override
        protected void logEvent(EventBean event) {
            super.logEvent(event);
            // insert EsperMatchEvent into event stream
            EsperManager.getInstance().sendEvent(new EsperMatchEvent(pName));
        }
    }

EsperPatternListenerFeedback provides feedback into the Esper event stream, turning the isolated low-level PatternMatchEvent into an EsperMatchEvent.
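
The effect of the 1-second window in the atomic filter can be approximated in plain Java. The sketch below is an assumption-laden illustration, not Esper code: `DistinctWithinWindowSketch` is a hypothetical class, timestamps are passed in explicitly, and Esper's exact expiry semantics for `every-distinct` are not reproduced. It only shows the intent: repeated detections of the same pattern within the window collapse into one event.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical approximation of "one event per pattern name per time window".
final class DistinctWithinWindowSketch {

    private final long windowMillis;
    // Timestamp of the last event passed on, per pattern name.
    private final Map<String, Long> lastAccepted = new HashMap<>();

    DistinctWithinWindowSketch(long windowMillis) {
        this.windowMillis = windowMillis;
    }

    // Returns true if the event should be passed on, false if it repeats
    // a pattern name that was already accepted within the window.
    boolean accept(String patternName, long timestampMillis) {
        Long last = lastAccepted.get(patternName);
        if (last != null && timestampMillis - last < windowMillis) {
            return false;
        }
        lastAccepted.put(patternName, timestampMillis);
        return true;
    }
}
```

With roughly 20 frames per second, a held pose could otherwise produce a burst of identical atomic events, which is the situation this kind of filtering is meant to avoid.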

#####Recognizing complex event patterns

Finally, complex event filters such as YMCAFilterWithWindowFeedback are used to search the stream of higher level EsperMatchEvents for complete gesture sequences like "YMCA".

    public class YMCAFilterWithWindowFeedback extends AbstractFilter {

        public YMCAFilterWithWindowFeedback(EPAdministrator admin) {
            String stmt = "SELECT * FROM pattern[" +
                "every(EsperMatchEvent(patternName='Y') -> " +
                "EsperMatchEvent(patternName='M') -> " +
                "EsperMatchEvent(patternName='C') -> " +
                "EsperMatchEvent(patternName='A') WHERE timer:within(10 sec))]";
            statement = admin.createEPL(stmt);
        }
    }
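
For readers unfamiliar with EPL patterns, the kind of sequence detection involved can be sketched as a small state machine in plain Java. This is an illustration under assumptions, not a translation of the statement above: `SequenceWithinSketch` is a hypothetical class, it assumes the intent is that the whole sequence completes within the time window counted from its first event, and it does not reproduce Esper's operator precedence or guard semantics.

```java
import java.util.List;

// Hypothetical state machine: recognizes an ordered sequence of pattern
// names that completes within a time window. The sequence must be non-empty.
final class SequenceWithinSketch {

    private final List<String> sequence;
    private final long windowMillis;
    private int next = 0;        // index of the element expected next
    private long startMillis;    // timestamp of the first matched element

    SequenceWithinSketch(List<String> sequence, long windowMillis) {
        this.sequence = sequence;
        this.windowMillis = windowMillis;
    }

    // Feeds one event; returns true when this event completes the
    // sequence in time.
    boolean onEvent(String patternName, long timestampMillis) {
        // Abandon a partial sequence whose window has expired.
        if (next > 0 && timestampMillis - startMillis > windowMillis) {
            next = 0;
        }
        // Events other than the expected one are ignored.
        if (!sequence.get(next).equals(patternName)) {
            return false;
        }
        if (next == 0) {
            startMillis = timestampMillis;
        }
        next++;
        if (next == sequence.size()) {
            next = 0;            // start over, ready for the next sequence
            return true;
        }
        return false;
    }
}
```

Constructed with the names "Y", "M", "C", "A" and a 10000 ms window, the sketch reports a match on the final "A" only if the events arrived in order and in time.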