
net: SetDeadline performance is poor #25729


Comments

|

Please show us the whole profile and/or show us the code so that we can recreate the problem ourselves. Thanks. |

|

@ianlancetaylor Can I upload the svg file? |

|

Yes, you can upload the svg file.


|

|

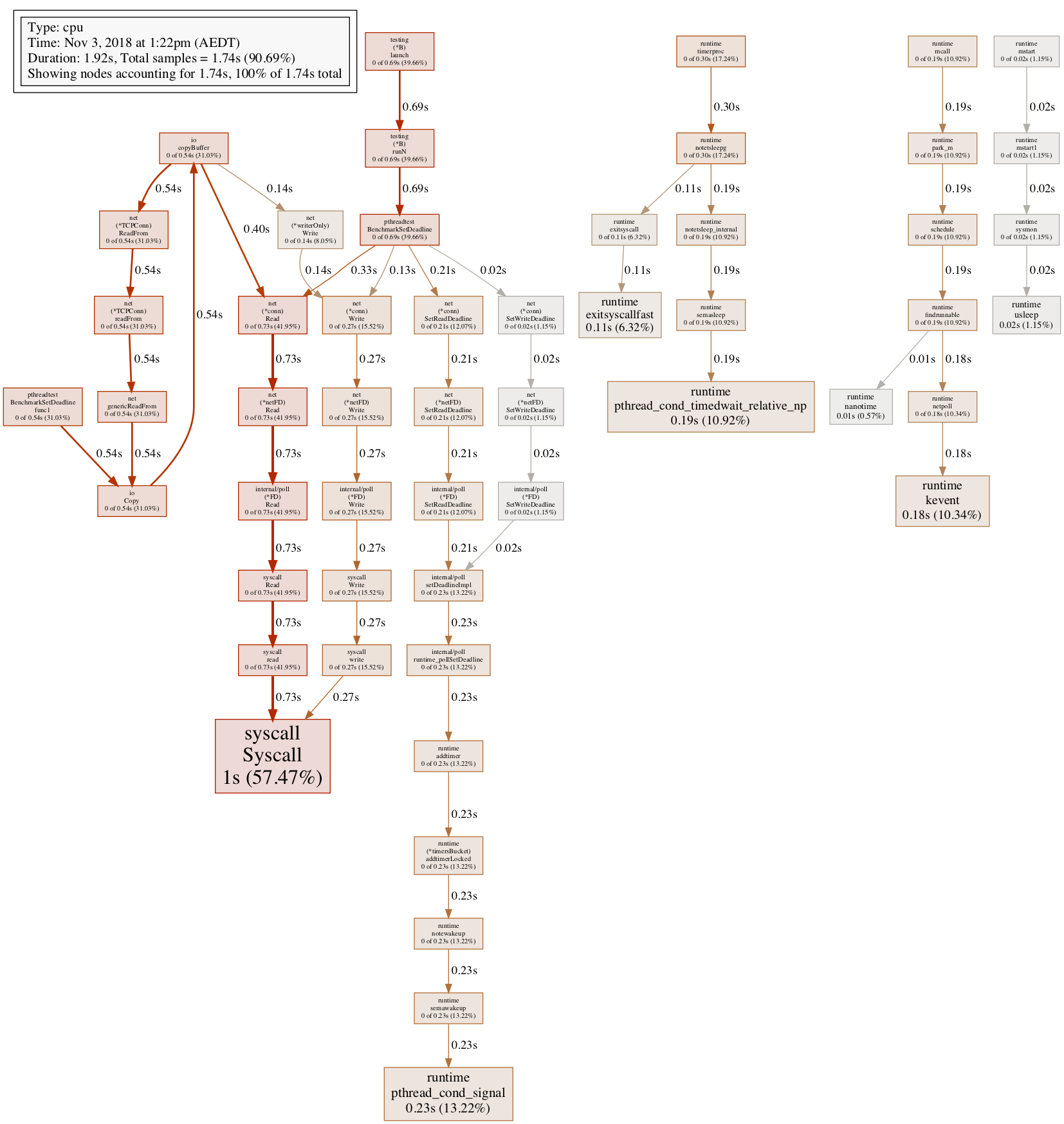

@sandyskies, could you build and profile the same program under Go 1.9? This may be related to #15133. The svg file shows the program spends 15% of CPU time in a It looks like the program calls |

|

@valyala Thanks for the answer. I ran it under Go 1.9 and got worse performance because of the multi-CPU timer problem in the issue that you mentioned, so I had to upgrade to 1.10. As I mentioned in my issue, I am running a benchmark. It is a server and client model in which the client sends some data to the server and the server simply sends it back. transport.ConnectHandler.send is the function that the server uses to send the data back to the client. Because these are short-lived connections, I have to call SetDeadline on every socket/connection. This is a benchmark, so I should not change the program logic I used. |

|

Hello, I had an opportunity to spend some time with the OP last week and I believe the following benchmark reproduces the issue they are seeing. On this laptop I see 18.95% of the time spent in From the spelunking the OP and I did, my interpretation of the trace is that there is thrashing between the goroutine that owns the timer and the main goroutine, which needs to wake the former so it can wrest control of the lock over the timer wheel. |

|

Some results on a different laptop |

|

A bunch of things to improve here. I am on it. |

|

Change https://golang.org/cl/146342 mentions this issue: |

|

Change https://golang.org/cl/146340 mentions this issue: |

|

Change https://golang.org/cl/146345 mentions this issue: |

|

Change https://golang.org/cl/146339 mentions this issue: |

|

Change https://golang.org/cl/146343 mentions this issue: |

|

Change https://golang.org/cl/146337 mentions this issue: |

|

Change https://golang.org/cl/146341 mentions this issue: |

|

Change https://golang.org/cl/146338 mentions this issue: |

|

@sandyskies could you post the full pprof profile? Or at least the netpoll part. You stripped the most important info. We see that lots of time is spent in descendants of setDeadlineImpl, but we don't see where exactly. setDeadlineImpl itself does not consume any significant time. |

|

@davecheney for your benchmark for the whole series I got: and for the standard net conn benchmark and SetDeadline stress: |

|

@sandyskies The file seems to be corrupted, it gives me: |

|

@dvyukov I tried viewing it as blame on GitHub, saved it as an svg file, and opened it with Internet Explorer; it works fine. |

|

Whatever I use to open it, it fails. Firefox fails with: ovenmitts fails with: and github webpage fails too: |

|

Please try

go tool pprof -png $url

This will generate a png which you can upload directly.

… On 1 Nov 2018, at 23:49, Dmitry Vyukov ***@***.***> wrote:

Whatever I try to open it, it fails.

Chrome fails with:

This page contains the following errors:

error on line 44 at column 89: Specification mandates value for attribute data-pjax-transient

Below is a rendering of the page up to the first error.

test/profile003.svg at master · sandyskies/test

Firefox fails with:

XML Parsing Error: not well-formed

Location: file:///tmp/profile003.svg

Line Number 44, Column 89: <meta name="request-id" content="9D4D:44ED:488F43:7E3ACF:5BDADD61" data-pjax-transient>

----------------------------------------------------------------------------------------^

ovenmitts fails with:

Error domain 1 code 41 on line 44 column 89 of file:///tmp/profile003.svg: Specification mandate value for attribute data-pjax-transient

and github webpage fails too:

https://github.com/sandyskies/test/blob/master/profile003.svg


|

|

I think the reason is that GitHub converts the svg file automatically. I put it into a zip file; please check again. @davecheney @dvyukov |

|

This works. |

It's not always necessary to wake timerproc even if we add a new timer to the top of the heap. Since we don't wake and reset timerproc when we remove timers, it still can be sleeping with shorter timeout. In such case it's more profitable to let it sleep and then update timeout when it wakes on its own rather than proactively wake it, let it update timeout and go to sleep again.

    name                  old time/op  new time/op  delta
    TCP4OneShotTimeout-6  18.6µs ± 1%  17.2µs ± 0%   -7.66%  (p=0.008 n=5+5)
    SetReadDeadline-6      562ns ± 5%   319ns ± 1%  -43.27%  (p=0.008 n=5+5)

Update #25729

Change-Id: Iec8eacb8563dbc574a82358b3bac7ac479c16826
Reviewed-on: https://go-review.googlesource.com/c/146337
Reviewed-by: Ian Lance Taylor <iant@golang.org>
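The wake-up rule described in this commit message can be illustrated with a toy model. This is not the runtime's code: `timerQueue`, `add`, and `remove` are hypothetical names, times are plain integers, and the "worker" is only a field that records when it would next wake.

```go
package main

import "fmt"

// timerQueue is a toy stand-in for a timer heap served by one sleeping worker.
type timerQueue struct {
	pending    []int64 // pending deadlines, unordered for simplicity
	sleepUntil int64   // time the worker is currently sleeping until
	wakeups    int     // how many times add had to wake the worker
}

// add registers a deadline and reports whether the worker was woken.
// The worker is woken only if it would otherwise oversleep the new deadline.
func (q *timerQueue) add(when int64) bool {
	q.pending = append(q.pending, when)
	if when < q.sleepUntil {
		q.sleepUntil = when
		q.wakeups++
		return true
	}
	return false
}

// remove drops a deadline but deliberately leaves the worker asleep,
// so sleepUntil may end up earlier than every remaining deadline.
func (q *timerQueue) remove(when int64) {
	for i, w := range q.pending {
		if w == when {
			q.pending = append(q.pending[:i], q.pending[i+1:]...)
			return
		}
	}
}

func main() {
	q := &timerQueue{pending: []int64{100, 500}, sleepUntil: 100}
	q.remove(100) // earliest pending deadline is now 500, worker still sleeps until 100

	// 300 becomes the earliest deadline, yet the worker wakes at 100 anyway,
	// so there is no need to wake it early.
	fmt.Println("add(300) woke worker:", q.add(300))

	// 50 is earlier than the worker's wake time, so it must be woken.
	fmt.Println("add(50) woke worker:", q.add(50))
	fmt.Println("total wakeups:", q.wakeups)
}
```

In the model, a stale early wake-up costs one spurious wake later, which is cheaper than waking the worker on every insertion at the top of the heap.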

Currently we always delete both read and write timers and then add them again. However, if the user sets up read and write deadlines separately, then we don't need to touch the other one.

    name                  old time/op  new time/op  delta
    TCP4OneShotTimeout-6  17.2µs ± 0%  17.2µs ± 0%     ~     (p=0.310 n=5+5)
    SetReadDeadline-6      319ns ± 1%   274ns ± 2%  -13.94%  (p=0.008 n=5+5)

Update #25729

Change-Id: I4c869c3083521de6d0cd6ca99a7609d4dd84b4e4
Reviewed-on: https://go-review.googlesource.com/c/146338
Reviewed-by: Ian Lance Taylor <iant@golang.org>
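On the caller side, this is the pattern that benefits: arm only the deadline that the next operation needs instead of calling SetDeadline for both directions. The sketch below uses hypothetical helpers `echoOnce` and `roundTrip`, and runs over `net.Pipe` only to stay self-contained. `net.Pipe` does not go through netpoll, so it shows the call pattern, not the performance effect.

```go
package main

import (
	"fmt"
	"io"
	"net"
	"time"
)

// echoOnce reads one message and writes it back, setting only the
// deadline that the upcoming operation depends on.
func echoOnce(conn net.Conn, timeout time.Duration) error {
	buf := make([]byte, 1024)
	if err := conn.SetReadDeadline(time.Now().Add(timeout)); err != nil {
		return err
	}
	n, err := conn.Read(buf)
	if err != nil {
		return err
	}
	if err := conn.SetWriteDeadline(time.Now().Add(timeout)); err != nil {
		return err
	}
	_, err = conn.Write(buf[:n])
	return err
}

// roundTrip sends msg through an in-memory connection served by echoOnce
// and returns the echoed reply.
func roundTrip(msg string) (string, error) {
	client, server := net.Pipe()
	defer client.Close()
	defer server.Close()

	done := make(chan error, 1)
	go func() { done <- echoOnce(server, time.Second) }()

	if _, err := client.Write([]byte(msg)); err != nil {
		return "", err
	}
	reply := make([]byte, len(msg))
	if _, err := io.ReadFull(client, reply); err != nil {
		return "", err
	}
	return string(reply), <-done
}

func main() {
	reply, err := roundTrip("ping")
	fmt.Println(reply, err)
}
```

With a real `*net.TCPConn` the same two calls apply unchanged.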

Currently when netpoll deadline is incrementally prolonged, we delete and re-add timer each time. Add modtimer function that does both and use it when we need to modify an existing netpoll timer to avoid unnecessary lock/unlock.

    name                  old time/op  new time/op  delta
    TCP4OneShotTimeout-6  17.2µs ± 0%  17.0µs ± 0%  -0.82%  (p=0.008 n=5+5)
    SetReadDeadline-6      274ns ± 2%   261ns ± 0%  -4.89%  (p=0.008 n=5+5)

Update #25729

Change-Id: I08b89dbbc1785dd180e967a37b0aa23b0c4613a8
Reviewed-on: https://go-review.googlesource.com/c/146339
Reviewed-by: Ian Lance Taylor <iant@golang.org>
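A typical source of "incrementally prolonged" deadlines is an idle timeout that is pushed forward before every read. The following is a minimal sketch of that caller-side pattern, with hypothetical helpers `readWithIdleTimeout` and `collect`. It again uses `net.Pipe` purely to be runnable without a network, so it does not exercise the netpoll timer itself.

```go
package main

import (
	"fmt"
	"io"
	"net"
	"time"
)

// readWithIdleTimeout reads until EOF and extends the read deadline before
// every Read, so the connection only times out after idle without data.
func readWithIdleTimeout(conn net.Conn, idle time.Duration) ([]byte, error) {
	var out []byte
	buf := make([]byte, 512)
	for {
		if err := conn.SetReadDeadline(time.Now().Add(idle)); err != nil {
			return out, err
		}
		n, err := conn.Read(buf)
		out = append(out, buf[:n]...)
		if err == io.EOF {
			return out, nil
		}
		if err != nil {
			return out, err
		}
	}
}

// collect writes the chunks from one end of an in-memory connection and
// gathers them on the other end with readWithIdleTimeout.
func collect(chunks []string) (string, error) {
	client, server := net.Pipe()
	defer server.Close()
	go func() {
		defer client.Close() // closing signals EOF to the reader
		for _, c := range chunks {
			if _, err := client.Write([]byte(c)); err != nil {
				return
			}
		}
	}()
	data, err := readWithIdleTimeout(server, time.Second)
	return string(data), err
}

func main() {
	data, err := collect([]string{"a", "b", "c"})
	fmt.Println(data, err)
}
```

Each loop iteration modifies an already armed deadline, which is the case the commit above describes as modifying an existing timer instead of deleting and re-adding it.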

Move startNano from runtime to time package. In preparation for a subsequent change that speeds up Since and Until. This also makes code simpler as we have less assembly as the result, monotonic time handling is better localized in time package. This changes values returned from nanotime on windows (it does not account for startNano anymore), current comments state that it's important, but it's unclear how it can be important since no other OS does this.

Update #25729

Change-Id: I2275d57b7b5ed8fd0d53eb0f19d55a86136cc555
Reviewed-on: https://go-review.googlesource.com/c/146340
Reviewed-by: Ian Lance Taylor <iant@golang.org>

time.now is somewhat expensive (much more expensive than nanotime), in the common case when Time has monotonic time we don't actually need to call time.now in Since/Until as we can do calculation based purely on monotonic times.

    name                  old time/op  new time/op  delta
    TCP4OneShotTimeout-6  17.0µs ± 0%  17.1µs ± 1%     ~     (p=0.151 n=5+5)
    SetReadDeadline-6      261ns ± 0%   234ns ± 1%  -10.35%  (p=0.008 n=5+5)

Benchmark that only calls Until:

    benchmark       old ns/op  new ns/op  delta
    BenchmarkUntil  54.0       29.5       -45.37%

Update #25729

Change-Id: I5ac5af3eb1fe9f583cf79299f10b84501b1a0d7d
Reviewed-on: https://go-review.googlesource.com/c/146341
Run-TryBot: Dmitry Vyukov <dvyukov@google.com>
Reviewed-by: Ian Lance Taylor <iant@golang.org>
TryBot-Result: Gobot Gobot <gobot@golang.org>

The nanotime wrappers in runtime introduce a bunch of unnecessary code onto hot paths, e.g.:

    0000000000449d70 <time.runtimeNano>:
      449d70: 64 48 8b 0c 25 f8 ff    mov    %fs:0xfffffffffffffff8,%rcx
      449d77: ff ff
      449d79: 48 3b 61 10             cmp    0x10(%rcx),%rsp
      449d7d: 76 26                   jbe    449da5 <time.runtimeNano+0x35>
      449d7f: 48 83 ec 10             sub    $0x10,%rsp
      449d83: 48 89 6c 24 08          mov    %rbp,0x8(%rsp)
      449d88: 48 8d 6c 24 08          lea    0x8(%rsp),%rbp
      449d8d: e8 ae 18 01 00          callq  45b640 <runtime.nanotime>
      449d92: 48 8b 04 24             mov    (%rsp),%rax
      449d96: 48 89 44 24 18          mov    %rax,0x18(%rsp)
      449d9b: 48 8b 6c 24 08          mov    0x8(%rsp),%rbp
      449da0: 48 83 c4 10             add    $0x10,%rsp
      449da4: c3                      retq
      449da5: e8 56 e0 00 00          callq  457e00 <runtime.morestack_noctxt>
      449daa: eb c4                   jmp    449d70 <time.runtimeNano>

Move them to the corresponding packages which eliminates all of this.

    name                  old time/op  new time/op  delta
    TCP4OneShotTimeout-6  17.1µs ± 1%  17.0µs ± 0%  -0.66%  (p=0.032 n=5+5)
    SetReadDeadline-6      234ns ± 1%   232ns ± 0%  -0.77%  (p=0.016 n=5+4)

Update #25729

Change-Id: Iee05027adcdc289ba895c5f5a37f154e451bc862
Reviewed-on: https://go-review.googlesource.com/c/146342
Run-TryBot: Dmitry Vyukov <dvyukov@google.com>
TryBot-Result: Gobot Gobot <gobot@golang.org>
Reviewed-by: Ian Lance Taylor <iant@golang.org>

…imers

We only need the memory barrier in poll_runtime_pollSetDeadline only when one of the timers has fired, which is not the expected case. Memory barrier can be somewhat expensive on some archs, so execute it only if one of the timers has in fact fired.

    name                  old time/op  new time/op  delta
    TCP4OneShotTimeout-6  17.0µs ± 0%  17.1µs ± 0%  +0.35%  (p=0.032 n=5+5)
    SetReadDeadline-6      232ns ± 0%   230ns ± 0%  -1.03%  (p=0.000 n=4+5)

Update #25729

Change-Id: Ifce6f505b9e7ba3717bad8f454077a2e94ea6e75
Reviewed-on: https://go-review.googlesource.com/c/146343
Reviewed-by: Ian Lance Taylor <iant@golang.org>

We only need the memory barrier from these stores, and we only store nil over nil or over a static function value. The write barrier is unnecessary.

    name                  old time/op  new time/op  delta
    TCP4OneShotTimeout-6  17.0µs ± 0%  17.0µs ± 0%  -0.43%  (p=0.032 n=5+5)
    SetReadDeadline-6      205ns ± 1%   205ns ± 1%    ~     (p=0.683 n=5+5)

Update #25729

Change-Id: I66c097a1db7188697ddfc381f31acec053dfed2c
Reviewed-on: https://go-review.googlesource.com/c/146345
Run-TryBot: Dmitry Vyukov <dvyukov@google.com>
TryBot-Result: Gobot Gobot <gobot@golang.org>
Reviewed-by: Ian Lance Taylor <iant@golang.org>

|

@dvyukov thank you very much, this patch set has had a substantial impact on my benchmark. |

|

@dvyukov I've removed the errant allocations in the benchmark (they were coming from the Both the improvements in throughput and latency are far less affected by |

|

@dvyukov - Did you have anything else in mind for this issue ? |

|

@agnivade no |

|

Thank you all! |

Please answer these questions before submitting your issue. Thanks!

What version of Go are you using (go version)?

go version go1.10.2 linux/amd64

Does this issue reproduce with the latest release?

yes

What operating system and processor architecture are you using (go env)?

GOARCH="amd64"

GOBIN=""

GOCACHE="/home/svn/jessemjchen/.cache/go-build"

GOEXE=""

GOHOSTARCH="amd64"

GOHOSTOS="linux"

GOOS="linux"

GOPATH="/home/svn/jessemjchen"

GORACE=""

GOROOT="/home/svn/go"

GOTMPDIR=""

GOTOOLDIR="/home/svn/go/pkg/tool/linux_amd64"

GCCGO="gccgo"

CC="gcc"

CXX="g++"

CGO_ENABLED="1"

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fmessage-length=0 -fdebug-prefix-map=/tmp/go-build019705885=/tmp/go-build"

What did you do?

I wrote a server in Go that uses SetReadDeadline/SetWriteDeadline to set timeouts. When I benchmarked it and collected a CPU profile with pprof, performance was poor because of these two functions.

What did you expect to see?

SetReadDeadline/SetWriteDeadline should take up less CPU time.

What did you see instead?

SetReadDeadline/SetWriteDeadline take up a lot of CPU time.
