
Shmif

The shmif (shared memory interface) is used both to split engine components into smaller, less privileged processes, improving security, stability and performance, and to allow greater flexibility and choice in data sources and data providers for specific tasks such as video encoding and decoding, network communication and so on.

It is also used as a bridging transport for translating display server protocols and for implementing dedicated arcan-capable software backends.

The basic implementation can be found in src/shmif/ along with its engine backend in platform/posix/frameserver.c. It covers:

- connecting and authenticating with a known running arcan application

- passing / packing / unpacking initial arguments

- mapping resources like display surfaces and audio buffers

- synchronized data transfers

- initializing from a pre-set context (authoritative mode)

- events (device input, metadata)

- block transfers (descriptor passing)

- extended operations for specialized applications (negotiated)

While it is possible to link and connect using libarcan-shmif from other projects, it will remain in a state of aggressive development and flux for a long time to come, which makes it a poor choice as a display server API or communication protocol. The related subprojects and the engine itself thus require it to be updated in lockstep and synced/rebuilt whenever a new version arrives.

The set of features present in shmif has evolved over many years of experimentation to encompass most of what current and future desktops and programs require, balanced against reducing global system complexity in favor of stronger 'desktop kernel' like local complexity. The display server should be hard to write, clients should be easy.

Particular emphasis has been placed on not accepting the loss of currently expected features, while still allowing strong, user-defined compartmentalization and control (sandboxing) without relying on side channels or other IPC systems 'picking up the slack'.

Ideally, a client should be able to operate with reduced capabilities and negotiate up to "normal", if permitted by the user, even when restricted to an extremely reduced set of system calls on an isolated / temporary file system.

To summarize the scope of basic and extended features covered by shmif:

There is always at least one video buffer available, but it can be renegotiated and resized to account for most synchronization needs. The default color packing format is compile-time locked to R8G8B8A8 linear RGB, though platform-native handle passing mechanisms can be used, and there are advanced renegotiation options to handle floating-point HDR formats and sRGB.

The shmif connection can be negotiated to carry audio at a custom samplerate, interleaved over a set of caller-requested buffers whose sizes are negotiated between client needs and output device needs. The packing, channel layout and data format are currently locked at compile time.

There is a provision to request and negotiate additional complete shmif segments with a life-span locked to the 'primary' one, although only one segment is actually guaranteed. These can be of a variety of subtypes to account for alternative representations, popups, decorations (e.g. titlebars) and custom mouse cursors.

The input model covers keyboards, mice, trackpads, touch displays, game pads and various analog sensors. Keyboard data covers both lower-level primitives like scancodes and keycodes, mid-level abstractions like keysyms and unicode code points, and high-level abstractions like client-defined labels.

A segment has a single direction, either "input" (from the server perspective) or "output" where the latter is used for screen recording, streaming and similar purposes.

Since each segment and requested subsegment is bound to an immutable type from a predefined set, this type can also be used to announce support for alternative data representations, such as textual serialization of menus or screen-reader-friendly simplified 'disability' outputs, but also for more traditional forms like initial/dynamic desktop and tray icons.

There are two mechanisms for providing ancillary data, and both involve file descriptor passing. The client can indicate an estimate of its current state size to signal that it is possible to snapshot and restore its state, allowing for network serialization and safer suspend/resume operations. It can also indicate that it is ready/willing to accept or provide binary transfers as a stream or sealed blob, in order to provide uniform load/save actions.

Clipboard operations are treated as two explicit subsegments: an input segment, requested by a client that can provide clipboard data, and an output segment that can be dynamically pushed into the client as a means of performing a paste operation. The layout and management of a clipboard is similar to a normal segment, so it can carry raw text, descriptor-encapsulated contents and raw audio/video.

It is possible to notify the server about application-specific key/value/range config data that can be retained across connections for uniform configuration sharing and persistence.

Specific segment types can be initiated and setup (not negotiated) from the server to allow specially privileged, trusted and preauthenticated client access to more sensitive operations, like injecting input events into the shared event loop.

The server can dynamically provide / switch fonts, both actual typeface in use (along with secondary, tertiary, ... fallbacks) and rasterization parameters such as hinting and physical metrics.

There is event-triggered output reconfiguration that indicates a change in display density and more subtle details like LED layout, along with font metadata that explicitly covers the physical size (mm) of what the user currently deems a readable component size.

Among the metadata that can be both defined initially and overridden dynamically is language hinting, in terms of input language and text output language.

For accelerated use, there is a mechanism for indicating which GPU device or subdevice to use, and to signal when that device is invalidated (due to load balancing or thermal mitigation). The same mechanism is also used to indicate a migration connection point or a fallback connection point for a client to be able to resume a connection later, should the server crash.

There are also provisions for custom or extended data transfers that may be privileged, unavailable, or for other reasons require a capability negotiation. These are intended for more specialized targets, with the current (experimental) ones being:

With the color/gamma feature enabled, a client gets access to two sets of color/gamma ramps along with metadata describing additional details about the capabilities of the current output display. This is used for games that expose brightness controls, for professional color calibration and so on.

With the VR feature enabled, a client can provide sensor-fusion updates to a skeletal/joint model of an avatar, along with a control interface for working with head-mounted displays for virtual reality applications. It is also possible for the server side to provide data about user viewing angle, field of view and so on, for nested 3D/VR compositing.

With the vector feature enabled, a client can provide 3D or 2D vector data with relevant metadata (vertices, indices, colors) and use the normal video buffering for packing a texture, in order to defer rasterization to the last possible stage as a means of increasing output quality and responding faster to dynamic changes in output density.

The RBC feature is not available in any public builds, and the experiments have not yet progressed to a reasonable state. RBC will be a rendering and transformation format that takes the safe subset of the functions tied to the Lua API used in LWA builds and in the main instance, and forwards them to another arcan instance for remote rendering.

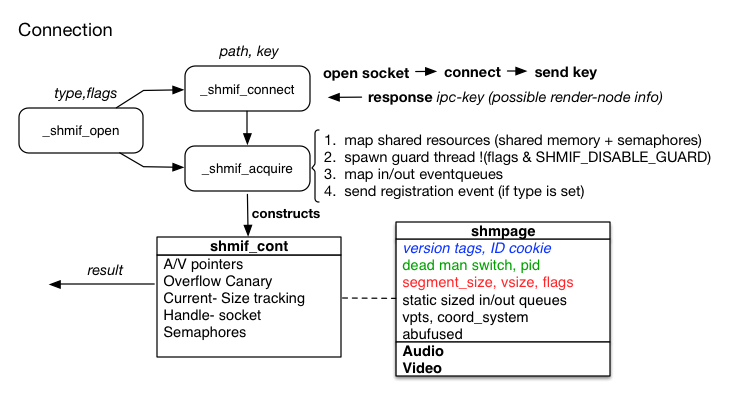

As the name hopefully implies, SHMIF is a shared-memory based interface, and theoretically only the contents of a shared memory page are needed. For practical and efficiency reasons, however, additional IPC primitives such as sockets and semaphores are also used, in order to work well on a wider range of operating systems.

The shared memory page contains (at least) negotiation metadata, two ring buffers that act as input and output event queues, a subprotocol region (empty by default), a dynamically sized video region and a dynamically sized audio region. Each side of the interface keeps a local copy of the metadata that it considers the current agreed-upon interpretation; upon a mismatch with the contents of the metadata region, the contents are treated either as corrupted (reason to terminate) or as a request to renegotiate ('resize').
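A minimal sketch of that mismatch check follows. The `struct meta` layout, its field names and the rule that a cookie mismatch means corruption while a dimension or slicing mismatch means renegotiation are simplifying assumptions for illustration, not the actual shmpage definition in src/shmif.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical, simplified view of the negotiation metadata. */
struct meta {
	uint64_t cookie;      /* layout/version identifier */
	uint16_t w, h;        /* video region dimensions */
	uint8_t vbufs, abufs; /* buffer slicing */
};

enum meta_verdict { META_OK, META_RENEGOTIATE, META_CORRUPT };

/* Compare the locally agreed-upon copy against what currently sits in
 * the shared page (volatile: the other side may write it at any time). */
static enum meta_verdict meta_check(const struct meta* local,
	const volatile struct meta* shared)
{
	assert(local && shared);
	if (shared->cookie != local->cookie)
		return META_CORRUPT;
	if (shared->w != local->w || shared->h != local->h ||
		shared->vbufs != local->vbufs || shared->abufs != local->abufs)
		return META_RENEGOTIATE;
	return META_OK;
}
```

Checking the cookie first means that a damaged page is never mistaken for a resize request.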

The metadata covers information like dimensions of the page itself, the format and slicing of the buffer regions and type information. A connection between an Arcan client and server can have multiple pages like this, though one is considered the 'primary segment' and additional ones are referred to as 'subsegments'.

One side acts as a producer (populating the audio, video and subprotocol regions) while the other acts as a consumer. Which is which depends on the type of the segment. With an 'OUTPUT' segment, for instance, it is the server that populates the audio and video buffers (used for recording, streaming and sharing).

This model borrows heavily from a 'memory map' in embedded systems engineering, where different components are mapped into a shared memory space at fixed or semi-fixed locations or offsets. There are many reasons why this was chosen rather than the arguably more common approach of serializing data over a socket, but some of the dominant ones are that it can bring the number of data pack/unpack and read/write operations down to an absolute minimum, requiring no OS system calls after setup (allowing for very restrictive sandboxing policies and reduced syscall latency jitter). It also means that it can theoretically be used without process separation or an MMU in highly specialized environments.

Here, the socket is only used for signalling/assisting I/O multiplexing (when a client wants to react to events happening on both the shmif connection and some other source) and for opaque handle passing when the OS does not provide other out-of-band mechanisms for this kind of operation.

The event ring buffers are explicitly polled in the main Arcan process (and can be polled, blocked on or multiplexed in clients) in order to prevent priority inversion, resource exhaustion or denial-of-service scenarios, and are multiplexed/filtered into a main internal event queue (unless that queue is saturated above a certain ratio). Enqueue operations that would compromise this instead trigger a warning and possible loss of events (with a bias towards dropping or merging events that are less dangerous, e.g. analog sensor input). This also doubles as an ANR (application-not-responding) mechanism.
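The saturation policy can be illustrated with a toy fixed-size ring. The queue size, the 75% threshold and the two-kind event model are hypothetical; the sketch only shows the idea that low-value analog samples are shed before the ring is completely full, while other events are refused only at hard saturation.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define QUEUE_SZ 8         /* hypothetical; real queue sizes differ */
#define SATURATION_PCT 75  /* hypothetical threshold for shedding */

enum ev_kind { EV_KEY, EV_ANALOG };

struct ring {
	enum ev_kind slots[QUEUE_SZ];
	size_t front, back; /* front == back means empty */
};

static size_t ring_used(const struct ring* r)
{
	return (r->back + QUEUE_SZ - r->front) % QUEUE_SZ;
}

/* Returns false when the event was dropped. One slot is kept free so a
 * full ring can be told apart from an empty one (capacity QUEUE_SZ - 1). */
static bool ring_enqueue(struct ring* r, enum ev_kind kind)
{
	assert(r);
	size_t used = ring_used(r);
	if (used == QUEUE_SZ - 1)
		return false; /* hard saturation: drop anything */
	if (kind == EV_ANALOG &&
		used * 100 >= (QUEUE_SZ - 1) * SATURATION_PCT)
		return false; /* soft saturation: shed analog samples first */
	r->slots[r->back] = kind;
	r->back = (r->back + 1) % QUEUE_SZ;
	return true;
}

static bool ring_dequeue(struct ring* r, enum ev_kind* out)
{
	if (r->front == r->back)
		return false;
	*out = r->slots[r->front];
	r->front = (r->front + 1) % QUEUE_SZ;
	return true;
}
```

Because the reader only ever polls, a client that floods the ring just loses its own events instead of stalling the server.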

This implies that a malicious or unresponsive client gets punished for being malicious or unresponsive. It is also not, strictly speaking, treated exactly like a queue as some events override others or merge (the big examples being DISPLAYHINT and FONTHINT).
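The override/merge behavior can be sketched with a small array-backed queue where a new display hint replaces a pending one instead of queueing behind it. The event structure and `queue_push` are hypothetical stand-ins, not the shmif event types.

```c
#include <assert.h>
#include <stddef.h>

enum kind { K_INPUT, K_DISPLAYHINT };
struct ev { enum kind kind; int w, h; };

#define CAP 16 /* hypothetical capacity */
struct queue { struct ev items[CAP]; size_t n; };

/* Returns 1 if merged into a pending hint, 0 if appended, -1 if full.
 * Only the latest requested size matters, so an older hint is simply
 * overwritten in place; input events are always appended. */
static int queue_push(struct queue* q, struct ev e)
{
	assert(q);
	if (e.kind == K_DISPLAYHINT){
		for (size_t i = 0; i < q->n; i++)
			if (q->items[i].kind == K_DISPLAYHINT){
				q->items[i] = e;
				return 1;
			}
	}
	if (q->n == CAP)
		return -1;
	q->items[q->n++] = e;
	return 0;
}
```

Merging in place keeps the hint at its original queue position; an alternative design would move it to the back, at the cost of delaying the resize further.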

The video and audio regions are initially one buffer each, but can be requested to be split up into several smaller buffers as part of an extended resize request.

In the multi-buffer case, a bitmask (apending, vpending) indicates which slots are populated with unsynchronized data and which one is the most recent, allowing video synchronization to pick only the latest one, and audio synchronization to use buffer sizes that match the current output device without repacking. This allows for most synchronization/latency/bandwidth tradeoff schemes, from single to double/triple buffering to swap with tear control.

Other metadata indicate the spatial relationship between segments (think popup menus), rendering hints (coordinate system with the origin in the upper or lower left), a dead man's switch (if either side of the connection changes the value, the connection is terminated), version numbers, overflow cookies and dirty region tracking (for partial updates).
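The difference between "latest only" video consumption and "drain everything" audio consumption can be sketched with a bitmask plus a 1-based index of the newest slot, loosely mirroring vpending/vready. The structure and function names are hypothetical, and the audio path drains in slot-index order as a simplification (a real implementation would follow submission order).

```c
#include <assert.h>
#include <stdint.h>

/* Up to 8 slots; ready is 1-based (0 = nothing completed yet). */
struct slots { uint8_t pending; uint8_t ready; };

static void producer_commit(struct slots* s, unsigned slot)
{
	assert(slot < 8);
	s->pending |= (uint8_t)(1u << slot);
	s->ready = (uint8_t)(slot + 1);
}

/* Video-style: take only the newest slot, release all others. */
static int consume_latest(struct slots* s)
{
	if (!s->ready)
		return -1;
	int slot = s->ready - 1;
	s->pending = 0;
	s->ready = 0;
	return slot;
}

/* Audio-style: hand out every pending slot, one per call. */
static int consume_next(struct slots* s)
{
	for (int i = 0; i < 8; i++)
		if (s->pending & (1u << i)){
			s->pending &= (uint8_t)~(1u << i);
			if (!s->pending)
				s->ready = 0;
			return i;
		}
	return -1;
}
```

Skipping stale video frames trades completeness for latency, whereas dropping audio slots would be audible, which is why the two paths differ.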
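Dirty region tracking can be as simple as accumulating a bounding box of everything touched since the last transfer. The sketch below is a hypothetical accumulator, not the shmif field layout; rectangles use exclusive x2/y2 coordinates.

```c
#include <assert.h>

/* Empty when x2 <= x1 or y2 <= y1. */
struct rect { int x1, y1, x2, y2; };

static int rect_empty(struct rect r)
{
	return r.x2 <= r.x1 || r.y2 <= r.y1;
}

/* Grow the accumulated region to the bounding box of itself and r. */
static void dirty_add(struct rect* acc, struct rect r)
{
	assert(acc);
	if (rect_empty(r))
		return;
	if (rect_empty(*acc)){
		*acc = r;
		return;
	}
	if (r.x1 < acc->x1) acc->x1 = r.x1;
	if (r.y1 < acc->y1) acc->y1 = r.y1;
	if (r.x2 > acc->x2) acc->x2 = r.x2;
	if (r.y2 > acc->y2) acc->y2 = r.y2;
}
```

A single bounding box is coarse (two far-apart changes mark everything between them), but it keeps the shared metadata fixed-size.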

Semaphores are used as a block/wakeup mechanism for buffer transfer synchronization and for event-queue saturation recovery.

We distinguish between authoritative and non-authoritative connections. An authoritative connection is set up and prepared by a parent process, while a non-authoritative connection needs some kind of connection and authentication mechanism (typically UNIX domain sockets where available). For an authoritative connection, the parent process can be rather aggressive (i.e. outright kill the child process if it does not follow protocol), while in the non-authoritative case only the connection will be terminated.

To better explain how this works, we will go through a few code examples, starting with how a connection is made from the client perspective:

```c
#include <arcan_shmif.h>

struct arg_arr* aarr;

struct arcan_shmif_cont cont = arcan_shmif_open(
	SEGID_APPLICATION, SHMIF_ACQUIRE_FATALFAIL, &aarr);

struct arcan_shmif_initial* initial;

if (arcan_shmif_initial(&cont, &initial) == sizeof(struct arcan_shmif_initial))
{
	/* ... */
}
```

The wrapper function arcan_shmif_open takes care of checking the environment to see if we are in authoritative or non-authoritative mode, and of getting hold of arcan-specific arguments (arg_arr), which are kept separate to avoid mixing their parsing with other forms of externally supplied arguments, like ARGV in C and other languages.

The specific flag, SHMIF_ACQUIRE_FATALFAIL will terminate the process with an EXIT_FAILURE error code and possibly an error message on stderr if a running arcan session could not be found. There are other flags that can enable a retry loop, trigger callbacks on the event of a connection being abruptly terminated and so on.

The SEGID_APPLICATION value indicates that the connection should identify the primary segment as an application. This is a hint to the appl, and there are a number of different segment types to pick from to indicate the purpose of the connection (especially important for odd drawing scenarios like head-mounted displays, debugging and accessibility purposes). The full list of segment types is described in shmif/arcan_shmif_event.h.

There is also an extended connection version that takes care of additional registration for situations where an initial title, identity, GUID and other parameters are desired.

By default, there is also an (optional) guard thread associated with each connection in order to account for the corner cases where the other side of the connection is unresponsive or has crashed. It is simply a sleeping / polling loop that uses some monitor handle (like a process identifier or pipe-descriptor, though the actual mechanism can vary between operating systems).

Should periodic verification fail, the guard thread will pull a volatile variable (the dead man's switch) and forcibly release the set of semaphores to wake up any blocked local threads.
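One iteration of such a guard loop can be sketched under POSIX assumptions: liveness is probed with `kill(pid, 0)`, and the wake-up is a caller-supplied callback standing in for posting the semaphores. `struct guard`, `guard_tick` and `pid_alive` are hypothetical names; the real guard thread and its monitor handle vary between platforms.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <signal.h>
#include <stdbool.h>
#include <sys/types.h>
#include <unistd.h>

/* True if a process with this pid exists; EPERM still means it exists. */
static bool pid_alive(pid_t pid)
{
	if (pid <= 0)
		return false; /* never probe process groups or broadcast */
	return kill(pid, 0) == 0 || errno == EPERM;
}

struct guard {
	pid_t peer;
	volatile int* dms;       /* dead man's switch, nonzero = alive */
	void (*wake)(void* tag); /* e.g. post the blocked semaphores */
	void* tag;
};

/* A real guard thread would call this from a sleep/poll loop.
 * Returns false once the switch has been pulled. */
static bool guard_tick(struct guard* g)
{
	assert(g && g->dms);
	if (*g->dms && pid_alive(g->peer))
		return true;
	*g->dms = 0;      /* pull the switch ... */
	if (g->wake)
		g->wake(g->tag); /* ... and wake any blocked threads */
	return false;
}
```

Probing a pid is subject to pid reuse, which is one reason a pipe descriptor that reports hang-up can be a more robust monitor handle.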

The structure that is returned, arcan_shmif_cont, is the key reference for other operations that involve the shared memory interface. It contains aligned video buffer pointers, the currently negotiated dimensions and the usual convenience fields for tagging user-defined data. It reflects the client's view of the agreed-upon interpretation of the shared memory page.

On its own, the code above will not succeed in connecting, as there is nothing listening on the other end. A strong point in the engine design is that a principle of least surprise should be in place: as little as possible is assumed or determined in advance and, by default, no running appl listens for external connections.

The initial properties carry metadata that was provided by the running appl in the preroll stage (figuring out initial dimensions, sets of fonts to use etc.), an optimization that should reduce the need for renegotiations that would otherwise contribute to startup event 'in-rush' and latency.

The code snippet below shows a quick and dirty prologue for how connections are set up and managed from the scripting context of an appl:

```lua
-- 'local function' (rather than 'local chain_call = function') makes the
-- name visible inside its own body, so the handler can re-arm itself
local function chain_call()
	target_alloc("demo", function(source, status)
		print(status.kind);
		if (status.kind == "connected") then
			chain_call();
		end
	end);
end

chain_call(); -- open the first listening endpoint
```

This simply sets up a listening endpoint with the name demo. A listening endpoint is consumed when a client connects, so that the script is able to limit the number of connections (and associated video etc. resources) that are permitted; the first response to a connection is therefore to set up the listening endpoint again.

Here, source is like any other VID: it is a control handle both for sending messages and input, and for managing how the application is drawn. See the target_ set of functions for what that entails.

The chain of events is: connected (new connection established), then register (requested segment type is defined), then preroll (send initial hints), and later resized on the first delivered frame (and on any subsequent segment resizes).
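The ordering of these events can be expressed as a tiny state machine, which is handy when writing a test harness or a proxy that must reject out-of-order notifications. The state and event names are hypothetical labels for the stages listed above, not engine identifiers.

```c
#include <assert.h>

enum conn_state { ST_LISTEN, ST_CONNECTED, ST_REGISTERED, ST_PREROLL, ST_LIVE };
enum conn_event { EV_CONNECTED, EV_REGISTER, EV_PREROLL, EV_RESIZED };

/* Returns the next state, or -1 if the event arrives out of order. */
static int conn_step(enum conn_state s, enum conn_event e)
{
	switch (s){
	case ST_LISTEN:     return e == EV_CONNECTED ? ST_CONNECTED : -1;
	case ST_CONNECTED:  return e == EV_REGISTER ? ST_REGISTERED : -1;
	case ST_REGISTERED: return e == EV_PREROLL ? ST_PREROLL : -1;
	case ST_PREROLL:    /* first delivered frame ... */
	case ST_LIVE:       /* ... and any later resizes */
		return e == EV_RESIZED ? ST_LIVE : -1;
	}
	return -1;
}
```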

For a connection to go through, the process calling arcan_shmif_open needs the ARCAN_CONNPATH environment variable set to demo. This is comparable to the DISPLAY mechanism used in the X Window System. The rules for the underlying IPC primitives and the namespace for resolving demo are platform and build-time configuration dependent.

This approach allows us to have multiple listening endpoints, with different rendering options and UI behavior depending on which endpoint is being used, allowing load balancing while also supporting multiple display servers running simultaneously.

Let us look a little closer at the structures mentioned in the layout section, expanding on arcan_shmif_cont and the associated shared memory page.

The shmpage structure contains the shared information that is being negotiated ('disputed'), while shmif_cont contains the agreed-upon state and connection-management handles from the client side (on the arcan side, the structure in frameserver.h fills the same role).

The fields marked in blue (version tags, ID cookie) are calculated prior to mapping and are used for versioning and for detecting deviations in how compilers see the layout based on compilation parameters; any deviation here will have either side terminate the connection.

The fields marked in green (dead man's switch and pid) are primarily used by the optional guard threads as a means of detecting and recovering from errors. They are one-directional in the sense that the parent does not care about these values; they are only provided for the benefit of the client.
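A layout cookie of this kind can be derived at compile time from `sizeof` and `offsetof`, so that two builds which disagree about padding or field order are expected to produce different values. The FNV-1a folding, the two header revisions and the `LAYOUT_COOKIE` macro are illustrative assumptions, not the cookie computation shmif actually uses.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Fold one value into a running FNV-1a hash, byte by byte. */
static uint64_t cookie_mix(uint64_t h, uint64_t v)
{
	for (int i = 0; i < 8; i++){
		h ^= (v >> (i * 8)) & 0xff;
		h *= 0x100000001b3ULL;
	}
	return h;
}

/* Two hypothetical header revisions that differ only in field order. */
struct hdr_a { uint8_t major, minor; uint32_t segment_size; uint64_t cookie; };
struct hdr_b { uint8_t major, minor; uint64_t cookie; uint32_t segment_size; };

/* Hash the total size and the offsets both sides rely on. */
#define LAYOUT_COOKIE(T) \
	cookie_mix(cookie_mix(cookie_mix(cookie_mix(0xcbf29ce484222325ULL, \
		sizeof(T)), offsetof(T, minor)), offsetof(T, segment_size)), \
		offsetof(T, cookie))
```

Each side computes the value from its own view of the struct and writes or compares it before trusting anything else in the page.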

The fields marked in red (segment_size, vsize, flags) are bidirectional in that they are used for metadata communication and signaling (see Sharing Data and Renegotiation below).

The fields marked in black contain data, with two fields used for hinting (vpts in milliseconds relative to the last reset as epoch, and the coordinate system for video buffer transfers that may need specialized rendering).

The static-sized input/output queues are the two ring buffers (one for client to server, one for server to client) used as storage for event queues. The data model for the events themselves is legacy-ridden and subject to change, and is separated out into _arcan_shmif_event.h.

apending is a bitmask indicating which of the negotiated audio buffers have been consumed locally but not yet synchronized, with aready (-1) indicating which one is the current head buffer slot.

abufused indicates how much of each negotiated audio buffer slot has been used.

vpending and vready work in a similar way, but synchronization here will only consume the most recent slot (vready - 1) as a latency tradeoff.

The video buffer is dynamically sized and aligned on an implementation-defined boundary, typically matching DMA page alignment. Even if the underlying platform supports explicit sharing of GPU memory buffers, this buffer will still be available as an immediate fallback, should the parent start refusing GPU buffer handles due to driver failures, load balancing or a pending GPU suspend.

The code below simply fills the aligned video buffer with fully saturated red at full alpha; the SHMIF_RGBA macro is responsible for packing into whatever video buffer format is considered system-native.
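The audio side of this bookkeeping can be sketched as a producer that fills the current slot, records the fill level (the role abufused plays), and marks a slot pending (the role apending plays) once it is full. Slot count, slot size and all names are hypothetical, and where the real protocol would wait for the consumer, this sketch returns a short write instead.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define ABUF_SLOTS 4    /* hypothetical slot count */
#define ABUF_SAMPLES 64 /* hypothetical samples per slot */

struct audio {
	int16_t buf[ABUF_SLOTS][ABUF_SAMPLES];
	size_t used[ABUF_SLOTS]; /* samples written per slot */
	unsigned pending;        /* full slots awaiting the consumer */
	unsigned cur;            /* slot currently being filled */
};

/* Returns the number of samples accepted. */
static size_t audio_write(struct audio* a, const int16_t* src, size_t n)
{
	size_t done = 0;
	while (done < n){
		if (a->pending & (1u << a->cur))
			break; /* consumer has not released this slot yet */
		size_t room = ABUF_SAMPLES - a->used[a->cur];
		size_t take = n - done < room ? n - done : room;
		memcpy(&a->buf[a->cur][a->used[a->cur]], &src[done], take * sizeof *src);
		a->used[a->cur] += take;
		done += take;
		if (a->used[a->cur] == ABUF_SAMPLES){
			a->pending |= 1u << a->cur;
			a->cur = (a->cur + 1) % ABUF_SLOTS;
		}
	}
	return done;
}

/* Consumer side: hand a slot back once its contents have been played. */
static void audio_release(struct audio* a, unsigned slot)
{
	assert(slot < ABUF_SLOTS);
	a->used[slot] = 0;
	a->pending &= ~(1u << slot);
}
```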
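Sizing a segment with aligned regions comes down to rounding each offset up to the boundary. The header/video/audio ordering and the `plan` helper below are hypothetical; the actual page layout and alignment value are implementation-defined.

```c
#include <assert.h>
#include <stddef.h>

/* Round v up to the next multiple of a (a must be a power of two). */
static size_t align_up(size_t v, size_t a)
{
	assert(a && (a & (a - 1)) == 0);
	return (v + a - 1) & ~(a - 1);
}

/* Hypothetical layout: header, then video, then audio, each region
 * starting on an 'align' boundary. */
struct layout { size_t vofs, aofs, total; };

static struct layout plan(size_t hdr, size_t w, size_t h, size_t bpp,
	size_t audio_bytes, size_t align)
{
	struct layout l;
	l.vofs = align_up(hdr, align);
	l.aofs = align_up(l.vofs + w * h * bpp, align);
	l.total = align_up(l.aofs + audio_bytes, align);
	return l;
}
```

A resize then amounts to recomputing this plan and remapping the page if the total changed.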

```c
/* vidp points to packed pixels: pitch is the row length in pixels,
 * stride is the same row length in bytes */
for (size_t row = 0; row < cont.h; row++)
	for (size_t col = 0; col < cont.w; col++)
		cont.vidp[ row * cont.pitch + col ] = SHMIF_RGBA(0xff, 0, 0, 0xff);

arcan_shmif_signal(&cont, SHMIF_SIGVID);
```

The important bit is the call to arcan_shmif_signal, which will block the running thread until the transfer has been acknowledged by the server. There is also the option to signal an audio-only transfer (SHMIF_SIGAUD) or a combination (SHMIF_SIGVID | SHMIF_SIGAUD). During such a call, the behaviour of other operations (_resize etc.) is undefined, with the exception of the event queues if shmif has been built marked as thread-safe.
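Row addressing is where padded buffers matter. The standalone sketch below (no shmif involved) fills a plain buffer whose rows are `pitch` pixels apart, with `pack_rgba` as a hypothetical stand-in for SHMIF_RGBA; the byte order it uses is an assumption, since the real macro packs for whatever format is system-native.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* With this packing, a little-endian machine stores R, G, B, A in
 * increasing memory order. */
static uint32_t pack_rgba(uint8_t r, uint8_t g, uint8_t b, uint8_t a)
{
	return (uint32_t)a << 24 | (uint32_t)b << 16 | (uint32_t)g << 8 | r;
}

/* Fill a w x h area of a buffer whose rows are pitch pixels apart;
 * the (pitch - w) padding pixels at the end of each row are untouched. */
static void fill(uint32_t* px, size_t w, size_t h, size_t pitch, uint32_t val)
{
	assert(pitch >= w);
	for (size_t row = 0; row < h; row++)
		for (size_t col = 0; col < w; col++)
			px[row * pitch + col] = val;
}
```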

The blocking operation partly serves as a dynamic rate limit; it should not be used as a clocking mechanism for monotonic timekeeping or similar purposes.

Other signal synchronization modes include SHMIF_SIGBLK_FORCE (always wait for the parent to acknowledge, the default) and SHMIF_SIGBLK_NONE (return immediately; subsequent calls may result in tearing).

For more advanced platforms that support handle-based buffer passing, the video buffer is simply ignored and the signaling mechanism is replaced with calls to _arcan_shmif_signalhandle, taking care to look for TARGET_COMMAND_BUFFER_FAIL events as an indication that the parent no longer supports handle-based buffer passing.

The next code example shows a minimal (blocking) event loop:

```c
struct arcan_event ev;
bool running = true;

/* block until the next event arrives; a zero return ends the loop */
while (running && arcan_shmif_wait(&cont, &ev)){
	if (ev.category == EVENT_TARGET){
		switch (ev.tgt.kind){
		case TARGET_COMMAND_DISPLAYHINT:
			/* ioevs[0] and ioevs[1] carry the suggested width and height */
			arcan_shmif_resize(&cont, ev.tgt.ioevs[0].iv, ev.tgt.ioevs[1].iv);
		break;
		case TARGET_COMMAND_EXIT:
			running = false;
		break;
		default:
		break;
		}
	}
}
```

There are also corresponding polling and I/O-multiplexer-friendly versions. The full list of possible events consists of those in the subcategories (in: EVENT_TARGET, EVENT_IO; out: EVENT_EXTERNAL) present in the rather horrible shmif_event.h. Other categories are reserved for internal or frameserver-specific use.

It is possible for one connection to have additional segments that work much like a normal connection, the exception being that the corresponding resources are linked: if the primary segment is terminated, subsegments will be dropped in a cascade. Subsegments have many advanced uses, ranging from multiple windows to alternative data views (subtitle overlays, debugging information, popup menus, clipboard, custom mouse cursors, titlebars and so on). They are synchronized individually and can live in other threads or processes. The caveat is that each request has to be explicitly accepted by the running appl, and the default behavior is to reject subsegment requests to prevent denial-of-service, UI redressing and other problems. The following code illustrates requesting and mapping subsegments:

```c
arcan_shmif_enqueue(&cont, &(struct arcan_event){
	.ext.kind = ARCAN_EVENT(SEGREQ),
	.ext.segreq.width = 512,
	.ext.segreq.height = 512,
	.ext.segreq.kind = SEGID_SENSOR, /* the type/role we want */
	.ext.segreq.id = 0xcafe /* to help us find the response */
});

/* later, inside the event loop's switch on ev.tgt.kind: */
case TARGET_COMMAND_REQFAIL:
	/* note that our segment request failed */
break;

case TARGET_COMMAND_NEWSEGMENT:
	if (ev.tgt.ioevs[0].iv == 0xcafe)
		new_cont = arcan_shmif_acquire(&cont, NULL,
			SEGID_SENSOR, SHMIF_DISABLE_GUARD);
break;
```

We pass SHMIF_DISABLE_GUARD to indicate that we do not need / want a guard thread for this segment (as we already have one for the primary segment), and SEGID_SENSOR so that the segment identifies as a SENSOR (see event.h for the long list of subtypes).

Note that a parent can explicitly create and push segments without a previous request. This is used for things like clipboard paste, probing functionality (DEBUG, ACCESSIBILITY) and for PROVIDING data.

The last case is interesting, as such segments (OUTPUT/RECORD) are used for controlled display sharing / streaming. They have the same kind of use and operation, with the restriction that the resize class of functions is disabled and the number of audio/video buffers is locked to 1.

There is an extended support library to help with the setup boilerplate needed in order to activate accelerated graphics, like OpenGL or Vulkan. The following snippets show the ideal / trivial setup approach:

```c
#include <stdio.h>

struct arcan_shmif_cont con = arcan_shmif_open(SEGID_GAME,
	SHMIF_ACQUIRE_FATALFAIL, NULL);

struct arcan_shmifext_setup defs = arcan_shmifext_defaults(&con);
enum shmifext_setupstatus status = arcan_shmifext_setup(&con, defs);

if (status != SHMIFEXT_OK){
	printf("couldn't setup acceleration\n");
	return;
}

arcan_shmifext_make_current(&con);
glClearColor(0.5, 0.5, 0.5, 1.0);
glClear(GL_COLOR_BUFFER_BIT);
arcan_shmifext_signal(&con, 0, SHMIF_SIGVID, SHMIFEXT_BUILTIN);

arcan_shmifext_drop(&con); /* back to normal connection */
```

arcan_shmifext_defaults retrieves the initial settings preferred by the server at the other end of the connection. These can still be modified to tune API, version, buffer sizes and so on.

The advanced / extended data from the feature list at the top of the page is activated by an extended resize request, where a bitmask is filled out with the desired properties. This will result in a possible update of the apad, apad_type and adata fields to cover the extended data model that is now available, and in the reply mask being set in the public part of the context structure. The layout of this region is slightly more complicated, with a table of relative offsets and a series of substructures. Look into the arcan_shmif_sub.h/c files and tests/frameservers/gamma for examples of its use.
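The negotiate-then-resolve pattern (request mask, reply mask, table of relative offsets) can be sketched generically. The feature bits, `struct sub_table`, `negotiate` and `sub_lookup` are hypothetical and do not reflect the layout in arcan_shmif_sub.h.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical feature bits, one per extended data model. */
enum { SUB_GAMMA = 1u << 0, SUB_VR = 1u << 1, SUB_VECTOR = 1u << 2 };
#define SUB_COUNT 3

/* Hypothetical header at the start of the extended region: one offset
 * per feature, relative to the region start, 0 meaning "not mapped". */
struct sub_table { uint32_t offset[SUB_COUNT]; };

/* The reply mask can only contain what was both asked for and allowed. */
static unsigned negotiate(unsigned requested, unsigned supported)
{
	return requested & supported;
}

/* Resolve feature index i to a pointer inside the region, or NULL. */
static void* sub_lookup(uint8_t* region, unsigned granted, unsigned i)
{
	assert(region && i < SUB_COUNT);
	const struct sub_table* t = (const struct sub_table*)region;
	if (!(granted & (1u << i)) || !t->offset[i])
		return NULL;
	return region + t->offset[i];
}
```

Relative offsets (rather than pointers) keep the table valid in both processes even though each maps the page at a different address.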

A DEVICE_NODE event can carry either connection or device primitives in order to indicate a new suggested server to use, a different GPU to switch to or a connection path to use if the current one fails.

It is also possible to explicitly migrate away through arcan_shmif_migrate and explicitly specify a new server to switch to, with the downside of having to renegotiate subsegments and other privileges.

Although migration right now serves as a fallback mechanism for recovering from server crashes, the intermediate step is a proxy-shmif implementation that can translate to a line protocol and back, enabling remote connections and detach/attach behaviour.

The caveat is that this only applies for the primary segment, subsegments need to be renegotiated as the window management scheme in the new connection point may accept/reject different sets of subsegments.