
Add RFC: Virtual Camera #15


If we think about bigger infrastructures, admins or software would like to search for the camera name. It really helps to keep the machine name the same on all machines, no matter what language you're in.

Let's keep the name constant.
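To illustrate the point, here is a minimal Python sketch. It assumes a hypothetical split between a fixed device name, which other software and admin scripts search for, and a translated label used only inside OBS's own UI. The name "OBS Virtual Camera" and the label table are illustrative assumptions, not settled values.

```python
from typing import List, Optional

# Hypothetical fixed name the device registers with; never translated.
DEVICE_NAME = "OBS Virtual Camera"

# Hypothetical translations, used only for labels inside OBS's own UI.
UI_LABELS = {
    "en": "Start Virtual Camera",
    "de": "Virtuelle Kamera starten",
}


def device_name(locale: str) -> str:
    """Name visible to other applications; the locale is deliberately ignored."""
    return DEVICE_NAME


def ui_label(locale: str) -> str:
    """Localized button label, falling back to English."""
    return UI_LABELS.get(locale, UI_LABELS["en"])


def find_camera(device_names: List[str]) -> Optional[int]:
    """Index of the OBS camera in a device list, found by its fixed name."""
    try:
        return device_names.index(DEVICE_NAME)
    except ValueError:
        return None
```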


I have commented on this in another issue, but was told this is the proper place for this discussion.

This point right here: OBS outputting a generic RTMP stream, just as it currently does, and someone writing a generic virtual camera that accepts an RTMP stream, is a better solution due to the sheer amount of flexibility this provides. Pros:

Cons:

I think the pros outweigh the cons: a slight inefficiency from using the standardized RTMP protocol is well worth all the flexibility and interoperability of the virtual camera being just another RTMP server.


This RFC is explicitly for an OBS-specific implementation of output from OBS to a virtual camera. A generic middleware solution to this problem is out of scope of what this RFC is for. Additionally, RTMP would be an extremely poor choice of protocol for such an application, as the latency would be too extreme for usage in instances of live video chat, which is a primary use case for this feature.

A discussion of a virtual camera implementation is out of scope (would it be discussed somewhere else?), and only a discussion of how OBS would output audio/video data to an unknown virtual camera is on topic? Fair enough, I must have misunderstood the RFC. It is a bit surprising to have an RFC for just one half of the job required to make OBS-composed video appear in videoconferencing applications like Skype and Zoom, but I can see "Add the ability to render output to a virtual camera device on Linux, Mac, and Windows" being interpreted that way.

Of course, a protocol more suitable for low latency must be used. I only mentioned RTMP because that's what OBS uses to live stream to Twitch and YouTube. Anyway, I will follow this conversation and try to find where the virtual camera details (the other half required to make this all work) are being discussed. This is quite an interesting topic!


Alright, based on prior discussions in the OBS Community Discord, the bounty Issue in the main repo, and my own limited understanding of the work involved, I've added my own comments. Feel free to expand the wording of the RFC based on what I've provided. Happy to answer any questions.

# Detailed design

- TODO - I don't know anything about OBS internals and so don't know how to design this.

- Platform specific device driver?


Inserting quote from Jim:

My biggest requirement will be on Windows: this specifically means utilizing the libdshowcapture library (which probably should have just been named "libdshow" at this point, but just ignore the name). libdshowcapture has a ton of DirectShow code that should be utilized to implement a DirectShow output. This is the primary reason why I have not simply merged other people's plugins into our repository. It should be implemented as part of the win-dshow plugin, and as part of the libdshowcapture library. The libdshowcapture library is probably the safest way possible to implement DirectShow code on Windows. It also helps ensure the code is clean, as DirectShow code is otherwise some of the most unclean code on the planet. There are also things like registering the DirectShow filter on Windows; that will require the installer and the auto-updater to be updated accordingly.

On macOS and Linux, things are probably a bit more flexible because the code is a bit more isolated there and it's less feasible to share code, but ideally it would be nice to prevent any unnecessary code duplication there if possible as well.

In summary:

- On Windows, modify libdshowcapture and the win-dshow plugin included in OBS.

* [The Snap Camera](https://snapcamera.snapchat.com) is an example of an application that consumes input from a hardware webcam, processes the video stream, and outputs it in real time as a virtual device that appears in apps like Skype, Hangouts, or Zoom. It supports both Mac & PC.


# Detailed design

- TODO - I don't know anything about OBS internals and so don't know how to design this.


API reference for OBS outputs (which this would have to be) https://obsproject.com/docs/plugins.html#outputs
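The linked reference describes an output as a set of callbacks that libobs invokes (among them create, start, stop, and a raw video callback). The Python below is only a toy model of that lifecycle, to show the order of calls. It is not libobs: the class and function names are hypothetical, and a real plugin would implement these as C callbacks in an `obs_output_info` struct.

```python
from typing import Iterable, List


class ToyVirtualCamOutput:
    """Toy model of an output's lifecycle; not the libobs API."""

    def __init__(self, width: int, height: int) -> None:
        self.width = width
        self.height = height
        self.active = False
        self.delivered: List[bytes] = []

    def start(self) -> bool:
        # A real output would open the virtual device or IPC channel here
        # and report failure by returning False.
        self.active = True
        return True

    def raw_video(self, frame: bytes) -> None:
        # Called once per rendered frame; ignored unless the output is running.
        if self.active:
            self.delivered.append(frame)

    def stop(self) -> None:
        # A real output would release the device here.
        self.active = False


def drive(output: ToyVirtualCamOutput, frames: Iterable[bytes]) -> int:
    """Play the core's role: start, push each frame, stop; return frames delivered."""
    if not output.start():
        return 0
    for frame in frames:
        output.raw_video(frame)
    output.stop()
    return len(output.delivered)
```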

- Platform specific device driver?


## Technical considerations


* Extend Mac & Linux OBS via the plugin interface, à la the Windows-only OBS virtual camera plugin. Clunkier, and harder to use especially for novice users, but doesn't add as much complexity to base OBS.


* Pursue a more generic output interface and (encourage someone else to?) build a generic virtual camera to consume it. E.g. output via RTSP or NDI and have an "NDI Cam" that has nothing to do with OBS.


Note: all these alternatives require more effort for the user to know what needs to be done. Keep in mind, the goal is to make it easy for anyone to install OBS, and start using a virtual camera.


I wouldn’t consider NDI viable: it’s very, very picky to get working reliably for long streams, and older hardware (e.g. first-gen trash can Macs) has trouble with high resolutions and frame rates. On long streams, AV sync isn’t an issue I’ve been able to resolve fully.

Additionally, if NDI were built in, the licenses of NDI’s libraries may be an issue.

- TODO


## User UX


Initial configuration should require very little input from the user, if any. Resolution and framerate of the camera will be pre-defined by the Video settings of OBS, and no effects outside those already provided by OBS will be specially exposed for this output.
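As a rough illustration of "pre-defined by the Video settings", this sketch derives the camera format from the OBS output resolution and FPS fraction and computes the raw frame size. The `VideoSettings` fields and the pixel formats offered (NV12/I420 at 12 bits per pixel, YUY2 at 16) are assumptions for the example.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class VideoSettings:
    # Hypothetical mirror of the values in OBS's Settings > Video.
    output_width: int
    output_height: int
    fps_num: int
    fps_den: int


def frame_bytes(width: int, height: int, fmt: str) -> int:
    """Bytes in one uncompressed frame (even width and height assumed)."""
    if fmt in ("NV12", "I420"):
        # 4:2:0: full-resolution luma plus chroma subsampled 2x in both directions.
        return width * height * 3 // 2
    if fmt == "YUY2":
        # 4:2:2 packed: two bytes per pixel.
        return width * height * 2
    raise ValueError(f"unsupported format: {fmt}")


def camera_format(settings: VideoSettings, fmt: str = "NV12") -> dict:
    """The camera mirrors OBS's output; nothing here is user-configurable."""
    fps = Fraction(settings.fps_num, settings.fps_den)
    size = frame_bytes(settings.output_width, settings.output_height, fmt)
    return {
        "width": settings.output_width,
        "height": settings.output_height,
        "fps": fps,
        "format": fmt,
        "frame_bytes": size,
        "bytes_per_second": size * fps,
    }
```

For 1280x720 at 30 fps in NV12 this gives 1,382,400 bytes per frame, or 41,472,000 bytes (about 41.5 MB) of uncompressed video per second.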


One of the things I don't like about the obs-virtualcam plugin is that, in the installation, it asks how many virtual cameras you want to create. While this is a neat feature for someone who understands what they are doing, I think it's too complicated for generalized OBS use. I think it's perfectly acceptable to assume a single camera output from OBS that outputs whatever is going to PGM. The only setting I really could see being useful would be a checkbox to auto-start the output on OBS launch (though even that might be bad, since having an active output can confusingly lock output settings).
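A minimal sketch of how small that settings surface could be. It assumes a hypothetical `VirtualCamSettings` object with a single auto-start flag (off by default), plus a check reflecting the caveat that output settings are locked while an output is active. The `"virtualcam"` key is an illustrative name.

```python
from dataclasses import dataclass
from typing import Callable, Set


@dataclass
class VirtualCamSettings:
    # Hypothetical: the only user-facing option, off by default.
    auto_start: bool = False


def on_obs_launch(settings: VirtualCamSettings, active_outputs: Set[str],
                  start_output: Callable[[], bool]) -> None:
    """Start the single virtual camera at launch only if the user opted in."""
    if settings.auto_start and start_output():
        active_outputs.add("virtualcam")


def can_change_output_settings(active_outputs: Set[str]) -> bool:
    """Reflects the caveat above: output settings are locked while any output is active."""
    return not active_outputs
```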


# Drawbacks


Specializing output to "virtual camera" is more maintenance load for OBS team, more complexity, and presumably, 3x complexity as the approach to create a virtual device probably involves a fair amount of platform-specific driver code. It's a very important case of video streaming with many millions of users, but it's still something of a special case.


On the contrary, this is a logical progression of the standard requirements and expectations of OBS as a compositor - you create the scene, and you output it wherever you need it. While RTMP & recordings were the original outputs in OBS, it has since expanded to FTL (for Mixer.com) and SRT, with third parties also expanding to NDI and similar.

Platform-specific driver code, however, is definitely a drawback, and would require maintenance, as operating systems (especially in more recent times) tend to restrict access to AV hardware, or require user permission to capture from or provide AV devices. This will especially mean more testing/debugging each time Windows, macOS, and officially supported Linux flavours (currently Ubuntu) receive a major update.

OBS is a powerful set of tools to manipulate live video streams that natively supports output to popular streaming services as well as rendering to a local video file. There are a huge number of people who engage in 1:1 or small-scale streaming using video conferencing software like Zoom or Google Hangouts. Many of these people have similar needs to those of traditional streamers, but for one reason or another cannot switch the video conferencing software they use (social graph, corporate policy). One way to deliver OBS output to these applications is to provide a virtual camera in OBS.


Similar functionality:

* OBS can be extended by the [OBS-VirtualCam plugin](https://obsproject.com/forum/resources/obs-virtualcam.539/). Currently, only Windows is supported.


Note: a relatively similar plugin is also available for Linux, called obs-v4l2sink, made by the same developer. Note, however, that it requires another component, like v4l2loopback, to actually expose the output to other applications on the system. No such plugin is currently available on macOS.
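On Linux the loopback device is a regular V4L2 node, so the earlier point about searching for the camera by name applies directly: the kernel exposes each V4L2 device's name in `/sys/class/video4linux/<device>/name`. A small sketch of that lookup, assuming the label "OBS Virtual Camera" (v4l2loopback accepts a custom label through its `card_label` module parameter). The `sysfs_root` argument exists only so the function can be tested.

```python
import os
from typing import List


def find_video_devices(label: str, sysfs_root: str = "/sys/class/video4linux") -> List[str]:
    """Return /dev paths of V4L2 devices whose sysfs 'name' equals label."""
    matches: List[str] = []
    if not os.path.isdir(sysfs_root):
        return matches  # no V4L2 subsystem (e.g. not Linux)
    for entry in sorted(os.listdir(sysfs_root)):
        name_file = os.path.join(sysfs_root, entry, "name")
        try:
            with open(name_file, encoding="utf-8") as f:
                if f.read().strip() == label:
                    matches.append("/dev/" + entry)
        except OSError:
            continue  # entry without a readable name file
    return matches
```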

You misunderstand. The issue being taken with the alternative mentioned in jebjeb's RFC draft and with your proposed solution, is that the RFC process is for features or changes to be made to OBS. Writing a generic dedicated program to consume a protocol and output a virtual camera would not be an OBS feature, merely something it could work with. We're asking that the discussion be kept to how virtual cameras can be implemented in OBS. Other people are more than welcome to make a program that does what is being described (although I see a number of issues with it I won't get into), but this isn't the place to discuss that, therefore it is out of scope.


Related question: Would this also require having a virtual audio output of the master mix from OBS as well that can be selected as a microphone?

If simplicity is the goal, then yes. Having the master audio output of OBS be the audio 'source' of the VCam output would be the easiest and most logical choice. If one wanted to add complexity, then being able to explicitly select the audio source from a list of currently available sources in OBS (in addition to the master audio out of OBS and maybe a 'no audio' option, with the Master Out being the default) would provide some flexibility without making things too egregious. On the other hand, by not including audio from OBS in the output, one is now relying on the receiving software for that function. Since OBS is much better equipped on this front (and all the APIs we're talking about on each platform support audio on such devices), it would provide a better experience for the end user if audio were included in the VCam feed/source. If you're asking about having just a virtual audio output (or rather a device/endpoint that other programs can use), I'm not sure. I think that would require a different set of APIs, and if that's the case, it should be its own RFC/request/issue.
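The selection logic described above might look like this sketch. The `"master"` and `"none"` identifiers are hypothetical, and falling back to the master mix when a chosen source no longer exists is an assumption, not something proposed in the thread.

```python
from typing import List, Optional

MASTER_MIX = "master"  # hypothetical id for OBS's master audio output
NO_AUDIO = "none"      # hypothetical id for "send no audio"


def resolve_audio_source(choice: Optional[str], available: List[str]) -> Optional[str]:
    """Pick the audio that goes into the virtual camera feed.

    Returns None when no audio should be sent, leaving audio to the
    receiving application (e.g. the user's own microphone).
    """
    if choice is None:
        return MASTER_MIX  # default: the master mix
    if choice == NO_AUDIO:
        return None
    if choice == MASTER_MIX or choice in available:
        return choice
    return MASTER_MIX      # assumed fallback if the chosen source was removed
```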


@dodgepong I think that could even be a separate RFC


I've made a proof of concept on mac of this just to find out what's possible. And it works! Here it is sending OBS to Google Hangouts in Chrome: I basically just shoehorned part of lvsti/CoreMediaIO-DAL-Example into OBS (after making a few fixes). To create a virtual camera on OSX you need a Next you need some process running that can serve frames to the plugin. In my proof of concept I opted to vend frames directly from OBS. This is based on the Vending frames directly from OBS may not be ideal in the real implementation. In its current state, if OBS is closed when you open Chrome, then Chrome does not recognize the virtual webcam (same goes for QuickTime, etc -- it seems that many apps resolve available sources at startup and then do not re-evaluate ones that did not successfully start). I see two (maybe three) ways around this:

My next step is to bring the CoreMedia plugin code into my branch and get it working with cmake so that this implementation isn't split between two repos.
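For readers unfamiliar with "vending" frames from one process to another, here is a minimal Python model of the idea. It deliberately swaps in a local socket pair and a hypothetical length-prefixed header (width, height, timestamp, payload size). It does not reproduce the IPC used by lvsti/CoreMediaIO-DAL-Example or by the proof of concept.

```python
import socket
import struct

# Hypothetical header: width, height (uint32), timestamp in ns (uint64), payload size (uint32).
HEADER = struct.Struct("!IIQI")


def send_frame(sock: socket.socket, width: int, height: int, ts_ns: int, payload: bytes) -> None:
    """Producer side (OBS): header followed by the raw frame bytes."""
    sock.sendall(HEADER.pack(width, height, ts_ns, len(payload)) + payload)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, since recv() may return fewer."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf += chunk
    return bytes(buf)


def recv_frame(sock: socket.socket):
    """Consumer side (camera plugin): returns (width, height, ts_ns, payload)."""
    width, height, ts_ns, size = HEADER.unpack(_recv_exact(sock, HEADER.size))
    return width, height, ts_ns, _recv_exact(sock, size)
```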

I would vote this be a separate RFC, even if it's to extend the virtual camera output with audio. As long as we design this RFC with the expectation/plan to expand with audio in the future, that should be fine. For most use cases (I'd hope), the user's mic should be plenty of audio to go with the virtual camera.

This is what CamTwist does on OS X, and it's really quite simple in practice (no idea about the implementation). When the application isn't running, you can still select it as a video source, but all consumers get is a static test pattern (SMPTE color bars with a superimposed logo).


@Gavitron do you know if CamTwist runs a background process in addition to the plugin?


@johnboiles I don't think you need an assistant process. In the plugin, I think you should be able to create a device that keeps outputting while waiting for another process to connect, or to signal through MIG that there is input available. We should be able to change this method to do so: We can discuss this 1:1 on Discord if you want. I can have a working POC (or at least try to have one).


I was able to get the cmake build for the plugin working. If you want to try it out, it's easier to build/install now that everything's in one place: Now you should be able to open an app that uses the camera (I've been using QuickTime) and find the

@lsamayoa I tried briefly to tweak that method to not fail if the assistant process was unreachable, but it wasn't as simple as that: there were a few places the assistant was referenced that were failing. Probably need to read the code more and understand where we might need to add logic to try reconnecting at the right time if the IPC server is not available.

AFAIK, it does not. There is a device present, which serves a static image (BMP? PNG?) until the process starts.
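The CamTwist-style behaviour described above (the device always exists and shows a static image until the producing app connects) can be modelled roughly as follows. `VirtualDevice` and the byte-string frames are hypothetical stand-ins; a real DAL plugin would do this inside its stream callbacks, and retrying the connection on every frame is a simplification of the reconnect logic discussed above.

```python
from typing import Callable, Optional

Producer = Callable[[], Optional[bytes]]  # returns the next frame, or None if OBS went away


class VirtualDevice:
    """Hypothetical device that stays visible whether or not OBS is running."""

    def __init__(self, placeholder: bytes, connect: Callable[[], Optional[Producer]]) -> None:
        self.placeholder = placeholder  # e.g. pre-rendered colour bars
        self._connect = connect          # tries to reach OBS; None if it is not running
        self._producer: Optional[Producer] = None

    def next_frame(self) -> bytes:
        if self._producer is None:
            self._producer = self._connect()  # simplification: retry on every frame
        if self._producer is not None:
            frame = self._producer()
            if frame is not None:
                return frame
            self._producer = None  # OBS disconnected; reconnect on a later frame
        return self.placeholder
```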


@johnboiles Wow, awesome! I followed your instructions. Everything compiles


@signalwerk The camera should start automatically when OBS starts. My typical testing flow has been:

However, it stopped working for me this afternoon (and throwing code signing errors in the console). The same binaries that used to work no longer work so I think there must be something that changed in my system configuration and/or my locally signed version of my plugin expired. Could you take a look in Console.app and see if you're getting any errors that mention


@johnboiles I'm sorry, the virtual device is not showing up. Here is the console log from startup: If you want to do a screen share to see what's going on, let me know. You can find my email on my GitHub profile, or reach me on Twitter at @sgnlwrk. I'm in Europe (GMT+2), so I'll be around for another 40 minutes, or again in about 7 hours.


So, to divert a bit from the Mac technical implementation details and back to something like UX:

Starting and Stopping the Virtual Cam

Right now the RFC proposes having a "Start Virtual Camera" button above the "Studio Mode" button. In the core app, most outputs are started from this menu, but we also have the Decklink output, which is started from a window from the Tools menu. The @CatxFish obs-virtualcam plugin also adds the start/stop button and options to the Tools menu, as does the NDI plugin.

Essentially, we have two places where one might put output start/stop: the control dock, or the Tools menu. The control dock increases visibility, but is maybe not a future-proof place to put more output start/stop toggles as we add more output types, or perhaps allow multiple simultaneous RTMP streams or local recordings. On the other hand, while the Tools menu is more flexible for holding more outputs, it's not very discoverable ("Tools" is maybe a bad menu for this) and it adds extra clicks to start/stop. Revamping the entire outputs UI is outside the scope of this PR, but I would be interested to hear people's thoughts on which way it should go for now.

Settings

My ideal plugin would have as few settings as needed:

The only setting I could see being necessary would be a toggle for whether or not the output should be started automatically with OBS. The main reason an auto-start would be bad right now is that you can't change output settings when an output is active. I'm not sure if there might be a way around this, but perhaps that's a question for @jp9000. The other reason is that there may be a non-zero amount of extra resource usage associated with an always-on virtualcam output that users might not want to have. Are there any other settings I'm not thinking of? If so, how could we simplify them such that users won't have to bother with them?


@signalwerk huh it doesn't look like it built the


I can completely confirm that the latest commit of @johnboiles (see description above) compiles and outputs 720x480 (hardcoded) to a virtual camera. 🥳 This is a great start!


Great progress. Thanks for kicking the RFC off. The discussion is all on point. One thing I'd like to spec is that the virtual camera outputs video as well as audio. The current Windows plugin does not do this yet.


@tobi I think you should open a different RFC for virtual audio output. There is a lot of work involved with just video. Even though @johnboiles's work has moved it forward a lot on the OSX side, there are still lots of issues to fix with that implementation. And that is just for OSX.


I agree that virtual audio output should be a separate RFC. While virtual audio and virtual video are related features conceptually, they involve almost completely separate areas of the code, and present completely different UX and engineering problems.


Agreed, the vast majority of users are not going to need multiple virtual cameras. However, if we wanted to do this, I think the graceful way would be to expose multiple virtual cameras only when multiple instances of OBS are open. If only one instance of OBS is open (as will be the case the vast majority of the time), only one virtual camera would be visible. If multiple instances of OBS are open, we could expose additional virtual cameras. I'm pretty sure this is possible (at least on macOS; not sure about Linux/Windows), so I think this could be done without adding much user-facing complexity. But I don't think it's useful to very many users, and thus it is extremely low priority.
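A sketch of the "extra cameras only appear with extra instances" idea, assuming each running OBS instance claims the lowest free slot and the first slot keeps the plain device name. The slot scheme and the numbered names are hypothetical.

```python
from typing import List, Set

BASE_NAME = "OBS Virtual Camera"  # hypothetical device name


def claim_slot(taken: Set[int]) -> int:
    """A newly launched instance takes the lowest free slot; the first gets 0."""
    slot = 0
    while slot in taken:
        slot += 1
    taken.add(slot)
    return slot


def visible_cameras(taken: Set[int]) -> List[str]:
    """One device per running instance; with a single instance, only one camera is exposed."""
    return [BASE_NAME if s == 0 else f"{BASE_NAME} {s + 1}" for s in sorted(taken)]
```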

That isn't the use case, though. The use case is to send different sources/scenes to different virtual camera outputs from a single OBS instance. That is a useful thing to do, to be sure, but it is completely unnecessary for a first iteration. |

Nothing quite so permanent as a temporary implementation. That said, users who DO need multiple camera outputs can continue using catxfish's plugin in the meantime, assuming this wouldn't conflict. |

To be quite frank, the reality is that I have a hard time ever seeing us adding more than one virtual camera output. I think the added featureset does not justify the added complexity for the general use case. And yes, people can continue to use the existing plugin if they wish.


@johnboiles Thank you for the instructions. However, I could not compile. I receive many fatal errors on High Sierra 10.13.6, like: I have no idea how to prevent this. Any suggestions?


@StefanJordan if you're talking about the plugin by johnboiles, please write issues there.


🎉 🎉 🎉 🎉 🎉 🎉


Looks like the "related issue" link might not be correct:


Yes, you're correct, I'll update it.


That is what this RFC is effectively going to provide (though not through stream settings, it would be its own output).


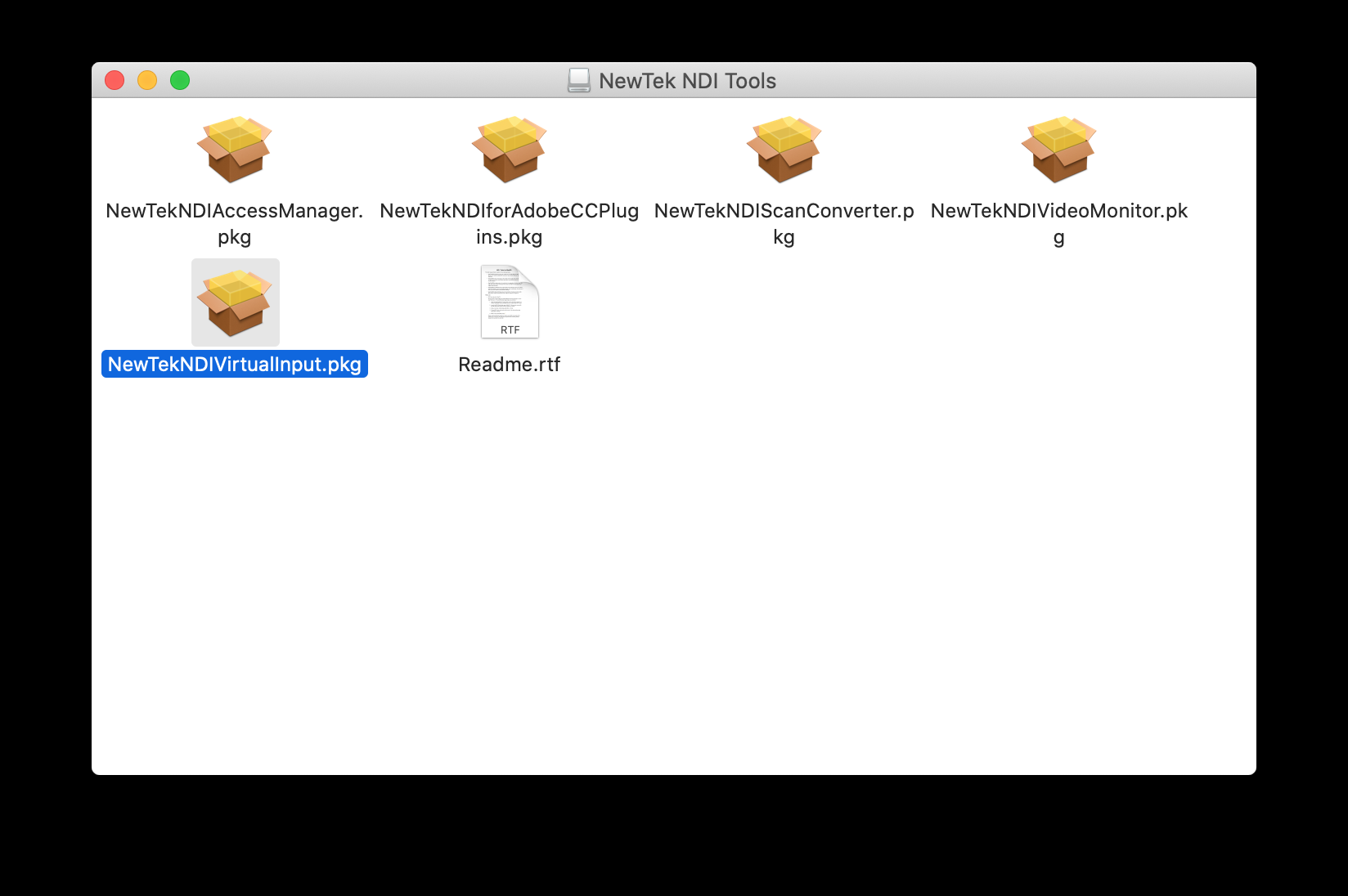

NDI Virtual Input for Mac is now available from NewTek (not an official source of information)


I can confirm that using the NDI OBS plugin to export the stream along with NDI Virtual Input as a virtual camera works fine on macOS as a workaround until the native virtual camera is working.


Can also confirm that the new NDI Virtual Input works fine in both OBS and Zoom. So at least we've got another option.


I can't get the NDI Virtual Input to work. Any idea when this RFC: Virtual Camera will be released?


It's an RFC, not an implementation. People are working on implementation right now, but there is not an ETA. I understand that people want a solution to this problem in OBS as soon as possible but posting about it here is not really appropriate. Please keep discussion confined to the actual contents of the RFC. Posts regarding the NDI Virtual Input aren't really relevant to this specific conversation.


Apologies if OT but a lot of people are following this issue hoping for a solution. This OBS Mac Virtual Camera plugin works for me: Installation guide: Amazing work by everyone involved! This team is an inspiration to other open source projects. Folks, please consider donating $ to support them if their product has helped you.


We're starting to track what will be required to make my plugin compliant with this RFC here: johnboiles/obs-mac-virtualcam#105

## Platform specific implementations


* On **Windows**, the implementation of this plugin should leverage [libdshowcapture](https://github.com/obsproject/libdshowcapture). OBS already depends on libdshowcapture, so using this existing library limits the need to add additional dependencies.

* On **macOS**, OBS will need a plugin to output over IPC to a CoreMediaIO DAL plugin that is registered on the system upon OBS install. This will require repackaging the installer as a `.pkg` instead of a compressed `.app`.


As an alternative to this, when the user first starts the virtual camera, we could potentially prompt to install the DAL plugin at that time. We'd need to show a password prompt to be able to have access to write to /Library.


How is that better than installing it at OBS install time? It's already elevated at that point and cuts out an extra step.


On macOS, since OBS distributes a .app bundle inside a .dmg image, we don't have elevated privileges at install time currently. When the user first opens OBS, macOS prompts for permission for things that require elevated approval/privileges (e.g. Microphone, Camera, etc). A common pattern I've seen recently for apps that need additional files installed is that they distribute as a .app but have menu actions to install things with elevated privileges. This allows the end user to decide to what extent they want to give an app access to their system.

For me, I'm generally suspicious when apps require a .pkg installer, since it's harder to know exactly what they're installing on my system. I try not to install apps via .pkg if I can help it. .pkg installers can install sneaky things that break security, like Zoom did. Maybe others feel similarly, or maybe they don't care 🤷

But it probably doesn't really matter either way. I bet OBS has enough notoriety that it won't really affect how people perceive it. And if we believe that the virtual camera is going to be used by the majority of OBS users, then a .pkg is definitely the right way to go.


A common pattern I've seen recently for apps that need additional files installed is that they distribute as a .app but have menu actions to install things with elevated privileges.

IMO this is absolutely miserable UX. I'm fairly confident that the wide majority of people will absolutely not care if it's a .pkg or an .app, but they will care if it works out of the box or not.

The Mac build of OBS was distributed as a .pkg for years with no objections, and it was only switched to a .app with version 24.0.0 because it didn't need to be a .pkg. Shipping a .pkg would just be going back to the way things used to be, except now it's properly justified.

Finally, unlike Zoom, OBS is an open source application that anybody can inspect, down to the packaging scripts. If people were concerned, they can inspect the source code and the packaging scripts themselves.

I appreciate the concern on this issue, but as a matter of practicality I don't think we will have anything to worry about vis-à-vis public perception.


IMO this is absolutely miserable UX.

100% agree. But it's miserable UX in the name of security, which is basically the whole story of macOS Catalina 😂


The issue there is that we don't really have much onboarding to speak of for Mac, other than the one-time prompt to run the auto-config, so that would have to be developed almost from scratch.

For what it's worth, we really should already have an onboarding flow for Mac to do things like request permission to capture the microphone/screen/camera.


Agreed, what I tried to get at was that the menu bar item would be needed anyway and thus would still be a necessary implementation detail.


Ah, fair point then. Do you think an entry in the Tools menu is appropriate for this? Or do you have any other ideas of where it would best go?


Yeah, "Tools" seems to be the current home for all additional functionality and plugins. The simplest implementation might be to have the current single item do the additional checks and installation of the DAL plugin, and keep the "Enable" state if it fails or the user cancels the process.

There might be more ways to make this menu item more verbose about state of the plugin, but I have no idea if it's feasible using Qt.
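The single-menu-item flow suggested above can be expressed as a small function. This is a hypothetical sketch: `is_installed`, `install`, `start`, and `stop` stand in for the real DAL plugin check, the privileged copy, and starting/stopping the output, and the returned string is the label the item shows next.

```python
from typing import Callable


def on_menu_clicked(label: str,
                    is_installed: Callable[[], bool],
                    install: Callable[[], bool],
                    start: Callable[[], bool],
                    stop: Callable[[], None]) -> str:
    """Handle a click on the single Tools menu item; return its next label."""
    if label == "Disable":
        stop()
        return "Enable"
    if not is_installed() and not install():
        return "Enable"  # install failed or the user cancelled: keep the "Enable" state
    return "Disable" if start() else "Enable"
```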


@johnboiles I've been working on code for an onboarding flow and also investigated how to install/update the DAL plugin at runtime without the need for an installer with elevated privileges.

The short story is that it's possible with a little "hack", as OBS is not a sandboxed app and thus the usual ways to get elevated write permissions for the target folders do not work (e.g. showing a file browsing dialog defaulting to the target dir, which would grant write permissions for file operations).

The only way to make this work is to invoke an AppleScript that runs a cp -R with elevated privileges. It does work without issue though.

Now the pickle: We could have this code run as part of the plugin when it's being activated, then remove it later once we have a proper macOS onboarding flow. It would allow release of this plugin earlier, but introduces a small amount of maintenance later.

This in essence does the deed:

When OBS is run as a bundled app, the permission dialog will look "proper". Alas, there is no way to add an explanation of what OBS needs it for, but I hope that triggering this through the menu item will make it clear (excuse the German-ness of it):

The DAL plugin is copied dutifully to where it should be and I checked that e.g. Quicktime picked up the virtual cam output directly after copying over the plugin (no restarts of OBS or the system needed).
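The snippet mentioned above is not preserved here. As an illustration only, this sketch builds the kind of command described: AppleScript's `do shell script ... with administrator privileges` run through `osascript -e`, copying a plugin bundle with `cp -R` into `/Library/CoreMediaIO/Plug-Ins/DAL/`, where CoreMediaIO looks for DAL plugins. The bundle path used in testing is a hypothetical placeholder, and the function only builds the argument list; it does not run anything.

```python
from typing import List

DAL_DIR = "/Library/CoreMediaIO/Plug-Ins/DAL/"


def applescript_literal(s: str) -> str:
    """Quote a Python string as an AppleScript string literal."""
    return '"' + s.replace("\\", "\\\\").replace('"', '\\"') + '"'


def build_install_command(plugin_bundle: str, dest_dir: str = DAL_DIR) -> List[str]:
    """argv that copies a DAL plugin behind the admin password prompt (not executed here)."""
    script = (
        'do shell script "cp -R " & quoted form of ' + applescript_literal(plugin_bundle)
        + ' & " " & quoted form of ' + applescript_literal(dest_dir)
        + " with administrator privileges"
    )
    return ["osascript", "-e", script]
```

On macOS, passing the result to `subprocess.run(..., check=True)` would show the standard administrator password prompt before copying.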


So far everything seems to be working fantastically on Windows, but it seems the feature to autostart a virtual camera when OBS launches was left out. I don't know if it's absent because it was forgotten about, or because there's fear for a crash loop if something goes wrong, but this feature will be sorely missed.


Hey @Technetium1 , better post issues regarding the implementation here: https://github.com/obsproject/obs-studio/milestone/1


For the record: since Virtual Camera support finally landed in the official release in version 26.1, I will keep my promise made in March 2020 and donate the other 50% of my bounty ($200 in total for the Mac version) to @johnboiles. (Sorry to bother people with this message, but I just wanted to make sure there is a record, and I hope all the other bounty donors also found a good way to pay the community.)


Summary

Beginning of an RFC to add a Virtual Camera output to OBS

Motivation

OBS is a powerful set of tools to manipulate live video streams that natively supports output to popular streaming services as well as rendering to a local video file. There are a huge number of people who engage in 1:1 or small-scale streaming using video conferencing software like Zoom or Google Hangouts. Many of these people have similar needs to those of traditional streamers, but for one reason or another cannot switch the video conferencing software they use (social graph, corporate policy). One way to deliver OBS output to these applications is to provide a virtual camera in OBS.

Similar functionality:
