MusicHackLab

The MiningSuite requires Matlab 7.6 (r2008a) or newer. However, version 8.2 (r2013b) or newer is strongly recommended, because the MiningSuite crashes easily on earlier versions. The MiningSuite also requires the Signal Processing Toolbox, one of Matlab's optional toolboxes, to be properly installed. Some operators can adapt to the absence of this toolbox, but their results are then more or less reliable. For serious use of the MiningSuite, we strongly recommend a proper installation of the Signal Processing Toolbox.
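The requirements above can be checked from the Matlab command line before installing anything. The following is a minimal sketch that uses only standard Matlab functions (verLessThan and ver); it is not part of the MiningSuite, and the variable name hasSignalToolbox is chosen here for illustration.

```matlab
% Check the Matlab release against the requirements stated above.
if verLessThan('matlab', '7.6')
    error('The MiningSuite requires Matlab 7.6 (r2008a) or newer.');
elseif verLessThan('matlab', '8.2')
    warning('Matlab 8.2 (r2013b) or newer is strongly recommended.');
end

% ver('signal') returns an empty struct when the toolbox is not installed.
hasSignalToolbox = ~isempty(ver('signal'));
if ~hasSignalToolbox
    warning('Signal Processing Toolbox not found; some results may be unreliable.');
end
```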

If you are a student or an employee at the University of Oslo, you can directly access a suitable version of Matlab from your browser.

Download the latest version of the MiningSuite from the GitHub repository.

To use the toolbox, simply add the downloaded folder miningsuite-master to your Matlab path. (Here is how to do that)

The list of operators available in the MiningSuite, stored in file Contents.m, can also be displayed by typing help miningsuite-master in Matlab.

If you prefer, you can rename the downloaded folder miningsuite-master to simply miningsuite. (Don't forget to update the path in Matlab.) That way, you can simply type help miningsuite instead.
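As a minimal sketch, the folder can also be added to the path from the command line with the standard addpath function. The location used below (a folder named miningsuite-master inside the current directory) is only an assumption; adjust it to wherever you extracted the download.

```matlab
% Hypothetical location of the downloaded folder; adjust to your system.
folder = fullfile(pwd, 'miningsuite-master');

if exist(folder, 'dir')
    addpath(folder);   % add the folder to the path for the current session
    % savepath;        % uncomment to keep the path across Matlab sessions
else
    warning('Folder not found: %s', folder);
end
```

If you renamed the folder to miningsuite, change the folder name in the first line accordingly.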

You can access the documentation online.

The sensor data collected during MusicLab vol. 1 and 2 are stored in CSV files.

Here we will focus on one piece, Harpen by Edvard Grieg, beautifully performed during vol. 2 by Njål Sparbo (voice) and Ellen Sejersted Bødtker (harp).

You can find both the audio recording (P2.wav) and the breath data (P2.csv) in this zip file.

To facilitate the work, please store both the sensor data and the audio recording in a single folder (for instance, you can simply keep the folder extracted from the ZIP file) and set that folder as the Current Directory in Matlab.

You can load and display any of the sensor CSV files (for instance the file P2.csv in vol. 2) by using this command in Matlab:

sig.signal('P2.csv','Sampling',10)

Here the breath data was collected with a sampling rate of 10 Hz. That is why we indicate this sampling rate when calling sig.signal.

A new window appears, with a figure showing the 10 different breath signals.

As we need to store this signal for further computation, we can save it in a variable, for instance 's':

s = sig.signal('P2.csv','Sampling',10)

We can play the audio recording of the piece while looking at the curve (with a cursor progressively showing us the successive temporal positions in each curve).

First we need to sync together the sensor data and the audio recording. Corresponding sensor data and audio recordings do not start at the same time, so we need to indicate the delay between the two signals.

For instance, for the second piece in vol. 2, there is a 23 s delay of the audio with respect to the breath data. We can sync them together using this command:

t = sig.sync(s,'P2.wav',23)

In this way, we can now play the two files in sync:

t.play
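To make the 23 s offset concrete, here is a small sketch in plain Matlab that converts positions in the audio into sample indices in the 10 Hz breath data. It assumes that the audio starts 23 s after the start of the breath data; the variable names are hypothetical, and this is not how sig.sync is implemented.

```matlab
fs = 10;       % sampling rate of the breath data in Hz
delay = 23;    % delay in seconds, as in the sig.sync call above

% Assumption: the audio starts 23 s after the start of the breath data.
audioTime = [0 1 60];                               % positions in the audio (s)
sensorIndex = round((audioTime + delay) * fs) + 1;  % 1-based sample indices
```

Under this assumption, the beginning of the audio corresponds to sample 231 of each breath signal.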

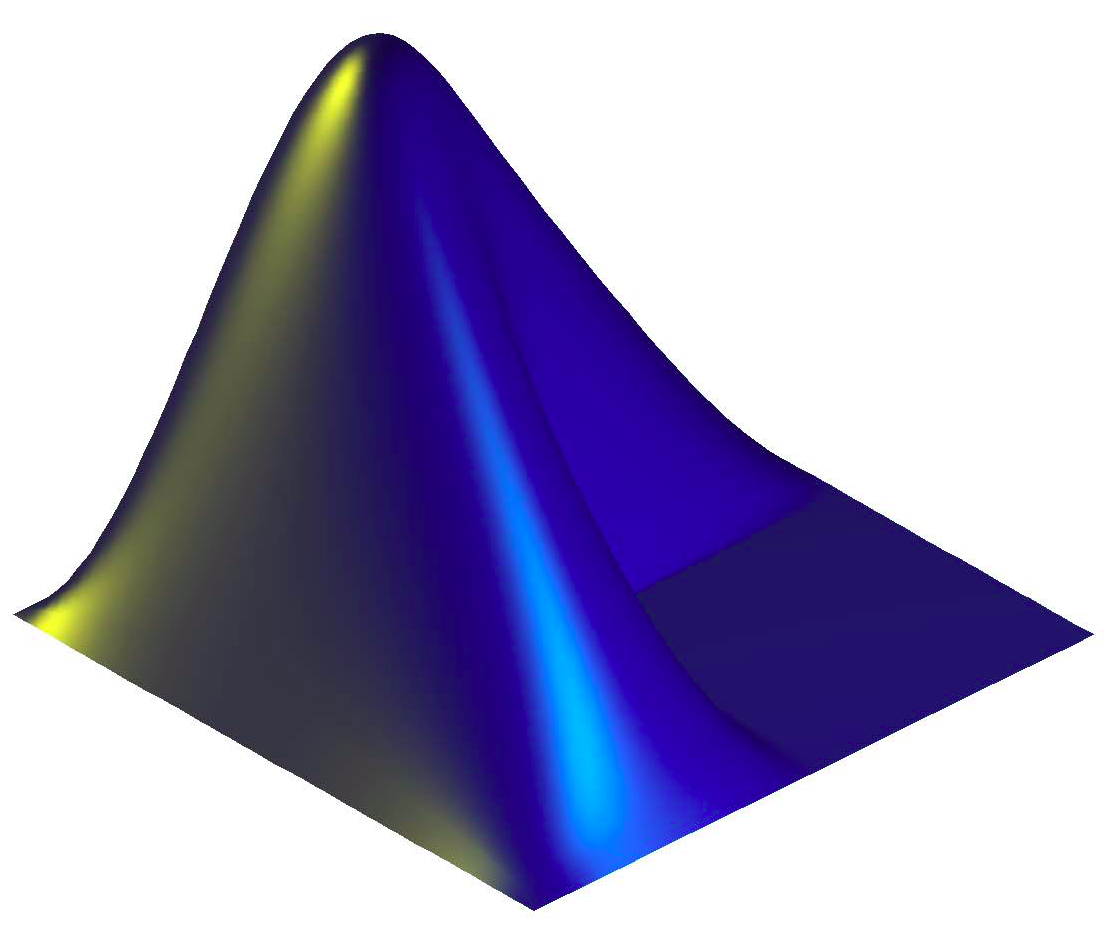

Many operations can be performed on the signal using the MiningSuite. One operation that is particularly well suited to this kind of signal is the detection of periodic patterns. To do so, we can compute an autocorrelation function:

sig.autocor(s)

Peaks in the autocorrelation curves indicate periodicities: a peak at a given lag means that the signal tends to repeat itself with that period. These periodicities are estimated globally over the whole signals.
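To illustrate what an autocorrelation peak means, here is a minimal sketch in plain Matlab, independent of the MiningSuite. It uses a synthetic signal (a hypothetical breath cycle of 4 s, sampled at 10 Hz) rather than the actual data, and a straightforward loop rather than whatever method sig.autocor uses internally.

```matlab
fs = 10;                          % sampling rate in Hz, as for the breath data
t = (0:599)' / fs;                % 60 s of synthetic data
x = sin(2*pi*0.25*t);             % hypothetical breath cycle of 4 s
x = x - mean(x);                  % remove the mean before correlating

maxLag = 10 * fs;                 % examine lags up to 10 s
ac = zeros(maxLag + 1, 1);
for k = 0:maxLag
    % Product of the signal with a copy of itself shifted by k samples
    ac(k+1) = sum(x(1:end-k) .* x(1+k:end));
end
ac = ac / ac(1);                  % normalise so that lag 0 equals 1

% Strongest peak, skipping lags below 2 s to avoid the trivial maximum at lag 0
minLag = 2 * fs;
[~, idx] = max(ac(minLag+1:end));
period = (idx + minLag - 1) / fs; % period in seconds
```

Here the strongest peak is found at a lag of 4 s, which matches the period of the synthetic signal.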

We can also detect the presence of periodicities locally at particular temporal regions in the signals. To do so, we can decompose the signal into frames and compute the autocorrelation function for each successive frame:

sig.autocor(s,'FrameSize',10,'FrameHop',.1)

Here we specify frames of 10 seconds that move by 10% of the frame length (hence 1 second) at each step.
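The relation between frame size, hop ratio and frame positions can be sketched in plain Matlab as follows. The signal duration of 60 s is hypothetical, and the rule used for the last frames (keeping only frames that fit entirely within the signal) is an assumption of this sketch, not a statement about the MiningSuite.

```matlab
fs = 10;                 % sampling rate of the breath data in Hz
duration = 60;           % hypothetical signal length in seconds
frameSize = 10;          % frame length in seconds ('FrameSize')
frameHop = 0.1;          % hop as a ratio of the frame length ('FrameHop')

hopSec = frameSize * frameHop;        % 1 second between frame starts
frameLen = round(frameSize * fs);     % 100 samples per frame
hopLen = round(hopSec * fs);          % 10 samples between frame starts
N = duration * fs;                    % total number of samples

% Assumption: only frames that fit entirely within the signal are kept.
starts = 1:hopLen:(N - frameLen + 1); % first sample of each frame
startTimes = (starts - 1) / fs;       % same positions in seconds
```

With these values, the frames start at 0, 1, 2, ... seconds, and each frame overlaps the next one by 9 seconds.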

We can also check whether the breath signal of a participant (or musician) is similar to the breath signal of another participant. To do so, we can compute the cross-correlation between each pair of signals.

sig.crosscor(s)

Peaks in the crosscorrelation curves indicate that two participants have the same behaviour, with a delay between each other indicated by the lag (X axis). These similarities are estimated globally over the whole signals.
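The idea of reading a delay from a crosscorrelation peak can be sketched in plain Matlab with two synthetic signals, where the second is a copy of the first delayed by 1.5 s. The signals, the variable names and the sign convention for the lag are all choices made for this illustration; sig.crosscor may use a different convention.

```matlab
fs = 10;                                       % sampling rate in Hz
t = (0:599)' / fs;                             % 60 s of synthetic data
a = sin(2*pi*0.25*t) + 0.5*sin(2*pi*0.11*t);   % hypothetical participant A
delay = 15;                                    % 1.5 s expressed in samples
b = [zeros(delay, 1); a(1:end-delay)];         % participant B: same curve, 1.5 s later

lags = -50:50;                                 % lags from -5 s to +5 s
cc = zeros(size(lags));
for i = 1:numel(lags)
    k = lags(i);
    if k >= 0
        cc(i) = sum(a(1:end-k) .* b(1+k:end)); % b shifted back by k samples
    else
        cc(i) = sum(a(1-k:end) .* b(1:end+k)); % a shifted back by -k samples
    end
end

[~, imax] = max(cc);
lagSec = lags(imax) / fs;                      % positive: B follows A
```

The maximum is found at a lag of 1.5 s, which is the delay that was introduced between the two synthetic signals.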

We can also detect the presence of similar behaviour between pairs of participants locally at particular temporal regions in the signals. To do so, we can decompose the signal into frames and compute the crosscorrelation function for each successive frame:

sig.crosscor(s,'Frame','FrameSize',20,'FrameHop',.1)

Here we specify frames of 20 seconds that move by 10% of the frame length (hence 2 seconds) at each step.

We can extract a lot of information from the audio recordings. We will focus in particular on analyses that are well suited to the particular type of music under study.

We can first simply display the audio signal itself.

a = sig.signal('P2.wav')

We can display the temporal evolution of the dynamics, by computing the energy (or more precisely the Root-Mean-Square) for the successive time frames in the signal:

r = sig.rms('P2.wav','Frame','FrameSize',.1,'FrameHop',1)

Here the frames are 0.1 second long and move by 0.1 second at each step (a hop of 1, i.e. 100% of the frame length), hence we obtain a signal that has the same sampling rate as the sensor data (10 Hz).
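As an illustration of what a frame-wise RMS curve contains, here is a minimal sketch in plain Matlab on a synthetic tone. The sampling rate of 1000 Hz and the test signal are hypothetical, and the code does not reproduce the internals of sig.rms.

```matlab
fs = 1000;                        % hypothetical audio sampling rate in Hz
t = (0:2*fs-1)' / fs;             % 2 s of synthetic signal
x = sin(2*pi*50*t);               % 50 Hz tone with amplitude 1

frameLen = round(0.1 * fs);       % 0.1 s frames, i.e. 100 samples
nFrames = floor(numel(x) / frameLen);

% Non-overlapping frames (hop of 100%): one frame per column
frames = reshape(x(1:nFrames*frameLen), frameLen, nFrames);

r = sqrt(mean(frames.^2, 1));     % Root-Mean-Square of each frame
rmsRate = fs / frameLen;          % number of RMS values per second
```

Each frame of this constant-amplitude tone has an RMS close to 0.707, and the resulting curve has 10 values per second, like the breath data.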

We can play the music while looking at the RMS curve, with a cursor showing the successive temporal locations:

t = sig.sync('P2.wav',r)

t.play

We can display the sensor data, the audio waveform and the RMS curve together, and play the audio at the same time:

t = sig.sync(s,'P2.wav',23,a,23,r,23)

t.play

We can compute the spectrogram, for instance:

sp = sig.spectrum('P2.wav','Frame','Max',20000,'dB')

We can compute the spectral centroid, either from our previous spectrogram or directly from the audio file, for instance:

sig.centroid('P2.wav','Frame','FrameSize',.1,'FrameHop',1)

Other spectral moments can be considered, such as sig.spread, sig.skewness, sig.kurtosis, etc.
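For readers unfamiliar with the spectral centroid, it can be understood as the mean frequency of the spectrum, weighted by the amount of energy at each frequency. The following sketch in plain Matlab computes it for a synthetic signal made of two tones. Weighting by the magnitude spectrum is an assumption of this sketch; whether sig.centroid weights by magnitude or by power is not stated here.

```matlab
fs = 1000;                         % hypothetical sampling rate in Hz
N = 1000;                          % 1 s of signal, so bins are 1 Hz apart
t = (0:N-1)' / fs;
x = sin(2*pi*100*t) + sin(2*pi*300*t);   % two tones of equal amplitude

X = abs(fft(x));                   % magnitude spectrum
mag = X(1:N/2+1);                  % keep frequencies from 0 to fs/2
f = (0:N/2)' * fs / N;             % frequency of each bin in Hz

centroid = sum(f .* mag) / sum(mag);     % magnitude-weighted mean frequency
```

Since the two tones have the same amplitude, the centroid lies halfway between them, close to 200 Hz.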

Because the piece P2 does not use percussion, and is based on a very subtle pulsation that does not show clearly in the signal, a complete rhythmical/metrical analysis is quite challenging. For the time being, we can observe the clarity of the underlying pulsation:

mus.pulseclarity('P2.wav','Frame','Mix')

In order to get a simple curve showing some aspects related to tonality, we can estimate the tonal clarity, which is the second output of this command:

mus.key('P2.wav','Frame','Mix')

We can also get an idea about the degree of "majorness" of each instant of time in the piece:

mus.majorness('P2.wav','Frame','Mix')

These are just examples; check the online documentation for more inspiration.