bad memory behaviour substrate 3.0.0 #8117

Comments

|

Where is your node implementation? How do you run the node? (which CLI flags) |

|

https://github.com/dotmog/substrate/tree/dotmog_v3.0.0 I run the bin/node/ compiled with

running it with this service ...

|

|

Is this Windows with the Linux subsystem (WSL)? |

|

It's a Windows VM set up as described here (guide); that was just a test to reproduce the issue. The nodes that crash are running Ubuntu on DigitalOcean. Is there something I can do on my Ubuntu nodes to gather more information about this behaviour? |
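One low-effort way to gather data on a Linux node is to log the resident set size (RSS) of the node process over time. The following is a minimal sketch, not something posted in the thread: it assumes a Linux host with procfs mounted, and the helper names `parse_vm_rss_kib`, `read_rss_kib` and `sample_rss` are made up for illustration. The demo at the bottom samples the script's own process; the node's PID would have to be substituted.

```python
import os
import time


def parse_vm_rss_kib(status_text):
    """Return the VmRSS value (in KiB) from the text of /proc/<pid>/status, or None."""
    for line in status_text.splitlines():
        if line.startswith("VmRSS:"):
            # Line format on Linux: "VmRSS:     123456 kB"
            return int(line.split()[1])
    return None


def read_rss_kib(pid):
    """Read the current resident set size of a process from procfs (Linux only)."""
    with open(f"/proc/{pid}/status") as f:
        return parse_vm_rss_kib(f.read())


def sample_rss(pid, samples, interval_s):
    """Collect (unix_time, rss_kib) pairs at a fixed interval."""
    out = []
    for i in range(samples):
        out.append((time.time(), read_rss_kib(pid)))
        if i + 1 < samples:
            time.sleep(interval_s)
    return out


# Demo on this script's own process; replace os.getpid() with the node's PID.
if os.path.exists("/proc/self/status"):
    for ts, rss in sample_rss(os.getpid(), samples=3, interval_s=0.1):
        print(f"{ts:.0f} {rss} kB")
```

Redirecting the output to a file with a longer interval (for example 60 seconds) gives a time series that can be compared against the hosting provider's monitoring graph.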

|

I created a fresh VM on DigitalOcean and applied the following commands to install Substrate ... 4 GB Memory / 80 GB Disk / LON1 - Ubuntu 20.04 (LTS) x64

then

... monitoring with top and DigitalOcean's monitoring tool. More coming. |

|

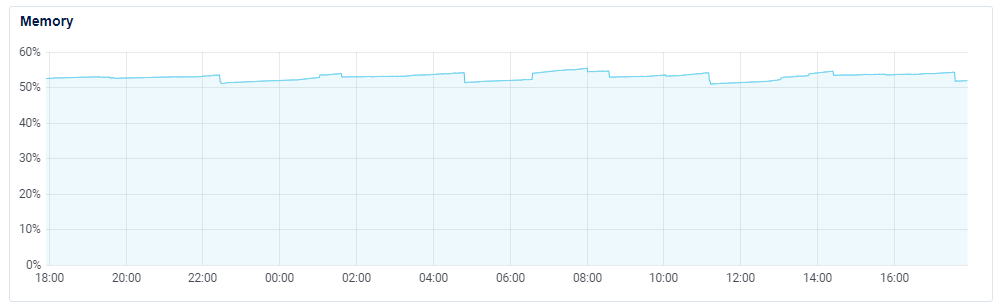

On my end (tested on macOS) I have an initial footprint of around 90 MB, and after roughly one hour 1.8 GB. Is this due to the state kept in memory, or is there a better explanation I am not aware of? Your memory chart looks more like a leak. Weird, as it is kind of dynamic in the first hour or so. |
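For a rough sense of scale, the figures in this comment can be turned into a growth rate. This is only a back-of-the-envelope sketch: it assumes linear growth, decimal megabytes, and that the whole machine's memory is available to the node, none of which is established in the thread. The 2 GB and 4 GB capacities are the machine sizes mentioned elsewhere in this issue, while the 90 MB and 1.8 GB figures come from a different (macOS) machine.

```python
def growth_rate_mb_per_min(start_mb, end_mb, elapsed_min):
    """Average memory growth, assuming it is linear over the interval."""
    return (end_mb - start_mb) / elapsed_min


def minutes_until_full(start_mb, capacity_mb, rate_mb_per_min):
    """Time until the footprint reaches capacity at a constant growth rate."""
    return (capacity_mb - start_mb) / rate_mb_per_min


# Figures from the comment above: ~90 MB at start, ~1.8 GB after ~1 hour.
rate = growth_rate_mb_per_min(start_mb=90, end_mb=1800, elapsed_min=60)
print(f"growth: {rate:.1f} MB/min")

# Machine sizes mentioned in this thread (2 GB and 4 GB), in decimal MB.
for capacity_mb in (2000, 4000):
    eta = minutes_until_full(90, capacity_mb, rate)
    print(f"{capacity_mb} MB machine: full after about {eta:.0f} min")
```

Under these assumptions a 2 GB machine would fill up in a bit over an hour, which is at least in the same range as the hourly crashes reported for the 2 GB nodes.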

|

The first hour is setting up the machine and compiling Substrate ... The time axis from the DigitalOcean monitoring is shifted by 1 hour in the graphics, so the Substrate process was started at 13:05 in the blue diagram. @2075 thanks for confirming the issue. |

|

@darkfriend77 you are running a single On the top, when the graph says 100% memory, how much physical memory is that? Substrate/Polkadot use about 500 MB of RAM for us here... |

|

First I noticed the bad behaviour on a basic installation with two nodes which were only updated to 3.0.0; they were working fine on 2.0.0 (post). Then I reproduced the behaviour on my local machine under Windows, only using substrate --dev (post). The latest was reproducing it with a VM on DigitalOcean, only using substrate --dev, on a machine with 4 GB of memory.

The first test is a basic 2 peer installation, so it's not --dev related. |

|

@gnunicorn @bkchr can one of you confirm the issue concerning bad memory usage on the Substrate v3.0/0.9 – Apollo 14 release, please? |

|

@darkfriend77 We are aware that there is something. We don't know yet what it is. However, we already know that something on Windows with Linux subsystem triggers some huge memory leak as you already detected. |

@bkchr Okay, thank you for the answer. Just to clarify, WSL is only where I reproduced the bug. I first observed it on native Linux nodes running Substrate 3.0.0; only then did I start to look into it. Ubuntu 20.04 (LTS) x64 / 4 GB Mem / 80 GB Disk memory leak LINK |

|

Yeah I have seen this. We are investigating it, but it is rather hard, because we can not reproduce it that easily. |

|

@bkchr The profiling screenshot suggests it could be caused by a TLS (thread-local storage) leak in

|

|

This issue really looks related. However, I also checked our nodes and the number of active threads is around 15. So, we don't have an unlimited number of active threads and, if I read this issue correctly, cleaning up the thread local should work. |

|

Apparently it still does not work on Windows, if you read the last few comments on the issue. The number of active threads at any given moment may be hovering at 15, but if some of them are shut down and restarted all the time, that may be a problem. Also, how many cores do our nodes have? I suspect the issue might only surface on underpowered machines with a low CPU count. E.g., on a machine with 2 cores the tokio thread pool only keeps around 2 threads and spawns additional short-lived threads when required for blocking tasks, or when the load is high. On a machine with 8 cores it keeps 8 threads and there's rarely any need to spawn additional ones. That's just my guess, but I wouldn't be surprised if there's some weird interaction like that going on. |

|

I will run a test on 3 machines with native Ubuntu 20.04 (LTS) x64 on DigitalOcean ... same setup, Linux only

I will let them run for a few hours, then post the monitoring graphs and information ... maybe this helps you investigate this issue further. |

|

@darkfriend77 I'm curious about this issue, but unfortunately I can't reproduce your result. Can you apply this patch, recompile, and retest? Please update us with the result. I made the patch just to validate an assumption from my early investigation. |

|

@anvie will do that. |

I've checked the code to confirm this. The Tokio blocking pool uses idle worker threads if available, and if not, it spawns a temporary thread. And we do execute all network handlers as blocking tasks. Having short-lived temporary threads is a bad idea in general, as it leads to hard-to-diagnose issues, such as this one. I'd suggest reverting #5909 and maybe setting a fixed minimum of worker threads instead. |
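The difference between a fixed set of workers and temporary per-task threads can be illustrated with a small stand-in. This sketch uses Python's standard library rather than Tokio, so it says nothing about Tokio's actual scheduling; the function names and the task count of 20 are arbitrary choices for illustration. It only shows how many distinct threads end up running the same workload under each strategy, which matters if some per-thread state (such as thread-local storage) is created once per thread.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def task(_):
    # Report which thread ran this task.
    return threading.current_thread().name


def run_with_fixed_pool(n_tasks, n_workers):
    """Run tasks on a bounded set of long-lived workers; return the thread names used."""
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        return set(pool.map(task, range(n_tasks)))


def run_with_temporary_threads(n_tasks):
    """Spawn one short-lived thread per task; return the thread names used."""
    names = []
    lock = threading.Lock()

    def wrapper(i):
        name = task(i)
        with lock:
            names.append(name)

    threads = [
        threading.Thread(target=wrapper, args=(i,), name=f"temp-{i}")
        for i in range(n_tasks)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return set(names)


pooled = run_with_fixed_pool(n_tasks=20, n_workers=2)
temporary = run_with_temporary_threads(n_tasks=20)
print(f"fixed pool: {len(pooled)} threads, per-task: {len(temporary)} threads")
```

With the fixed pool, at most two threads ever exist for the 20 tasks, whereas the per-task variant creates 20. If per-thread state were never released on thread exit, only the second pattern would accumulate it without bound.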

|

As a general opinion, I don't think we should avoid spawning threads because of unfixed leaks with thread-local storage. That's killing the messenger. |

|

Here is what it looks like after 2 hours ... #8117 (comment) |

Right, I've misinterpreted it. The short-lived threads have nothing to do with it indeed. It looks to be an issue in |

|

So, if I read this correctly, there is no code change necessary for the fix from our side, but a We don't even have to release a new version; we could tag a new Docker image with the updated dependency, if that turns out to fix the error. |

|

@gnunicorn Updating and creating the test in a few minutes. 1.5 hours after the start, it seems fixed .. ^^ :-) |



I've installed https://github.com/paritytech/substrate/releases/tag/v3.0.0 on two nodes and both show bad memory behaviour; the same installation is running smoothly with Substrate 2.0.0 on two other nodes, see comparison.

Same configuration, same code, but with Substrate 2.0.0

It seems something bad is happening ... How can I help find the bug? The nodes crash every hour, when memory runs full.

Installed both on 2 GB Memory / 50 GB Disk - Ubuntu 20.04 (LTS) x64

NEW NODES

https://polkadot.js.org/apps/?rpc=wss%3A%2F%2Fmogiway-01.dotmog.com#/explorer

https://polkadot.js.org/apps/?rpc=wss%3A%2F%2Fmogiway-02.dotmog.com#/explorer

OLD NODES

https://polkadot.js.org/apps/?rpc=wss%3A%2F%2Fnode01.dotmog.com#/explorer

https://polkadot.js.org/apps/?rpc=wss%3A%2F%2Fnode02.dotmog.com#/explorer
