
Nonlinear solid solver #24


- read parameter file specified on the command line
- remove unnecessary parameter options
- replace deprecated functions
- remove option for automated differentiation
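As an illustration of the first commit item, here is a minimal sketch of picking the parameter file from the command line. The function name `parameter_file_from_args` and the default file name `parameters.prm` are assumptions made for this example; the PR does not state the names the solver actually uses.

```cpp
#include <string>

// Hypothetical default file name; the PR does not state the real one.
const std::string default_parameter_file = "parameters.prm";

// Return the parameter file passed as the first command-line argument.
// Fall back to the (assumed) default if no argument was given.
std::string parameter_file_from_args(const int argc, const char *const argv[])
{
  if (argc > 1 && argv[1] != nullptr && argv[1][0] != '\0')
    return std::string(argv[1]);

  return default_parameter_file;
}
```

Called from `main(int argc, char **argv)`, the returned string could then be handed to whatever routine parses the parameters.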

class and redefine convergence criteria as absolute error criteria.

- Add update functions needed for velocity and acceleration
- Integrate acceleration in assembler
- Define parameters for Newmark scheme
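The velocity and acceleration updates named in these commits can be sketched with the standard Newmark formulas. This is a standalone illustration on plain `std::vector`s, not the code from this PR: the struct `NewmarkParameters`, the function `newmark_update`, and the default values (beta = 1/4, gamma = 1/2, the common average-acceleration choice) are assumptions.

```cpp
#include <cstddef>
#include <vector>

// Newmark parameters. The defaults are the common "average acceleration"
// choice and are an assumption, not values taken from the PR.
struct NewmarkParameters
{
  double beta  = 0.25;
  double gamma = 0.5;
  double dt    = 1e-2;
};

// Given the old and new displacement, update acceleration and velocity
// in place using the standard Newmark relations:
//   a_new = (u_new - u_old)/(beta dt^2) - v_old/(beta dt) - (1/(2 beta) - 1) a_old
//   v_new = v_old + dt ((1 - gamma) a_old + gamma a_new)
void newmark_update(const NewmarkParameters   &p,
                    const std::vector<double> &u_old,
                    const std::vector<double> &u_new,
                    std::vector<double>       &v,
                    std::vector<double>       &a)
{
  for (std::size_t i = 0; i < u_new.size(); ++i)
    {
      const double a_old = a[i];
      const double a_new = (u_new[i] - u_old[i]) / (p.beta * p.dt * p.dt) -
                           v[i] / (p.beta * p.dt) -
                           (1.0 / (2.0 * p.beta) - 1.0) * a_old;

      v[i] += p.dt * ((1.0 - p.gamma) * a_old + p.gamma * a_new);
      a[i] = a_new;
    }
}
```

For motion with constant acceleration, the default parameters reproduce the exact velocity and acceleration, which makes for a simple sanity check.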


@uekerman A few details here and some points to discuss, also where we might want to invest some additional work:


and as a last point:


Looks good 👌

Yes, should not be too complicated, but also "only" nice-to-have for our purpose in my opinion.

By displacement, do you mean the update in the Newton iteration? If so, I guess checking only the residual should be sufficient.
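To make the suggestion concrete, here is a minimal sketch of a Newton iteration that stops on an absolute residual tolerance only, without checking the size of the update. It solves a scalar equation for brevity; the names `NewtonResult` and `newton_solve` and the default tolerance are assumptions for this example and not part of the solver in this PR.

```cpp
#include <cmath>
#include <functional>

struct NewtonResult
{
  double       x;          // last iterate
  double       residual;   // |f(x)| at the last iterate
  unsigned int iterations; // number of Newton updates performed
  bool         converged;  // true if residual <= abs_tol
};

// Newton iteration for f(x) = 0 with an absolute residual criterion.
// The update x_new - x_old is applied but never tested for convergence.
NewtonResult newton_solve(const std::function<double(double)> &f,
                          const std::function<double(double)> &df,
                          const double                         x_start,
                          const double                         abs_tol  = 1e-10,
                          const unsigned int                   max_iter = 20)
{
  NewtonResult r{x_start, std::fabs(f(x_start)), 0, false};

  for (; r.iterations < max_iter && r.residual > abs_tol; ++r.iterations)
    {
      r.x -= f(r.x) / df(r.x);
      r.residual = std::fabs(f(r.x));
    }

  r.converged = (r.residual <= abs_tol);
  return r;
}
```

For example, `newton_solve([](double x) { return x * x - 2.0; }, [](double x) { return 2.0 * x; }, 1.0)` iterates toward the square root of 2.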

I guess that for now having "only" a direct solver is sufficient. The solver is not meant to handle very large cases anyway.

Great 👍

Great again 👍 Could you please upload a screenshot and mention the commit you used here? Just as a future reference.

Similar argument as above. I don't think we need parallelization for the moment. If we go for it later on, shared-memory parallelization with a direct solver would be completely sufficient.

I agree. It could even be good to split the solver into several files to better hide some of the complexity. We could then also think about modifying the linear solver in a similar way. Maybe they could even use the same "adapter file"? (The last two points have lower priority.)

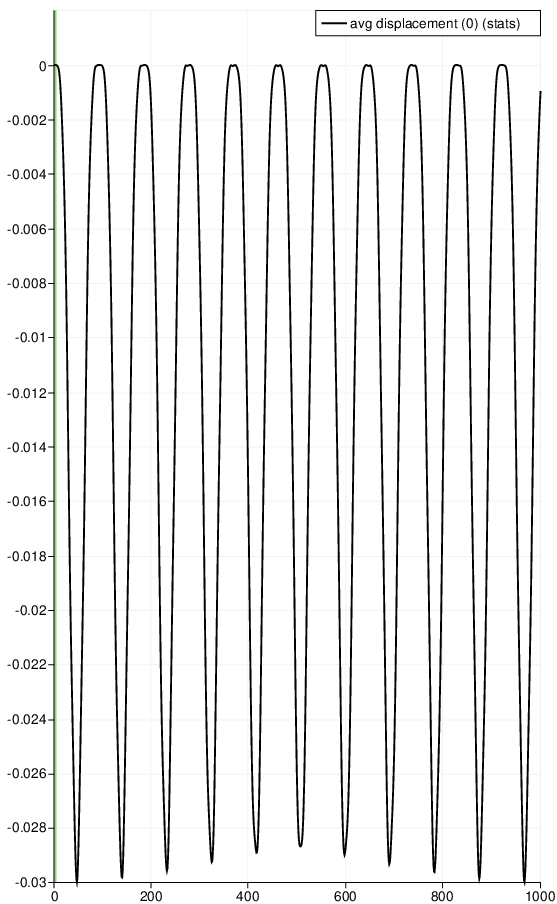

Alright, here are the results of CSM3. Feel free to compare them against the original paper.

…for each component


Here is a first result of the FSI3 benchmark. Unfortunately, the simulation breaks at this point, which I find a bit puzzling. I have not seen any complete graph of the y-displacement, only graphs for later times. It might take some time for the amplitude to stagnate.

Hi David, just a more or less educated guess: depending on your time stepping scheme, your coupled simulation can become unstable. We are working on this in precice/precice#133. Richard, for example, made this observation with an FSI case in his thesis (see Fig 5.10) when using the generalized alpha method with certain parameters. Modifying the parameters (and thus the way the time stepping works) helped in the end. Best regards,

Very interesting, especially the fact that his simulation converged for the larger time step of 0.005 s. In my current setup, 0.005 s works without any problem using our tutorial setups. For smaller time step sizes (as in the reference), I needed to select a different time integration scheme in the fluid solver to make this stable. Since these kinds of instabilities might be of interest to many users, it might be worth opening a Discourse thread collecting some lessons learnt or best guesses, similar to the FAQs already there.
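For reference on the time-stepping parameters discussed in the two comments above: one common way to choose generalized alpha parameters for structural dynamics is the Chung-Hulbert parametrization by the high-frequency spectral radius. The sketch below assumes that parametrization; it is not known from this thread which parameters were used in the thesis, and the names `GeneralizedAlphaParameters` and `generalized_alpha_parameters` are hypothetical.

```cpp
#include <cassert>

struct GeneralizedAlphaParameters
{
  double alpha_m;
  double alpha_f;
  double beta;
  double gamma;
};

// Chung-Hulbert parametrization (an assumption for this sketch):
// rho_inf in [0, 1] is the spectral radius at infinite frequency.
// rho_inf = 1 gives no numerical damping, rho_inf = 0 gives maximal
// damping of high-frequency modes.
GeneralizedAlphaParameters generalized_alpha_parameters(const double rho_inf)
{
  assert(rho_inf >= 0.0 && rho_inf <= 1.0);

  GeneralizedAlphaParameters p;
  p.alpha_m = (2.0 * rho_inf - 1.0) / (rho_inf + 1.0);
  p.alpha_f = rho_inf / (rho_inf + 1.0);
  p.gamma   = 0.5 - p.alpha_m + p.alpha_f;
  p.beta    = 0.25 * (1.0 - p.alpha_m + p.alpha_f) * (1.0 - p.alpha_m + p.alpha_f);
  return p;
}
```

With `rho_inf = 1`, the Newmark part reduces to beta = 1/4 and gamma = 1/2. Lowering `rho_inf` adds numerical damping, which is one of the knobs that changes how the time stepping behaves in a coupled run.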


I have now added some comments and still need to push the applied changes.

I also still need to add more documentation throughout the code (WIP).

- Add documentation in the main code
- Add output path to keep preCICE simulation directory clean
- Simplify loops to range-based loops

Co-authored-by: Benjamin Uekermann <benjamin.uekermann@gmail.com>


Perfect. I will merge here. The linear solver integration will become its own PR.

This PR adds a new nonlinear solver written in deal.II.

Here is a short list of features that are not yet implemented and need further work:

- change license

This implementation does not directly follow the theory described in #15, but it resolves that issue anyway.