fix(chat): support non-streaming chat completion requests (#5565)

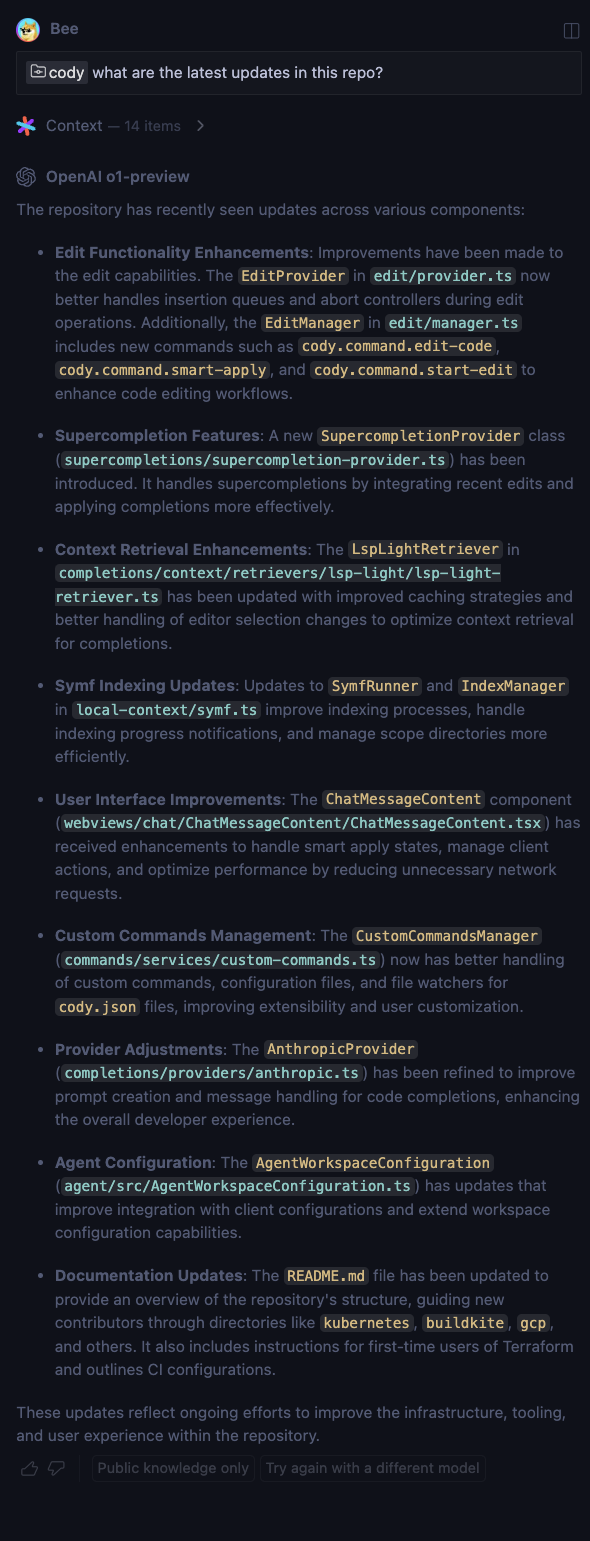

Follow up on #5508

CLOSE https://linear.app/sourcegraph/issue/CODY-3741/oai-o1-hits-parsing-error

This change adds support for non-streaming requests, particularly for new OpenAI models that do not support streaming. It also fixes a "Parse Error: JS exception" issue that occurred when handling non-streaming responses in the streaming client.

- Remove HACK for handling non-streaming requests in the stream method in nodeClient.ts that's resulting in parsing error
- Implement a new `_fetchWithCallbacks` method in the `SourcegraphCompletionsClient` class to handle non-streaming requests
- Update the `SourcegraphNodeCompletionsClient` and `SourcegraphBrowserCompletionsClient` classes to use the new `_fetchWithCallbacks` method when `params.stream` is `false`
- This change ensures that non-streaming requests, such as those for the new OpenAI models that do not support streaming, are handled correctly and avoid issues like "Parse Error: JS exception" that can occur when the response is not parsed correctly in the streaming client

The `SourcegraphCompletionsClient` class now has a new abstract method `_fetchWithCallbacks` that must be implemented by subclasses

## Test plan

<!-- Required. See https://docs-legacy.sourcegraph.com/dev/background-information/testing_principles. -->

Verify that chat requests using non-streaming models (e.g., OpenAI O1, OpenAI O1-mini) work correctly without any parsing errors.

Example: ask Cody about cody repo with "cody what are the latest updates in this repo?"

### After

First try

Second try

### Before

First try

## Changelog

<!-- OPTIONAL; info at https://www.notion.so/sourcegraph/Writing-a-changelog-entry-dd997f411d524caabf0d8d38a24a878c -->

feat(chat): support non-streaming requests

---------

Co-authored-by: Valery Bugakov <skymk1@gmail.com>
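The dispatch described in the commit message can be illustrated with a minimal sketch. This is not the code from the commit: the class names `CompletionsClientSketch` and `FetchClientSketch`, the `complete` entry point, the `FetchLike` type, the callback shape, and the `completion` field in the response body are all assumptions made for illustration. Only the method name `_fetchWithCallbacks` and the `params.stream === false` condition come from the description above.

```typescript
// Hypothetical, simplified types; the real Cody types are richer.
interface CompletionParams {
    messages: { speaker: string; text: string }[]
    stream?: boolean
}

interface CompletionCallbacks {
    onChange: (text: string) => void
    onComplete: () => void
    onError: (error: Error) => void
}

// Minimal fetch-like signature so the sketch can run without a network.
type FetchLike = (
    url: string,
    init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>

abstract class CompletionsClientSketch {
    protected abstract _streamWithCallbacks(
        params: CompletionParams,
        cb: CompletionCallbacks
    ): Promise<void>

    // Subclasses must implement the non-streaming path.
    protected abstract _fetchWithCallbacks(
        params: CompletionParams,
        cb: CompletionCallbacks
    ): Promise<void>

    // Assumed entry point: route on params.stream instead of forcing a
    // non-streaming response through the streaming parser.
    public async complete(params: CompletionParams, cb: CompletionCallbacks): Promise<void> {
        if (params.stream === false) {
            return this._fetchWithCallbacks(params, cb)
        }
        return this._streamWithCallbacks(params, cb)
    }
}

class FetchClientSketch extends CompletionsClientSketch {
    private readonly endpoint: string
    private readonly fetchImpl: FetchLike

    constructor(endpoint: string, fetchImpl: FetchLike) {
        super()
        this.endpoint = endpoint
        this.fetchImpl = fetchImpl
    }

    protected async _streamWithCallbacks(
        _params: CompletionParams,
        cb: CompletionCallbacks
    ): Promise<void> {
        cb.onError(new Error('streaming is omitted from this sketch'))
    }

    protected async _fetchWithCallbacks(
        params: CompletionParams,
        cb: CompletionCallbacks
    ): Promise<void> {
        try {
            const response = await this.fetchImpl(this.endpoint, {
                method: 'POST',
                headers: { 'Content-Type': 'application/json' },
                body: JSON.stringify(params),
            })
            if (!response.ok) {
                throw new Error(`HTTP ${response.status}`)
            }
            // Assumption: the whole reply arrives as one JSON object
            // with a `completion` string.
            const body = (await response.json()) as { completion?: unknown }
            if (typeof body.completion !== 'string') {
                throw new Error('response has no completion text')
            }
            cb.onChange(body.completion)
            cb.onComplete()
        } catch (error) {
            cb.onError(error instanceof Error ? error : new Error(String(error)))
        }
    }
}
```

Reading the body once with `json()` keeps a single-object reply away from an event-stream parser, which is the kind of mismatch the commit message associates with the "Parse Error: JS exception" failure.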

1 parent ccab0ed, commit 24192e4

Showing 4 changed files with 143 additions and 17 deletions.
