
Chat: waitlist for OpenAI-o1 & OpenAI-o1 mini #5508


- Added a new `ModelTag.StreamDisabled` tag to indicate models that do not support streaming
- Updated the `AssistantMessageCell` and `HumanMessageEditor` components to handle models without streaming support
- Displayed a message to the user when a non-streaming model is used
- Filtered out the initial codebase context when using a non-streaming model to avoid longer processing times
- Updated the `ModelSelectField` component to display an "Early Access" badge for models with the `ModelTag.Preview` tag
- Expanded the `ModelRef` interface and `ModelsService` to better handle model ID matching

Models with the `ModelTag.StreamDisabled` tag will no longer display the initial codebase context to avoid longer processing times.
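As a rough illustration of how these tags could drive behavior, here is a hypothetical TypeScript sketch: a model tagged `StreamDisabled` is treated as non-streaming and has its initial codebase context dropped, and a `Preview` tag yields the "Early Access" badge. The tag string values, the `Model` and `ContextItem` shapes, and the helper names `supportsStreaming`, `modelBadge`, and `contextForModel` are assumptions for illustration, not Cody's actual `ModelTag` definition or `ModelsService` API.

```typescript
// Hypothetical stand-in for Cody's ModelTag; only the tag names come from the PR.
// The string values are invented for this sketch.
const ModelTag = {
    StreamDisabled: 'stream-disabled',
    Preview: 'preview',
} as const
type ModelTag = (typeof ModelTag)[keyof typeof ModelTag]

// Simplified, hypothetical model and context shapes.
interface Model {
    id: string
    tags: ModelTag[]
}

interface ContextItem {
    uri: string
    // 'initial' marks context added automatically at the start of a chat.
    source: 'initial' | 'user'
}

// A model streams unless it is explicitly tagged StreamDisabled.
function supportsStreaming(model: Model): boolean {
    return !model.tags.includes(ModelTag.StreamDisabled)
}

// Badge text for the model dropdown; undefined means no badge.
function modelBadge(model: Model): string | undefined {
    return model.tags.includes(ModelTag.Preview) ? 'Early Access' : undefined
}

// Drop initial codebase context for non-streaming models to keep requests short.
function contextForModel(model: Model, items: ContextItem[]): ContextItem[] {
    return supportsStreaming(model) ? items : items.filter(item => item.source !== 'initial')
}

const nonStreaming: Model = {
    id: 'example/non-streaming-model',
    tags: [ModelTag.StreamDisabled, ModelTag.Preview],
}
const items: ContextItem[] = [
    { uri: 'file:///repo', source: 'initial' },
    { uri: 'file:///repo/src/app.ts', source: 'user' },
]
console.log(supportsStreaming(nonStreaming), modelBadge(nonStreaming), contextForModel(nonStreaming, items).length)
```

Keeping the decision in small predicates like these means UI components and the request path can share one definition of "non-streaming" instead of re-checking tags ad hoc.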


    @@ -200,6 +200,17 @@ export class SourcegraphNodeCompletionsClient extends SourcegraphCompletionsClie
    bufferText += str
    bufferBin = buf

    // HACK: Handles non-stream request.
    // TODO: Implement a function to make and process non-stream requests.
    if (params.stream === false) {


I don't have time to implement a proper function for processing non-streaming requests, so this will require a follow-up.

    @@ -83,11 +84,12 @@ export const ModelSelectField: React.FunctionComponent<{
    })
    return
    }
    getVSCodeAPI().postMessage({
    command: 'event',


deprecated

vscode/CHANGELOG.md

    @@ -6,6 +6,8 @@ This is a log of all notable changes to Cody for VS Code. [Unreleased] changes a

    ### Added

    - Chat: The new `Gemini 1.5 Pro Latest` and `Gemini 1.5 Flash Latest` models are now available for Cody Pro users. [pull/5508](https://github.com/sourcegraph/cody/pull/5508)


Is this correct?


Good catch! 🦅


Really impressive work, @abeatrix!

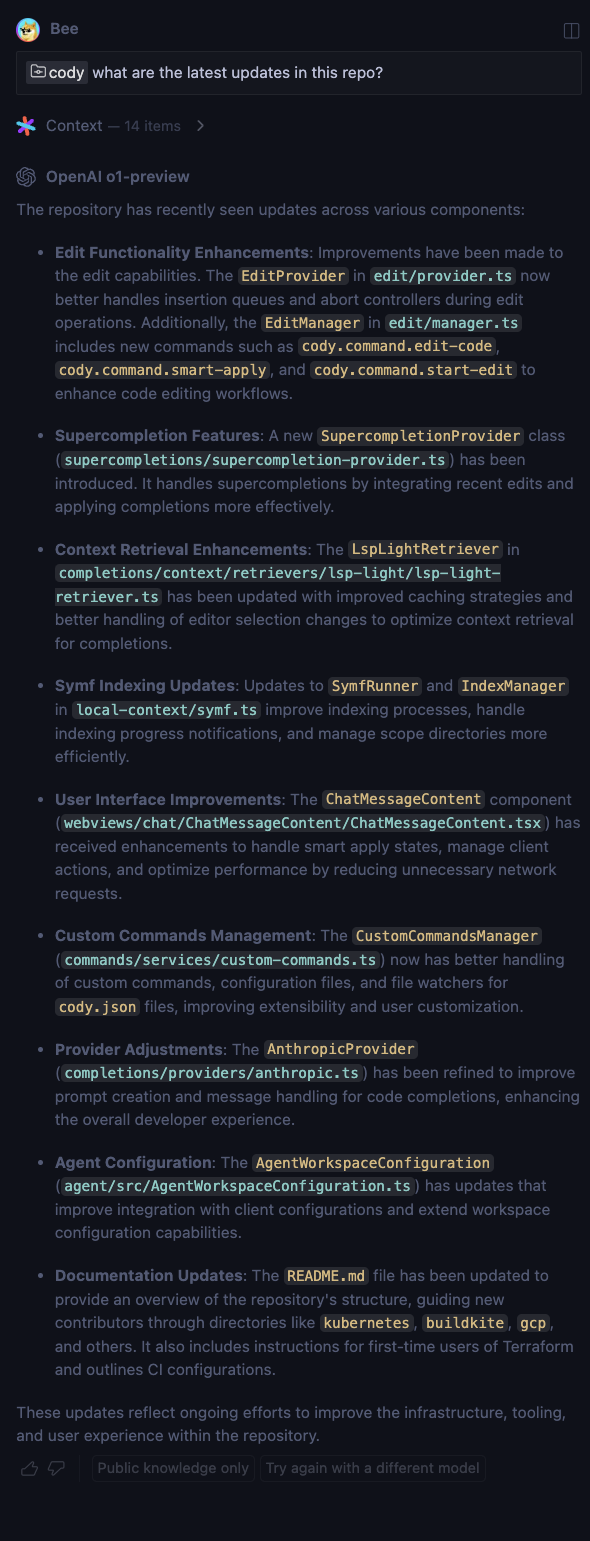

Follow up on #5508

CLOSE https://linear.app/sourcegraph/issue/CODY-3741/oai-o1-hits-parsing-error

This change adds support for non-streaming requests, particularly for new OpenAI models that do not support streaming. It also fixes a "Parse Error: JS exception" issue that occurred when non-streaming responses were handled by the streaming client.

- Remove the HACK for handling non-streaming requests in the `stream` method in `nodeClient.ts`, which was causing the parsing error
- Implement a new `_fetchWithCallbacks` method in the `SourcegraphCompletionsClient` class to handle non-streaming requests
- Update the `SourcegraphNodeCompletionsClient` and `SourcegraphBrowserCompletionsClient` classes to use the new `_fetchWithCallbacks` method when `params.stream` is `false`
- This ensures that non-streaming requests, such as those for the new OpenAI models that do not support streaming, are handled correctly and avoid errors like "Parse Error: JS exception", which can occur when the streaming client tries to parse a non-streaming response

The `SourcegraphCompletionsClient` class now has a new abstract method, `_fetchWithCallbacks`, that subclasses must implement.

## Test plan

Verify that chat requests using non-streaming models (e.g., OpenAI o1, OpenAI o1-mini) work correctly without any parsing errors. Example: ask Cody about the cody repo with "cody what are the latest updates in this repo?"

### After

First try

Second try

### Before

First try

## Changelog

feat(chat): support non-streaming requests

Co-authored-by: Valery Bugakov <skymk1@gmail.com>
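The core of this follow-up is a dispatch on `params.stream`: streaming requests keep using the event-stream path, while `stream: false` requests go to a separate `_fetchWithCallbacks` method that reads one complete response. The following is a simplified, hypothetical TypeScript model of that split, not Cody's actual code: the `CompletionParams` and `CompletionCallbacks` shapes, the `_streamWithCallbacks` name, the `{"completion": ...}` response body, and the `FakeClient` test double are all assumptions for illustration.

```typescript
// Hypothetical, simplified shapes; Cody's real types and signatures differ.
interface CompletionParams {
    messages: { speaker: 'human' | 'assistant'; text: string }[]
    stream?: boolean
}

interface CompletionCallbacks {
    onChange: (text: string) => void
    onComplete: () => void
    onError: (error: Error) => void
}

abstract class CompletionsClientSketch {
    // Streaming path: parses an event stream and reports partial text.
    protected abstract _streamWithCallbacks(params: CompletionParams, cb: CompletionCallbacks): Promise<void>

    // Non-streaming path: one request, one complete response body.
    protected abstract _fetchWithCallbacks(params: CompletionParams, cb: CompletionCallbacks): Promise<void>

    // Only an explicit `stream: false` takes the non-streaming path, so the
    // event-stream parser never sees a plain JSON body.
    public stream(params: CompletionParams, cb: CompletionCallbacks): Promise<void> {
        return params.stream === false
            ? this._fetchWithCallbacks(params, cb)
            : this._streamWithCallbacks(params, cb)
    }
}

// Test double standing in for the Node and browser subclasses.
class FakeClient extends CompletionsClientSketch {
    public calls: string[] = []

    protected async _streamWithCallbacks(_params: CompletionParams, cb: CompletionCallbacks): Promise<void> {
        this.calls.push('stream')
        cb.onChange('Hel')
        cb.onChange('Hello')
        cb.onComplete()
    }

    protected async _fetchWithCallbacks(_params: CompletionParams, cb: CompletionCallbacks): Promise<void> {
        this.calls.push('fetch')
        try {
            // A real client would await fetch(...) here; this JSON body shape is assumed.
            const body = JSON.parse('{"completion":"Hello"}') as { completion: string }
            cb.onChange(body.completion)
            cb.onComplete()
        } catch (error) {
            cb.onError(error instanceof Error ? error : new Error(String(error)))
        }
    }
}

const client = new FakeClient()
void client.stream({ messages: [{ speaker: 'human', text: 'hi' }], stream: false }, {
    onChange: text => console.log('text:', text),
    onComplete: () => console.log('done'),
    onError: error => console.error(error),
})
```

Making the non-streaming method abstract, as the commit message describes for `_fetchWithCallbacks`, forces every client subclass to provide its own implementation rather than silently falling back to the streaming parser.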

CLOSE https://linear.app/sourcegraph/issue/CODY-3681/improve-support-for-previewexperimental-models

Related to https://github.com/sourcegraph/sourcegraph/pull/323

- Added `OpenAI o1` and `OpenAI o1 mini` to the dropdown list, where users can join the waitlist
  - Clicking `Join Waitlist` will open the blog post, fire a telemetry event called `cody.joinLlmWaitlist`, and update the `Join Waitlist` label to `On Waitlist`
- Added a new `ModelTag.StreamDisabled` tag to indicate models that do not support streaming
- Updated the `AssistantMessageCell` and `HumanMessageEditor` components to handle models without streaming support
- Displayed `Try again with a different model` instead of `Try again with different context` below each assistant response
- Updated the `ModelSelectField` component to display an "Early Access" badge for models with the `ModelTag.Preview` tag
- Expanded the `ModelRef` interface and `ModelsService` to better handle model ID matching
- Added a new `chatSession` command to the webview message protocol, with `duplicate` and `new` actions
- Added a `duplicateSession` method in the `ChatController` to create a new session with a unique ID based on the current session
- Updated the `ContextFocusActions` component to use the new `chatSession` command when the "Try again with a different model" button is clicked
- Models with the `ModelTag.StreamDisabled` tag will no longer display the initial codebase context to avoid longer processing times.
- Updated the `OpenAI o1` and `OpenAI o1 mini` models to use the stable versions instead of the `-latest` versions

## Test plan

Build from this branch and verify the following in debug mode:

- `OpenAI o1` & `OpenAI o1 mini` are added to the model dropdown list
- Click the `Join Waitlist` label
- The `Join Waitlist` label now changes to `On Waitlist`
- When using these models:
  - `Model without streaming support takes longer to response.` is displayed while waiting for the LLM response
  - `Try again with different context` is no longer shown underneath the LLM response; `Try again with a different model` is shown instead

## Changelog

feat(chat): add support for preview models

`Gemini 1.5 Pro Latest` & `Gemini 1.5 Flash Latest`

feat(chat): Added ability to duplicate chat sessions
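Duplicating chat sessions relies on the `chatSession` webview command (with `duplicate` and `new` actions) and the `ChatController.duplicateSession` method described in this PR, which the "Try again with a different model" button in `ContextFocusActions` triggers. Here is a minimal, hypothetical sketch of that message handling; the message shapes, the `ChatControllerSketch` class, and the `<id>-copy-<n>` ID scheme are assumptions, not Cody's actual implementation.

```typescript
// Hypothetical message and session shapes; only the names chatSession,
// duplicate, new and duplicateSession come from the PR description.
type WebviewMessage =
    | { command: 'chatSession'; action: 'duplicate' | 'new'; sessionID?: string }
    | { command: 'event'; eventName: string }

interface ChatSession {
    id: string
    messages: string[]
}

class ChatControllerSketch {
    private sessions = new Map<string, ChatSession>()
    public current: ChatSession

    constructor(initial: ChatSession) {
        this.current = initial
        this.sessions.set(initial.id, initial)
    }

    // Route chatSession commands from the webview; ignore other commands.
    public handle(message: WebviewMessage): void {
        if (message.command !== 'chatSession') {
            return
        }
        if (message.action === 'duplicate') {
            this.duplicateSession(message.sessionID ?? this.current.id)
        } else {
            this.newSession()
        }
    }

    // Copy an existing session's history into a new session whose ID is
    // derived from the source ID and not already used by this controller.
    public duplicateSession(sourceID: string): ChatSession {
        const source = this.sessions.get(sourceID)
        if (!source) {
            throw new Error(`unknown session: ${sourceID}`)
        }
        const copy: ChatSession = {
            id: this.uniqueID(`${source.id}-copy`),
            messages: [...source.messages],
        }
        return this.activate(copy)
    }

    // Start an empty session.
    public newSession(): ChatSession {
        return this.activate({ id: this.uniqueID('session'), messages: [] })
    }

    private activate(session: ChatSession): ChatSession {
        this.sessions.set(session.id, session)
        this.current = session
        return session
    }

    // Append the smallest counter that yields an ID not already in use.
    private uniqueID(base: string): string {
        let n = 1
        while (this.sessions.has(`${base}-${n}`)) {
            n++
        }
        return `${base}-${n}`
    }
}

const controller = new ChatControllerSketch({ id: 'chat-a', messages: ['What changed?'] })
controller.handle({ command: 'chatSession', action: 'duplicate' })
console.log(controller.current.id) // chat-a-copy-1
```

Copying the message array rather than sharing it lets the duplicated session diverge (for example, when retried with a different model) without mutating the original conversation.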