
Hybrid parallel MPI #1009



void PostP2PRecvs(CGeometry *geometry, CConfig *config, unsigned short commType,
                  unsigned short countPerPoint, bool val_reverse) const;


A consequence of this is that the current count-per-point needs to be explicitly passed to a few routines, whereas before we could always rely on the maximum value.


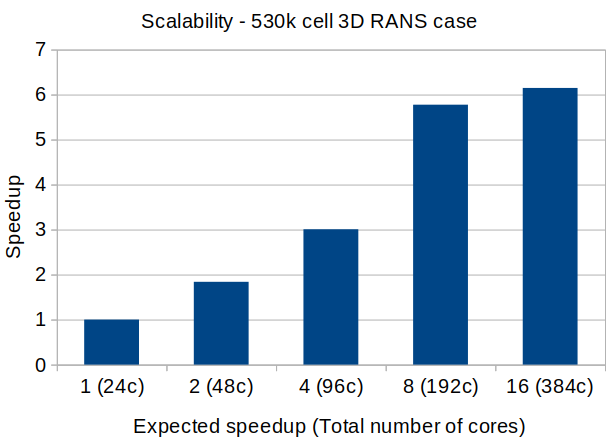

This is the sort of scalability (in terms of time to solution, not per iteration) we get now.

Edit: The results at 192 cores are actually better. Performance depends on the position of the cluster nodes in the network, and the updated comparison is apples to apples.

talbring

left a comment


Thanks @pcarruscag! Just two small comments below.

CGeometry::CGeometry(void) :
  size(SU2_MPI::GetSize()),
  rank(SU2_MPI::GetRank()) {

}


The requested spring cleaning is done; I think I'll stop here.

economon

left a comment


LGTM

Proposed Changes

This makes the routines InitiateComms and CompleteComms (and their periodic counterparts) safe to call in parallel. Until now they had to be guarded inside SU2_OMP_MASTER sections.

The calls to MPI are still only made by the master thread (funneled communication) but the buffers are packed and unpacked by all threads.
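To illustrate the funneled pattern described above, here is a minimal sketch and not the SU2 implementation. `FakeExchange` and `ExchangeFunneled` are hypothetical names, the "communication" is a plain copy standing in for the MPI calls, and the sketch opens its own parallel region for brevity, whereas the PR's routines are meant to be called from inside an existing one. Without OpenMP enabled the pragmas are ignored and the code runs serially.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical stand-in for the MPI send/receive pair; a real code would
// post the non-blocking MPI calls here. Only one thread may call this.
void FakeExchange(const std::vector<double>& sendBuf, std::vector<double>& recvBuf) {
  assert(sendBuf.size() == recvBuf.size());
  std::copy(sendBuf.begin(), sendBuf.end(), recvBuf.begin());
}

// Funneled communication: all threads pack and unpack, the master communicates.
void ExchangeFunneled(const std::vector<double>& field, std::vector<double>& result) {
  const long n = static_cast<long>(field.size());
  std::vector<double> sendBuf(n), recvBuf(n);
  result.resize(n);

  #pragma omp parallel
  {
    // Pack: the loop iterations are shared among all threads.
    #pragma omp for
    for (long i = 0; i < n; ++i) sendBuf[i] = field[i];
    // The implicit barrier at the end of "omp for" guarantees the buffer
    // is fully packed before the master thread communicates.

    #pragma omp master
    FakeExchange(sendBuf, recvBuf);

    // "master" has no implied barrier, so wait explicitly before unpacking.
    #pragma omp barrier

    // Unpack: again shared among all threads.
    #pragma omp for
    for (long i = 0; i < n; ++i) result[i] = recvBuf[i];
  }
}
```

Calling `ExchangeFunneled(field, out)` leaves `out` equal to `field`, since the stand-in exchange is just a copy. The point of the sketch is the ordering: pack, barrier, master-only communication, barrier, unpack.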

I also made a slight change that seems to make communications more efficient. We were always communicating the entire buffer, which is sized for the maximum number of variables per point, because the data was packed like this:

`o o o o _ _ _ _ o o o o _ ...` (count = 4, maxCount = 8)

I changed it to:

`o o o o o o o o ... _ _ _ _ ...`

which allows only part of the buffer to be communicated. (The maximum size, `nPrimVarGrad*nDim*2`, is actually quite large compared to the median `nVar`.)

In the process I also had to make a few more CGeometry routines thread safe.
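The two buffer layouts described above can be sketched as follows. This is an illustrative example and not the SU2 code: `PackStrided`, `PackContiguous`, and `UsedEntries` are hypothetical helpers, and the buffer is a plain `std::vector<double>` sized for `maxCount` values per point.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using PointData = std::vector<std::vector<double>>;

// Old layout (hypothetical helper): each point owns a stride of maxCount
// entries, so unused slots are interleaved with the data.
std::vector<double> PackStrided(const PointData& points, std::size_t count,
                                std::size_t maxCount) {
  assert(count <= maxCount);
  std::vector<double> buffer(points.size() * maxCount, 0.0);
  for (std::size_t i = 0; i < points.size(); ++i)
    for (std::size_t j = 0; j < count; ++j)
      buffer[i * maxCount + j] = points[i][j];
  return buffer;
}

// New layout (hypothetical helper): the stride is the current count, so all
// used entries sit at the front and the unused slots are at the end.
std::vector<double> PackContiguous(const PointData& points, std::size_t count,
                                   std::size_t maxCount) {
  assert(count <= maxCount);
  std::vector<double> buffer(points.size() * maxCount, 0.0);
  for (std::size_t i = 0; i < points.size(); ++i)
    for (std::size_t j = 0; j < count; ++j)
      buffer[i * count + j] = points[i][j];
  return buffer;
}

// With the contiguous layout only this many leading entries need to be sent.
std::size_t UsedEntries(std::size_t nPoints, std::size_t count) {
  return nPoints * count;
}
```

With the strided layout the whole buffer has to be sent, because the data is spread across it. With the contiguous layout the message length can be `UsedEntries(nPoints, count)`, which is why the current count per point has to be known by the routines that post the sends and receives.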

Related Work

#789

Resolves #1011

PR Checklist