-

Notifications

You must be signed in to change notification settings - Fork 918

Hybrid Parallel Compressible RANS Solvers #861

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

…id_parallel_and_SIMD

…ver residual routines

…structure with loose files

…m/su2code/SU2 into hybrid_parallel_compressible_rans

…compressible_rans

talbring

left a comment

talbring

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks @pcarruscag !

Quite a lot of changes in this PR. I like the implementation of the OpenMPI structures and the possibility to explore the application for RANS problems. We just have to make sure that people are aware of certain constructs. However, the only places where some special things occur are in the linear solvers and the limiter computations (which are now separate from the solvers anyway). Like I told you already, we have to find a place to put all your documentation that you provided here on github for people to easily find it. We also planning to have a virtual meeting where you can give some more details on the implementation. Furthermore, there will be probably a small session at the conference in June on these topics.

Thanks again for this contribution. @ALL: If there are any questions or problems when merging, please don't hesitate to contact @pcarruscag or myself.

| #include "../../include/numerics/template.hpp" | ||

| #include "../../include/numerics/transition.hpp" | ||

| #include "../../include/numerics/heat.hpp" | ||

| #include "../../include/numerics/flow/convection/roe.hpp" | ||

| #include "../../include/numerics/flow/convection/fds.hpp" | ||

| #include "../../include/numerics/flow/convection/fvs.hpp" | ||

| #include "../../include/numerics/flow/convection/cusp.hpp" | ||

| #include "../../include/numerics/flow/convection/hllc.hpp" | ||

| #include "../../include/numerics/flow/convection/ausm_slau.hpp" | ||

| #include "../../include/numerics/flow/convection/centered.hpp" | ||

| #include "../../include/numerics/flow/flow_diffusion.hpp" | ||

| #include "../../include/numerics/flow/flow_sources.hpp" | ||

| #include "../../include/numerics/continuous_adjoint/adj_convection.hpp" | ||

| #include "../../include/numerics/continuous_adjoint/adj_diffusion.hpp" | ||

| #include "../../include/numerics/continuous_adjoint/adj_sources.hpp" | ||

| #include "../../include/numerics/turbulent/turb_convection.hpp" | ||

| #include "../../include/numerics/turbulent/turb_diffusion.hpp" | ||

| #include "../../include/numerics/turbulent/turb_sources.hpp" | ||

| #include "../../include/numerics/elasticity/CFEAElasticity.hpp" | ||

| #include "../../include/numerics/elasticity/CFEALinearElasticity.hpp" | ||

| #include "../../include/numerics/elasticity/CFEANonlinearElasticity.hpp" | ||

| #include "../../include/numerics/elasticity/nonlinear_models.hpp" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for splitting the numerics classes. I am gonna create a factory class in #881 to avoid the includes here in the driver.

| #include "../../include/integration/CSingleGridIntegration.hpp" | ||

| #include "../../include/integration/CMultiGridIntegration.hpp" | ||

| #include "../../include/integration/CStructuralIntegration.hpp" | ||

| #include "../../include/integration/CFEM_DG_Integration.hpp" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Factory class is coming in #881. Thanks for splitting!

|

Thanks for the review @talbring I'm thinking of creating a page under developer docs for this OpenMP stuff, brief introduction (with links to external resources), some applied "theory" to SU2, explanation of the high level design, general do's and don'ts, some documentation of the mechanics that allow hiding #pragmas and #ifdefs, and finally links to all related PR's and issues which will serve to document the implementation. How does that sound? |

…compressible_rans

Proposed Changes

Extend the MPI+Threads framework to CEulerSolver, CNSSolver and the CTurb***Solver(s).

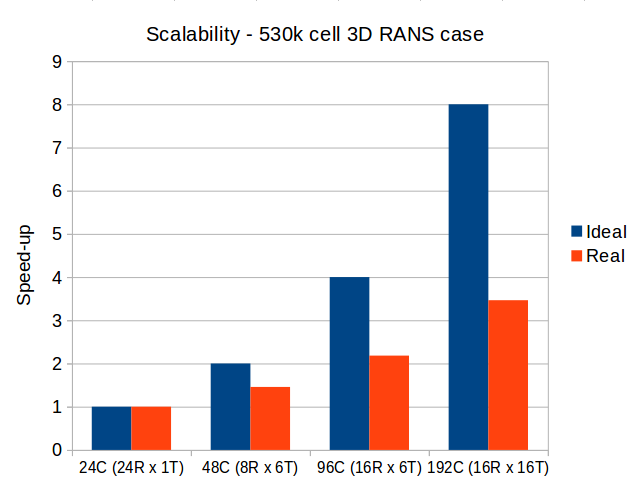

I can finally start showing what this effort is about in pictures, this is how the relatively small benchmark case from #716 scales on Imperial's cluster:

C is for cores, R for MPI ranks, and T for OpenMP threads per rank.

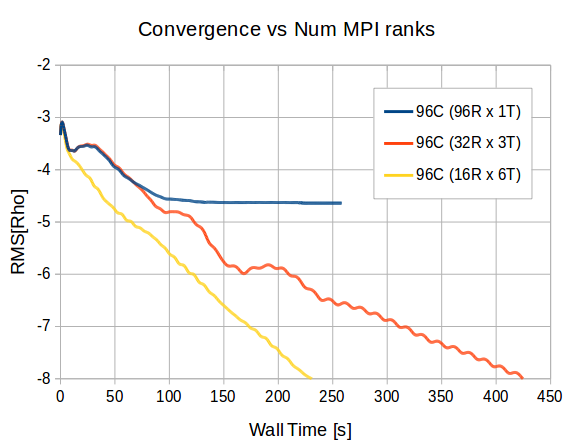

It may not look like much close to the ideal scaling but on 192 cores there are only 2.7k cells per core, and at different level of "hybridisation" this is what you get:

As mentioned in #789 this behaviour is due to the worse quality / quantity of coarse grids that can be generated as the domain is decomposed further and further.

EDIT

This is finished for now, apart from the odd routine that I will document below (and all the boundary conditions) every method called in

SingleGrid_IterationandMultiGrid_Iteration(including prolongations, smoothings and whatnot) is hybrid parallel.Threads are started in those functions which means that for a RANS case we enter parallel regions only twice per iteration, this should give minimal overhead, I will update the scalability results once they converge (statistically :) )

I will document the changes by "themes" below.

Related Work

Another snapshot of #824

PR Checklist