
Cluster Provider development

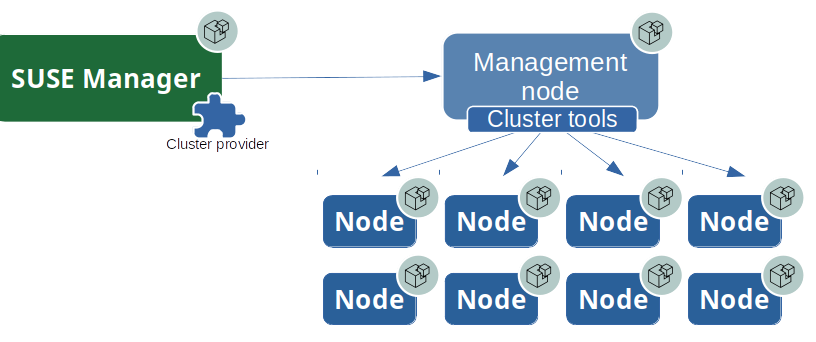

Uyuni can manage not only individual systems but also clusters of computers. The initial implementation of the clustering support was introduced by https://github.com/uyuni-project/uyuni/pull/2070. The target for the first version of the clustering support is SUSE CaaS Platform. However, the intention is to support other kinds of clusters as well.

Uyuni uses a uniform interface to access a cluster. Currently this interface exposes the following operations:

- listing the nodes of a cluster

- joining a new node to the cluster

- removing a node from the cluster

- upgrading the entire cluster

This interface is implemented by a component called the Cluster Provider. Its role is to implement the cluster operations using the tools and APIs that are specific to that type of cluster (e.g. skuba and kubectl for SUSE CaaSP).

Each cluster type will have its own Cluster Provider that knows the specifics of that particular clustering technology.
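
The four operations listed above can be pictured as a small abstract interface. The following is only a minimal sketch: the class names `ClusterProvider` and `InMemoryProvider` and the method signatures are assumptions made for illustration, not the actual Uyuni code.

```python
from abc import ABC, abstractmethod
from typing import List


class ClusterProvider(ABC):
    """Hypothetical uniform interface; names and signatures are illustrative only."""

    @abstractmethod
    def list_nodes(self) -> List[str]:
        """Return the names of the nodes in the cluster."""

    @abstractmethod
    def join_node(self, node: str) -> None:
        """Join a new node to the cluster."""

    @abstractmethod
    def remove_node(self, node: str) -> None:
        """Remove a node from the cluster."""

    @abstractmethod
    def upgrade_cluster(self) -> None:
        """Upgrade the entire cluster."""


class InMemoryProvider(ClusterProvider):
    """Toy provider that keeps its state in memory instead of calling real cluster tools."""

    def __init__(self, nodes=None, version=1):
        self.nodes = list(nodes or [])
        self.version = version

    def list_nodes(self):
        return list(self.nodes)

    def join_node(self, node):
        # Joining an already known node is treated as a no-op in this sketch.
        if node not in self.nodes:
            self.nodes.append(node)

    def remove_node(self, node):
        self.nodes.remove(node)

    def upgrade_cluster(self):
        self.version += 1
```

A real provider would replace the in-memory list with calls to the native tools of its cluster type, while callers keep using the same four methods.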

The main components are:

- Cluster Provider: metadata, states and custom modules specific to the cluster type

- Salt: used to integrate with the native cluster management tools and to execute the cluster operations

- Management node: a system that has the native cluster management tools installed and is registered to Uyuni. Must be able to access the cluster.

- Cluster nodes: must be registered to Uyuni in order to be managed

Under the hood, a Cluster Provider uses Salt to implement the cluster operations mentioned above. This is done by integrating the native cluster management tools with Salt.

There are two execution modules:

- the `mgrclusters.py` execution module. This is called directly by Uyuni. It's just a thin wrapper that provides the common interface used by Uyuni. It delegates to the specific cluster module to do the actual work.

- the cluster-specific execution modules (e.g. `mgr_caasp_manager.py`). These access the cluster using the specific tools and APIs.
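
The delegation between the two kinds of modules can be sketched as follows. In a real execution module, Salt injects the `__salt__` dictionary, which maps `"module.function"` names to callables; here it is replaced by a plain dict so the sketch runs on its own. The function names, the parameters and the shape of the returned data are assumptions for illustration, not the actual contents of `mgrclusters.py`.

```python
def _fake_caasp_list_nodes(**params):
    # Hypothetical provider function; a real one would call the native cluster tools.
    return {"node-1": {"role": "master"}, "node-2": {"role": "worker"}}


# Stand-in for the __salt__ dictionary that Salt normally injects into execution modules.
__salt__ = {"mgr_caasp_manager.list_nodes": _fake_caasp_list_nodes}


def list_nodes(provider_module, params=None):
    """Thin wrapper: forward the call to '<provider_module>.list_nodes'."""
    func_name = "{}.list_nodes".format(provider_module)
    if func_name not in __salt__:
        raise KeyError("cluster provider module not available: " + func_name)
    return __salt__[func_name](**(params or {}))
```

With this shape, the wrapper needs no knowledge of any particular cluster technology: the name of the provider module is the only thing that changes between cluster types.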

The Cluster Provider must provide specific metadata so that it can be used inside Uyuni. The metadata must be installed in `/usr/share/susemanager/cluster-providers/metadata/<cluster_provider_name>/metadata.yml` in order for the cluster provider to be detected.

Any custom states referenced in the metadata must be installed under /usr/share/susemanager/cluster-providers/states/<cluster_provider_name>/.

Example file structure for the caasp cluster provider:

    /usr/share/susemanager/cluster-providers/
    ├── metadata
    │   └── caasp
    │       ├── metadata.yml          # <= cluster provider metadata
    │       ├── join_node.yml         # <= join_node formula form
    │       ├── remove_node.yml       # <= remove_node formula form
    │       └── upgrade_cluster.yml   # <= upgrade_cluster formula form
    └── states
        └── caasp                     # <= specific states hooked before/after cluster operations
            ├── init_ssh_agent.sls
            └── kill_ssh_agent.sls
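
The directory layout described above suggests how providers could be detected: every subdirectory of `metadata/` that contains a `metadata.yml` counts as one provider. The sketch below illustrates that rule. The helper names `discover_providers` and `states_dir` are hypothetical, and the real detection logic in Uyuni may differ.

```python
from pathlib import Path

# Installation root taken from the paths described above.
DEFAULT_ROOT = Path("/usr/share/susemanager/cluster-providers")


def discover_providers(root=DEFAULT_ROOT):
    """Return {provider_name: path to metadata.yml} for each provider found under root."""
    metadata_dir = Path(root) / "metadata"
    if not metadata_dir.is_dir():
        return {}
    found = {}
    for entry in sorted(metadata_dir.iterdir()):
        metadata_file = entry / "metadata.yml"
        # A directory without metadata.yml is not treated as a provider.
        if entry.is_dir() and metadata_file.is_file():
            found[entry.name] = metadata_file
    return found


def states_dir(provider_name, root=DEFAULT_ROOT):
    """Directory where the custom states of a provider are expected to live."""
    return Path(root) / "states" / provider_name
```

For the example layout, `discover_providers()` would return a single entry keyed by `caasp`.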

The metadata is a YAML file with the following format:

    name: <The Cluster Provider Name>
    description:
      <Description>
    formulas:  # formula forms used by this cluster provider
      settings:  # formula for storing the settings used to access the cluster
        name: <settings formulas>
      join_node:
        name: <join_nodes_formula>  # formula form for joining nodes
        source: cluster-provider|system  # formula type:
                                         #   system - globally available formula
                                         #   cluster-provider - stored in the same directory as this yaml file
        data:  # Initial data for the form. The structure mirrors that of the form layout data.
               # Predefined variables (only available if the 'cluster' and 'nodes' parameters are passed
               # into the REST call that fetches the initial form data):
               #   cluster_settings - the values stored in the 'settings' formula
               #   nodes - list of MinionServer instances corresponding to the ids passed into the `nodes` parameter
          # E.g. from the CaaSP metadata:
          use_ssh_agent: cluster_settings.use_ssh_agent  # <= predefined variable 'cluster_settings'
          ssh_auth_sock: cluster_settings.ssh_auth_sock
          nodes:
            $type: edit-group
            $value: nodes  # <= predefined variable 'nodes'
            $item: node
            $prototype:
              node_name: node.hostname
              target: node.hostname
      remove_node:
        name: <remove_nodes_formula>  # formula form for removing nodes
        source: cluster-provider|system  # formula type
        data:  # Initial data for the form.
          # ...
      upgrade_cluster:
        name: <upgrade_cluster_formula>  # formula for upgrading the cluster
        source: cluster-provider|system  # formula type
        data:  # Initial data for the form.
          # ...
    state_hooks:  # Cluster provider specific states to be "hooked" at certain predefined places
                  # in the Uyuni general cluster management states.
      join:  # hooks for the join nodes state
        before:  # before joining nodes
          - <cluster_provider_name.state>
        after:  # after joining nodes
          - ...
      remove:  # hooks for the remove nodes state
        before:  # before removing nodes
          - ...
        after:  # after removing nodes
          - ...
      upgrade:  # hooks for the cluster upgrade states
        before:  # before upgrading the cluster
          - ...
        after:  # after upgrading the cluster
          - ...
    management_node:
      match: ...  # Salt matcher expression for finding the available management nodes when adding a cluster to Uyuni
                  # See https://docs.saltstack.com/en/latest/topics/targeting/compound.html
    channels:
      required_packages:
        - <package_name>  # These packages are recommended to be available in the channels of a system
                          # before joining that system to a cluster.
                          # A warning will be shown if the packages are not available.
    ui:
      nodes_list:
        fields:
          - ...  # The fields to show for each node in the list of cluster nodes.
                 # The field names are specific to the cluster provider implementation.
      upgrade:
        show_plan: true|false  # Show the cluster upgrade plan in the UI before scheduling the upgrade

The cluster provider must be packaged as an RPM. See the uyuni-cluster-provider-caasp package for an example of how to do the packaging.
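
To illustrate the `state_hooks` section of the metadata, the sketch below shows one way the hooked states could be ordered around a general cluster operation: the `before` hooks first, then the core state, then the `after` hooks. The function `states_for_operation` and the core state name `core.join_nodes` are hypothetical. Whether the CaaSP provider hooks `caasp.init_ssh_agent` and `caasp.kill_ssh_agent` around the join operation is also an assumption, based only on the example file layout.

```python
def states_for_operation(metadata, operation, core_state):
    """Return the state names to apply, in order, for one cluster operation."""
    hooks = (metadata.get("state_hooks") or {}).get(operation) or {}
    before = list(hooks.get("before") or [])
    after = list(hooks.get("after") or [])
    return before + [core_state] + after


# Metadata as it might look after parsing metadata.yml into a dict (values are illustrative).
metadata = {
    "state_hooks": {
        "join": {
            "before": ["caasp.init_ssh_agent"],
            "after": ["caasp.kill_ssh_agent"],
        }
    }
}

join_states = states_for_operation(metadata, "join", "core.join_nodes")
```

Operations without any hooks in the metadata simply yield the core state on its own.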

Look at the following Uyuni RFCs for full details: