-

Notifications

You must be signed in to change notification settings - Fork 29k

[SPARK-42599][CONNECT][INFRA] Introduce dev/connect-jvm-client-mima-check instead of CompatibilitySuite

#40213

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[SPARK-42599][CONNECT][INFRA] Introduce dev/connect-jvm-client-mima-check instead of CompatibilitySuite

#40213

Conversation

|

|

||

| val clientClass = clientClassLoader.loadClass("org.apache.spark.sql.Dataset") | ||

| val sqlClass = sqlClassLoader.loadClass("org.apache.spark.sql.Dataset") | ||

| private def checkDatasetApiCompatibility(clientJar: File, sqlJar: File): Seq[String] = { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

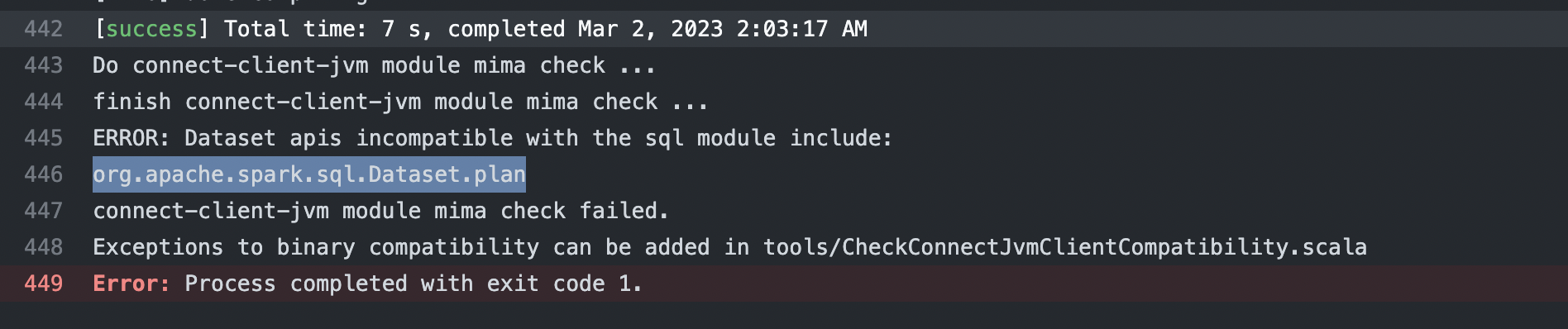

Note: When CheckConnectJvmClientCompatibility in the connect/jvm module, this check will fail due to #39712 (comment)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hi, I would recommend to use this PR. For this failing tests. Let's just delete this. I was planning to delete it after this PR get merged. The test case is already covered by mima test. Thanks so much. This change looks awesome.

|

LGTM |

dev/connect-jvm-client-mima-check

Outdated

| rm -f .connect-mima-check-result | ||

|

|

||

| echo "Build sql module ..." | ||

| build/sbt sql/package |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

You can issue a single command here, that will be a bit faster:

build/sbt "sql/package;connect-client-jvm/assembly;connect-client-jvm/Test/package"

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks ~ fixed

|

|

||

| CONNECT_TEST_CLASSPATH="$(build/sbt -DcopyDependencies=false "export connect-client-jvm/Test/fullClasspath" | grep jar | tail -n1)" | ||

| CONNECT_CLASSPATH="$(build/sbt -DcopyDependencies=false "export connect-client-jvm/fullClasspath" | grep jar | tail -n1)" | ||

| SQL_CLASSPATH="$(build/sbt -DcopyDependencies=false "export sql/fullClasspath" | grep jar | tail -n1)" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There will be duplication of entries here. Is that an issue?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, there will be duplicate entries, but there is no problem for the time being,

| } | ||

|

|

||

| def collectResult(): SparkResult[T] = sparkSession.execute(plan, encoder) | ||

| private[sql] def collectResult(): SparkResult[T] = sparkSession.execute(plan, encoder) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Why make this private[sql]? For advanced use cases this is a better way of interacting with results.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I guess it is because this is not an existing API?

I don't have a concrete idea now how to deal with new public API that is introduced by Spark Connect.

Maybe we do not expose such API for now given that they are new, and later change it to public if that is useful. I assume it will be hard to do the reversed way which becomes breaking changes.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

checkDatasetApiCompatibility('compatibility API tests: Dataset' in CompatibilitySuite) will find out the public Dataset api in connect/client module instead of in sql module. collectResult is such a case. There was no problem before because there was bad case in the test (classloader loaded the wrong class)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I guess it is because this is not an existing API?

Yes

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think If we accept similar changes (add some new public apis to connect/client module), we may need to maintain a whitelist(in checkDatasetApiCompatibility) or do not check this again

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

// For now we simply check the new methods is a subset of the old methods.

clientMethods.diff(sqlMethods)the new methods is a subset of the old method -> clientMethods.diff(sqlMethods) should be empty?

For example:

scala> val clientMethods = Seq(1,2,3)

clientMethods: Seq[Int] = List(1, 2, 3)

scala> val sqlMethods = Seq(1,2,3,4,5)

sqlMethods: Seq[Int] = List(1, 2, 3, 4, 5)

scala> clientMethods.diff(sqlMethods)

res0: Seq[Int] = List()

clientMethods is a subset of sqlMethods.

scala> val clientMethods = Seq(0,1,2,3)

clientMethods: Seq[Int] = List(0, 1, 2, 3)

scala> val sqlMethods = Seq(1,2,3,4,5)

sqlMethods: Seq[Int] = List(1, 2, 3, 4, 5)

scala> clientMethods.diff(sqlMethods)

res0: Seq[Int] = List(0)

clientMethods is not a subset of sqlMethods.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The original test code as follows. I think it is consistent with the current intention, am I right?

// For now we simply check the new methods is a subset of the old methods.

newMethods

.map(m => m.toString)

.foreach(method => {

assert(oldMethods.map(m => m.toString).contains(method))

})

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Please feel free to ignore me and work with @hvanhovell on this case. :)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@LuciferYang can we make an exception for the SparkResult class. Basically filter out the DataSet.collectResult method. That is all.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

let me add a exceptionMethods to checkDatasetApiCompatibility

|

@LuciferYang thanks for the hard work! Can we merge this one instead of #40191. |

|

Looks awesome overall! |

|

How would we deal with |

|

Ah I see now that we still need to update the excluding rules. |

| private def checkDatasetApiCompatibility(clientJar: File, sqlJar: File): Seq[String] = { | ||

|

|

||

| def methods(jar: File, className: String): Seq[String] = { | ||

| val classLoader: URLClassLoader = new URLClassLoader(Seq(jar.toURI.toURL).toArray) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

To be more safe about the class is loaded correctly. We can also use this class loader:

import org.apache.spark.util.ChildFirstURLClassLoader

val classLoader: URLClassLoader = new ChildFirstURLClassLoader(Seq(jar.toURI.toURL).toArray, this.getClass.getClassLoader)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

done

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is effective, the target class can also be loaded correctly in the connect module.

I checked it manually: removed the change of Dataset.scala and run dev/connect-jvm-client-mima-check, it can find out org.apache.spark.sql.Dataset.collectResult not exist in sql module.

Thanks @zhenlineo

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

And I do manually check for the original test compatibility API tests: Dataset, it also became failed:

failed.log (The error message is too large. I pasted it into the file)

Yes, just check in a different way :) |

|

The new change of |

| val clientMethods = methods(clientJar, className) | ||

| val sqlMethods = methods(sqlJar, className) | ||

| // Exclude some public methods that must be added through `exceptions` | ||

| val exceptionMethods = |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@hvanhovell exceptionMethods has been added and the revert the change of Dataset. Can we simply fix it like this first?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

If I can refine this check, I will give followup later

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

looks good

hvanhovell

left a comment

hvanhovell

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM

| test("compatibility API tests: Dataset") { | ||

| val clientClassLoader: URLClassLoader = new URLClassLoader(Seq(clientJar.toURI.toURL).toArray) | ||

| val sqlClassLoader: URLClassLoader = new URLClassLoader(Seq(sqlJar.toURI.toURL).toArray) | ||

| private def checkDatasetApiCompatibility(clientJar: File, sqlJar: File): Seq[String] = { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@zhenlineo is this only supposed to check Dataset? what about the other classes?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This test was initially added because we did not cover the Dataset in the mima check.

If we found such test is useful and want to expand to more classes we can run the mima check the other way around to ensure that our new code does not introduce more unwanted methods.

|

Alirght merging. |

…check` instead of `CompatibilitySuite` ### What changes were proposed in this pull request? The main changes of this pr as follows: - Refactor `CompatibilitySuite` as a new tool `CheckConnectJvmClientCompatibility` - Add a new shell script `dev/connect-jvm-client-mima-check`, it will use `CheckConnectJvmClientCompatibility` to check the mima compatibility of `connect-jvm-client` module. - Add `dev/connect-jvm-client-mima-check` to github task ### Why are the changes needed? For fix test error report in `[VOTE] Release Apache Spark 3.4.0 (RC1)` mail list. Testing `CompatibilitySuite` with maven requires some pre-work: ``` build/mvn clean install -DskipTests -pl sql/core -am build/mvn clean install -DskipTests -pl connector/connect/client/jvm -am ``` So if we run `build/mvn package test` to test whole project as before, `CompatibilitySuite` will failed as follows: ``` CompatibilitySuite: - compatibility MiMa tests *** FAILED *** java.lang.AssertionError: assertion failed: Failed to find the jar inside folder: /home/bjorn/spark-3.4.0/connector/connect/client/jvm/target at scala.Predef$.assert(Predef.scala:223) at org.apache.spark.sql.connect.client.util.IntegrationTestUtils$.findJar(IntegrationTestUtils.scala:67) at org.apache.spark.sql.connect.client.CompatibilitySuite.clientJar$lzycompute(CompatibilitySuite.scala:57) at org.apache.spark.sql.connect.client.CompatibilitySuite.clientJar(CompatibilitySuite.scala:53) at org.apache.spark.sql.connect.client.CompatibilitySuite.$anonfun$new$1(CompatibilitySuite.scala:69) at org.scalatest.OutcomeOf.outcomeOf(OutcomeOf.scala:85) at org.scalatest.OutcomeOf.outcomeOf$(OutcomeOf.scala:83) at org.scalatest.OutcomeOf$.outcomeOf(OutcomeOf.scala:104) at org.scalatest.Transformer.apply(Transformer.scala:22) at org.scalatest.Transformer.apply(Transformer.scala:20) ... - compatibility API tests: Dataset *** FAILED *** java.lang.AssertionError: assertion failed: Failed to find the jar inside folder: /home/bjorn/spark-3.4.0/connector/connect/client/jvm/target at scala.Predef$.assert(Predef.scala:223) at org.apache.spark.sql.connect.client.util.IntegrationTestUtils$.findJar(IntegrationTestUtils.scala:67) at org.apache.spark.sql.connect.client.CompatibilitySuite.clientJar$lzycompute(CompatibilitySuite.scala:57) at org.apache.spark.sql.connect.client.CompatibilitySuite.clientJar(CompatibilitySuite.scala:53) at org.apache.spark.sql.connect.client.CompatibilitySuite.$anonfun$new$7(CompatibilitySuite.scala:110) at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23) at org.scalatest.OutcomeOf.outcomeOf(OutcomeOf.scala:85) at org.scalatest.OutcomeOf.outcomeOf$(OutcomeOf.scala:83) at org.scalatest.OutcomeOf$.outcomeOf(OutcomeOf.scala:104) at org.scalatest.Transformer.apply(Transformer.scala:22) ``` So we need to fix this problem for developers. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Pass GitHub Actions Closes #40213 from LuciferYang/connect-client-compatibility-backup. Authored-by: yangjie01 <yangjie01@baidu.com> Signed-off-by: Herman van Hovell <herman@databricks.com> (cherry picked from commit 12beadb) Signed-off-by: Herman van Hovell <herman@databricks.com>

|

Thanks @hvanhovell @amaliujia @zhenlineo |

…check` instead of `CompatibilitySuite` ### What changes were proposed in this pull request? The main changes of this pr as follows: - Refactor `CompatibilitySuite` as a new tool `CheckConnectJvmClientCompatibility` - Add a new shell script `dev/connect-jvm-client-mima-check`, it will use `CheckConnectJvmClientCompatibility` to check the mima compatibility of `connect-jvm-client` module. - Add `dev/connect-jvm-client-mima-check` to github task ### Why are the changes needed? For fix test error report in `[VOTE] Release Apache Spark 3.4.0 (RC1)` mail list. Testing `CompatibilitySuite` with maven requires some pre-work: ``` build/mvn clean install -DskipTests -pl sql/core -am build/mvn clean install -DskipTests -pl connector/connect/client/jvm -am ``` So if we run `build/mvn package test` to test whole project as before, `CompatibilitySuite` will failed as follows: ``` CompatibilitySuite: - compatibility MiMa tests *** FAILED *** java.lang.AssertionError: assertion failed: Failed to find the jar inside folder: /home/bjorn/spark-3.4.0/connector/connect/client/jvm/target at scala.Predef$.assert(Predef.scala:223) at org.apache.spark.sql.connect.client.util.IntegrationTestUtils$.findJar(IntegrationTestUtils.scala:67) at org.apache.spark.sql.connect.client.CompatibilitySuite.clientJar$lzycompute(CompatibilitySuite.scala:57) at org.apache.spark.sql.connect.client.CompatibilitySuite.clientJar(CompatibilitySuite.scala:53) at org.apache.spark.sql.connect.client.CompatibilitySuite.$anonfun$new$1(CompatibilitySuite.scala:69) at org.scalatest.OutcomeOf.outcomeOf(OutcomeOf.scala:85) at org.scalatest.OutcomeOf.outcomeOf$(OutcomeOf.scala:83) at org.scalatest.OutcomeOf$.outcomeOf(OutcomeOf.scala:104) at org.scalatest.Transformer.apply(Transformer.scala:22) at org.scalatest.Transformer.apply(Transformer.scala:20) ... - compatibility API tests: Dataset *** FAILED *** java.lang.AssertionError: assertion failed: Failed to find the jar inside folder: /home/bjorn/spark-3.4.0/connector/connect/client/jvm/target at scala.Predef$.assert(Predef.scala:223) at org.apache.spark.sql.connect.client.util.IntegrationTestUtils$.findJar(IntegrationTestUtils.scala:67) at org.apache.spark.sql.connect.client.CompatibilitySuite.clientJar$lzycompute(CompatibilitySuite.scala:57) at org.apache.spark.sql.connect.client.CompatibilitySuite.clientJar(CompatibilitySuite.scala:53) at org.apache.spark.sql.connect.client.CompatibilitySuite.$anonfun$new$7(CompatibilitySuite.scala:110) at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23) at org.scalatest.OutcomeOf.outcomeOf(OutcomeOf.scala:85) at org.scalatest.OutcomeOf.outcomeOf$(OutcomeOf.scala:83) at org.scalatest.OutcomeOf$.outcomeOf(OutcomeOf.scala:104) at org.scalatest.Transformer.apply(Transformer.scala:22) ``` So we need to fix this problem for developers. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Pass GitHub Actions Closes apache#40213 from LuciferYang/connect-client-compatibility-backup. Authored-by: yangjie01 <yangjie01@baidu.com> Signed-off-by: Herman van Hovell <herman@databricks.com> (cherry picked from commit 12beadb) Signed-off-by: Herman van Hovell <herman@databricks.com>

What changes were proposed in this pull request?

The main changes of this pr as follows:

CompatibilitySuiteas a new toolCheckConnectJvmClientCompatibilitydev/connect-jvm-client-mima-check, it will useCheckConnectJvmClientCompatibilityto check the mima compatibility ofconnect-jvm-clientmodule.dev/connect-jvm-client-mima-checkto github taskWhy are the changes needed?

For fix test error report in

[VOTE] Release Apache Spark 3.4.0 (RC1)mail list.Testing

CompatibilitySuitewith maven requires some pre-work:So if we run

build/mvn package testto test whole project as before,CompatibilitySuitewill failed as follows:So we need to fix this problem for developers.

Does this PR introduce any user-facing change?

No

How was this patch tested?

Pass GitHub Actions