-

-

Notifications

You must be signed in to change notification settings - Fork 3.5k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Improve Queue Phase parallelization and other small optimizations #4899

Closed

Conversation

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

infmagic2047

reviewed

Jun 2, 2022

SkiFire13

reviewed

Jun 9, 2022

Co-authored-by: Giacomo Stevanato <giaco.stevanato@gmail.com>

bors bot

pushed a commit

that referenced

this pull request

Jun 23, 2022

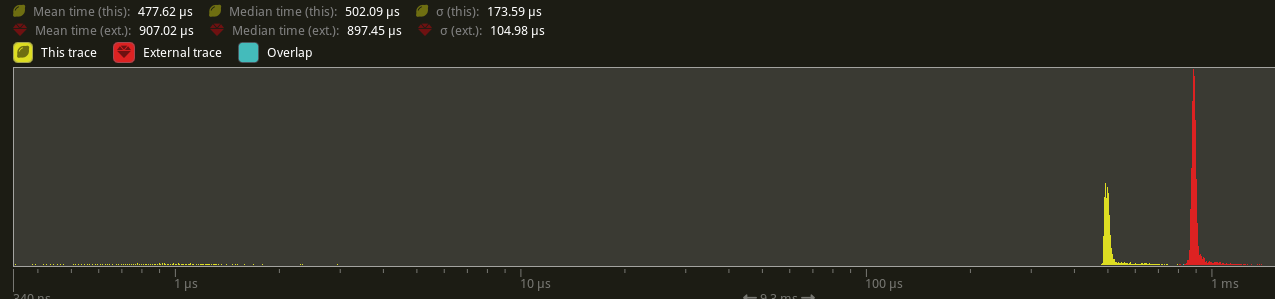

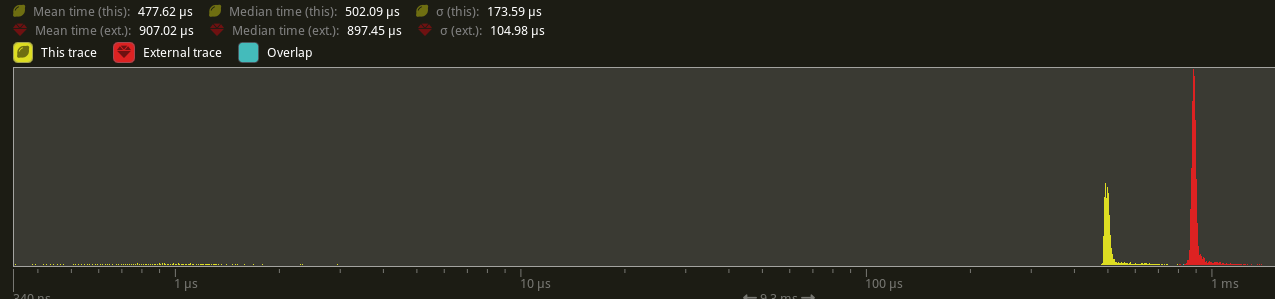

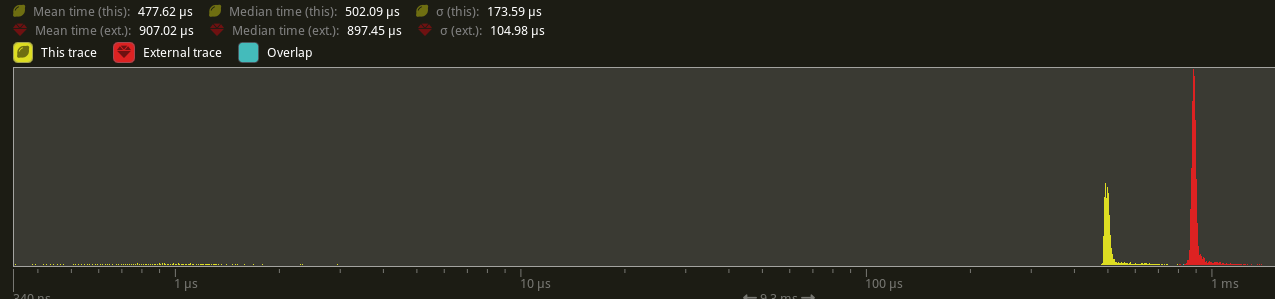

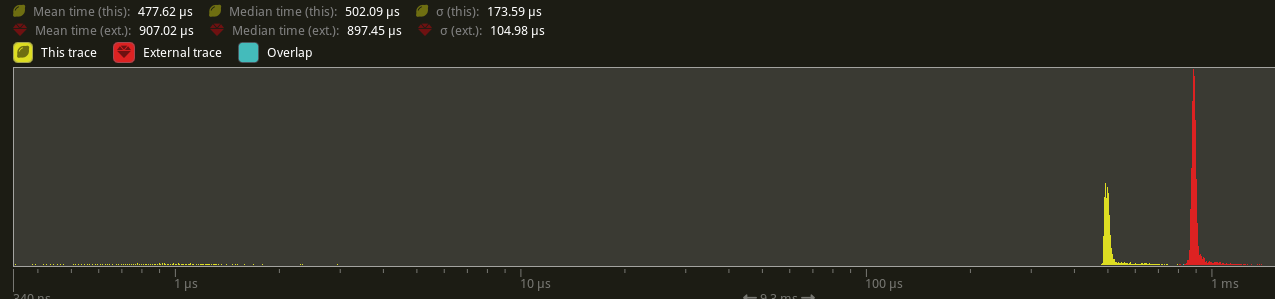

# Objective Partially addresses #4291. Speed up the sort phase for unbatched render phases. ## Solution Split out one of the optimizations in #4899 and allow implementors of `PhaseItem` to change what kind of sort is used when sorting the items in the phase. This currently includes Stable, Unstable, and Unsorted. Each of these corresponds to `Vec::sort_by_key`, `Vec::sort_unstable_by_key`, and no sorting at all. The default is `Unstable`. The last one can be used as a default if users introduce a preliminary depth prepass. ## Performance This will not impact the performance of any batched phases, as it is still using a stable sort. 2D's only phase is unchanged. All 3D phases are unbatched currently, and will benefit from this change. On `many_cubes`, where the primary phase is opaque, this change sees a speed up from 907.02us -> 477.62us, a 47.35% reduction.  ## Future Work There were prior discussions to add support for faster radix sorts in #4291, which in theory should be a `O(n)` instead of a `O(nlog(n))` time. [`voracious`](https://crates.io/crates/voracious_radix_sort) has been proposed, but it seems to be optimize for use cases with more than 30,000 items, which may be atypical for most systems. Another optimization included in #4899 is to reduce the size of a few of the IDs commonly used in `PhaseItem` implementations to shrink the types to make swapping/sorting faster. Both `CachedPipelineId` and `DrawFunctionId` could be reduced to `u32` instead of `usize`. Ideally, this should automatically change to use stable sorts when `BatchedPhaseItem` is implemented on the same phase item type, but this requires specialization, which may not land in stable Rust for a short while. --- ## Changelog Added: `PhaseItem::sort` ## Migration Guide RenderPhases now default to a unstable sort (via `slice::sort_unstable_by_key`). This can typically improve sort phase performance, but may produce incorrect batching results when implementing `BatchedPhaseItem`. To revert to the older stable sort, manually implement `PhaseItem::sort` to implement a stable sort (i.e. via `slice::sort_by_key`). Co-authored-by: Federico Rinaldi <gisquerin@gmail.com> Co-authored-by: Robert Swain <robert.swain@gmail.com> Co-authored-by: colepoirier <colepoirier@gmail.com>

inodentry

pushed a commit

to IyesGames/bevy

that referenced

this pull request

Aug 8, 2022

# Objective Partially addresses bevyengine#4291. Speed up the sort phase for unbatched render phases. ## Solution Split out one of the optimizations in bevyengine#4899 and allow implementors of `PhaseItem` to change what kind of sort is used when sorting the items in the phase. This currently includes Stable, Unstable, and Unsorted. Each of these corresponds to `Vec::sort_by_key`, `Vec::sort_unstable_by_key`, and no sorting at all. The default is `Unstable`. The last one can be used as a default if users introduce a preliminary depth prepass. ## Performance This will not impact the performance of any batched phases, as it is still using a stable sort. 2D's only phase is unchanged. All 3D phases are unbatched currently, and will benefit from this change. On `many_cubes`, where the primary phase is opaque, this change sees a speed up from 907.02us -> 477.62us, a 47.35% reduction.  ## Future Work There were prior discussions to add support for faster radix sorts in bevyengine#4291, which in theory should be a `O(n)` instead of a `O(nlog(n))` time. [`voracious`](https://crates.io/crates/voracious_radix_sort) has been proposed, but it seems to be optimize for use cases with more than 30,000 items, which may be atypical for most systems. Another optimization included in bevyengine#4899 is to reduce the size of a few of the IDs commonly used in `PhaseItem` implementations to shrink the types to make swapping/sorting faster. Both `CachedPipelineId` and `DrawFunctionId` could be reduced to `u32` instead of `usize`. Ideally, this should automatically change to use stable sorts when `BatchedPhaseItem` is implemented on the same phase item type, but this requires specialization, which may not land in stable Rust for a short while. --- ## Changelog Added: `PhaseItem::sort` ## Migration Guide RenderPhases now default to a unstable sort (via `slice::sort_unstable_by_key`). This can typically improve sort phase performance, but may produce incorrect batching results when implementing `BatchedPhaseItem`. To revert to the older stable sort, manually implement `PhaseItem::sort` to implement a stable sort (i.e. via `slice::sort_by_key`). Co-authored-by: Federico Rinaldi <gisquerin@gmail.com> Co-authored-by: Robert Swain <robert.swain@gmail.com> Co-authored-by: colepoirier <colepoirier@gmail.com>

james7132

added a commit

to james7132/bevy

that referenced

this pull request

Oct 28, 2022

# Objective Partially addresses bevyengine#4291. Speed up the sort phase for unbatched render phases. ## Solution Split out one of the optimizations in bevyengine#4899 and allow implementors of `PhaseItem` to change what kind of sort is used when sorting the items in the phase. This currently includes Stable, Unstable, and Unsorted. Each of these corresponds to `Vec::sort_by_key`, `Vec::sort_unstable_by_key`, and no sorting at all. The default is `Unstable`. The last one can be used as a default if users introduce a preliminary depth prepass. ## Performance This will not impact the performance of any batched phases, as it is still using a stable sort. 2D's only phase is unchanged. All 3D phases are unbatched currently, and will benefit from this change. On `many_cubes`, where the primary phase is opaque, this change sees a speed up from 907.02us -> 477.62us, a 47.35% reduction.  ## Future Work There were prior discussions to add support for faster radix sorts in bevyengine#4291, which in theory should be a `O(n)` instead of a `O(nlog(n))` time. [`voracious`](https://crates.io/crates/voracious_radix_sort) has been proposed, but it seems to be optimize for use cases with more than 30,000 items, which may be atypical for most systems. Another optimization included in bevyengine#4899 is to reduce the size of a few of the IDs commonly used in `PhaseItem` implementations to shrink the types to make swapping/sorting faster. Both `CachedPipelineId` and `DrawFunctionId` could be reduced to `u32` instead of `usize`. Ideally, this should automatically change to use stable sorts when `BatchedPhaseItem` is implemented on the same phase item type, but this requires specialization, which may not land in stable Rust for a short while. --- ## Changelog Added: `PhaseItem::sort` ## Migration Guide RenderPhases now default to a unstable sort (via `slice::sort_unstable_by_key`). This can typically improve sort phase performance, but may produce incorrect batching results when implementing `BatchedPhaseItem`. To revert to the older stable sort, manually implement `PhaseItem::sort` to implement a stable sort (i.e. via `slice::sort_by_key`). Co-authored-by: Federico Rinaldi <gisquerin@gmail.com> Co-authored-by: Robert Swain <robert.swain@gmail.com> Co-authored-by: colepoirier <colepoirier@gmail.com>

bors bot

pushed a commit

that referenced

this pull request

Dec 20, 2022

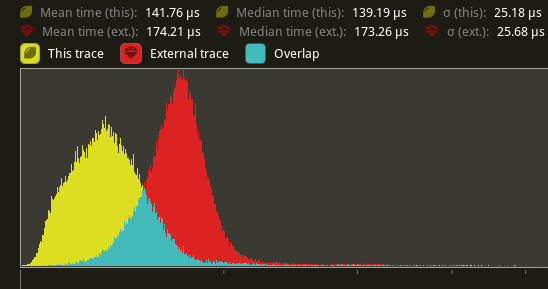

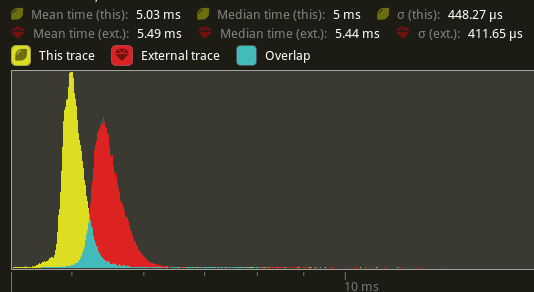

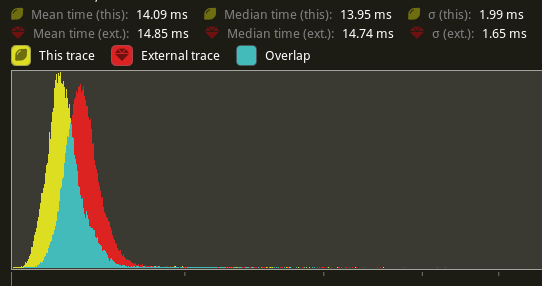

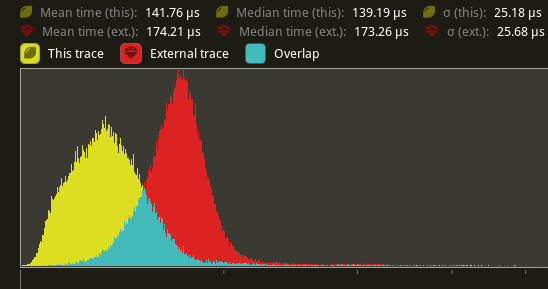

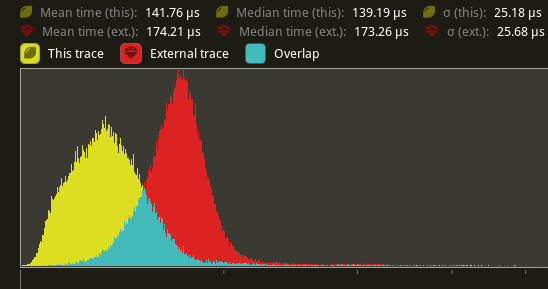

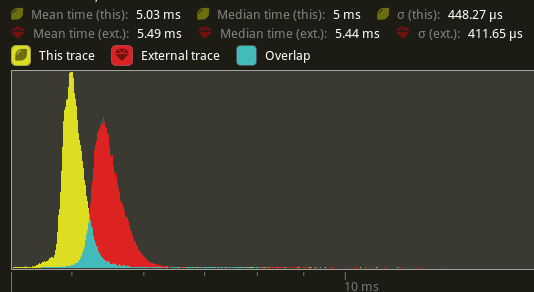

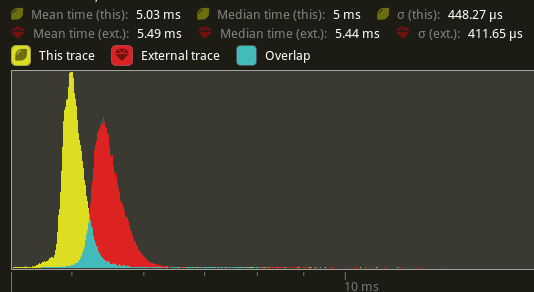

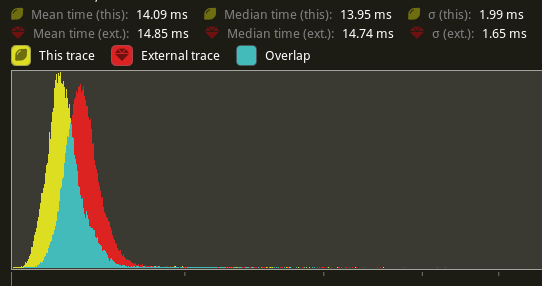

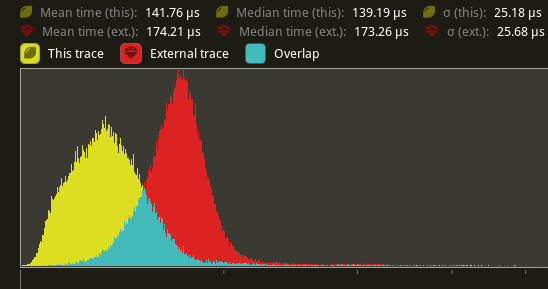

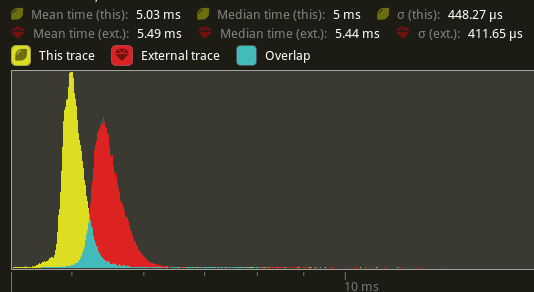

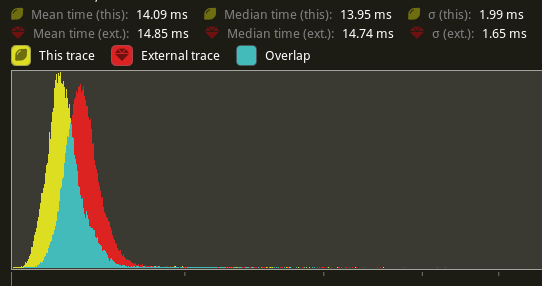

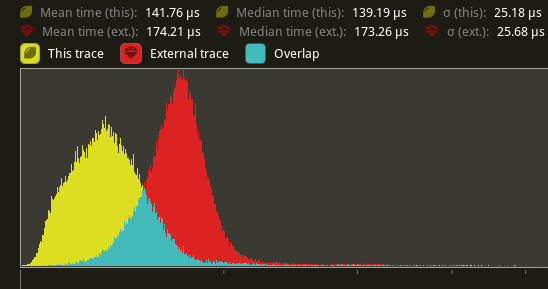

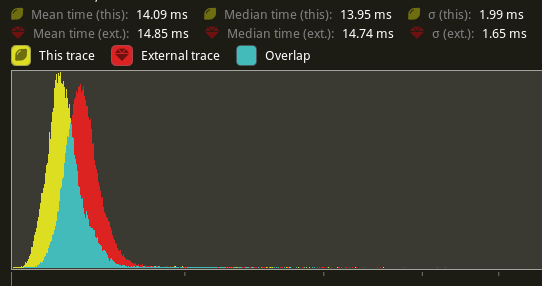

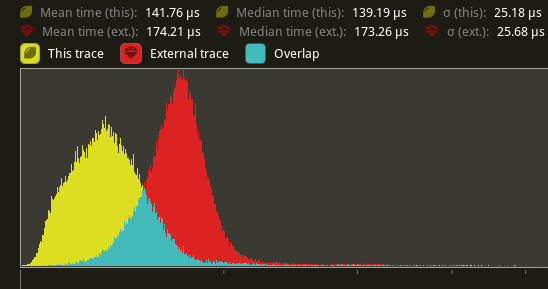

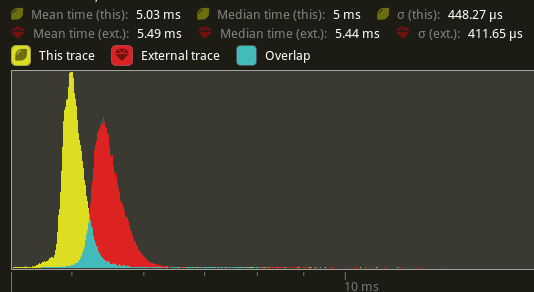

# Objective This includes one part of #4899. The aim is to improve CPU-side rendering performance by reducing the memory footprint and bandwidth required. ## Solution Shrink `DrawFunctionId` to `u32`. Enforce that `u32 as usize` conversions are always safe by forbidding compilation on 16-bit platforms. This shouldn't be a breaking change since #4736 disabled compilation of `bevy_ecs` on those platforms. Shrinking `DrawFunctionId` shrinks all of the `PhaseItem` types, which is integral to sort and render phase performance. Testing against `many_cubes`, the sort phase improved by 22% (174.21us -> 141.76us per frame).  The main opaque pass also imrproved by 9% (5.49ms -> 5.03ms)  Overall frame time improved by 5% (14.85ms -> 14.09ms)  There will be a followup PR that likewise shrinks `CachedRenderPipelineId` which should yield similar results on top of these improvements.

bors bot

pushed a commit

that referenced

this pull request

Dec 20, 2022

# Objective This includes one part of #4899. The aim is to improve CPU-side rendering performance by reducing the memory footprint and bandwidth required. ## Solution Shrink `DrawFunctionId` to `u32`. Enforce that `u32 as usize` conversions are always safe by forbidding compilation on 16-bit platforms. This shouldn't be a breaking change since #4736 disabled compilation of `bevy_ecs` on those platforms. Shrinking `DrawFunctionId` shrinks all of the `PhaseItem` types, which is integral to sort and render phase performance. Testing against `many_cubes`, the sort phase improved by 22% (174.21us -> 141.76us per frame).  The main opaque pass also imrproved by 9% (5.49ms -> 5.03ms)  Overall frame time improved by 5% (14.85ms -> 14.09ms)  There will be a followup PR that likewise shrinks `CachedRenderPipelineId` which should yield similar results on top of these improvements.

bors bot

pushed a commit

that referenced

this pull request

Dec 20, 2022

# Objective This includes one part of #4899. The aim is to improve CPU-side rendering performance by reducing the memory footprint and bandwidth required. ## Solution Shrink `DrawFunctionId` to `u32`. Enforce that `u32 as usize` conversions are always safe by forbidding compilation on 16-bit platforms. This shouldn't be a breaking change since #4736 disabled compilation of `bevy_ecs` on those platforms. Shrinking `DrawFunctionId` shrinks all of the `PhaseItem` types, which is integral to sort and render phase performance. Testing against `many_cubes`, the sort phase improved by 22% (174.21us -> 141.76us per frame).  The main opaque pass also imrproved by 9% (5.49ms -> 5.03ms)  Overall frame time improved by 5% (14.85ms -> 14.09ms)  There will be a followup PR that likewise shrinks `CachedRenderPipelineId` which should yield similar results on top of these improvements.

bors bot

pushed a commit

that referenced

this pull request

Dec 20, 2022

# Objective This includes one part of #4899. The aim is to improve CPU-side rendering performance by reducing the memory footprint and bandwidth required. ## Solution Shrink `DrawFunctionId` to `u32`. Enforce that `u32 as usize` conversions are always safe by forbidding compilation on 16-bit platforms. This shouldn't be a breaking change since #4736 disabled compilation of `bevy_ecs` on those platforms. Shrinking `DrawFunctionId` shrinks all of the `PhaseItem` types, which is integral to sort and render phase performance. Testing against `many_cubes`, the sort phase improved by 22% (174.21us -> 141.76us per frame).  The main opaque pass also imrproved by 9% (5.49ms -> 5.03ms)  Overall frame time improved by 5% (14.85ms -> 14.09ms)  There will be a followup PR that likewise shrinks `CachedRenderPipelineId` which should yield similar results on top of these improvements.

bors bot

pushed a commit

that referenced

this pull request

Dec 20, 2022

# Objective This includes one part of #4899. The aim is to improve CPU-side rendering performance by reducing the memory footprint and bandwidth required. ## Solution Shrink `DrawFunctionId` to `u32`. Enforce that `u32 as usize` conversions are always safe by forbidding compilation on 16-bit platforms. This shouldn't be a breaking change since #4736 disabled compilation of `bevy_ecs` on those platforms. Shrinking `DrawFunctionId` shrinks all of the `PhaseItem` types, which is integral to sort and render phase performance. Testing against `many_cubes`, the sort phase improved by 22% (174.21us -> 141.76us per frame).  The main opaque pass also imrproved by 9% (5.49ms -> 5.03ms)  Overall frame time improved by 5% (14.85ms -> 14.09ms)  There will be a followup PR that likewise shrinks `CachedRenderPipelineId` which should yield similar results on top of these improvements.

|

Closing in favor of #7205. |

|

There's still the other half of this that splays the RenderPhase into thread local queues. I'll open a separate PR for that. |

alradish

pushed a commit

to alradish/bevy

that referenced

this pull request

Jan 22, 2023

# Objective This includes one part of bevyengine#4899. The aim is to improve CPU-side rendering performance by reducing the memory footprint and bandwidth required. ## Solution Shrink `DrawFunctionId` to `u32`. Enforce that `u32 as usize` conversions are always safe by forbidding compilation on 16-bit platforms. This shouldn't be a breaking change since bevyengine#4736 disabled compilation of `bevy_ecs` on those platforms. Shrinking `DrawFunctionId` shrinks all of the `PhaseItem` types, which is integral to sort and render phase performance. Testing against `many_cubes`, the sort phase improved by 22% (174.21us -> 141.76us per frame).  The main opaque pass also imrproved by 9% (5.49ms -> 5.03ms)  Overall frame time improved by 5% (14.85ms -> 14.09ms)  There will be a followup PR that likewise shrinks `CachedRenderPipelineId` which should yield similar results on top of these improvements.

ItsDoot

pushed a commit

to ItsDoot/bevy

that referenced

this pull request

Feb 1, 2023

# Objective Partially addresses bevyengine#4291. Speed up the sort phase for unbatched render phases. ## Solution Split out one of the optimizations in bevyengine#4899 and allow implementors of `PhaseItem` to change what kind of sort is used when sorting the items in the phase. This currently includes Stable, Unstable, and Unsorted. Each of these corresponds to `Vec::sort_by_key`, `Vec::sort_unstable_by_key`, and no sorting at all. The default is `Unstable`. The last one can be used as a default if users introduce a preliminary depth prepass. ## Performance This will not impact the performance of any batched phases, as it is still using a stable sort. 2D's only phase is unchanged. All 3D phases are unbatched currently, and will benefit from this change. On `many_cubes`, where the primary phase is opaque, this change sees a speed up from 907.02us -> 477.62us, a 47.35% reduction.  ## Future Work There were prior discussions to add support for faster radix sorts in bevyengine#4291, which in theory should be a `O(n)` instead of a `O(nlog(n))` time. [`voracious`](https://crates.io/crates/voracious_radix_sort) has been proposed, but it seems to be optimize for use cases with more than 30,000 items, which may be atypical for most systems. Another optimization included in bevyengine#4899 is to reduce the size of a few of the IDs commonly used in `PhaseItem` implementations to shrink the types to make swapping/sorting faster. Both `CachedPipelineId` and `DrawFunctionId` could be reduced to `u32` instead of `usize`. Ideally, this should automatically change to use stable sorts when `BatchedPhaseItem` is implemented on the same phase item type, but this requires specialization, which may not land in stable Rust for a short while. --- ## Changelog Added: `PhaseItem::sort` ## Migration Guide RenderPhases now default to a unstable sort (via `slice::sort_unstable_by_key`). This can typically improve sort phase performance, but may produce incorrect batching results when implementing `BatchedPhaseItem`. To revert to the older stable sort, manually implement `PhaseItem::sort` to implement a stable sort (i.e. via `slice::sort_by_key`). Co-authored-by: Federico Rinaldi <gisquerin@gmail.com> Co-authored-by: Robert Swain <robert.swain@gmail.com> Co-authored-by: colepoirier <colepoirier@gmail.com>

ItsDoot

pushed a commit

to ItsDoot/bevy

that referenced

this pull request

Feb 1, 2023

# Objective This includes one part of bevyengine#4899. The aim is to improve CPU-side rendering performance by reducing the memory footprint and bandwidth required. ## Solution Shrink `DrawFunctionId` to `u32`. Enforce that `u32 as usize` conversions are always safe by forbidding compilation on 16-bit platforms. This shouldn't be a breaking change since bevyengine#4736 disabled compilation of `bevy_ecs` on those platforms. Shrinking `DrawFunctionId` shrinks all of the `PhaseItem` types, which is integral to sort and render phase performance. Testing against `many_cubes`, the sort phase improved by 22% (174.21us -> 141.76us per frame).  The main opaque pass also imrproved by 9% (5.49ms -> 5.03ms)  Overall frame time improved by 5% (14.85ms -> 14.09ms)  There will be a followup PR that likewise shrinks `CachedRenderPipelineId` which should yield similar results on top of these improvements.

github-merge-queue bot

pushed a commit

that referenced

this pull request

Feb 19, 2024

# Objective There's a repeating pattern of `ThreadLocal<Cell<Vec<T>>>` which is very useful for low overhead, low contention multithreaded queues that have cropped up in a few places in the engine. This pattern is surprisingly useful when building deferred mutation across multiple threads, as noted by it's use in `ParallelCommands`. However, `ThreadLocal<Cell<Vec<T>>>` is not only a mouthful, it's also hard to ensure the thread-local queue is replaced after it's been temporarily removed from the `Cell`. ## Solution Wrap the pattern into `bevy_utils::Parallel<T>` which codifies the entire pattern and ensures the user follows the contract. Instead of fetching indivdual cells, removing the value, mutating it, and replacing it, `Parallel::get` returns a `ParRef<'a, T>` which contains the temporarily removed value and a reference back to the cell, and will write the mutated value back to the cell upon being dropped. I would like to use this to simplify the remaining part of #4899 that has not been adopted/merged. --- ## Changelog TODO --------- Co-authored-by: Joseph <21144246+JoJoJet@users.noreply.github.com>

msvbg

pushed a commit

to msvbg/bevy

that referenced

this pull request

Feb 26, 2024

# Objective There's a repeating pattern of `ThreadLocal<Cell<Vec<T>>>` which is very useful for low overhead, low contention multithreaded queues that have cropped up in a few places in the engine. This pattern is surprisingly useful when building deferred mutation across multiple threads, as noted by it's use in `ParallelCommands`. However, `ThreadLocal<Cell<Vec<T>>>` is not only a mouthful, it's also hard to ensure the thread-local queue is replaced after it's been temporarily removed from the `Cell`. ## Solution Wrap the pattern into `bevy_utils::Parallel<T>` which codifies the entire pattern and ensures the user follows the contract. Instead of fetching indivdual cells, removing the value, mutating it, and replacing it, `Parallel::get` returns a `ParRef<'a, T>` which contains the temporarily removed value and a reference back to the cell, and will write the mutated value back to the cell upon being dropped. I would like to use this to simplify the remaining part of bevyengine#4899 that has not been adopted/merged. --- ## Changelog TODO --------- Co-authored-by: Joseph <21144246+JoJoJet@users.noreply.github.com>

msvbg

pushed a commit

to msvbg/bevy

that referenced

this pull request

Feb 26, 2024

# Objective There's a repeating pattern of `ThreadLocal<Cell<Vec<T>>>` which is very useful for low overhead, low contention multithreaded queues that have cropped up in a few places in the engine. This pattern is surprisingly useful when building deferred mutation across multiple threads, as noted by it's use in `ParallelCommands`. However, `ThreadLocal<Cell<Vec<T>>>` is not only a mouthful, it's also hard to ensure the thread-local queue is replaced after it's been temporarily removed from the `Cell`. ## Solution Wrap the pattern into `bevy_utils::Parallel<T>` which codifies the entire pattern and ensures the user follows the contract. Instead of fetching indivdual cells, removing the value, mutating it, and replacing it, `Parallel::get` returns a `ParRef<'a, T>` which contains the temporarily removed value and a reference back to the cell, and will write the mutated value back to the cell upon being dropped. I would like to use this to simplify the remaining part of bevyengine#4899 that has not been adopted/merged. --- ## Changelog TODO --------- Co-authored-by: Joseph <21144246+JoJoJet@users.noreply.github.com>

Sign up for free

to join this conversation on GitHub.

Already have an account?

Sign in to comment

Labels

A-Rendering

Drawing game state to the screen

C-Performance

A change motivated by improving speed, memory usage or compile times

M-Needs-Migration-Guide

A breaking change to Bevy's public API that needs to be noted in a migration guide

Add this suggestion to a batch that can be applied as a single commit.

This suggestion is invalid because no changes were made to the code.

Suggestions cannot be applied while the pull request is closed.

Suggestions cannot be applied while viewing a subset of changes.

Only one suggestion per line can be applied in a batch.

Add this suggestion to a batch that can be applied as a single commit.

Applying suggestions on deleted lines is not supported.

You must change the existing code in this line in order to create a valid suggestion.

Outdated suggestions cannot be applied.

This suggestion has been applied or marked resolved.

Suggestions cannot be applied from pending reviews.

Suggestions cannot be applied on multi-line comments.

Suggestions cannot be applied while the pull request is queued to merge.

Suggestion cannot be applied right now. Please check back later.

Objective

Fixes #3548. A mutable reference to

PipelineCacheprevents a good chunk of the systems in queue from parallelizing with each other, even though it's primary use withSpecialized*Pipelines<T>should rarely require mutation. Likewise&mut RenderPhase<I>on each render phase also requires exclusive access, which prohibits multiple systems from enqueuing phase items at the same time.Solution

Selectively leverage internal mutability and thread-local in a way that avoids adding per-entity overheads.

RenderPhase

ThreadLocalinside RenderPhase to create thread local queues of phase items.phase_scopefor enqueuing on each thread separately without locking overhead.Vec::sort_unstable_by_keyfor a slight sorting speedup.&mut RenderPhase<T>to&RenderPhase<T>, allow for increased parallelism.std::collections::BinaryHeapand drain?PipelineCache

LockablePipelineCache, a wrapper aroundRwLock<PipelineCache>.Specialized*Pipelinesto take a&LockablePipelineCacheinstead of&mut PipelineCache. Update systems to match.lock_pipeline_cache(in Extract) andunlock_pipeline_cache(in PhaseSort) that take the existingPipelineCacheresource and wraps it into aLockablePipelineCacheand vice versa. This allows Render phase draw functions to read from the cache without any contention, at the cost of one small command buffer.Performance

I tested this on the default configuration of

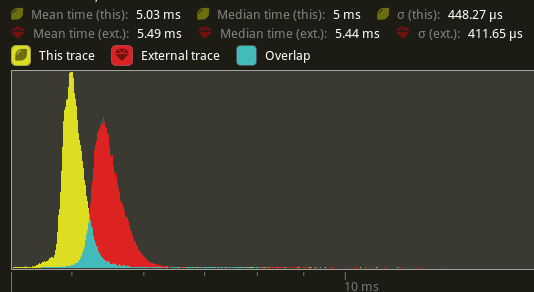

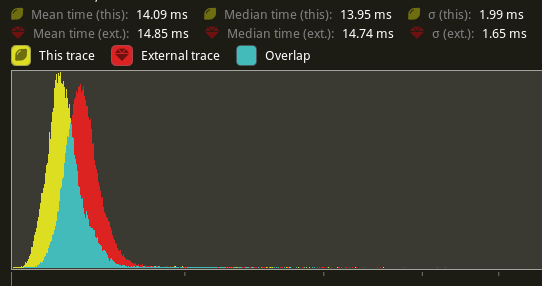

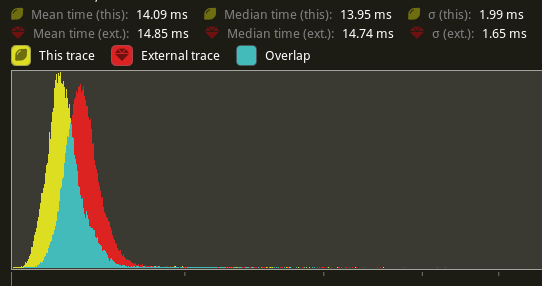

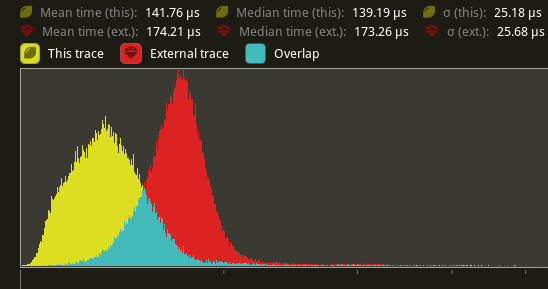

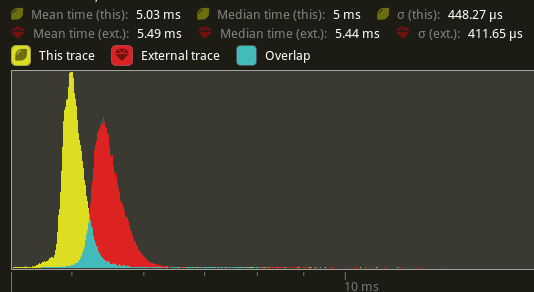

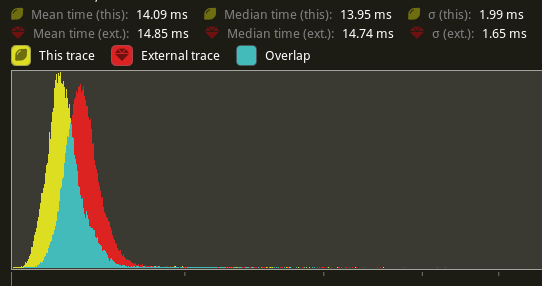

many_foxes, which has several heavy queue systems.The direct effects are as expected. The queue phase results show a 30% speedup on my machine due to the increased parallelism. (yellow is this PR, red is main)

For sort phase, there is a slight regression due to the additional copy into the sorted vec before sorting. This is slightly alleviated by shrinking the draw function type sizes. This can be further addressed by using more optimized sorting algorithms (i.e.

voracious).Overall, this sees a rough 0.3ms improvement (2 FPS, 73 -> 75) improvement on my machine.

Future Work

The changes to enable internal mutability in a thread-safe manner can be extended to also allow internal parallelism in heavy queue tasks.

Changelog

TODO

Migration Guide

TODO