
[WIP] Kernel IR Refactoring #249

Commits on Aug 7, 2020

-

[ONNX] Export tensor (pytorch#41872)

Summary: Adding tensor symbolic for opset 9 Pull Request resolved: pytorch#41872 Reviewed By: houseroad Differential Revision: D22968426 Pulled By: bzinodev fbshipit-source-id: 70e1afc7397e38039e2030e550fd72f09bac7c7c

Commit 4959981

-

Optimization of Backward Implementation for Learnable Fake Quantize Per Tensor Kernels (CPU and GPU) (pytorch#42384)

Summary: Pull Request resolved: pytorch#42384

In this diff, the original backward pass implementation is sped up by merging the 3 iterations that computed dX, dScale, and dZeroPoint separately into a single one. A native loop is used directly at the byte level (referenced by `strides`).

In the benchmark test on the operators, for an input of shape `3x3x256x256`, we have observed the following improvement in performance:

- original python operator: 1021037 microseconds
- original learnable kernel: 407576 microseconds
- optimized learnable kernel: 102584 microseconds
- original non-backprop kernel: 139806 microseconds

**Speedup from python operator**: ~10x
**Speedup from original learnable kernel**: ~4x
**Speedup from non-backprop kernel**: ~1.4x

Test Plan: To assert correctness of the new kernel, on a devvm, enter the command

`buck test //caffe2/test:quantization -- learnable_backward_per_tensor`

To benchmark the operators, on a devvm:

1. Set the kernel size to 3x3x256x256 or a reasonable input size.
2. Run `buck test //caffe2/benchmarks/operator_benchmark/pt:quantization_test`
3. The relevant outputs are as follows:

(CPU)
```
# Benchmarking PyTorch: FakeQuantizePerTensorOpBenchmark
# Mode: Eager
# Name: FakeQuantizePerTensorOpBenchmark_N3_C3_H256_W256_nbits4_cpu_op_typepy_module
# Input: N: 3, C: 3, H: 256, W: 256, device: cpu, op_type: py_module
Backward Execution Time (us) : 1021036.957

# Benchmarking PyTorch: FakeQuantizePerTensorOpBenchmark
# Mode: Eager
# Name: FakeQuantizePerTensorOpBenchmark_N3_C3_H256_W256_nbits4_cpu_op_typelearnable_kernel
# Input: N: 3, C: 3, H: 256, W: 256, device: cpu, op_type: learnable_kernel
Backward Execution Time (us) : 102583.693

# Benchmarking PyTorch: FakeQuantizePerTensorOpBenchmark
# Mode: Eager
# Name: FakeQuantizePerTensorOpBenchmark_N3_C3_H256_W256_nbits4_cpu_op_typeoriginal_kernel
# Input: N: 3, C: 3, H: 256, W: 256, device: cpu, op_type: original_kernel
Backward Execution Time (us) : 139806.086
```

(GPU)
```
# Benchmarking PyTorch: FakeQuantizePerChannelOpBenchmark
# Mode: Eager
# Name: FakeQuantizePerChannelOpBenchmark_N3_C3_H256_W256_cuda_op_typepy_module
# Input: N: 3, C: 3, H: 256, W: 256, device: cuda, op_type: py_module
Backward Execution Time (us) : 6548.350

# Benchmarking PyTorch: FakeQuantizePerChannelOpBenchmark
# Mode: Eager
# Name: FakeQuantizePerChannelOpBenchmark_N3_C3_H256_W256_cuda_op_typelearnable_kernel
# Input: N: 3, C: 3, H: 256, W: 256, device: cuda, op_type: learnable_kernel
Backward Execution Time (us) : 1340.724

# Benchmarking PyTorch: FakeQuantizePerChannelOpBenchmark
# Mode: Eager
# Name: FakeQuantizePerChannelOpBenchmark_N3_C3_H256_W256_cuda_op_typeoriginal_kernel
# Input: N: 3, C: 3, H: 256, W: 256, device: cuda, op_type: original_kernel
Backward Execution Time (us) : 656.863
```

Reviewed By: vkuzo Differential Revision: D22875998 fbshipit-source-id: cfcd62c327bb622270a783d2cbe97f00508c4a16
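The speedup figures can be rechecked from the four CPU timings quoted in the summary. The snippet below is only an arithmetic sketch; the dictionary keys and the `speedup` helper are names made up for this illustration and are not part of the benchmark suite.

```python
# Backward execution times in microseconds, copied from the commit summary.
# The key names are illustrative labels, not benchmark identifiers.
timings_us = {
    "python_operator": 1021037,
    "original_learnable_kernel": 407576,
    "optimized_learnable_kernel": 102584,
    "non_backprop_kernel": 139806,
}


def speedup(baseline: str, candidate: str = "optimized_learnable_kernel") -> float:
    """Return how many times faster `candidate` is than `baseline`."""
    return timings_us[baseline] / timings_us[candidate]


for name in ("python_operator", "original_learnable_kernel", "non_backprop_kernel"):
    print(f"{name}: {speedup(name):.2f}x")
```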

Commit 9152f2f

-

[ONNX] Add preprocess pass for onnx export (pytorch#41832)

Summary: In `_jit_pass_onnx`, symbolic functions are called for each node for conversion. However, there are nodes that cannot be converted without additional context. For example, the number of outputs from split (and whether it is static or dynamic) is unknown until the point where it is unpacked by a listUnpack node. This pass preprocesses the graph and prepares the nodes so that the symbolic function receives enough context.

* After preprocessing, `_jit_pass_onnx` should have enough context to produce valid ONNX nodes, instead of half-baked nodes that rely on fixes from later postpasses.
* `_jit_pass_onnx_peephole` should be a pass that does ONNX-specific optimizations instead of ONNX-specific fixes.
* Producing more valid ONNX nodes in `_jit_pass_onnx` enables better utilization of the ONNX shape inference pytorch#40628.

Pull Request resolved: pytorch#41832 Reviewed By: ZolotukhinM Differential Revision: D22968334 Pulled By: bzinodev fbshipit-source-id: 8226f03c5b29968e8197d242ca8e620c6e1d42a5
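The split/listUnpack case can be pictured with a toy graph. This is a minimal sketch in plain Python, not the actual pass: the `Node` class, the `preprocess` function, and the `num_outputs` attribute are hypothetical names chosen for illustration. It only shows the idea of copying context from the consumer back onto the producer before conversion.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    # Hypothetical, heavily simplified stand-in for a graph node.
    kind: str
    inputs: List[str] = field(default_factory=list)
    outputs: List[str] = field(default_factory=list)
    attrs: Dict[str, int] = field(default_factory=dict)


def preprocess(graph: List[Node]) -> None:
    """Record on each split node how many values its unpack consumer produces."""
    producers = {out: node for node in graph for out in node.outputs}
    for node in graph:
        if node.kind != "listUnpack" or not node.inputs:
            continue
        producer = producers.get(node.inputs[0])
        if producer is not None and producer.kind == "split":
            # The converter for "split" can now read the output count directly.
            producer.attrs["num_outputs"] = len(node.outputs)


graph = [
    Node("split", inputs=["x"], outputs=["parts"]),
    Node("listUnpack", inputs=["parts"], outputs=["a", "b", "c"]),
]
preprocess(graph)
print(graph[0].attrs)
```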

Commit a6c8730

-

Print TE CUDA kernel (pytorch#42692)

Summary: Pull Request resolved: pytorch#42692 Test Plan: Imported from OSS Reviewed By: mruberry Differential Revision: D22986112 Pulled By: bertmaher fbshipit-source-id: 52ec3389535c8b276858bef8c470a59aeba4946f

Commit 9525268

-

Support iterating through an Enum class (pytorch#42661)

Summary: [5/N] Implement Enum JIT support

Implement Enum class iteration
Add aten.ne for EnumType

Supported:
- Enum-typed function arguments
- Using Enum type and comparing them
- Support getting name/value attrs of enums
- Using Enum value as constant
- Support Enum-typed return values
- Support iterating through Enum class (enum value list)

TODO:
- Support serialization and deserialization

Pull Request resolved: pytorch#42661 Reviewed By: SplitInfinity Differential Revision: D22977364 Pulled By: gmagogsfm fbshipit-source-id: 1a0216f91d296119e34cc292791f9aef1095b5a8
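The constructs named in this commit (iteration over the class, `!=` comparison, `name`/`value` access) look like this in ordinary Python. The example deliberately stays in plain Python; whether the same function compiles under `torch.jit.script` depends on the PyTorch version and is not verified here. `Color` and `names_except` are made-up names.

```python
from enum import Enum


class Color(Enum):
    RED = 1
    GREEN = 2


def names_except(skip: Color) -> list:
    # Iterating the Enum class visits members in definition order;
    # `!=` is the comparison that an `ne` operator on enums would back.
    return [c.name for c in Color if c != skip]


print(names_except(Color.RED))
print([c.value for c in Color])
```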

Commit 9597af0

-

[blob reorder] Separate user embeddings and ad embeddings in large model loading script

Summary: Put user embedding before ads embedding in blobReorder, for flash verification reasons.

Test Plan:
```
buck run mode/opt-clang -c python.package_style=inplace sigrid/predictor/scripts:enable_large_model_loading -- --model_path_src="/home/$USER/models/" --model_path_dst="/home/$USER/models_modified/" --model_file_name="182560549_0.predictor"
```
https://www.internalfb.com/intern/anp/view/?id=320921 to check blobsOrder

Reviewed By: yinghai Differential Revision: D22964332 fbshipit-source-id: 78b4861476a3c889a5ff62492939f717c307a8d2

Commit cb1ac94

-

Updates alias pattern (and torch.absolute to use it) (pytorch#42586)

Summary: This PR canonicalizes our (current) pattern for adding aliases to PyTorch. That pattern is:

- Copy the original function's native_functions.yaml entry, but replace the original function's name with the alias's name.
- Implement the corresponding functions and have them redispatch to the original function.
- Add docstrings to the new functions that reference the original function.
- Update the alias_map in torch/csrc/jit/passes/normalize_ops.cpp.
- Update the op_alias_mappings in torch/testing/_internal/jit_utils.py.
- Add a test validating the alias's behavior is the same as the original function's.

An alternative pattern would be to use Python and C++ language features to alias ops directly. For example in Python:

```
torch.absolute = torch.abs
```

Let the pattern in this PR be the "native function" pattern, and the alternative pattern be the "language pattern." There are pros/cons to both approaches:

**Pros of the "Language Pattern"**
- torch.absolute is torch.abs.
- no (or very little) overhead for calling the alias.
- no native_functions.yaml redundancy or possibility of "drift" between the original function's entries and the alias's.

**Cons of the "Language Pattern"**
- requires manually adding doc entries
- requires updating Python alias and C++ alias lists
- requires hand writing alias methods on Tensor (technically this should require a C++ test to validate)
- no single list of all PyTorch ops -- have to check native_functions.yaml and one of the separate alias lists

**Pros of the "Native Function" pattern**
- alias declarations stay in native_functions.yaml
- doc entries are written as normal

**Cons of the "Native Function" pattern**
- aliases redispatch to the original functions
- torch.absolute is not torch.abs (requires writing test to validate behavior)
- possibility of drift between original's and alias's native_functions.yaml entries

While either approach is reasonable, I suggest the "native function" pattern since it preserves "native_functions.yaml" as a source of truth and minimizes the number of alias lists that need to be maintained. In the future, entries in native_functions.yaml may support an "alias" argument and replace whatever pattern we choose now.

Ops that are likely to use aliasing are:

- div (divide, true_divide)
- mul (multiply)
- bucketize (digitize)
- cat (concatenate)
- clamp (clip)
- conj (conjugate)
- rad2deg (degrees)
- trunc (fix)
- neg (negative)
- deg2rad (radians)
- round (rint)
- acos (arccos)
- acosh (arcosh)
- asin (arcsin)
- asinh (arcsinh)
- atan (arctan)
- atan2 (arctan2)
- atanh (arctanh)
- bartlett_window (bartlett)
- hamming_window (hamming)
- hann_window (hanning)
- bitwise_not (invert)
- gt (greater)
- ge (greater_equal)
- lt (less)
- le (less_equal)
- ne (not_equal)
- ger (outer)

Pull Request resolved: pytorch#42586 Reviewed By: ngimel Differential Revision: D22991086 Pulled By: mruberry fbshipit-source-id: d6ac96512d095b261ed2f304d7dddd38cf45e7b0
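The identity difference between the two patterns can be seen without PyTorch. In this sketch `abs_`, `absolute_language`, and `absolute_native` are stand-in names invented for the illustration; the real aliases are declared in native_functions.yaml and implemented in C++.

```python
def abs_(x):
    """Stand-in for the original op."""
    return x if x >= 0 else -x


# "Language pattern": the alias is literally the same object as the original.
absolute_language = abs_


# "Native function" pattern: the alias is a separate function that
# redispatches to the original, so identity no longer holds.
def absolute_native(x):
    """Alias of abs_(); see abs_ for details."""
    return abs_(x)


print(absolute_language is abs_)
print(absolute_native is abs_)
print(absolute_native(-3) == abs_(-3))
```

Because the second alias is a distinct object, its equivalence with the original has to be established by a behavioral test rather than by identity, which is the trade-off the commit message lists.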

Commit 73642d9

-

Add use_glow_aot, and include ONNX again as a backend for onnxifiGlow (pytorch#4787)

Summary: Pull Request resolved: pytorch/glow#4787 Resurrect ONNX as a backend through onnxifiGlow (was killed as part of D16215878). Then look for the `use_glow_aot` argument in the Onnxifi op. If it's there and true, then we override whatever `backend_id` is set and use the ONNX backend. Reviewed By: yinghai, rdzhabarov Differential Revision: D22762123 fbshipit-source-id: abb4c3458261f8b7eeae3016dda5359fa85672f0

Commit fb8aa00

-

Blacklist to Blocklist in onnxifi_transformer (pytorch#42590)

Summary: Fixes issues in pytorch#41704 and pytorch#41705 Pull Request resolved: pytorch#42590 Reviewed By: ailzhang Differential Revision: D22977357 Pulled By: malfet fbshipit-source-id: ab61b964cfdf8bd2b469f4ff8f6486a76bc697de

Commit 4eb02ad

-

[vulkan] Ops registration to TORCH_LIBRARY_IMPL (pytorch#42194)

Summary: Pull Request resolved: pytorch#42194 Test Plan: Imported from OSS Reviewed By: AshkanAliabadi Differential Revision: D22803036 Pulled By: IvanKobzarev fbshipit-source-id: 2f402541aecf887d78f650bf05d758a0e403bc4d

Commit 3c66a37

-

Fix cmake warning (pytorch#42707)

Summary: If arguments in set_target_properties are not separated by whitespace, cmake raises a warning:
```
CMake Warning (dev) at cmake/public/cuda.cmake:269:
  Syntax Warning in cmake code at column 54
  Argument not separated from preceding token by whitespace.
```
Fixes #{issue number} Pull Request resolved: pytorch#42707 Reviewed By: ailzhang Differential Revision: D22988055 Pulled By: malfet fbshipit-source-id: c3744f23b383d603788cd36f89a8286a46b6c00f

Commit 31ed468

-

[CPU] Added torch.bmm for complex tensors (pytorch#42383)

Summary: Pull Request resolved: pytorch#42383 Test Plan: Updated existing tests to run for complex dtypes as well. Also added tests for `torch.addmm` and `torch.baddbmm`. Test Plan: Imported from OSS Reviewed By: ezyang Differential Revision: D22960339 Pulled By: anjali411 fbshipit-source-id: 0805f21caaa40f6e671cefb65cef83a980328b7d

Commit c9346ad

-

Adds torch.linalg namespace (pytorch#42664)

Summary: This PR adds the `torch.linalg` namespace as part of our continued effort to be more compatible with NumPy. The namespace is tested by adding a single function, `torch.linalg.outer`, and testing it in a new test suite, test_linalg.py. It follows the same pattern that pytorch#41911, which added the `torch.fft` namespace, did. Future PRs will likely: - add more functions to torch.linalg - expand the testing done in test_linalg.py, including legacy functions, like torch.ger - deprecate existing linalg functions outside of `torch.linalg` in preference to the new namespace Pull Request resolved: pytorch#42664 Reviewed By: ngimel Differential Revision: D22991019 Pulled By: mruberry fbshipit-source-id: 39258d9b116a916817b3588f160b141f956e5d0b

Commit 9c8021c

-

Fix some linking rules to allow path with whitespaces (pytorch#42718)

Summary: Essentially, replace `-Wl,--whole-archive,$<TARGET_FILE:FOO>` with `-Wl,--whole-archive,\"$<TARGET_FILE:FOO>\"` as TARGET_FILE might return path containing whitespaces Fixes pytorch#42657 Pull Request resolved: pytorch#42718 Reviewed By: ezyang Differential Revision: D22993568 Pulled By: malfet fbshipit-source-id: de878b17d20e35b51dd350f20d079c8b879f70b5
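Why the added quotes matter can be shown with ordinary shell-style word splitting. This sketch uses Python's `shlex.split` as a stand-in for how a link command line gets tokenized; CMake and the actual linker driver may tokenize differently, and the path is a hypothetical example.

```python
import shlex

path = "/opt/my libs/libfoo.a"  # hypothetical archive path containing a space

unquoted = f"-Wl,--whole-archive,{path}"
quoted = f'-Wl,--whole-archive,"{path}"'

# Without quotes the space splits the flag into two separate arguments.
unquoted_args = shlex.split(unquoted)
# With quotes the whole flag, path included, stays one argument.
quoted_args = shlex.split(quoted)

print(unquoted_args)
print(quoted_args)
```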

Commit dcee893

-

Handle fused scale and bias in fake fp16 layernorm

Summary: Allow passing scale and bias to fake fp16 layernorm. Test Plan: net_runner. Now matches glow's fused layernorm. Reviewed By: hyuen Differential Revision: D22952646 fbshipit-source-id: cf9ad055b14f9d0167016a18a6b6e26449cb4de8

Commit 2971bc2

-

[NNC] Remove VarBinding and go back to Let stmts (pytorch#42634)

Summary: A while back, when commonizing the Let and LetStmt nodes, I ended up removing both and adding a separate VarBinding section to the Block. At the time I couldn't find a counterexample, but I found one today: dependencies between local Vars and Allocations may go in either direction, so we need to support interleaving of those statements. So, I've removed all the VarBinding logic and reimplemented Let statements. ZolotukhinM I think you get to say "I told you so". No new tests; existing tests should cover this. Pull Request resolved: pytorch#42634 Reviewed By: mruberry Differential Revision: D22969771 Pulled By: nickgg fbshipit-source-id: a46c5193357902d0f59bf30ab103fe123b1503f1

Commit 944ac13

-

Remove duplicate definitions of CppTypeToScalarType (pytorch#42640)

Summary: I noticed that `TensorIteratorDynamicCasting.h` defines a helper meta-function `CPPTypeToScalarType` which does exactly the same thing as the `c10::CppTypeToScalarType` meta-function I added in pytorchgh-40927. No need for two identical definitions. Pull Request resolved: pytorch#42640 Reviewed By: malfet Differential Revision: D22969708 Pulled By: ezyang fbshipit-source-id: 8303c7f4a75ae248f393a4811ae9d2bcacab44ff

Commit 586399c

-

[vulkan] Fix warnings: static_cast, remove unused (pytorch#42195)

Summary: Pull Request resolved: pytorch#42195 Test Plan: Imported from OSS Reviewed By: AshkanAliabadi Differential Revision: D22803035 Pulled By: IvanKobzarev fbshipit-source-id: d7bf256437eccb5c421a7fd0aa8ec23a8fec0470

Commit 04c62d4

-

Minor typo fix (pytorch#42731)

Summary: Just fixed a typo in test/test_sparse.py Pull Request resolved: pytorch#42731 Reviewed By: ezyang Differential Revision: D22999930 Pulled By: mrshenli fbshipit-source-id: 1b5b21d7cb274bd172fb541b2761f727ba06302c

Commit 9f88bcb

-

[JIT] Exclude staticmethods from TS class compilation (pytorch#42611)

Summary: Pull Request resolved: pytorch#42611

**Summary** This commit modifies the Python frontend to ignore static functions on TorchScript classes when compiling them. They are currently included along with methods, which causes the first argument of the static function to be unconditionally inferred to be of the type of the class it belongs to (regardless of how it is annotated or whether it is annotated at all). This can lead to compilation errors depending on how that argument is used in the body of the function. Static functions are instead imported and scripted as if they were standalone functions.

**Test Plan** This commit augments the unit test for static methods in `test_class_types.py` to test that static functions can call each other and the class constructor.

**Fixes** This commit fixes pytorch#39308.

Test Plan: Imported from OSS Reviewed By: ZolotukhinM Differential Revision: D22958163 Pulled By: SplitInfinity fbshipit-source-id: 45c3c372792299e6e5288e1dbb727291e977a2af
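One way a Python frontend can tell static functions apart from regular methods is to inspect the raw class dictionary, where static functions are still wrapped in `staticmethod` objects. The sketch below shows that idea only; `split_members` and `Counter` are hypothetical names, and the actual TorchScript frontend logic is not reproduced here.

```python
import inspect


class Counter:
    def __init__(self, start: int):
        self.value = start

    def bump(self) -> int:
        self.value += 1
        return self.value

    @staticmethod
    def double(x: int) -> int:
        return 2 * x


def split_members(cls):
    """Return (method names, staticmethod names) defined directly on cls."""
    methods, statics = [], []
    for name, member in cls.__dict__.items():
        if isinstance(member, staticmethod):
            # No implicit first argument: treat like a standalone function.
            statics.append(name)
        elif inspect.isfunction(member):
            # Regular method: first argument is the instance.
            methods.append(name)
    return methods, statics


methods, statics = split_members(Counter)
print(methods, statics)
```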

Commit eba3502

-

C++ API TransformerEncoderLayer (pytorch#42633)

Summary: Pull Request resolved: pytorch#42633 Test Plan: Imported from OSS Reviewed By: ezyang Differential Revision: D22994332 Pulled By: glaringlee fbshipit-source-id: 873abdf887d135fb05bde560d695e2e8c992c946

Commit 98de150

-

Speed up HistogramObserver by vectorizing critical path (pytorch#41041)

Summary: 22x speedup over the code this replaces. Tested on ResNet18 on a devvm using CPU only, using default parameters for HistogramObserver (i.e. 2048 bins).

Pull Request resolved: pytorch#41041

Test Plan: To run the test against the reference (old) implementation, you can use `python test/test_quantization.py TestRecordHistogramObserver.test_histogram_observer_against_reference`.

To run the benchmark, while in the folder `benchmarks/operator_benchmark`, you can use `python -m benchmark_all_quantized_test --operators HistogramObserverCalculateQparams`.

Benchmark results before speedup:
```
# ----------------------------------------
# PyTorch/Caffe2 Operator Micro-benchmarks
# ----------------------------------------
# Tag : short

# Benchmarking PyTorch: HistogramObserverCalculateQparams
# Mode: Eager
# Name: HistogramObserverCalculateQparams_C3_M512_N512_dtypetorch.quint8_cpu_qschemetorch.per_tensor_affine
# Input: C: 3, M: 512, N: 512, dtype: torch.quint8, device: cpu, qscheme: torch.per_tensor_affine
Forward Execution Time (us) : 185818.566

# Benchmarking PyTorch: HistogramObserverCalculateQparams
# Mode: Eager
# Name: HistogramObserverCalculateQparams_C3_M512_N512_dtypetorch.quint8_cpu_qschemetorch.per_tensor_symmetric
# Input: C: 3, M: 512, N: 512, dtype: torch.quint8, device: cpu, qscheme: torch.per_tensor_symmetric
Forward Execution Time (us) : 165325.916
```

Benchmark results after speedup:
```
# ----------------------------------------
# PyTorch/Caffe2 Operator Micro-benchmarks
# ----------------------------------------
# Tag : short

# Benchmarking PyTorch: HistogramObserverCalculateQparams
# Mode: Eager
# Name: HistogramObserverCalculateQparams_C3_M512_N512_dtypetorch.quint8_cpu_qschemetorch.per_tensor_affine
# Input: C: 3, M: 512, N: 512, dtype: torch.quint8, device: cpu, qscheme: torch.per_tensor_affine
Forward Execution Time (us) : 12242.241

# Benchmarking PyTorch: HistogramObserverCalculateQparams
# Mode: Eager
# Name: HistogramObserverCalculateQparams_C3_M512_N512_dtypetorch.quint8_cpu_qschemetorch.per_tensor_symmetric
# Input: C: 3, M: 512, N: 512, dtype: torch.quint8, device: cpu, qscheme: torch.per_tensor_symmetric
Forward Execution Time (us) : 12655.354
```

Reviewed By: raghuramank100 Differential Revision: D22400755 Pulled By: durumu fbshipit-source-id: 639ac796a554710a33c8a930c1feae95a1148718

Commit 7332c21

-

BAND, BOR and BXOR for NCCL (all_)reduce should throw runtime errors (pytorch#42669)

Summary: cc rohan-varma Fixes pytorch#41362 pytorch#39708

# Description
NCCL doesn't support `BAND, BOR, BXOR`. Since the [current mapping](https://github.com/pytorch/pytorch/blob/0642d17efc73041e5209e3be265d9a39892e8908/torch/lib/c10d/ProcessGroupNCCL.cpp#L39) doesn't contain any of the mentioned bitwise operators, a default value of `ncclSum` is used instead. This PR should provide the expected behaviour where a runtime exception is thrown.

# Notes
- The way I'm throwing exceptions is derived from [ProcessGroupGloo.cpp](https://github.com/pytorch/pytorch/blob/0642d17efc73041e5209e3be265d9a39892e8908/torch/lib/c10d/ProcessGroupGloo.cpp#L101)

Pull Request resolved: pytorch#42669 Reviewed By: ezyang Differential Revision: D22996295 Pulled By: rohan-varma fbshipit-source-id: 83a9fedf11050d2890f9f05ebcedf53be0fc3516
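The failure mode described here, an unmapped operator silently falling back to a sum, can be modeled in a few lines. This is a Python sketch of the idea only, not the C++ code in ProcessGroupNCCL.cpp; the `ReduceOp` enum, the `SUPPORTED` table, both lookup functions, and the error message text are assumptions made for illustration.

```python
from enum import Enum


class ReduceOp(Enum):
    SUM = "sum"
    PRODUCT = "product"
    MIN = "min"
    MAX = "max"
    BAND = "band"
    BOR = "bor"
    BXOR = "bxor"


# Hypothetical stand-in for the backend's table of supported reductions.
SUPPORTED = {
    ReduceOp.SUM: "ncclSum",
    ReduceOp.PRODUCT: "ncclProd",
    ReduceOp.MIN: "ncclMin",
    ReduceOp.MAX: "ncclMax",
}


def lookup_silent(op: ReduceOp) -> str:
    # Behavior described as the bug: an unmapped op quietly becomes a sum.
    return SUPPORTED.get(op, "ncclSum")


def lookup_strict(op: ReduceOp) -> str:
    # Intended behavior: fail loudly on an unsupported op.
    try:
        return SUPPORTED[op]
    except KeyError:
        raise RuntimeError(f"Cannot use ReduceOp.{op.name} with NCCL") from None


print(lookup_silent(ReduceOp.BAND))
print(lookup_strict(ReduceOp.MAX))
```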

Commit 6ebc050

-

[caffe2] add type annotations for caffe2.distributed.python

Summary: Add Python type annotations for the `caffe2.distributed.python` module. Test Plan: Will check sandcastle results. Reviewed By: jeffdunn Differential Revision: D22994012 fbshipit-source-id: 30565cc41dd05b5fbc639ae994dfe2ddd9e56cb1

Commit 02f58bd

-

Automated submodule update: FBGEMM (pytorch#42713)

Summary: This is an automated pull request to update the first-party submodule for [pytorch/FBGEMM](https://github.com/pytorch/FBGEMM). New submodule commit: pytorch/FBGEMM@a989b99 Pull Request resolved: pytorch#42713 Test Plan: Ensure that CI jobs succeed on GitHub before landing. Reviewed By: amylittleyang Differential Revision: D22990108 Pulled By: jspark1105 fbshipit-source-id: 3252a0f5ad9546221ef2fe908ce6b896252e1887

Commit 4eb66b8

-

fix celu in quantized benchmark (pytorch#42756)

Summary: Pull Request resolved: pytorch#42756 Similar to ELU, CELU was also broken in the quantized benchmark, fixing. Test Plan: ``` cd benchmarks/operator_benchmark python -m pt.qactivation_test ``` Imported from OSS Reviewed By: jerryzh168 Differential Revision: D23010863 fbshipit-source-id: 203e63f9cff760af6809f6f345b0d222dc1e9e1b

Commit faca3c4

-

Restrict conversion to SmallVector (pytorch#42694)

Summary: Pull Request resolved: pytorch#42694 The old implementation allowed calling SmallVector constructor and operator= for any type without restrictions, but then failed with a compiler error when the type wasn't a collection. Instead, we should only use it if Container follows a container concept and just not match the constructor otherwise. This fixes an issue kimishpatel was running into. ghstack-source-id: 109370513 Test Plan: unit tests Reviewed By: kimishpatel, ezyang Differential Revision: D22983020 fbshipit-source-id: c31264f5c393762d822f3d64dd2a8e3279d8da44

Commit 95f4f67

-

Skips some complex tests on ROCm (pytorch#42759)

Summary: Fixes ROCm build on OSS master. Pull Request resolved: pytorch#42759 Reviewed By: ngimel Differential Revision: D23011560 Pulled By: mruberry fbshipit-source-id: 3339ecbd5a0ca47aede6f7c3f84739af1ac820d5

Commit 55b1706

-

Exposing Percentile Caffe2 Operator in PyTorch

Summary: As titled. Test Plan: ``` buck test caffe2/caffe2/python/operator_test:torch_integration_test -- test_percentile ``` Reviewed By: yf225 Differential Revision: D22999896 fbshipit-source-id: 2e3686cb893dff1518d533cb3d78c92eb2a6efa5

Commit 2b04712

Commits on Aug 8, 2020

-

Add fake quantize operator that works in backward pass (pytorch#40532)

Summary: This diff adds FakeQuantizeWithBackward. This works the same way as the regular FakeQuantize module, allowing QAT to occur in the forward pass, except it has an additional quantize_backward parameter. When quantize_backward is enabled, the gradients are fake quantized as well (dynamically, using hard-coded values). This allows the user to see whether there would be a significant loss of accuracy if the gradients were quantized in their model. Pull Request resolved: pytorch#40532 Test Plan: The relevant test for this can be run using `python test/test_quantization.py TestQATBackward.test_forward_and_backward` Reviewed By: supriyar Differential Revision: D22217029 Pulled By: durumu fbshipit-source-id: 7055a2cdafcf022f1ea11c3442721ae146d2b3f2
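For readers unfamiliar with the operation, fake quantization rounds a value onto a quantized grid and immediately maps it back to floating point. The sketch below applies the standard affine formula to a small list of gradient values; the scale, zero point, and quantization range are assumed purely for illustration and are not the hard-coded values the commit refers to, and `fake_quantize` is not the module's API.

```python
def fake_quantize(values, scale, zero_point, quant_min, quant_max):
    """Quantize then dequantize each value, staying in floating point."""
    result = []
    for v in values:
        q = round(v / scale) + zero_point          # map onto the integer grid
        q = max(quant_min, min(quant_max, q))      # clamp to the representable range
        result.append((q - zero_point) * scale)    # map back to floating point
    return result


# Assumed parameters, chosen only to make the rounding and clamping visible.
grads = [0.26, -0.04, 5.0]
quantized_grads = fake_quantize(grads, scale=0.1, zero_point=0, quant_min=-8, quant_max=7)
print(quantized_grads)
```

Comparing `grads` with `quantized_grads` gives a feel for the precision that would be lost if gradients were quantized, which is the question the `quantize_backward` option is meant to answer.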

Commit 48e978b

-

Fix lite trainer unit test submodule registration (pytorch#42714)

Summary: Pull Request resolved: pytorch#42714 Change two unit tests for the lite trainer to register two instances/objects of the same submodule type instead of the same submodule object twice. Test Plan: Imported from OSS Reviewed By: iseeyuan Differential Revision: D22990736 Pulled By: ann-ss fbshipit-source-id: 2bf56b5cc438b5a5fc3db90d3f30c5c431d3ae77

Commit 13bc542

-

[fbgemm] use new more general depthwise 3d conv interface (pytorch#42697)

Summary: Pull Request resolved: pytorch#42697 Pull Request resolved: pytorch/FBGEMM#401 As title Test Plan: CI Reviewed By: dskhudia Differential Revision: D22972233 fbshipit-source-id: a2c8e989dee84b2c0587faccb4f8e3bcb05c797c

Commit 3fa0581

-

[caffe2] Fix the timeout (stuck) issues of dedup SparseAdagrad C2 kernel

Summary: Backout D22800959 (pytorch@f30ac66). This one is causing the timeout (machine stuck) issues for dedup kernels. Reverting it makes the unit test pass. Still need to investigate why this is the culprit... Original commit changeset: 641d52a51070 Test Plan: ``` buck test mode/dev-nosan //caffe2/caffe2/fb/net_transforms/tests:fuse_sparse_ops_test -- 'test_fuse_sparse_adagrad_with_sparse_lengths_sum_gradient \(caffe2\.caffe2\.fb\.net_transforms\.tests\.fuse_sparse_ops_test\.TestFuseSparseOps\)' --print-passing-details ``` Reviewed By: jspark1105 Differential Revision: D23008389 fbshipit-source-id: 4f1b9a41c78eaa5541d57b9d8aa12401e1d495f2

Commit d4a4c62

-

[NCCL] DDP communication hook: getFuture() without cudaStreamAddCallb…

…ack (pytorch#42335) Summary: Pull Request resolved: pytorch#42335 **Main goal:** For DDP communication hook, provide an API called "get_future" to retrieve a future associated with the completion of c10d.ProcessGroupNCCL.work. Enable NCCL support for this API in this diff. We add an API `c10::intrusive_ptr<c10::ivalue::Future> getFuture()` to `c10d::ProcessGroup::Work`. This API will only be supported by NCCL in the first version, the default implementation will throw UnsupportedOperation. We no longer consider a design that involves cudaStreamAddCallback which potentially was causing performance regression in [pytorch#41596](pytorch#41596). ghstack-source-id: 109461507 Test Plan: ```(pytorch) [sinannasir@devgpu017.ash6 ~/local/pytorch] python test/distributed/test_c10d.py Couldn't download test skip set, leaving all tests enabled... ..............................s.....................................................s................................ ---------------------------------------------------------------------- Ran 117 tests in 298.042s OK (skipped=2) ``` ### Facebook Internal: 2\. HPC PT trainer run to validate no regression. 
Check the QPS number: **Master:** QPS after 1000 iters: around ~34100 ``` hpc_dist_trainer --fb-data=none --mtml-fusion-level=1 --target-model=ifr_video --max-ind-range=1000000 --embedding-partition=row-wise mast --domain $USER"testvideo_master" --trainers 16 --trainer-version 1c53912 ``` ``` [0] I0806 142048.682 metrics_publishers.py:50] Finished iter 999, Local window NE: [0.963963 0.950479 0.953704], lifetime NE: [0.963963 0.950479 0.953704], loss: [0.243456 0.235225 0.248375], QPS: 34199 ``` [detailed logs](https://www.internalfb.com/intern/tupperware/details/task/?handle=priv3_global%2Fmast_hpc%2Fhpc.sinannasirtestvideo_mastwarm.trainer.trainer%2F0&ta_tab=logs) **getFuture/new design:** QPS after 1000 iters: around ~34030 ``` hpc_dist_trainer --fb-data=none --mtml-fusion-level=1 --target-model=ifr_video --max-ind-range=1000000 --embedding-partition=row-wise mast --domain $USER"testvideo_getFutureCyclicFix" --trainers 16 --trainer-version 8553aee ``` ``` [0] I0806 160149.197 metrics_publishers.py:50] Finished iter 999, Local window NE: [0.963959 0.950477 0.953704], lifetime NE: [0.963959 0.950477 0.953704], loss: [0.243456 0.235225 0.248375], QPS: 34018 ``` [detailed logs](https://www.internalfb.com/intern/tupperware/details/task/?handle=priv3_global%2Fmast_hpc%2Fhpc.sinannasirtestvideo_getFutureCyclicFix.trainer.trainer%2F0&ta_tab=logs) **getFuture/new design Run 2:** QPS after 1000 iters: around ~34200 ``` hpc_dist_trainer --fb-data=none --mtml-fusion-level=1 --target-model=ifr_video --max-ind-range=1000000 --embedding-partition=row-wise mast --domain $USER"test2video_getFutureCyclicFix" --trainers 16 --trainer-version 8553aee ``` ``` [0] I0806 160444.650 metrics_publishers.py:50] Finished iter 999, Local window NE: [0.963963 0.950482 0.953706], lifetime NE: [0.963963 0.950482 0.953706], loss: [0.243456 0.235225 0.248375], QPS: 34201 ``` [detailed 
logs](https://www.internalfb.com/intern/tupperware/details/task/?handle=priv3_global%2Fmast_hpc%2Fhpc.sinannasirtest2video_getFutureCyclicFix.trainer.trainer%2F0&ta_tab=logs) **getFuture/old design (Regression):** QPS after 1000 iters: around ~31150 ``` hpc_dist_trainer --fb-data=none --mtml-fusion-level=1 --target-model=ifr_video --max-ind-range=1000000 --embedding-partition=row-wise mast --domain $USER”testvideo_OLDgetFutureD22583690 (pytorch@d904ea5)" --trainers 16 --trainer-version 1cb5cbb ``` ``` priv3_global/mast_hpc/hpc.sinannasirtestvideo_OLDgetFutureD22583690 (https://github.com/pytorch/pytorch/commit/d904ea597277673eefbb3661430d3f905e8760d5).trainer.trainer/0 [0] I0805 101320.407 metrics_publishers.py:50] Finished iter 999, Local window NE: [0.963964 0.950482 0.953703], lifetime NE: [0.963964 0.950482 0.953703], loss: [0.243456 0.235225 0.248375], QPS: 31159 ``` 3\. `flow-cli` tests; roberta_base; world_size=4: **Master:** f210039922 ``` total: 32 GPUs -- 32 GPUs: p25: 0.908 35/s p50: 1.002 31/s p75: 1.035 30/s p90: 1.051 30/s p95: 1.063 30/s forward: 32 GPUs -- 32 GPUs: p25: 0.071 452/s p50: 0.071 449/s p75: 0.072 446/s p90: 0.072 445/s p95: 0.072 444/s backward: 32 GPUs -- 32 GPUs: p25: 0.821 38/s p50: 0.915 34/s p75: 0.948 33/s p90: 0.964 33/s p95: 0.976 32/s optimizer: 32 GPUs -- 32 GPUs: p25: 0.016 2037/s p50: 0.016 2035/s p75: 0.016 2027/s p90: 0.016 2019/s p95: 0.016 2017/s ``` **getFuture new design:** f210285797 ``` total: 32 GPUs -- 32 GPUs: p25: 0.952 33/s p50: 1.031 31/s p75: 1.046 30/s p90: 1.055 30/s p95: 1.070 29/s forward: 32 GPUs -- 32 GPUs: p25: 0.071 449/s p50: 0.072 446/s p75: 0.072 445/s p90: 0.072 444/s p95: 0.072 443/s backward: 32 GPUs -- 32 GPUs: p25: 0.865 37/s p50: 0.943 33/s p75: 0.958 33/s p90: 0.968 33/s p95: 0.982 32/s optimizer: 32 GPUs -- 32 GPUs: p25: 0.016 2037/s p50: 0.016 2033/s p75: 0.016 2022/s p90: 0.016 2018/s p95: 0.016 2017/s ``` Reviewed By: ezyang Differential Revision: D22833298 fbshipit-source-id: 
1bb268d3b00335b42ee235c112f93ebe2f25b208

Commit 0a804be

Adding Peter's Swish Op ULP analysis. (pytorch#42573)

Summary: Pull Request resolved: pytorch#42573 * Generate the ULP png files for different ranges. Test Plan: test_op_ulp_error.py Reviewed By: hyuen Differential Revision: D22938572 fbshipit-source-id: 6374bef6d44c38e1141030d44029dee99112cd18

Commit e95fbaa

Set proper return type (pytorch#42454)

Summary: This function was always expecting to return a `size_t` value Pull Request resolved: pytorch#42454 Reviewed By: ezyang Differential Revision: D22993168 Pulled By: ailzhang fbshipit-source-id: 044df8ce17983f04681bda8c30cd742920ef7b1e

Commit 6755e49

[vulkan] inplace add_, relu_ (pytorch#41380)

Summary: Pull Request resolved: pytorch#41380 Test Plan: Imported from OSS Reviewed By: AshkanAliabadi Differential Revision: D22754939 Pulled By: IvanKobzarev fbshipit-source-id: 19b0bbfc5e1f149f9996b5043b77675421ecb2ed

Commit 5dd230d

update DispatchKey::toString() (pytorch#42619)

Summary: Pull Request resolved: pytorch#42619 Added missing entries to `DispatchKey::toString()` and reordered to match declaration order in `DispatchKey.h` Test Plan: Imported from OSS Reviewed By: ezyang Differential Revision: D22963407 Pulled By: bhosmer fbshipit-source-id: 34a012135599f497c308ba90ea6e8117e85c74ac

Commit c889de7

integrate int8 swish with net transformer

Summary: Add a fuse path for deq->swish->quant. Update the swish fake op interface to take arguments accordingly. Test Plan: net_runner passes; unit tests need to be updated. Reviewed By: venkatacrc Differential Revision: D22962064 fbshipit-source-id: cef79768db3c8af926fca58193d459d671321f80

Commit 18ca999

Revert D22217029: Add fake quantize operator that works in backward pass

Test Plan: revert-hammer Differential Revision: D22217029 (pytorch@48e978b) Original commit changeset: 7055a2cdafcf fbshipit-source-id: f57a27be412c6fbfd5a5b07a26f758ac36be3b67

Commit b7a9bc0

[PyFI] Update hypothesis and switch from tp2 (pytorch#41645)

Summary: Pull Request resolved: pytorch#41645 Pull Request resolved: facebookresearch/pytext#1405 Test Plan: buck test Reviewed By: thatch Differential Revision: D20323893 fbshipit-source-id: 54665d589568c4198e96a27f0ed8e5b41df7b86b

Commit 5cd0f5e

fix asan failure for module freezing in conv bn folding (pytorch#42739)

Summary: Pull Request resolved: pytorch#42739 This is a test case which fails with ASAN at the module freezing step. Test Plan: ``` USE_ASAN=1 USE_CUDA=0 python setup.py develop LD_PRELOAD=/usr/lib64/libasan.so.4 python test/test_mobile_optimizer.py TestOptimizer.test_optimize_for_mobile_asan // output tail: https://gist.github.com/vkuzo/7a0018b9e10ffe64dab0ac7381479f23 ``` Imported from OSS Reviewed By: kimishpatel Differential Revision: D23005962 fbshipit-source-id: b7d4492e989af7c2e22197c16150812bd2dda7cc

Commit d8801f5

optimize_for_mobile: bring packed params to root module (pytorch#42740)

Summary: Pull Request resolved: pytorch#42740 Adds a pass to hoist conv packed params to root module. The benefit is that if there is nothing else in the conv module, subsequent passes will delete it, which will reduce module size. For context, freezing does not handle this because conv packed params is a custom object. Test Plan: ``` PYTORCH_JIT_LOG_LEVEL=">hoist_conv_packed_params.cpp" python test/test_mobile_optimizer.py TestOptimizer.test_hoist_conv_packed_params ``` Imported from OSS Reviewed By: kimishpatel Differential Revision: D23005961 fbshipit-source-id: 31ab1f5c42a627cb74629566483cdc91f3770a94

Commit 79b8328

Include/ExcludeDispatchKeySetGuard API (pytorch#42658)

Summary: Pull Request resolved: pytorch#42658 Test Plan: Imported from OSS Reviewed By: ezyang Differential Revision: D22971426 Pulled By: bhosmer fbshipit-source-id: 4d63e0cb31745e7b662685176ae0126ff04cdece

Commit b6810c1

Commits on Aug 9, 2020

-

Adds 'clip' alias for clamp (pytorch#42770)

Summary: Per title. Also updates our guidance for adding aliases to clarify interned_string and method_test requirements. The alias is tested by extending test_clamp to also test clip. Pull Request resolved: pytorch#42770 Reviewed By: ngimel Differential Revision: D23020655 Pulled By: mruberry fbshipit-source-id: f1d8e751de9ac5f21a4f95d241b193730f07b5dc

Commit 87970b7

Commits on Aug 10, 2020

-

Fix op benchmark (pytorch#42757)

Summary: A benchmark relies on abs_ having a functional variant. Pull Request resolved: pytorch#42757 Reviewed By: ngimel Differential Revision: D23011037 Pulled By: mruberry fbshipit-source-id: c04866015fa259e4c544e5cf0c33ca1e11091d92

Commit 162972e

[ONNX] Fix scalar type cast for comparison ops (pytorch#37787)

Summary: Always promote type casts for comparison operators, regardless if the input is tensor or scalar. Unlike arithmetic operators, where scalars are implicitly cast to the same type as tensors. Pull Request resolved: pytorch#37787 Reviewed By: hl475 Differential Revision: D21440585 Pulled By: houseroad fbshipit-source-id: fb5c78933760f1d1388b921e14d73a2cb982b92f

Commit 55ac240

Fix TensorPipe submodule (pytorch#42789)

Summary: Not sure what happened, but possibly I landed a PR on PyTorch which updated the TensorPipe submodule to a commit hash of a *PR* of TensorPipe. Now that the latter PR has been merged though that same commit has a different hash. The commit referenced by PyTorch, therefore, has become orphaned. This is causing some issues. Hence here I am updating the commit, which however does not change a single line of code. Pull Request resolved: pytorch#42789 Reviewed By: houseroad Differential Revision: D23023238 Pulled By: lw fbshipit-source-id: ca2dcf6b7e07ab64fb37e280a3dd7478479f87fd

Commit 05f0053

generalize circleci docker build.sh and add centos support (pytorch#4…

…1255) Summary: Add centos Dockerfile and support to circleci docker builds, and allow generic image names to be parsed by build.sh, so both hardcoded images and custom images can be built. Currently only adds a ROCm centos Dockerfile. CC ezyang xw285cornell sunway513 Pull Request resolved: pytorch#41255 Reviewed By: mrshenli Differential Revision: D23003218 Pulled By: malfet fbshipit-source-id: 562c53533e7fb9637dc2e81edb06b2242afff477

Commit bc77966

Add python unittest target to `caffe2/test/TARGETS` (pytorch#42766)

Summary: Pull Request resolved: pytorch#42766 **Summary** Some python tests are missing in `caffe2/test/TARGETS`; add them to make it more comprehensive. According to [run_test.py](https://github.com/pytorch/pytorch/blob/master/test/run_test.py#L125), some tests are slower. Slow tests are added as independent targets and the others are put together into one `others` target. The reason is that we want to reduce overhead, especially for code coverage collection. Tests in one target can be run as a bundle, and then coverage can be collected together. Typically the coverage collection procedure is time-expensive, so this helps us save time. Test Plan: Run all the new test targets locally in dev server and record the time they cost. **Statistics** ``` # jit target real 33m7.694s user 653m1.181s sys 58m14.160s --------- Compare to Initial Jit Target runtime: ---------------- real 32m13.057s user 613m52.843s sys 54m58.678s ``` ``` # others target real 9m2.920s user 164m21.927s sys 12m54.840s ``` ``` # serialization target real 4m21.090s user 23m33.501s sys 1m53.308s ``` ``` # tensorexpr real 11m28.187s user 33m36.420s sys 1m15.925s ``` ``` # type target real 3m36.197s user 51m47.912s sys 4m14.149s ``` Reviewed By: malfet Differential Revision: D22979219 fbshipit-source-id: 12a30839bb76a64871359bc024e4bff670c5ca8b

Commit e5adf45

Automated submodule update: FBGEMM (pytorch#42781)

Summary: Pull Request resolved: pytorch#42781 This is an automated pull request to update the first-party submodule for [pytorch/FBGEMM](https://github.com/pytorch/FBGEMM). New submodule commit: pytorch/FBGEMM@fbd813e Pull Request resolved: pytorch#42771 Test Plan: Ensure that CI jobs succeed on GitHub before landing. Reviewed By: dskhudia Differential Revision: D23015890 Pulled By: jspark1105 fbshipit-source-id: f0f62969f8744df96a4e7f5aff2ce95baabb2f76

Commit 77305c1

include missing settings import

Summary: from hypothesis import given, settings Test Plan: test_op_nnpi_fp16.py Differential Revision: D23031038 fbshipit-source-id: 751547e6a6e992d8816d4cc2c5a699ba19a97796

Commit e7b5a23

[ONNX] Add support for scalar src in torch.scatter ONNX export. (pyto…

…rch#42765) Summary: `torch.scatter` supports two overloads – one where the `src` input tensor is the same size as the `index` tensor, and a second where `src` is a scalar. Currently, the ONNX exporter only supports the first overload. This PR adds export support for the second overload of `torch.scatter`. Pull Request resolved: pytorch#42765 Reviewed By: hl475 Differential Revision: D23025189 Pulled By: houseroad fbshipit-source-id: 5c2a3f3ce3b2d69661a227df8a8e0ed7c1858dbf
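To make the two overloads concrete, here is a minimal pure-Python sketch of 1-D scatter semantics. It is only an illustration of the behaviour described in the summary, not the exporter's symbolic code; the helper name `scatter_1d` is hypothetical.

```python
from numbers import Number


def scatter_1d(base, index, src):
    """Illustrative 1-D scatter: write src values into a copy of base at index positions."""
    out = list(base)
    if isinstance(src, Number):
        # Scalar overload: every indexed position receives the same value.
        for i in index:
            out[i] = src
    else:
        # Tensor overload: position index[k] receives src[k].
        if len(src) < len(index):
            raise ValueError("src must provide one value per index entry")
        for k, i in enumerate(index):
            out[i] = src[k]
    return out


print(scatter_1d([0, 0, 0, 0], [1, 3], [5, 6]))  # tensor-like src
print(scatter_1d([0, 0, 0, 0], [1, 3], 7))       # scalar src
```

One way to read the scalar overload is as the tensor overload with `src` broadcast to the shape of `index`; whether the exporter takes that route is not stated in the summary.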

Commit d83cc92

.circleci: Only do comparisons when available (pytorch#42816)

Summary: Pull Request resolved: pytorch#42816 Comparisons were being done on branches where the '<< pipeline.git.base_revision >>' didn't exist before so let's just move it so that comparison / code branch is only run when that variable is available Example: https://app.circleci.com/pipelines/github/pytorch/pytorch/198611/workflows/8a316eef-d864-4bb0-863f-1454696b1e8a/jobs/6610393 Signed-off-by: Eli Uriegas <eliuriegas@fb.com> Test Plan: Imported from OSS Reviewed By: ezyang Differential Revision: D23032900 Pulled By: seemethere fbshipit-source-id: 98a49c78b174d6fde9c6b5bd3d86a6058d0658bd

Commit d7aaa33

DDP communication hook: skip dividing grads by world_size if hook reg…

…istered. (pytorch#42400) Summary: Pull Request resolved: pytorch#42400 mcarilli spotted that in the original DDP communication hook design described in [39272](pytorch#39272), the hooks receive grads that are already predivided by world size. It makes sense to skip the divide completely if hook registered. The hook is meant for the user to completely override DDP communication. For example, if the user would like to implement something like GossipGrad, always dividing by the world_size would not be a good idea. We also included a warning in the register_comm_hook API as: > GradBucket bucket's tensors will not be predivided by world_size. User is responsible to divide by the world_size in case of operations like allreduce. ghstack-source-id: 109548696 **Update:** We discovered and fixed a bug with the sparse tensors case. See new unit test called `test_ddp_comm_hook_sparse_gradients` and changes in `reducer.cpp`. Test Plan: python test/distributed/test_c10d.py and perf benchmark tests. Reviewed By: ezyang Differential Revision: D22883905 fbshipit-source-id: 3277323fe9bd7eb6e638b7ef0535cab1fc72f89e
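The change in responsibility can be sketched with a small pure-Python simulation. Everything here is hypothetical (the function names, the list-of-lists gradient layout, the hook signature); the real hook receives a `GradBucket` and is registered through `register_comm_hook`, which this sketch does not model.

```python
def allreduce_sum(per_rank_grads):
    """Simulated allreduce: element-wise sum across ranks."""
    return [sum(vals) for vals in zip(*per_rank_grads)]


def reduce_bucket(per_rank_grads, hook=None):
    world_size = len(per_rank_grads)
    if hook is None:
        # Default path: gradients are pre-divided by world_size, then summed.
        divided = [[g / world_size for g in grads] for grads in per_rank_grads]
        return allreduce_sum(divided)
    # Hook path: gradients are handed over undivided; the hook owns the math.
    return hook(per_rank_grads, world_size)


def averaging_hook(per_rank_grads, world_size):
    # The user divides explicitly to recover the usual averaged gradient.
    return [g / world_size for g in allreduce_sum(per_rank_grads)]


def sum_only_hook(per_rank_grads, world_size):
    # A hook that deliberately skips the division.
    return allreduce_sum(per_rank_grads)


grads = [[2.0, 4.0], [6.0, 8.0]]  # two simulated ranks
print(reduce_bucket(grads))
print(reduce_bucket(grads, averaging_hook))
print(reduce_bucket(grads, sum_only_hook))
```

A hook that forgets the division silently produces gradients scaled by the world size, which is why the warning quoted in the summary matters.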

Commit 752f433

change pt_defs.bzl to python file (pytorch#42725)

Summary: Pull Request resolved: pytorch#42725 This diff changes pt_defs.bzl to pt_defs.py, so that it can be included as python source file. The reason is if we remove base ops, pt_defs.bzl becomes too big (8k lines) and we cannot pass its content to gen_oplist (python library). The easy solution is to change it to a python source file so that it can be used in gen_oplist. Test Plan: sandcastle Reviewed By: ljk53, iseeyuan Differential Revision: D22968258 fbshipit-source-id: d720fe2e684d9a2bf5bd6115b6e6f9b812473f12

Commit e06b4be

Fix `torch.nn.functional.grid_sample` crashes if `grid` has NaNs (pytorch#42703)

Summary: In `clip_coordinates`, replace the `minimum(maximum(in))` composition with `clamp_max(clamp_min(in))`. Swap the order of `clamp_min` operands to clamp NaNs in grid to 0. Fixes pytorch#42616 Pull Request resolved: pytorch#42703 Reviewed By: ezyang Differential Revision: D22987447 Pulled By: malfet fbshipit-source-id: a8a2d6de8043d6b77c8707326c5412d0250efae6
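Why operand order matters for NaN can be shown with a scalar analogy in plain Python. This is a sketch under the assumption that the clamps are built from ordinary comparisons, which are always false when NaN is involved; the function bodies are illustrative and are not the kernel code from the PR.

```python
import math


def clamp_min(x, lo):
    # Keep x only if it is provably >= lo. NaN fails the comparison, so it maps to lo.
    return x if x >= lo else lo


def clamp_max(x, hi):
    # Keep x only if it is provably <= hi.
    return x if x <= hi else hi


def clip_coordinates(x, size):
    """Clamp a coordinate into [0, size - 1]; NaN ends up at 0."""
    return clamp_max(clamp_min(x, 0.0), float(size - 1))


def naive_clip(x, size):
    # Same bounds, but each test asks "is x out of range?". NaN never is, so it leaks through.
    lowered = 0.0 if x < 0.0 else x
    return float(size - 1) if lowered > size - 1 else lowered


nan = float("nan")
print(clip_coordinates(nan, 10), math.isnan(naive_clip(nan, 10)))
```

A NaN coordinate that survives clipping could later be used to compute an index, which is consistent with the crash reported in pytorch#42616.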

Commit 3cf2551

[vulkan] cat op (concatenate) (pytorch#41434)

Summary: Pull Request resolved: pytorch#41434 Test Plan: Imported from OSS Reviewed By: AshkanAliabadi Differential Revision: D22754941 Pulled By: IvanKobzarev fbshipit-source-id: cd03577e1c2f639b2592d4b7393da4657422e23c

Commit 8718524

Commit 4d9c950

test_cpp_rpc: Build test_e2e_process_group.cpp only if USE_GLOO is tr…

…ue (pytorch#42836) Summary: Fixes pytorch#42776 Pull Request resolved: pytorch#42836 Reviewed By: seemethere Differential Revision: D23041274 Pulled By: malfet fbshipit-source-id: 8605332701271bea6d9b3a52023f548c11d8916f

Commit 64a7939

Commits on Aug 11, 2020

-

BatchedTensor fallback: extended to support ops with multiple Tensor …

…returns (pytorch#42628) Summary: Pull Request resolved: pytorch#42628 This PR extends the BatchedTensor fallback to support operators with multiple Tensor returns. If an operator has multiple returns, we stack shards of each return to create the full outputs. Test Plan: - `pytest test/test_vmap.py -v`. Added a new test for an operator with multiple returns (torch.var_mean). Reviewed By: izdeby Differential Revision: D22957095 Pulled By: zou3519 fbshipit-source-id: 5c0ec3bf51283cc4493b432bcfed1acf5509e662
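The "stack shards of each return" step can be sketched in plain Python. This is an assumption-laden illustration: nested lists stand in for tensors, `var_mean` is a stdlib stand-in for `torch.var_mean`, and `fallback_multi_return` is a hypothetical name, not the fallback's actual implementation.

```python
import statistics


def var_mean(row):
    """Stand-in for an operator with two returns: (sample variance, mean)."""
    return statistics.variance(row), statistics.fmean(row)


def fallback_multi_return(op, batch):
    # Run the operator once per slice along the batch dimension.
    per_example = [op(example) for example in batch]
    # Regroup the shards: one stacked output per return value.
    return tuple(list(shards) for shards in zip(*per_example))


batch = [[1.0, 2.0, 3.0], [2.0, 4.0, 6.0]]
variances, means = fallback_multi_return(var_mean, batch)
print(variances, means)
```

The caller still sees one value per declared return, each carrying the batch dimension, so an operator with N returns yields N stacked outputs.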

Commit 8f67c7a

Rename some BatchedTensorImpl APIs (pytorch#42700)

Summary: Pull Request resolved: pytorch#42700 I was about to use `isBatched` somewhere not in the files used to implement vmap but then realized how silly that sounds due to ambiguity. This PR renames some of the BatchedTensor APIs to make a bit more sense to onlookers.

- isBatched(Tensor) -> isBatchedTensor(Tensor)
- unsafeGetBatched(Tensor) -> unsafeGetBatchedImpl(Tensor)
- maybeGetBatched(Tensor) -> maybeGetBatchedImpl(Tensor)

Test Plan: - build Pytorch, run tests. Reviewed By: ezyang Differential Revision: D22985868 Pulled By: zou3519 fbshipit-source-id: b8ed9925aabffe98085bcf5c81d22cd1da026f46

Commit a255965

Skip test_c10d.ProcessGroupNCCLTest under TSAN (pytorch#42750)

Summary: Pull Request resolved: pytorch#42750 All of these tests fail under TSAN since we fork in a multithreaded environment. ghstack-source-id: 109566396 Test Plan: CI Reviewed By: pritamdamania87 Differential Revision: D23007746 fbshipit-source-id: 65571607522b790280363882d61bfac8a52007a1

Commit a414bd6

[c10d] Template computeLengthsAndOffsets() (pytorch#42706)

Summary: Pull Request resolved: pytorch#42706 Different backends accept different types for the lengths, e.g. MPI_Alltoallv, ncclSend/Recv(), gloo::alltoallv(). So make computeLengthsAndOffsets() a template. Test Plan: Sandcastle CI HPC: ./trainer_cmd.sh -p 16 -n 8 -d nccl Reviewed By: osalpekar Differential Revision: D22961459 fbshipit-source-id: 45ec271f8271b96f2dba76cd9dce3e678bcfb625
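As a rough picture of what such a helper computes, here is a Python sketch. The arithmetic (equal splits when no split sizes are given, cumulative offsets) is an assumption about the helper's behaviour rather than something the commit message states, and the `length_type` parameter is a hypothetical stand-in for the C++ template parameter.

```python
def compute_lengths_and_offsets(split_sizes, dim0, numel, group_size, length_type=int):
    """Return per-rank (lengths, offsets), counted in elements.

    split_sizes: rows sent to each rank, or [] for an even split (assumed convention).
    dim0, numel: first-dimension size and total element count of the tensor.
    length_type: conversion applied to each entry, mimicking the template type.
    """
    row_size = numel // dim0 if dim0 else 1
    even_rows = dim0 // group_size
    lengths, offsets, offset = [], [], 0
    for rank in range(group_size):
        rows = split_sizes[rank] if split_sizes else even_rows
        length = row_size * rows
        lengths.append(length_type(length))
        offsets.append(length_type(offset))
        offset += length
    return lengths, offsets


print(compute_lengths_and_offsets([], 8, 32, 4))
print(compute_lengths_and_offsets([1, 3, 0, 4], 8, 32, 4))
```

Making the element type a parameter lets one routine fill buffers for backends whose APIs expect different integer types, which is the motivation given in the summary.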

Commit c9e8256

adaptive_avg_pool[23]d: check output_size.size() (pytorch#42831)

Summary: Return an error if output_size is unexpected Fixes pytorch#42578 Pull Request resolved: pytorch#42831 Reviewed By: ezyang Differential Revision: D23039295 Pulled By: malfet fbshipit-source-id: d14a5e6dccdf785756635caee2c87151c9634872

Commit c14a7f6

Fix "non-negative integer" error messages (pytorch#42734)

Summary: Fixes pytorch#42662 Use "positive integer" error message for consistency with: https://github.com/pytorch/pytorch/blob/17f76f9a7896eccdfdba5fd22fd3a24002b0d917/torch/optim/lr_scheduler.py#L958-L959 https://github.com/pytorch/pytorch/blob/ad7133d3c11a35a7aedf9786ccf8d7a52939b753/torch/utils/data/sampler.py#L102-L104 Pull Request resolved: pytorch#42734 Reviewed By: zdevito Differential Revision: D23039575 Pulled By: smessmer fbshipit-source-id: 1be1e0caa868891540ecdbe6f471a6cd51c40ede

Commit 1038878

add net transforms for fusion (pytorch#42763)

Summary: Pull Request resolved: pytorch#42763 add the fp16 fusions as net transforms:

- layernorm fused with mul+add
- swish int8

Test Plan: added unit test, ran flows Reviewed By: yinghai Differential Revision: D23002043 fbshipit-source-id: f0b13d51d68c240b05d2a237a7fb8273e996328b

Commit a4b763b

Fix ROCm CI by increasing test timeout (pytorch#42827)

Summary: ROCm is failing to run this test in the allotted time. See, for example, https://app.circleci.com/pipelines/github/pytorch/pytorch/198759/workflows/f6066acf-b289-46c5-aad0-6f4f663ce820/jobs/6618625. cc jeffdaily Pull Request resolved: pytorch#42827 Reviewed By: pbelevich Differential Revision: D23042220 Pulled By: mruberry fbshipit-source-id: 52b426b0733b7b52ac3b311466d5000334864a82

Commit dedcc30

[quant] Sorting the list of dispatches (pytorch#42758)

Summary: Pull Request resolved: pytorch#42758 Test Plan: Imported from OSS Reviewed By: vkuzo Differential Revision: D23011764 Pulled By: z-a-f fbshipit-source-id: df87acdcf77ae8961a109eaba20521bc4f27ad0e

Commit 59b10f7

Revert D23002043: add net transforms for fusion

Test Plan: revert-hammer Differential Revision: D23002043 (pytorch@a4b763b) Original commit changeset: f0b13d51d68c fbshipit-source-id: d43602743af35db825e951358992e979283a26f6

Commit ddcf3de

Don't materialize output grads (pytorch#41821)

Summary: Added a new option in AutogradContext to tell autograd to not materialize output grad tensors, that is, don't expand undefined/None tensors into tensors full of zeros before passing them as input to the backward function. This PR is the second part that closes pytorch#41359. The first PR is pytorch#41490. Pull Request resolved: pytorch#41821 Reviewed By: albanD Differential Revision: D22693163 Pulled By: heitorschueroff fbshipit-source-id: a8d060405a17ab1280a8506a06a2bbd85cb86461
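The effect of the option can be sketched in plain Python, with lists standing in for tensors and `None` for an undefined grad. The names `call_backward` and `materialize_grads` are hypothetical and only mirror the behaviour described in the summary; they are not the AutogradContext API.

```python
def call_backward(backward_fn, output_grads, sizes, materialize_grads=True):
    """Invoke a backward function, optionally expanding None grads to zeros first."""
    if materialize_grads:
        output_grads = [
            [0.0] * n if g is None else g
            for g, n in zip(output_grads, sizes)
        ]
    return backward_fn(*output_grads)


def backward(grad_a, grad_b):
    # A backward that skips work for undefined (None) grads.
    total = None
    for g in (grad_a, grad_b):
        if g is not None:
            total = g if total is None else [x + y for x, y in zip(total, g)]
    return total


print(call_backward(backward, [[1.0, 2.0], None], [2, 2]))
print(call_backward(backward, [None, None], [2, 2]))
print(call_backward(backward, [None, None], [2, 2], materialize_grads=False))
```

With materialization off, the backward function must handle `None` itself, but it can avoid allocating and adding zero-filled buffers.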

Commit ffc3da3

vmap: temporarily disable support for random functions (pytorch#42617)

Summary: Pull Request resolved: pytorch#42617 While we figure out the random plan, I want to initially disable support for random operations. This is because there is an ambiguity in what randomness means. For example,

```
tensor = torch.zeros(B0, 1)
vmap(lambda t: t.normal_())(tensor)
```

in the above example, should tensor[0] and tensor[1] be equal (i.e., use the same random seed), or should they be different?

The mechanism for disabling random support is as follows:

- We add a new dispatch key called VmapMode
- Whenever we're inside vmap, we enable VmapMode for all tensors. This is done via at::VmapMode::increment_nesting and at::VmapMode::decrement_nesting.
- DispatchKey::VmapMode's fallback kernel is the fallthrough kernel.
- We register kernels that raise errors for all random functions on DispatchKey::VmapMode. This way, whenever someone calls a random function on any tensor (not just BatchedTensors) inside of a vmap block, an error gets thrown.

Test Plan: - pytest test/test_vmap.py -v -k "Operators" Reviewed By: ezyang Differential Revision: D22954840 Pulled By: zou3519 fbshipit-source-id: cb8d71062d4087e10cbf408f74b1a9dff81a226d
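The nesting-counter mechanism described above can be approximated in a few lines of Python. This is a loose analogy only: a module-level counter plays the role of the VmapMode nesting level, a guard inside the random function plays the role of the error-raising kernel, and all names are hypothetical.

```python
import random
from contextlib import contextmanager

_vmap_nesting = 0  # hypothetical stand-in for the VmapMode nesting level


@contextmanager
def vmap_mode():
    """Increment the nesting level on entry and decrement it on exit."""
    global _vmap_nesting
    _vmap_nesting += 1
    try:
        yield
    finally:
        _vmap_nesting -= 1


def normal_(values):
    # "Random kernel": refuses to run while any vmap level is active.
    if _vmap_nesting > 0:
        raise RuntimeError("random operations are not supported inside vmap")
    return [random.gauss(0.0, 1.0) for _ in values]


def add_one(values):
    # Non-random op: unaffected by the mode, like a fallthrough kernel.
    return [v + 1 for v in values]


with vmap_mode():
    print(add_one([1, 2]))
    try:
        normal_([0.0, 0.0])
    except RuntimeError as err:
        print("error:", err)
```

Because the check keys off the mode rather than the argument type, the error fires for any input inside the block, matching the "not just BatchedTensors" remark in the summary.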

Commit e8f4b04

Added torch::cuda::manual_seed(_all) to mirror torch.cuda.manual_seed…

…(_all) (pytorch#42638) Summary: Pull Request resolved: pytorch#42638 Test Plan: Imported from OSS Reviewed By: glaringlee Differential Revision: D23030317 Pulled By: heitorschueroff fbshipit-source-id: b0d7bdf0bc592a913ae5b1ffc14c3a5067478ce3

Commit d396d13

Raise error if `at::native::embedding` is given 0-D weight (pytorch#42550)

Summary: Previously, `at::native::embedding` implicitly assumed that the `weight` argument would be 1-D or greater. Given a 0-D tensor, it would segfault. This change makes it throw a RuntimeError instead. Fixes pytorch#41780 Pull Request resolved: pytorch#42550 Reviewed By: smessmer Differential Revision: D23040744 Pulled By: albanD fbshipit-source-id: d3d315850a5ee2d2b6fcc0bdb30db2b76ffffb01

Commit 42b4a71

Optimization with Backward Implementation of Learnable Fake Quantize …

…Per Channel Kernel (CPU and GPU) (pytorch#42810) Summary: Pull Request resolved: pytorch#42810 In this diff, the original backward pass implementation is sped up by merging the 3 iterations computing dX, dScale, and dZeroPoint separately. In this case, a native loop is directly used on a byte-wise level (referenced by `strides`). In addition, vectorization is used such that scale and zero point are expanded to share the same shape and the element-wise corresponding values to X along the channel axis. In the benchmark test on the operators, for an input of shape `3x3x256x256`, we have observed the following improvement in performance: **Speedup from python operator**: ~10x **Speedup from original learnable kernel**: ~5.4x **Speedup from non-backprop kernel**: ~1.8x Test Plan: To assert correctness of the new kernel, on a devvm, enter the command `buck test //caffe2/test:quantization -- learnable_backward_per_channel` To benchmark the operators, on a devvm, enter the command

1. Set the kernel size to 3x3x256x256 or a reasonable input size.
2. Run `buck test //caffe2/benchmarks/operator_benchmark/pt:quantization_test`
3. The relevant outputs for CPU are as follows:

```
# Benchmarking PyTorch: FakeQuantizePerChannelOpBenchmark
# Mode: Eager
# Name: FakeQuantizePerChannelOpBenchmark_N3_C3_H256_W256_cpu_op_typepy_module
# Input: N: 3, C: 3, H: 256, W: 256, device: cpu, op_type: py_module
Backward Execution Time (us) : 989024.686

# Benchmarking PyTorch: FakeQuantizePerChannelOpBenchmark
# Mode: Eager
# Name: FakeQuantizePerChannelOpBenchmark_N3_C3_H256_W256_cpu_op_typelearnable_kernel
# Input: N: 3, C: 3, H: 256, W: 256, device: cpu, op_type: learnable_kernel
Backward Execution Time (us) : 95654.079

# Benchmarking PyTorch: FakeQuantizePerChannelOpBenchmark
# Mode: Eager
# Name: FakeQuantizePerChannelOpBenchmark_N3_C3_H256_W256_cpu_op_typeoriginal_kernel
# Input: N: 3, C: 3, H: 256, W: 256, device: cpu, op_type: original_kernel
Backward Execution Time (us) : 176948.970
```

4. The relevant outputs for GPU are as follows:

**Pre-optimization**:

```
# Benchmarking PyTorch: FakeQuantizePerChannelOpBenchmark
# Mode: Eager
# Name: FakeQuantizePerChannelOpBenchmark_N3_C3_H256_W256_cuda_op_typepy_module
# Input: N: 3, C: 3, H: 256, W: 256, device: cpu, op_type: py_module
Backward Execution Time (us) : 6795.173

# Benchmarking PyTorch: FakeQuantizePerChannelOpBenchmark
# Mode: Eager
# Name: FakeQuantizePerChannelOpBenchmark_N3_C3_H256_W256_cuda_op_typelearnable_kernel
# Input: N: 3, C: 3, H: 256, W: 256, device: cpu, op_type: learnable_kernel
Backward Execution Time (us) : 4321.351

# Benchmarking PyTorch: FakeQuantizePerChannelOpBenchmark
# Mode: Eager
# Name: FakeQuantizePerChannelOpBenchmark_N3_C3_H256_W256_cuda_op_typeoriginal_kernel
# Input: N: 3, C: 3, H: 256, W: 256, device: cpu, op_type: original_kernel
Backward Execution Time (us) : 1052.066
```

**Post-optimization**:

```
# Benchmarking PyTorch: FakeQuantizePerChannelOpBenchmark
# Mode: Eager
# Name: FakeQuantizePerChannelOpBenchmark_N3_C3_H256_W256_cuda_op_typepy_module
# Input: N: 3, C: 3, H: 256, W: 256, device: cpu, op_type: py_module
Backward Execution Time (us) : 6737.106

# Benchmarking PyTorch: FakeQuantizePerChannelOpBenchmark
# Mode: Eager
# Name: FakeQuantizePerChannelOpBenchmark_N3_C3_H256_W256_cuda_op_typelearnable_kernel
# Input: N: 3, C: 3, H: 256, W: 256, device: cpu, op_type: learnable_kernel
Backward Execution Time (us) : 2112.484

# Benchmarking PyTorch: FakeQuantizePerChannelOpBenchmark
# Mode: Eager
# Name: FakeQuantizePerChannelOpBenchmark_N3_C3_H256_W256_cuda_op_typeoriginal_kernel
# Input: N: 3, C: 3, H: 256, W: 256, device: cpu, op_type: original_kernel
Backward Execution Time (us) : 1078.79
```

Reviewed By: vkuzo Differential Revision: D22946853 fbshipit-source-id: 1a01284641480282b3f57907cc7908d68c68decd

Commit d28639a

[JIT] Fix typing.Final for python 3.8 (pytorch#39568)

Summary: fixes pytorch#39566 `typing.Final` is a thing since python 3.8, and on python 3.8, `typing_extensions.Final` is an alias of `typing.Final`, therefore, `ann.__module__ == 'typing_extensions'` will become False when using 3.8 and `typing_extensions` is installed. ~~I don't know why the test is skipped, seems like due to historical reason when python 2.7 was still a thing?~~ Edit: I know now, the `Final` for `<3.7` don't have `__origin__` Pull Request resolved: pytorch#39568 Reviewed By: smessmer Differential Revision: D23043388 Pulled By: malfet fbshipit-source-id: cc87a9e4e38090d784e9cea630e1c543897a1697
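One way to sidestep the module-name comparison altogether is to test identity against `typing.Final`. The sketch below assumes Python 3.8 or newer (where `typing.Final` and `typing.get_origin` exist); the helper name `is_final_annotation` is hypothetical and this is not necessarily the check the PR landed.

```python
import typing


def is_final_annotation(ann):
    """Return True for bare `Final` or a parametrized `Final[...]` annotation."""
    if ann is typing.Final:
        return True
    # get_origin unwraps Final[int] to typing.Final and returns None for plain classes.
    return typing.get_origin(ann) is typing.Final


print(is_final_annotation(typing.Final[int]))  # parametrized Final
print(is_final_annotation(int))                # ordinary type
```

Because the summary says `typing_extensions.Final` is an alias of `typing.Final` on 3.8, an identity check of this kind would treat annotations from either import path the same way.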

Commit 9162352

Fix a typo in EmbeddingBag.cu (pytorch#42742)

Summary: Pull Request resolved: pytorch#42742 Reviewed By: smessmer Differential Revision: D23011029 Pulled By: mrshenli fbshipit-source-id: 615f8b876ef1881660af71b6e145fb4ca97d2ebb

Commit 1041bde

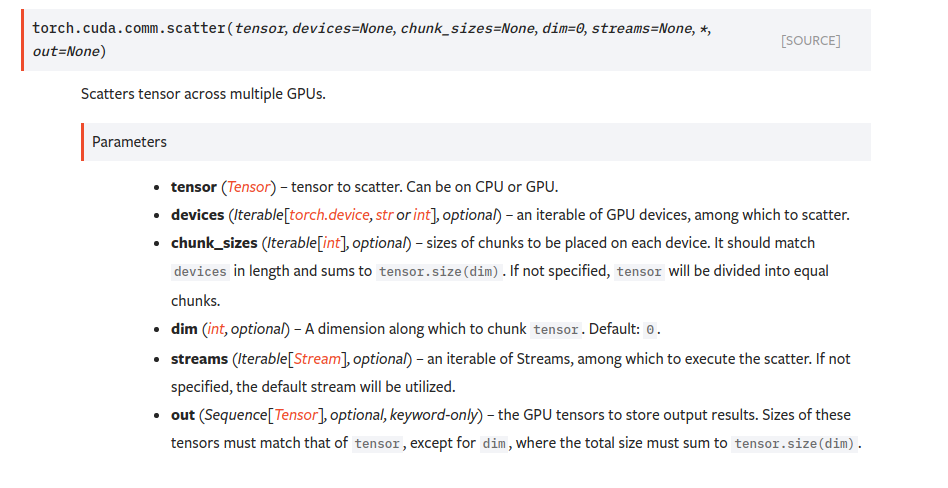

Update the documentation for scatter to include streams parameter. (p…

…ytorch#42814) Summary: Fixes pytorch#41827  Current: https://pytorch.org/docs/stable/cuda.html#communication-collectives Pull Request resolved: pytorch#42814 Reviewed By: smessmer Differential Revision: D23033544 Pulled By: mrshenli fbshipit-source-id: 88747fbb06e88ef9630c042ea9af07dafd422296

Commit 42114a0

Modify clang code coverage to CMakeList.txt (for MacOS) (pytorch#42837)

Summary: Pull Request resolved: pytorch#42837

Originally we used

```
list(APPEND CMAKE_C_FLAGS -fprofile-instr-generate -fcoverage-mapping)
list(APPEND CMAKE_CXX_FLAGS -fprofile-instr-generate -fcoverage-mapping)
```

But compiling the project on Mac with coverage on fails with the error: `clang: error: no input files /bin/sh: -fprofile-instr-generate: command not found /bin/sh: -fcoverage-mapping: command not found`

The reason is that `list(APPEND CMAKE_CXX_FLAGS ...)` adds an additional `;` to the variable. That is, if we do `list(APPEND foo a)` and then `list(APPEND foo b)`, `foo` will be `a;b`, with the additional `;`. Since `CMAKE_CXX_FLAGS` is already defined earlier in the `CMakeList.txt`, we can only use `set(...)` here.

After changing it to

```
set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -fprofile-instr-generate -fcoverage-mapping")
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -fprofile-instr-generate -fcoverage-mapping")
```

the build was tested successfully on a local Mac machine.

Test Plan: Test locally on mac machine Reviewed By: malfet Differential Revision: D23043057 fbshipit-source-id: ff6f4891b35b7f005861ee2f8e4c550c997fe961
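The difference between the two approaches can be modeled in a few lines of Python. This is only a toy model of how CMake joins list elements with `;`, not CMake itself; the starting value `-O2` and both helper names are assumptions made for illustration.

```python
def cmake_list_append(value: str, *items: str) -> str:
    """Toy model of list(APPEND VAR items...): elements are joined with ';'."""
    parts = [value] if value else []
    return ";".join(parts + list(items))


def cmake_set_with_space(value: str, extra: str) -> str:
    """Toy model of set(VAR "${VAR} extra"): plain string concatenation."""
    return f"{value} {extra}".strip()


existing_flags = "-O2"  # assumed pre-existing flags, for illustration only

appended = cmake_list_append(
    existing_flags, "-fprofile-instr-generate", "-fcoverage-mapping"
)
concatenated = cmake_set_with_space(
    existing_flags, "-fprofile-instr-generate -fcoverage-mapping"
)

print(appended)      # elements separated by ';'
print(concatenated)  # elements separated by spaces
```

When the `;`-separated form ends up on a shell command line, the shell likely treats each `;` as a command separator, which is consistent with the `command not found` messages quoted above.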

Commit 7524699

Introduce experimental FX library (pytorch#42741)

Summary: Pull Request resolved: pytorch#42741 Test Plan: Imported from OSS Reviewed By: dzhulgakov Differential Revision: D23006383 Pulled By: jamesr66a fbshipit-source-id: 6cb6d921981fcae47a07df581ffcf900fb8a7fe8

Commit 575e749

Commit 8257c65

Commit 566bd26

Fix orgqr input size conditions (pytorch#42825)

Summary:

* Adds support for `n > k`
* Throws an error if `m >= n >= k` is not true
* Updates existing error messages to match argument names shown in public docs
* Adds error tests

Fixes pytorch#41776 Pull Request resolved: pytorch#42825 Reviewed By: smessmer Differential Revision: D23038916 Pulled By: albanD fbshipit-source-id: e9bec7b11557505e10e0568599d0a6cb7e12ab46
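The size condition in the summary can be sketched as a standalone check. This assumes `m` and `n` are the row and column counts of the input matrix and `k` is the number of elementary reflectors, following LAPACK's `orgqr` convention; the function name and error text are hypothetical, not the messages added by the PR.

```python
def check_orgqr_sizes(m: int, n: int, k: int) -> None:
    """Raise ValueError unless m >= n >= k holds (hypothetical helper)."""
    if not (m >= n >= k):
        raise ValueError(
            f"orgqr requires m >= n >= k, but got m={m}, n={n}, k={k}"
        )


check_orgqr_sizes(5, 3, 2)  # n > k is accepted
check_orgqr_sizes(5, 3, 3)  # n == k is accepted

try:
    check_orgqr_sizes(3, 5, 2)  # m < n violates the condition
except ValueError as err:
    print(err)
```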

Commit 2c8cbd7

align qconv benchmark to conv benchmark (pytorch#42761)

Summary: Pull Request resolved: pytorch#42761 Makes the qconv benchmark follow the conv benchmark exactly. This way it will be easy to compare q vs fp with the same settings.

Test Plan:

```
cd benchmarks/operator_benchmark
python -m pt.qconv_test
python -m pt.conv_test
```

Imported from OSS Reviewed By: jerryzh168 Differential Revision: D23012533 fbshipit-source-id: af30ee585389395569a6322f5210828432963077

Commit a7bdf57

align qlinear benchmark to linear benchmark (pytorch#42767)

Summary: Pull Request resolved: pytorch#42767 Same as previous PR, forcing the qlinear benchmark to follow the fp one

Test Plan:

```
cd benchmarks/operator_benchmark
python -m pt.linear_test
python -m pt.qlinear_test
```

Imported from OSS Reviewed By: jerryzh168 Differential Revision: D23013937 fbshipit-source-id: fffaa7cfbfb63cea41883fd4d70cd3f08120aaf8

Commit 57b056b

[NNC] Registerizer for GPU [1/x] (pytorch#42606)

Summary: Adds a new optimization pass, the Registerizer, which looks for common Stores and Loads to a single item in a buffer and replaces them with a local temporary scalar which is cheaper to write. For example it can replace:

```
A[0] = 0;
for (int x = 0; x < 10; x++) {
  A[0] = (A[0]) + x;
}
```

with:

```
int A_ = 0;
for (int x = 0; x < 10; x++) {
  A_ = x + A_;
}
A[0] = A_;
```

This is particularly useful on GPUs when parallelizing, since after replacing loops with metavars we have a lot of accesses like this. Early tests of simple reductions on a V100 indicate this can speed them up by ~5x. This diff got a bit unwieldy with the integration code so that will come in a follow up.

Pull Request resolved: pytorch#42606 Reviewed By: bertmaher Differential Revision: D22970969 Pulled By: nickgg fbshipit-source-id: 831fd213f486968624b9a4899a331ea9aeb40180
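The before/after pair from the summary can be mirrored in plain Python to show that the rewrite preserves the final buffer contents. This is only an illustration of the transformation's effect, with hypothetical function names; it is not the NNC pass itself.

```python
def reduce_in_buffer(n: int = 10) -> list:
    """Original form: every iteration loads from and stores to A[0]."""
    A = [0]
    A[0] = 0
    for x in range(n):
        A[0] = A[0] + x
    return A


def reduce_in_scalar(n: int = 10) -> list:
    """Registerized form: accumulate in a local scalar, store once at the end."""
    A = [0]
    A_ = 0
    for x in range(n):
        A_ = x + A_
    A[0] = A_
    return A


# Both forms leave the same value in the buffer.
print(reduce_in_buffer(), reduce_in_scalar())
```

The second form touches the buffer once instead of on every iteration, which is where the savings described in the summary would come from.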

Commit aabdef5

Adds list of operator-related information for testing (pytorch#41662)

Summary: This PR adds:

- an "OpInfo" class in common_method_invocations that can contain useful information about an operator, like what dtypes it supports
- a more specialized "UnaryUfuncInfo" class designed to help test the unary ufuncs
- the `ops` decorator, which can generate test variants from lists of OpInfos
- test_unary_ufuncs.py, a new test suite stub that shows how the `ops` decorator and operator information can be used to improve the thoroughness of our testing

The single test in test_unary_ufuncs.py simply ensures that the dtypes associated with a unary ufunc operator in its OpInfo entry are correct. Writing a test like this previously, however, would have required manually constructing test-specific operator information and writing a custom test generator. The `ops` decorator and a common place to put operator information make writing tests like this easier and allow what would have been test-specific information to be reused.

The `ops` decorator extends and composes with the existing device generic test framework, allowing its decorators to be reused. For example, the `onlyOnCPUAndCUDA` decorator works with the new `ops` decorator. This should keep the tests readable and consistent.

Future PRs will likely:

- continue refactoring the too large test_torch.py into more verticals (unary ufuncs, binary ufuncs, reductions...)
- add more operator information to common_method_invocations.py
- refactor tests for unary ufuncs into test_unary_ufunc

Examples of possible future extensions are [here](pytorch@616747e), where an example unary ufunc test is added, and [here](pytorch@d0b624f), where example autograd tests are added. Both tests leverage the operator info in common_method_invocations to simplify testing.

Pull Request resolved: pytorch#41662 Reviewed By: ngimel Differential Revision: D23048416 Pulled By: mruberry fbshipit-source-id: ecce279ac8767f742150d45854404921a6855f2c
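The idea of generating test variants from a shared list of operator records can be sketched without PyTorch. Everything below is a simplified stand-in: the `OpInfo` fields, the `ops` decorator body, and the dtype strings are assumptions for illustration and do not reproduce the classes added in the PR.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict, Sequence, Tuple


@dataclass(frozen=True)
class OpInfo:
    """Hypothetical, simplified operator record."""
    name: str
    op: Callable[[float], float]
    dtypes: Tuple[str, ...]


def ops(op_list: Sequence[OpInfo]):
    """Attach one variant per OpInfo to the decorated check function."""
    def decorator(check_fn):
        variants: Dict[str, Callable[[], object]] = {}
        for info in op_list:
            # Bind `info` as a default argument so each variant keeps its own op.
            def variant(info=info):
                return check_fn(info)
            variants[f"{check_fn.__name__}_{info.name}"] = variant
        check_fn.variants = variants
        return check_fn
    return decorator


# Illustrative entries; the dtype lists are made up.
unary_ufuncs = [
    OpInfo("sin", math.sin, ("float32", "float64")),
    OpInfo("abs", abs, ("int64", "float32")),
]


@ops(unary_ufuncs)
def check_supported_dtypes(info: OpInfo) -> str:
    assert len(info.dtypes) > 0, f"{info.name} lists no dtypes"
    return info.name


results = {name: run() for name, run in check_supported_dtypes.variants.items()}
print(sorted(results))
```

Keeping the operator records in one list means a new check only needs the decorator, rather than its own hand-built table of operators.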

Commit 4bafca1

Correct the type of some floating point literals in calc_digamma (pyt…

…orch#42846) Summary: They are double, but they are supposed to be of accscalar_t or a faster type. Pull Request resolved: pytorch#42846 Reviewed By: zou3519 Differential Revision: D23049405 Pulled By: mruberry fbshipit-source-id: 29bb5d5419dc7556b02768f0ff96dfc28676f257

Commit 6471b5d

Initial quantile operator implementation (pytorch#42755)

Summary: Pull Request resolved: pytorch#42755 Attempting to land quantile again after being landed here pytorch#39417 and reverted here pytorch#41616. Test Plan: Imported from OSS Reviewed By: mruberry Differential Revision: D23030338 Pulled By: heitorschueroff fbshipit-source-id: 124a86eea3aee1fdaa0aad718b04863935be26c7

Commit c660d2a

Ensure IDEEP transpose operator works correctly

Summary: I found that, without exporting to the public format, the IDEEP transpose operator in the middle of a convolution net produces incorrect results (probably by reading out-of-bounds memory). Exporting to the public format might not be the most efficient solution, but at least it ensures correct behavior. Test Plan: Running ConvFusion followed by transpose should give identical results on CPU and IDEEP Reviewed By: bwasti Differential Revision: D22970872 fbshipit-source-id: 1ddca16233e3d7d35a367c93e72d70632d28e1ef

Commit 9c8f5cb

Add nn.functional.adaptive_avg_pool size empty tests (pytorch#42857)

Summary: Pull Request resolved: pytorch#42857 Reviewed By: seemethere Differential Revision: D23053677 Pulled By: malfet fbshipit-source-id: b3d0d517cddc96796461332150e74ae94aac8090

Commit 4afbf39

Export BatchBucketOneHot Caffe2 Operator to PyTorch

Summary: As titled. Test Plan: ``` buck test caffe2/caffe2/python/operator_test:torch_integration_test -- test_batch_bucket_one_hot_op ``` Reviewed By: yf225 Differential Revision: D23005981 fbshipit-source-id: 1daa8d3e7d6ad75e97e94964db95ccfb58541672

Commit 71dbfc7

Fix incorrect aten::sorted.str return type (pytorch#42853)

Summary: aten::sorted.str output type was incorrectly set to bool[] due to a copy-paste error. This PR fixes it. Fixes https://fburl.com/0rv8amz7 Pull Request resolved: pytorch#42853 Reviewed By: yf225 Differential Revision: D23054907 Pulled By: gmagogsfm fbshipit-source-id: a62968c90f0301d4a5546e6262cb9315401a9729

Commit 43613b4

Summary: Pull Request resolved: pytorch#42866 Test Plan: Imported from OSS Reviewed By: zdevito Differential Revision: D23056813 Pulled By: jamesr66a fbshipit-source-id: d30cdffe6f0465223354dec00f15658eb0b08363

Commit 0ff0fea

remove deadline enforcement for hypothesis (pytorch#42871)

Summary: Pull Request resolved: pytorch#42871 The old version of hypothesis.testing did not enforce deadlines. After the library was updated, the default became deadline=200ms, but even with a deadline of 1s or more the tests are flaky. This changes the deadline to non-enforced, which matches the behavior of the old version. Test Plan: tested fakelowp/tests Reviewed By: hl475 Differential Revision: D23059033 fbshipit-source-id: 79b6aec39a2714ca5d62420c15ca9c2c1e7a8883

Commit 3bf2978

format for readability (pytorch#42851)

Summary: Pull Request resolved: pytorch#42851 Test Plan: Imported from OSS Reviewed By: smessmer Differential Revision: D23048382 Pulled By: bhosmer fbshipit-source-id: 55d84d5f9c69be089056bf3e3734c1b1581dc127

Commit eeb43ff

[hypothesis] Deadline followup (pytorch#42842)

Summary: Pull Request resolved: pytorch#42842 Test Plan: `buck test` Reviewed By: thatch Differential Revision: D23045269 fbshipit-source-id: 8a3f4981869287a0f5fb3f0009e13548b7478086