
PiTeR and OpenCV

PiTeR uses OpenCV for his vision system. OpenCV is a really powerful library of computer vision utilities which runs on Linux, Windows and Mac OS X. You can use OpenCV to make your robot do all kinds of extraordinary things. You can find out much more about OpenCV on the OpenCV website, and many of the examples here were based on the tutorials found there under the OpenCV Tutorials link. Much kudos and thanks to the wonderful people at OpenCV who put these tutorials together.

To use OpenCV on your Raspberry Pi, you need to install the python-opencv package. This can be installed using apt:

sudo apt-get update

sudo apt-get upgrade

sudo apt-get install python-opencv

Obviously, for live vision experiments OpenCV needs to grab images from your camera. Video for Linux (v4l) gives easy access to the Raspberry Pi camera. If you do a web search, you will find other ways to access the camera from OpenCV, including some that have been highlighted on the Raspberry Pi Foundation blog. However, in my opinion, v4l is by far the easiest way to get going. The v4l2 driver is included by default in the latest Raspberry Pi firmware release, but you must load it like this:

sudo modprobe bcm2835-v4l2

If you get a 'FATAL: Module not found.' response, you may need to update your firmware first to get the module installed:

sudo rpi-update
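Once the driver is loaded, a quick way to confirm it from Python is to look for the device node it creates. This is a minimal sketch that assumes the camera appears as /dev/video0, which is typical when it is the only video device but not guaranteed; the helper names are hypothetical.

```python
import glob
import os


def list_video_devices():
    """Return V4L2 device nodes such as /dev/video0 (typically empty if no driver is loaded)."""
    return sorted(glob.glob("/dev/video*"))


def camera_device_present(path="/dev/video0"):
    """True if the given device node exists; /dev/video0 is an assumed default."""
    return os.path.exists(path)
```

On the Pi, `print(list_video_devices())` after `sudo modprobe bcm2835-v4l2` should show at least one entry if the driver loaded.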

The Python programs that test PiTeR's visual system are in the repository. You don't need a robot to try them for yourself; you just need a Pi camera or a webcam. Note that I have so far been unable to get a Pi NoIR camera to work. In theory only the colour balance should be affected, but even with the colour range optimised, the tracking script does not work properly with the NoIR camera. I will continue to experiment with this and report back if I find anything.

What follows are some notes that relate the programs to an OpenCV article published in the MagPi magazine, issue 28 (November 2014). All of the programs require X to be running, and each can be exited by pressing Escape while one of its output windows has focus.
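A common way to implement Escape-to-exit is to poll cv2.waitKey inside the display loop, which only receives key presses while an OpenCV window has focus. Below is a minimal sketch of that pattern, not the code from the repository; the function names and the 10 ms delay are assumptions. OpenCV is imported inside the loop function so the key helper can be tried without it.

```python
ESC = 27  # ASCII code of the Escape key


def is_escape(key_code):
    """waitKey can set modifier bits above the low byte on some systems, so compare only that byte."""
    return (key_code & 0xFF) == ESC


def show_camera_until_escape():
    import cv2  # imported here so is_escape() can be used without OpenCV installed

    cap = cv2.VideoCapture(-1)
    try:
        while True:
            success, frame = cap.read()
            if not success:
                break
            cv2.imshow("camera", frame)
            # waitKey returns -1 when no key was pressed within 10 ms
            if is_escape(cv2.waitKey(10)):
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
```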

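imageCap.py - This program implements the first three steps described in the article: opening the camera, grabbing a frame and displaying it:

import cv2

cap = cv2.VideoCapture(-1)  # -1 lets OpenCV pick an available camera

success, frame = cap.read()  # success is False if no frame could be grabbed

cv2.imshow('imageCap', frame)  # imshow needs a window name as well as the image

cv2.waitKey(0)  # keep the window open until a key is pressed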

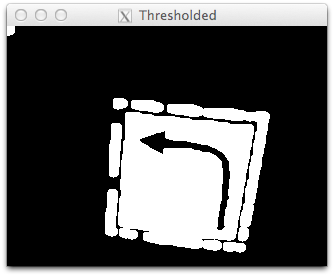

colour.py - This program displays six sliders that control the range of the colour values Hue, Saturation and Value (HSV). We use these values rather than the more familiar Red, Green and Blue because they are better suited to setting up a colour range. Each colour value has a low and a high slider, which mark the ends of the range that will be accepted as the part of the image we're looking for.
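To make the low/high ranges concrete: a pixel passes the filter only if all three of its H, S and V values lie inside their ranges, ends included, which is what cv2.inRange computes. The NumPy function below is an illustrative equivalent, not the code in colour.py. In OpenCV the frame is first converted with cv2.cvtColor(frame, cv2.COLOR_BGR2HSV); for 8-bit images hue runs from 0 to 179, while saturation and value run from 0 to 255.

```python
import numpy as np


def in_range(hsv, low, high):
    """NumPy equivalent of cv2.inRange for a 3-channel image.

    Returns 255 where every channel lies within [low, high] (inclusive), else 0.
    """
    low = np.asarray(low)
    high = np.asarray(high)
    inside = np.all((hsv >= low) & (hsv <= high), axis=-1)
    return inside.astype(np.uint8) * 255
```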

The program also opens three more windows: one shows the original view, another shows the result of applying the OpenCV inRange function, and a third shows the final mask after noise reduction. Image capture and filtering happen inside a while loop, so you can adjust the sliders and immediately see the effect of your adjustments.
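The text does not say which noise reduction colour.py applies. A common choice is a morphological opening: an erosion (cv2.erode) removes specks smaller than the kernel, then a dilation (cv2.dilate) grows the surviving patches back to roughly their original size. The pure NumPy sketch below shows the idea with a 3x3 square kernel. Unlike OpenCV's default, it treats pixels outside the image as unset, so thin patches touching the image border can disappear.

```python
import numpy as np


def _shifted_views(mask, size):
    """Yield every size x size shifted view of a zero-padded boolean copy of the mask."""
    r = size // 2
    on = mask > 0
    padded = np.pad(on, r, mode="constant", constant_values=False)
    h, w = on.shape
    for dy in range(size):
        for dx in range(size):
            yield padded[dy:dy + h, dx:dx + w]


def erode(mask, size=3):
    """Keep a pixel only if its whole size x size neighbourhood is set."""
    out = np.ones(mask.shape, dtype=bool)
    for view in _shifted_views(mask, size):
        out &= view
    return out.astype(np.uint8) * 255


def dilate(mask, size=3):
    """Set a pixel if any pixel in its size x size neighbourhood is set."""
    out = np.zeros(mask.shape, dtype=bool)
    for view in _shifted_views(mask, size):
        out |= view
    return out.astype(np.uint8) * 255


def open_mask(mask, size=3):
    """Morphological opening: erosion followed by dilation."""
    return dilate(erode(mask, size), size)
```

A 3x3 patch survives the opening unchanged, while isolated pixels and one-pixel-wide lines are removed.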

Note that the objective here is to locate a patch in the image, so the mask does not have to be perfect. We're going to use this patch to crop the image further on, and the symbol recognition will be done on the cropped part of the original image, so no detail will be lost.

When you exit colour.py, it prints the current settings of the high and low range values. This allows you to copy them from the terminal and paste them into the appropriate place in the following two programs. This makes it easy to customise patch.py and tracking.py for your local lighting environment. You really have to do this to get any kind of good results from the patch and tracking scripts.
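Creating the sliders and printing their values on exit might look like the sketch below. The slider names, window name and the printed variable names (lower, upper) are hypothetical, so match them to whatever patch.py and tracking.py actually use. The hue sliders stop at 179 because that is OpenCV's hue range for 8-bit images.

```python
# Hypothetical slider names: a low and a high value for each of H, S and V.
SLIDERS = ["H low", "S low", "V low", "H high", "S high", "V high"]


def create_sliders(window="sliders"):
    import cv2  # imported here so format_hsv_range() works without OpenCV

    cv2.namedWindow(window)
    for name in SLIDERS:
        maximum = 179 if name.startswith("H") else 255   # OpenCV hue range is 0-179
        start = maximum if name.endswith("high") else 0  # start with the widest range
        cv2.createTrackbar(name, window, start, maximum, lambda value: None)


def read_sliders(window="sliders"):
    import cv2

    values = [cv2.getTrackbarPos(name, window) for name in SLIDERS]
    return values[:3], values[3:]


def format_hsv_range(low, high):
    """Text to paste into patch.py / tracking.py (variable names are hypothetical)."""
    return "lower = np.array(%s)\nupper = np.array(%s)" % (list(low), list(high))
```

When Escape is pressed, `print(format_hsv_range(*read_sliders()))` produces two lines ready to copy from the terminal.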

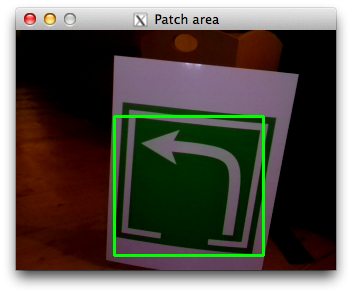

patch.py - This program incorporates more of the concepts discussed in the article. It takes a set of fixed colour range values and outputs the image captured by the camera. If it finds a patch of colour which passes the filter (as provided by colour.py above), it uses the findContours and boundingRect functions discussed in the article to draw a green rectangle around the relevant area in the output image.
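The findContours/boundingRect step can be sketched as follows, assuming a binary mask like the one produced by colour.py. Picking the largest contour as "the relevant area" is an assumption, not necessarily what patch.py does. The NumPy helper is a simpler stand-in that boxes all mask pixels, handy for checking the (x, y, width, height) convention without a camera.

```python
import numpy as np


def mask_bounding_box(mask):
    """(x, y, w, h) of the box around every set pixel, or None if the mask is empty."""
    ys, xs = np.nonzero(mask)
    if len(xs) == 0:
        return None
    x, y = int(xs.min()), int(ys.min())
    return x, y, int(xs.max()) - x + 1, int(ys.max()) - y + 1


def draw_patch_box(frame, mask):
    import cv2  # imported here so mask_bounding_box() works without OpenCV

    # [-2] picks the contour list whether findContours returns two values
    # (OpenCV 2.4 and 4.x) or three (3.x). 2.4 modifies its input, hence copy().
    contours = cv2.findContours(mask.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2]
    if not contours:
        return None
    largest = max(contours, key=cv2.contourArea)
    x, y, w, h = cv2.boundingRect(largest)
    cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)  # green in BGR order
    return x, y, w, h
```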

tracking.py - Finally, this program builds on the previous three examples to perform full symbol recognition. It uses detectAndCompute to find the key points in the image and the knnMatch function to compute a match and locate the symbol. If the match is good enough, the matched points are used to compute a transformation of a rectangle within which the symbol is located.
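detectAndCompute gives a descriptor for each key point, and knnMatch with k=2 returns the two closest candidates for each one. One widely used way to decide whether a match is "good enough" is Lowe's ratio test: keep a match only if it is clearly closer than the runner-up, then require some minimum number of such matches. The sketch below assumes that approach. The 0.75 ratio, the minimum of 10 matches and the brute-force matcher are illustrative choices, not values taken from tracking.py.

```python
RATIO = 0.75          # hypothetical ratio-test threshold
MIN_MATCH_COUNT = 10  # hypothetical number of good matches needed to accept the symbol


def ratio_test(distance_pairs, ratio=RATIO):
    """Return indices of (best, second_best) distance pairs that pass Lowe's ratio test."""
    return [i for i, (best, second) in enumerate(distance_pairs) if best < ratio * second]


def match_symbol(symbol_descriptors, frame_descriptors):
    import cv2  # imported here so ratio_test() works without OpenCV

    matcher = cv2.BFMatcher(cv2.NORM_L2)  # L2 distance suits SURF's float descriptors
    knn = matcher.knnMatch(symbol_descriptors, frame_descriptors, k=2)
    pairs = [p for p in knn if len(p) == 2]  # some key points may get fewer than 2 matches
    keep = ratio_test([(m.distance, n.distance) for m, n in pairs])
    good = [pairs[i][0] for i in keep]
    return good if len(good) >= MIN_MATCH_COUNT else None
```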


If everything worked perfectly, the transformed rectangle shown in blue should look as if it lies right on top of the symbol in the camera image, even if the symbol appears at an angle to the camera. It doesn’t always get it perfectly right, but it does so often enough to be impressive in my opinion. Try it! If you have trouble, try stronger lighting. Don't forget though that if you change the lighting, you will likely need to retune the HSV parameters.
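The transformed rectangle comes from a homography: a 3x3 matrix, estimated from the matched points (for example with cv2.findHomography), that maps the corners of the symbol image onto the camera frame. Because it includes perspective, the outline can appear tilted when the symbol is at an angle. The NumPy helper shows what cv2.perspectiveTransform does to each corner (multiply by the matrix, then divide by the third coordinate). The OpenCV function is a sketch of the drawing step; the RANSAC reprojection threshold of 5.0 is an assumption.

```python
import numpy as np


def transform_corners(H, corners):
    """Apply a 3x3 homography to (x, y) points, as cv2.perspectiveTransform does."""
    pts = np.asarray(corners, dtype=float)
    homogeneous = np.hstack([pts, np.ones((len(pts), 1))])  # (x, y) -> (x, y, 1)
    mapped = homogeneous.dot(np.asarray(H, dtype=float).T)  # rows become (x', y', w')
    return mapped[:, :2] / mapped[:, 2:3]                   # divide by w'


def draw_symbol_outline(frame, symbol_shape, symbol_pts, frame_pts):
    """symbol_pts / frame_pts: matched key point coordinates, float32 arrays of shape (N, 1, 2)."""
    import cv2  # imported here so transform_corners() works without OpenCV

    H, inliers = cv2.findHomography(symbol_pts, frame_pts, cv2.RANSAC, 5.0)
    if H is None:
        return None
    h, w = symbol_shape[:2]
    corners = np.float32([[0, 0], [w - 1, 0], [w - 1, h - 1], [0, h - 1]]).reshape(-1, 1, 2)
    outline = cv2.perspectiveTransform(corners, H)
    cv2.polylines(frame, [np.int32(outline)], True, (255, 0, 0), 3)  # blue in BGR order
    return outline
```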

Important - Unfortunately, the version of OpenCV installed by default by apt-get does not include the SURF feature detection that tracking.py relies on. This will be fixed in upcoming revisions of OpenCV, but for now you'll need to manually upgrade to OpenCV 2.4.9 if you wish to run tracking.py. See the section below called Upgrade to OpenCV 2.4.9 for the required steps.
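To confirm which OpenCV version Python is actually loading, for instance after following the upgrade steps, you can compare cv2.__version__ against 2.4.9. This is a minimal sketch with hypothetical helper names; it only compares version numbers and does not probe for SURF itself.

```python
import re


def version_at_least(version, minimum=(2, 4, 9)):
    """Compare a version string such as '2.4.9' or '3.0.0-dev' against a minimum tuple."""
    parts = []
    for piece in version.split(".")[:3]:
        match = re.match(r"\d+", piece)  # ignore suffixes such as '-dev'
        parts.append(int(match.group()) if match else 0)
    return tuple(parts) >= tuple(minimum)


def opencv_is_new_enough():
    import cv2  # imported here so version_at_least() works without OpenCV

    return version_at_least(cv2.__version__)
```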

The main robot control program, PiTeR.py, integrates these experimental programs and uses their output to control PiTeR as he autonomously finds and visits each symbol in a trail. Have a look at symbolFinder.py and symbolIdentifier.py in the gz_piter/Python folder to see how this is done.

The next event is the cub scout meeting in December. Once that is complete, I hope to post some pictures here, so watch this space!

Wish me luck! :-)

Upgrade to OpenCV 2.4.9

You'll need to do these steps if you want to run the most advanced program that does the symbol recognition.

- Clone the prebuilt library files (thanks to Raspberry Pi forum member Nolaan for these):

git clone https://github.com/Nolaan/libopencv_24

- Create a new folder 'opencv' at /usr/local/lib

sudo mkdir /usr/local/lib/opencv

- Copy the cloned libopencv_* files to this new folder:

sudo cp libopencv_24/libopencv_* /usr/local/lib/opencv

- Add the new libraries to the dynamic linker path:

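export LD_LIBRARY_PATH=/usr/local/lib/opencv${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}

This form keeps any existing value of the variable. The setting only lasts for the current shell session, so add the same line to your ~/.bashrc to make it permanent.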

- Install the new dependency, libavformat:

sudo apt-get install libavformat53

- Copy the prebuilt Python library into the Python configuration:

sudo cp MagPi/opencv_pylib_249/cv2.so /usr/lib/pyshared/python2.7

Note that this last step replaces the cv2.so module that Python loads, and so changes the version of OpenCV that Python uses. Before running it, you may wish to copy aside the existing cv2.so file so that you can reinstate it later.

Upgrade to OpenCV 2.4.10

After upgrading to OpenCV 2.4.9, you may notice that some of the example programs are a little slow and 'laggy'. The performance issue is largely on the output side, and it is made worse in 2.4.9 because setting the capture property that requests a smaller image does not work in that release. This does not seem to be resolved in release 2.4.10 either, so we may have to wait for OpenCV 3.0.
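Until the capture size can be set, one workaround you could try is to shrink each frame before displaying it, since the display side is the slow part. This is an assumption, not something reported on this page. The sketch keeps every second row and column; cv2.resize(frame, None, fx=0.5, fy=0.5, interpolation=cv2.INTER_AREA) does the same job with smoothing. The request_small_capture helper shows the property calls (ids 3 and 4 are frame width and height) that do not take effect in 2.4.9 and 2.4.10. cap.set returns False when the backend rejects a setting, but some backends may report success without changing anything, so check the frame shape as well.

```python
import numpy as np


def shrink_for_display(frame, factor=2):
    """Keep every factor-th row and column: cheap decimation without smoothing."""
    return np.asarray(frame)[::factor, ::factor]


def request_small_capture(cap, width=320, height=240):
    """Ask a cv2.VideoCapture for a smaller frame; ids 3 and 4 are frame width and height."""
    ok_width = cap.set(3, width)
    ok_height = cap.set(4, height)
    return ok_width and ok_height
```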