Releases: pyg-team/pytorch_geometric

PyG 2.1.0: Principled aggregations, link-level and temporal samplers, data pipe support, ...

We are excited to announce the release of PyG 2.1.0 🎉🎉🎉

PyG 2.1.0 is the culmination of work from over 60 contributors who have worked on features and bug-fixes for a total of over 320 commits since torch-geometric==2.0.4.

Highlights

Principled Aggregations

See here for the accompanying tutorial.

Aggregation functions play an important role in the message passing framework and the readout functions of Graph Neural Networks. Specifically, many works in the literature (Hamilton et al. (2017), Xu et al. (2018), Corso et al. (2020), Li et al. (2020), Tailor et al. (2021), Bartunov et al. (2022)) demonstrate that the choice of aggregation functions contributes significantly to the representational power and performance of the model.
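To make this concrete, here is a minimal pure-Python sketch (not PyG code) of how the choice of aggregation affects which neighborhoods a model can tell apart. The scalar features and the helper name `aggregate` are illustrative assumptions.

```python
# Three permutation-invariant aggregations over a multiset of neighbor features.
def aggregate(messages, how):
    if how == "sum":
        return sum(messages)
    if how == "mean":
        return sum(messages) / len(messages)
    if how == "max":
        return max(messages)
    raise ValueError(f"unknown aggregation: {how}")


# Two different neighborhoods, given as multisets of scalar features.
a = [1.0, 2.0]
b = [1.0, 2.0, 1.0, 2.0]

for how in ("sum", "mean", "max"):
    # "mean" and "max" map both neighborhoods to the same value; "sum" does not.
    print(how, aggregate(a, how), aggregate(b, how))
```

In this toy case only the sum separates the two neighborhoods, which is one reason to combine several aggregations.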

To facilitate further experimentation and unify the concepts of aggregation within GNNs across both MessagePassing and global readouts, we have made the concept of Aggregation a first-class principle in PyG (#4379, #4522, #4687, #4721, #4731, #4762, #4749, #4779, #4863, #4864, #4865, #4866, #4872, #4927, #4934, #4935, #4957, #4973, #4986, #4995, #5000, #5021, #5034, #5036, #5039, #5033, #5085, #5097, #5099, #5104, #5113, #5130, #5098, #5191). As of now, PyG provides support for various aggregations: from simple ones (e.g., mean, max, sum), to advanced ones (e.g., median, var, std), learnable ones (e.g., SoftmaxAggregation, PowerMeanAggregation), and exotic ones (e.g., LSTMAggregation, SortAggregation, EquilibriumAggregation). Furthermore, multiple aggregations can be combined and stacked together:

    from torch_geometric.nn import MessagePassing, SoftmaxAggregation

    class MyConv(MessagePassing):
        def __init__(self, **kwargs):
            # Combines a set of aggregations and concatenates their results.
            # The interface also supports automatic resolution.
            super().__init__(aggr=['mean', 'std', SoftmaxAggregation(learn=True)], **kwargs)

Link-level Neighbor Loader

We added a new LinkNeighborLoader class for training scalable GNNs that perform edge-level predictions on giant graphs (#4396, #4439, #4441, #4446, #4508, #4509, #4868). LinkNeighborLoader comes with automatic support for both homogeneous and heterogeneous data, and supports link prediction via automatic negative sampling as well as edge-level classification and regression models:

    from torch_geometric.loader import LinkNeighborLoader

    loader = LinkNeighborLoader(
        data,
        num_neighbors=[30] * 2,  # Sample 30 neighbors for each node for 2 iterations
        batch_size=128,  # Use a batch size of 128 for sampling training links
        edge_label_index=data.edge_index,  # Use the entire graph for supervision
        neg_sampling_ratio=1.0,  # Sample negative edges
    )

    sampled_data = next(iter(loader))
    print(sampled_data)
    # Data(x=[1368, 1433], edge_index=[2, 3103], edge_label_index=[2, 256], edge_label=[256])

Neighborhood Sampling based on Temporal Constraints

Both NeighborLoader and LinkNeighborLoader now support temporal sampling via the time_attr argument (#4025, #4877, #4908, #5137, #5173). If set, temporal sampling will be used such that neighbors are guaranteed to fulfill temporal constraints, i.e. neighbors have an earlier timestamp than the center node:

    import torch
    from torch_geometric.loader import NeighborLoader

    data['paper'].time = torch.arange(data['paper'].num_nodes)

    loader = NeighborLoader(
        data,
        input_nodes='paper',
        time_attr='time',  # Only sample papers that appeared before the seed paper
        num_neighbors=[30] * 2,
        batch_size=128,
    )

Note that this feature requires torch-sparse>=0.6.14.
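The temporal constraint itself can be illustrated without PyG. The following is a minimal sketch, assuming a plain dictionary of incoming neighbors and per-node timestamps; the helper `sample_temporal_neighbors` is hypothetical, and treating ties as invalid (strictly earlier timestamps only) is an assumption based on the wording above.

```python
import random


def sample_temporal_neighbors(seed, in_neighbors, time, k, rng):
    """Sample up to k neighbors of `seed` whose timestamp is earlier than the seed's."""
    candidates = [n for n in in_neighbors.get(seed, []) if time[n] < time[seed]]
    return rng.sample(candidates, min(k, len(candidates)))


time = {0: 0, 1: 1, 2: 2, 3: 3}   # one timestamp per paper
in_neighbors = {2: [0, 1, 3]}     # papers 0, 1 and 3 are connected to paper 2

sampled = sample_temporal_neighbors(2, in_neighbors, time, k=30, rng=random.Random(0))
print(sorted(sampled))  # [0, 1]; paper 3 is newer than the seed and is never sampled
```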

Functional DataPipes

See here for the accompanying example.

PyG now fully supports data loading using the newly introduced concept of DataPipes in PyTorch for easily constructing flexible and performant data pipelines (#4302, #4345, #4349). PyG provides DataPipe support for batching multiple PyG data objects together and for applying any PyG transform:

    from torchdata.datapipes.iter import FileLister, FileOpener

    # Molecule pipeline: parse SMILES strings from a CSV file into graphs.
    datapipe = FileOpener(['SMILES_HIV.csv'])
    datapipe = datapipe.parse_csv_as_dict()
    datapipe = datapipe.parse_smiles(target_key='HIV_active')
    datapipe = datapipe.in_memory_cache()  # Cache graph instances in-memory.
    datapipe = datapipe.shuffle()
    datapipe = datapipe.batch_graphs(batch_size=32)

    # Mesh pipeline: read *.off files and build k-NN graphs from sampled points.
    datapipe = FileLister([root_dir], masks='*.off', recursive=True)
    datapipe = datapipe.read_mesh()
    datapipe = datapipe.in_memory_cache()  # Cache graph instances in-memory.
    datapipe = datapipe.sample_points(1024)  # Use PyG transforms from here.
    datapipe = datapipe.knn_graph(k=8)
    datapipe = datapipe.shuffle()
    datapipe = datapipe.batch_graphs(batch_size=32)

Breaking Changes

- The torch_geometric.utils.metric package has been removed. We now recommend to use the torchmetrics packa...

2.0.4

PyG 2.0.4 🎉

A new minor PyG version release, bringing PyTorch 1.11 support to PyG. It further includes a variety of new features and bugfixes:

Features

- Added Quiver examples for multi-GPU training using GraphSAGE (#4103), thanks to @eedalong and @luomai
- nn.model.to_captum: Full integration of explainability methods provided by the Captum library (#3990, #4076), thanks to @RBendias
- nn.conv.RGATConv: The relational graph attentional operator (#4031, #4110), thanks to @fork123aniket
- nn.pool.DMoNPooling: The spectral modularity pooling operator (#4166, #4242), thanks to @fork123aniket
- nn.*: Support for shape information in the documentation (#3739, #3889, #3893, #3946, #3981, #4009, #4120, #4158), thanks to @saiden89 and @arunppsg and @konstantinosKokos
- loader.TemporalDataLoader: A dataloader to load a TemporalData object in mini-batches (#3985, #3988), thanks to @otaviocx
- loader.ImbalancedSampler: A weighted random sampler that randomly samples elements according to class distribution (#4198)
- transforms.VirtualNode: A transform that adds a virtual node to a graph (#4163)
- transforms.LargestConnectedComponents: Selects the subgraph that corresponds to the largest connected components in the graph (#3949), thanks to @abojchevski
- utils.homophily: Support for class-insensitive edge homophily (#3977, #4152), thanks to @hash-ir and @jinjh0123
- utils.get_mesh_laplacian: Mesh Laplacian computation (#4187), thanks to @daniel-unyi-42
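As an illustration of the idea behind a class-aware weighted sampler such as the one listed above, here is a minimal standard-library sketch. It is not the PyG implementation: the inverse-class-frequency weighting and the helper name `class_balanced_weights` are assumptions made for this example.

```python
import random
from collections import Counter


def class_balanced_weights(labels):
    """Give every element a weight inversely proportional to its class size."""
    counts = Counter(labels)
    return [1.0 / counts[y] for y in labels]


labels = [0] * 90 + [1] * 10          # a 9:1 imbalanced label vector
weights = class_balanced_weights(labels)

rng = random.Random(0)
indices = rng.choices(range(len(labels)), weights=weights, k=1000)
drawn = Counter(labels[i] for i in indices)
print(drawn)  # both classes are drawn roughly equally often
```

With these weights each class carries the same total probability mass, so minority-class elements are drawn more often than under uniform sampling.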

Datasets

- Added a dataset cheatsheet to the documentation that collects important graph statistics across a variety of datasets supported in PyG (#3807, #3817) (please consider helping us fill in its remaining content)
- datasets.EllipticBitcoinDataset: A dataset of Bitcoin transactions (#3815), thanks to @shravankumar147

Minor Changes

- nn.models.MLP: MLPs can now either be initialized via a list of channels or by specifying hidden_channels and num_layers (#3957)
- nn.models.BasicGNN: Final Linear transformations are now always applied (except for jk=None) (#4042)
- nn.conv.MessagePassing: Message passing modules that make use of edge_updater are now jittable (#3765), thanks to @Padarn
- nn.conv.MessagePassing: (Official) support for min and mul aggregations (#4219)
- nn.LightGCN: Initialize embeddings via xavier_uniform for better model performance (#4083), thanks to @nishithshowri006
- nn.conv.ChebConv: Automatic eigenvalue approximation (#4106), thanks to @daniel-unyi-42
- nn.conv.APPNP: Added support for optional edge_weight (690a01d), thanks to @YueeXiang
- nn.conv.GravNetConv: Support for torch.jit.script (#3885), thanks to @RobMcH
- nn.pool.global_*_pool: The batch vector is now optional (#4161)
- nn.to_hetero: Added a warning in case to_hetero is used on HeteroData metadata with unused destination node types (#3775)
- nn.to_hetero: Support for nested modules (ea135bf)
- nn.Sequential: Support for indexing (#3790)
- nn.Sequential: Support for OrderedDict as input (#4075)
- datasets.ZINC: Added an in-depth description of the task (#3832), thanks to @gasteigerjo
- datasets.FakeDataset: Support for different feature distributions across different labels (#4065), thanks to @arunppsg
- datasets.FakeDataset: Support for custom global attributes (#4074), thanks to @arunppsg
- transforms.NormalizeFeatures: Features will no longer be transformed in-place (ada5b9a)
- transforms.NormalizeFeatures: Support for negative feature values (6008e30)
- utils.is_undirected: Improved efficiency (#3789)
- utils.dropout_adj: Improved efficiency (#4059)
- utils.contains_isolated_nodes: Improved efficiency (970de13)
- utils.to_networkx: Support for to_undirected options (upper triangle vs. lower triangle) (#3901, #3948), thanks to @RemyLau
- graphgym: Support for custom metrics and loggers (#3494), thanks to @RemyLau
- graphgym.register: Register operations can now be used as class decorators (#3779, #3782)
- Documentation: Added a few exercises at the end of documentation tutorials (#3780), thanks to @PabloAMC
- Documentation: Added better installation instructions to CONTRIBUTING.md (#3803, #3991, #3995), thanks to @Cho-Geonwoo and @RBendias and @RodrigoVillatoro
- Refactor: Clean-up dependencies (#3908, #4133, #4172), thanks to @adelizer
- CI: Improved test runtimes (#4241)
- CI: Additional linting check via yamllint (#3886)
- CI: Additional linting check via isort (66b1780), thanks to @mananshah99
- torch.package: Model packaging via torch.package (#3997)
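The list above mentions that register operations can be used as class decorators. The general pattern can be sketched in plain Python as follows; the `registry` dictionary, the `register` function and the example classes are hypothetical and do not reproduce the GraphGym API.

```python
registry = {}


def register(key, obj=None):
    """Register `obj` under `key`; when `obj` is omitted, act as a decorator."""
    def _store(target):
        if key in registry:
            raise KeyError(f"'{key}' is already registered")
        registry[key] = target
        return target

    if obj is not None:
        return _store(obj)   # direct call: register("name", SomeClass)
    return _store            # decorator use: @register("name")


class Accuracy:
    pass


register("accuracy", Accuracy)


@register("f1")
class F1:
    pass


print(sorted(registry))  # ['accuracy', 'f1']
```

Returning the target unchanged from the inner function is what lets the same helper serve both call styles.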

Bugfixes

- data.HeteroData: Fixed a bug in data.{attr_name}_dict in case data.{attr_name} does not exist (#3897)
- data.Data: Fixed data.is_edge_attr in case data.num_edges == 1 (#3880)
- data.Batch: Fixed a device mismatch bug in case a batch object was indexed that was created from GPU tensors (e6aa4c9, c549b3b)

*...

2.0.3

PyG 2.0.3 🎉

A new minor PyG version release, including a variety of new features and bugfixes:

Features

- GLNN: Graph-less Neural Networks [Example] (#3572)
- LINKX: Large Scale Learning on Non-Homophilous Graphs [Example] (#3654)
- Added an example for heterogeneous link classification (#3350) - thanks to @anniekmyatt
- HANConv: The Heterogeneous Graph Attention operator [Example] (#3444, #3577, #3581) - thanks to @rishubhkhurana and @wsad1
- LGConv and LightGCN: Simplifying and Powering Graph Convolution Network for Recommendation (#3685) - thanks to @LukasHaas and @KathyFeiyang
- PyTorch Lightning DataModule wrappers for PyG+PL multi-GPU training/inference without replicating datasets across processes:
  - torch_geometric.data.LightningDataset for multi-GPU training via PL on graph-level tasks [Example] (#3596, #3634)
  - torch_geometric.data.LightningNodeData for multi-GPU training via PL on node-level tasks [Example] (#3613, #3634)
- NeighborLoader: Added CUDA support leading to major runtime improvements [Example] (#3736)
- MessagePassing: Added the edge_updater / edge_update interface for updating edge features (#3450) - thanks to @Padarn
- GNNExplainer: Added an example that reproduces the official BA-Shapes experiment (#3386) - thanks to @RBendias
- torch_geometric.graphgym: Support for heterogeneous graphs and lazy initialization (#3460) - thanks to @JiaxuanYou
- MLP: Added a basic MLP implementation (#3553)
- PointTransformer: Classification and segmentation examples (#3344) - thanks to @QuanticDisaster and @wsad1
- ShaDowKHopSampler: Added an example (#3411) - thanks to @SubhajitDuttaChowdhury
- Data.subgraph(...) implementation (#3521)

Datasets

- HGBDataset benchmark suite (#3454)
- MalNetTiny dataset (#3472) - thanks to @rampasek
- OMDB: Organic Materials Database (#3506)
- BAShapes: The BA-Shapes dataset (#3386) - thanks to @RBendias
- PolBlogs and EmailEUCore datasets (#3534) - thanks to @AlexDuvalinho
- StochasticBlockModel and RandomPartition graph datasets (#3586) - thanks to @dongkwan-kim
- LINKXDataset: A subset of the non-homophilous benchmark datasets from LINKX
- FakeDataset and FakeHeteroDataset for testing purposes (#3741) - thanks to @levulinh

Minor Changes

- torch_geometric.nn.norm: Improved the runtimes of normalization layers - thanks to @johnpeterflynn
- DataLoader and NeighborLoader: Output tensors are now written to shared memory to avoid an extra copy in case num_workers > 0 (#3401 and #3734) - thanks to @johnpeterflynn
- GATv2Conv: Support for edge features (#3421) - thanks to @Kenneth-Schroeder
- Batch.from_data_list: Runtime improvements
- TransformerConv: Runtime and memory consumption improvements (#3392) - thanks to @wsad1
- mean_iou: Added IoU computation via omitting NaNs (#3464) - thanks to @GericoVi
- DataLoader: follow_batch and exclude_keys are now optional arguments
- Improvements to the package metadata (#3445) - thanks to @cthoyt
- Updated the quick start widget to support PyTorch 1.10 (#3474) - thanks to @kathyfan
- NeighborLoader and HGTLoader: Removed the persistent_workers=True default
- voxel_grid: The batch argument is now optional (#3533) - thanks to @QuanticDisaster
- TransformerConv: JIT support (#3538) - thanks to @RobMcH
- Lazy modules can now correctly be saved and loaded via state_dict() and load_state_dict() (#3651) - thanks to @shubham-gupta-iitr
- from_networkx: Support for nx.MultiDiGraph (#3646) - thanks to @max-zipfl-fzi
- GATv2Conv: Support for lazy initialization (#3678) - thanks to @richcmwang
- torch_geometric.graphgym: register_* functions can now be used as decorators (#3684)
- AddSelfLoops: Now supports the full argument set of torch_geometric.utils.add_self_loops (#3702) - thanks to @dongkwan-kim
- Documentation: Added shape information to ...

2.0.2

A new minor version release, including further bugfixes, official PyTorch 1.10 support, as well as additional features and operators:

Features

- Added video tutorials and Colabs from the PyTorch Geometric Tutorial project (thanks to @AntonioLonga)
- Added the GraphMultisetTransformer operator (thanks to @JinheonBaek)
- Added the PointTransformerConv operator (thanks to @QuanticDisaster)
- Added the HEATConv operator (thanks to @Xiaoyu006)
- Added the PNA GNN model (thanks to @RBendias)
- Added the AddMetaPaths transform, which will add additional edge types to a HeteroData object based on a list of metapaths (thanks to @wsad1)
- Added the Data.to_heterogeneous method to allow for the conversion from Data to HeteroData objects
- Added the AttributedGraphDataset, containing a variety of attributed graphs
- Added the Airports datasets
- Added the structured_negative_sampling_feasible method, which checks if structured_negative_sampling is feasible (thanks to @WuliangHuang)
- GATConv can now make use of multi-dimensional edge features to compute attention scores (thanks to @dongkwan-kim)
- RandomNodeSplit and RandomLinkSplit now support HeteroData as input
- MessagePassing inference can now be sped up via the decomposed_layers argument (thanks to @ZhouAo-ZA)
- negative_sampling and batched_negative_sampling now support negative sampling in bipartite graphs
- HeteroConv now supports the inclusion of arbitrary node-level or edge-level information for the underlying MessagePassing operators
- GNNExplainer now supports multiple node-level masks and explaining regression problems (thanks to @gregorkrz)

Minor Changes

- Data.to_homogeneous will now add node_type information to the homogeneous Data object
- GINEConv now allows to transform edge features automatically in case their dimensionalities do not match (thanks to @CaypoH)
- OGB_MAG will now add node_year information to paper nodes
- Entities datasets do now allow the processing of HeteroData objects via the hetero=True option
- Batch objects can now be batched together to form super batches
- Added heterogeneous graph support for Center, Constant and LinearTransformation transformations
- HeteroConv now allows to return "stacked" embeddings
- The batch vector of a Batch object will now be initialized on the GPU in case other attributes are held in GPU memory
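For readers unfamiliar with the batch vector mentioned above, here is a minimal pure-Python sketch of the general idea of batching several graphs into one disconnected graph. It is not PyG's implementation; the `(num_nodes, edge_list)` graph representation and the helper `batch_graphs` are assumptions made for illustration.

```python
def batch_graphs(graphs):
    """Merge graphs given as (num_nodes, [(src, dst), ...]) into one big graph."""
    edges, batch, offset = [], [], 0
    for graph_id, (num_nodes, edge_list) in enumerate(graphs):
        # Shift node indices so that every graph occupies its own index range.
        edges += [(s + offset, d + offset) for s, d in edge_list]
        # The batch vector maps every node to the graph it came from.
        batch += [graph_id] * num_nodes
        offset += num_nodes
    return edges, batch


g1 = (3, [(0, 1), (1, 2)])
g2 = (2, [(0, 1)])
edges, batch = batch_graphs([g1, g2])
print(edges)  # [(0, 1), (1, 2), (3, 4)]
print(batch)  # [0, 0, 0, 1, 1]
```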

Bugfixes

- Fixed the num_neighbors argument of NeighborLoader in order to specify an edge-type specific number of neighbors
- Fixed the collate policy of lists of integers/strings to return nested lists
- Fixed the Delaunay transformation in case the face attribute is not present in the data
- Fixed the TGNMemory module to only read from the latest update (thanks to @cwh104504)
- Fixed the pickle.PicklingError when Batch objects are used in a torch.multiprocessing.manager.Queue() (thanks to @RasmusOrsoe)
- Fixed an issue with _parent state changing after pickling of Data objects (thanks to @zepx)
- Fixed the ToUndirected transformation in case the number of edges and nodes are equal (thanks to @lmkmkrcc)
- Fixed the from_networkx routine in case node-level and edge-level features share the same names
- Removed the num_nodes warning when creating PairData objects
- Fixed the initialization of the GeneralMultiLayer module in GraphGym (thanks to @fjulian)
- Fixed custom model registration in GraphGym
- Fixed a clash in the run_dir naming of GraphGym (thanks to @fjulian)
- Includes a fix to prevent a GraphGym crash in case ROC-score is undefined (thanks to @fjulian)
- Fixed the Batch.from_data_list routine on dataset slices (thanks to @dtortorella)
- Fixed the MetaPath2Vec model in case there exist isolated nodes
- Fixed torch_geometric.utils.coalesce with CUDA tensors

2.0.1

PyG 2.0.1

This is a minor release, bringing some emergency fixes to PyG 2.0.

Bugfixes

- Fixed a bug in loader.DataLoader that raised a PicklingError for num_workers > 0 (thanks to @r-echeveste, @arglog and @RishabhPandit-00)
- Fixed a bug in the creation of data.Batch objects in case customized data.Data objects expect non-default arguments (thanks to @Emiyalzn)
- Fixed a bug in which SparseTensor attributes could not be batched along single dimensions (thanks to @rubenwiersma)

2.0.0

PyG 2.0 🎉 🎉 🎉

PyG (PyTorch Geometric) has been moved from my own personal account rusty1s to its own organization account pyg-team to emphasize the ongoing collaboration between TU Dortmund University, Stanford University and many great external contributors. With this, we are releasing PyG 2.0, a new major release that brings sophisticated heterogeneous graph support, GraphGym integration and many other exciting features to PyG.

If you encounter any bugs in this new release, please do not hesitate to create an issue.

Heterogeneous Graph Support

We finally provide full heterogeneous graph support in PyG 2.0. See here for the accompanying tutorial.

Highlights

- Heterogeneous Graph Storage: Heterogeneous graphs can now be stored in their own dedicated data.HeteroData class (thanks to @yaoyaowd):

      import torch
      from torch_geometric.data import HeteroData

      data = HeteroData()

      # Create two node types "paper" and "author" holding a single feature matrix:
      data['paper'].x = torch.randn(num_papers, num_paper_features)
      data['author'].x = torch.randn(num_authors, num_authors_features)

      # Create an edge type ("paper", "written_by", "author") holding its graph connectivity:
      data['paper', 'written_by', 'author'].edge_index = ...  # [2, num_edges]

  data.HeteroData behaves similarly to a regular homogeneous data.Data object:

      print(data['paper'].num_nodes)
      print(data['paper', 'written_by', 'author'].num_edges)
      data = data.to('cuda')

- Heterogeneous Mini-Batch Loading: Heterogeneous graphs can be converted to mini-batches for many small and single giant graphs via the loader.DataLoader and loader.NeighborLoader loaders, respectively. These loaders can now handle both homogeneous and heterogeneous graphs:

      from torch_geometric.loader import DataLoader

      loader = DataLoader(heterogeneous_graph_dataset, batch_size=32, shuffle=True)

      from torch_geometric.loader import NeighborLoader

      loader = NeighborLoader(heterogeneous_graph, num_neighbors=[30, 30], batch_size=128,
                              input_nodes=('paper', data['paper'].train_mask), shuffle=True)

- Heterogeneous Graph Neural Networks: Heterogeneous GNNs can now easily be created from homogeneous ones via nn.to_hetero and nn.to_hetero_with_bases. These processes take an existing GNN model and duplicate its message functions to account for different node and edge types:

      import torch
      from torch_geometric.nn import SAGEConv, to_hetero

      class GNN(torch.nn.Module):
          def __init__(self, hidden_channels, out_channels):
              super().__init__()
              self.conv1 = SAGEConv((-1, -1), hidden_channels)
              self.conv2 = SAGEConv((-1, -1), out_channels)

          def forward(self, x, edge_index):
              x = self.conv1(x, edge_index).relu()
              x = self.conv2(x, edge_index)
              return x

      model = GNN(hidden_channels=64, out_channels=dataset.num_classes)
      model = to_hetero(model, data.metadata(), aggr='sum')
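The idea of duplicating a message function per edge type and summing the results per destination node type can be sketched in plain Python. This is only a toy illustration under simplifying assumptions (scalar node features, one scalar weight per edge type, sum aggregation everywhere); the function `hetero_layer` and the data below are hypothetical and unrelated to the actual to_hetero transformation code.

```python
def hetero_layer(x_dict, edge_dict, weight_dict):
    """Apply one weighted message function per edge type and sum at the targets."""
    out = {node_type: [0.0] * len(x) for node_type, x in x_dict.items()}
    for edge_type, edge_list in edge_dict.items():
        src_type, _, dst_type = edge_type
        w = weight_dict[edge_type]  # separate parameters for every edge type
        for s, d in edge_list:
            out[dst_type][d] += w * x_dict[src_type][s]
    return out


x_dict = {"paper": [1.0, 2.0], "author": [10.0]}
edge_dict = {
    ("author", "writes", "paper"): [(0, 0), (0, 1)],
    ("paper", "cites", "paper"): [(1, 0)],
}
weight_dict = {
    ("author", "writes", "paper"): 0.5,
    ("paper", "cites", "paper"): 2.0,
}

out = hetero_layer(x_dict, edge_dict, weight_dict)
print(out)  # {'paper': [9.0, 5.0], 'author': [0.0]}
```

Paper 0 receives one message per edge type (0.5 * 10.0 and 2.0 * 2.0), and the two contributions are summed, mirroring the role of aggr='sum' in the snippet above.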

Additional Features

- A heterogeneous graph tutorial describing all newly released features (thanks to @mrjel)

- A variety of heterogeneous GNN examples

- Support for lazy initialization of GNN operators by passing -1 to the in_channels argument (implemented via nn.dense.Linear). This avoids calculating and keeping track of input tensor sizes, simplifying the creation of heterogeneous graph models with varying feature dimensionalities across different node and edge types. Lazy initialization is supported for all existing PyG operators (thanks to @yaoyaowd):

      from torch_geometric.nn import GATConv

      conv = GATConv(-1, 64)
      # We can initialize the model’s parameters by calling it once:
      conv(x, edge_index)

- nn.conv.HeteroConv: A generic wrapper for computing graph convolution on heterogeneous graphs (thanks to @RexYing)

- nn.conv.HGTConv: The heterogeneous graph transformer operator from the "Heterogeneous Graph Transformer" paper

- loader.HGTLoader: The heterogeneous graph sampler from the "Heterogeneous Graph Transformer" paper for learning on large-scale heterogeneous graphs (thanks to @chantat)

- Support for heterogeneous graph transformations in transforms.AddSelfLoops, transforms.ToSparseTensor, transforms.NormalizeFeatures and transforms.ToUndirected

- New heterogeneous graph datasets: datasets.OGB_MAG, datasets.IMDB, datasets.DBLP and datasets.LastFM

- Support for converting heterogeneous graphs to "typed" homogeneous ones via data.HeteroData.to_homogeneous (thanks to @yzhao062)

- A tutorial on creating a data.HeteroData object from raw *.csv files (thanks to @yaoyaowd and @mrjel)

- An example to scale heterogeneous graph models via PyTorch Lightning
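The lazy initialization mentioned in the list above (passing -1 as in_channels) follows a simple pattern: defer creating the weights until the first input reveals the feature dimensionality. Below is a minimal standard-library sketch of that pattern; the class `LazyLinear` is a hypothetical toy and not the nn.dense.Linear implementation.

```python
import random


class LazyLinear:
    """Toy linear layer whose weight is created on the first call if in_channels is -1."""

    def __init__(self, in_channels, out_channels, seed=0):
        self.in_channels = in_channels
        self.out_channels = out_channels
        self._rng = random.Random(seed)
        self.weight = None
        if in_channels > 0:
            self._init_weight(in_channels)

    def _init_weight(self, in_channels):
        self.in_channels = in_channels
        self.weight = [
            [self._rng.uniform(-1.0, 1.0) for _ in range(in_channels)]
            for _ in range(self.out_channels)
        ]

    def __call__(self, x):
        if self.weight is None:
            # Infer the input size from the first feature vector we see.
            self._init_weight(len(x))
        return [sum(w * v for w, v in zip(row, x)) for row in self.weight]


layer = LazyLinear(-1, 4)
uninitialized = layer.weight is None
y = layer([0.1, 0.2, 0.3])
print(uninitialized, layer.in_channels, len(y))  # True 3 4
```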

Managing Experiments with GraphGym

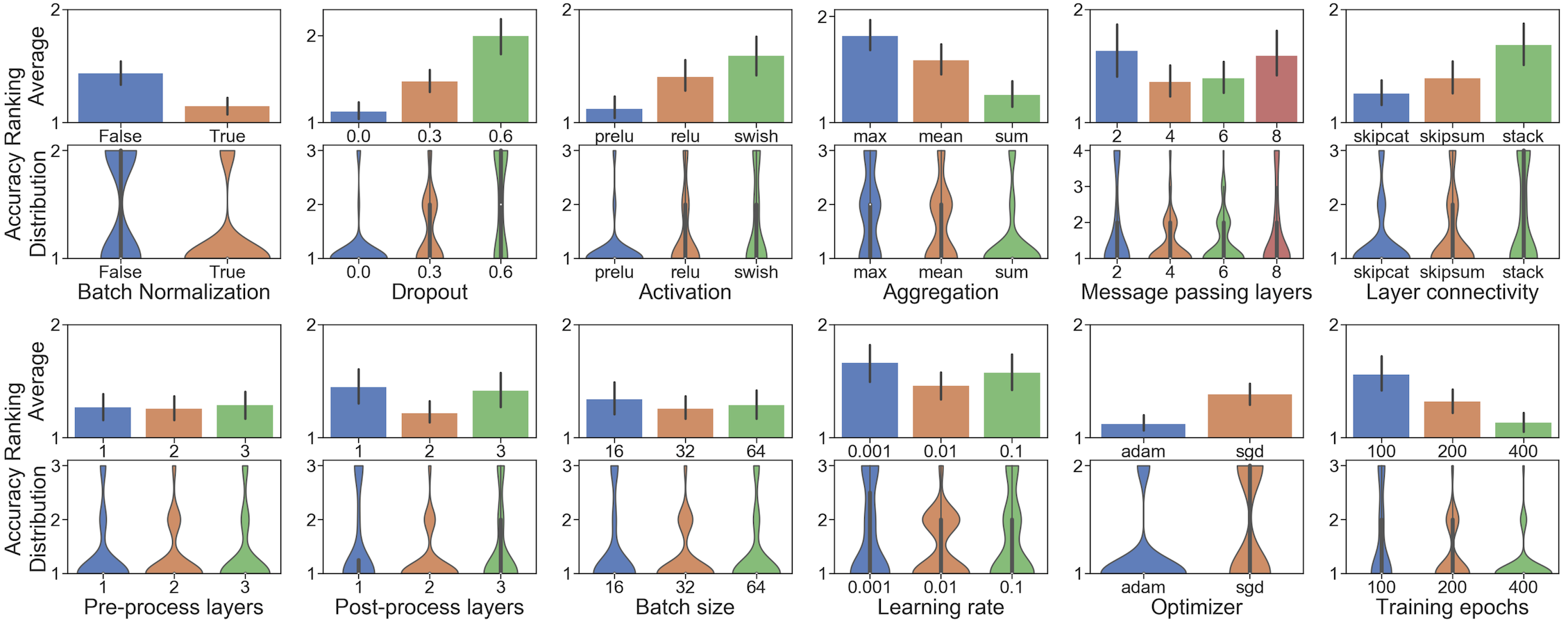

GraphGym is now officially supported in PyG 2.0 via torch_geometric.graphgym. See here for the accompanying tutorial. Overall, GraphGym is a platform for designing and evaluating Graph Neural Networks from configuration files via a highly modularized pipeline (thanks to @JiaxuanYou):

- GraphGym is the perfect place to start learning about standardized GNN implementation and evaluation

- GraphGym provides a simple interface to try out thousands of GNN architectures in parallel to find the best design for your specific task

- GraphGym lets you easily do hyper-parameter search and visualize what design choices are better

Breaking Changes

- The datasets.AMiner dataset now returns a data.HeteroData object. See here for our updated MetaPath2Vec example on AMiner.
- transforms.AddTrainValTestMask has been replaced in favour of [transforms.RandomNodeSplit](https://pytorch-geometric.readthedocs.io/en/latest/modules/transforms.html#torch...

1.7.2

Datasets

- The GitHub Web and ML developer dataset (thanks to @benedekrozemberczki)
- The FacebookPagePage dataset (thanks to @benedekrozemberczki)
- The Twitch gamer datasets (thanks to @benedekrozemberczki)
- The DeezerEurope dataset (thanks to @benedekrozemberczki)
- The GemsecDeezer dataset (thanks to @benedekrozemberczki)
- The LastFMAsia dataset (thanks to @benedekrozemberczki)
- The WikipediaNetwork datasets now allow usage of the raw dataset as introduced in Multi-scale Attributed Node Embedding (thanks to @benedekrozemberczki)

Bugfixes

- Fixed an error in DeepGCNLayer in case no normalization layer is provided (thanks to @lukasfolle)
- Fixed a bug in GNNExplainer which mixed the loss computation for graph-level and node-level predictions (thanks to @panisson and @wsad1)

1.7.1

A minor release that brings PyTorch 1.9.0 and Python 3.9 support to PyTorch Geometric. In case you are in the process of updating to PyTorch 1.9.0, please re-install the external dependencies for PyTorch 1.9.0 as well (torch-scatter and torch-sparse).

Features

- EGConv (thanks to @shyam196)
- GATv2Conv (thanks to @shakedbr)
- GraphNorm normalization layer
- GNNExplainer now supports explaining graph-level predictions (thanks to @wsad1)
- bro and gini regularization (thanks to @rhsimplex)
- train_test_split_edges() and to_undirected() can now handle edge features (thanks to @saiden89 and @SherylHYX)
- Datasets can now be accessed with np.ndarray as well (thanks to @josephenguehard)
- dense_to_sparse can now handle batched adjacency matrices
- numba is now an optional dependency

Datasets

- The tree-structured fake news propagation UPFD dataset (thanks to @YingtongDou)
- The large-scale AmazonProducts graph from the GraphSAINT paper
- Added support for two more datasets in the SNAPDataset benchmark suite (thanks to @SherylHYX)

Issues

- Fixed an issue in which SuperGATConv used all positive edges for computing the auxiliary loss (thanks to @anniekmyatt)
- Fixed a bug in which MemPooling produced NaN gradients (thanks to @wsad1)
- Fixed an issue in which the schnetpack package was required for training SchNet (thanks to @mshuaibii)
- Modified XConv to sample without replacement in case dilation > 1 (thanks to @mayur-ag)
- GraphSAINTSampler can now be used in combination with PyTorch Lightning
- Fixed a bug in HypergraphConv in case num_nodes > num_edges (thanks to @THinnerichs)

1.7.0

Major Features

- Temporal Graph Network and an example utilizing graph attention (thanks to @emalgorithm)
- CorrectAndSmooth and an example on ogbn-products
- PyTorch Lightning support, see here for the accompanying examples (thanks to @tchaton)
- Sequential API, see here for the accompanying example
- FiLMConv and an example on PPI (thanks to @ldv1)
- SuperGAT and an example on Cora (thanks to @dongkwan-kim)
- MemPooling (thanks to @wsad1)
- PANConv (thanks to @YuGuangWang)
- DiffGroupNorm (thanks to @wsad1)
- ResGatedGraphConv (thanks to @ldv1)
- FAConv (thanks to @wsad1)
- AttentiveFP model for molecular graph learning and an example on ESOL (thanks to @thegodone)
- Shadow k-hop Sampler (currently requires torch-sparse from master)

Additional Features

- Inductive Deep Graph Infomax example (thanks to @harrygcoppock)

- WLConv and an example of the Weisfeiler-Lehman subtree kernel (thanks to @chrsmrrs)

- LabelPropagation

- AddTrainValTestMask transform for creating various splitting strategies (thanks to @dongkwan-kim)

- homophily measurement (thanks to @ldv1)

- to_cugraph conversion

Minor Changes

- More memory-efficient implementation of GCN2Conv
- Improved TransformerConv with the beta argument being input and message dependent (thanks to @ldv1)
- NeighborSampler now works with SparseTensor and supports an additional transform argument
- Batch.from_data_list now supports batching along a new dimension via returning None in Data.__cat_dim__, see here for the accompanying tutorial (thanks to @Linux-cpp-lisp)
- MetaLayer is now "jittable"
- Lazy loading of torch_geometric.nn and torch_geometric.datasets, leading to faster imports (thanks to @Linux-cpp-lisp)
- GNNExplainer now supports various output formats of the underlying GNN model (thanks to @wsad1)

Datasets

- JODIE datasets for temporal graph learning
- WordNet18RR (thanks to @minhtriet)
- Reddit2
- MixHopSyntheticDataset (thanks to @ldv1)
- NELL

Bugfixes

- Fixed SparseAdam usage in examples/metapath2vec.py (thanks to @declanmillar)
- Fixed from_networkx to support empty edge lists (thanks to @shakedbr)
- Fixed a numerical issue in softmax
- Fixed an issue in DenseGraphConv with aggr="max" (thanks to @quqixun)
- Fixed the norm computation in GraphSAINTSampler (thanks to @austintwang)
- Cartesian and LocalCartesian now compute Cartesian coordinates from target to source nodes (thanks to @ldv1)

1.6.3

- Fixed a crucial bug in which InMemoryDatasets with the usage of pre_transform led to an error
- New datasets: WikipediaNetwork and Actor
- Added homophily ratio utility function: torch_geometric.utils.homophily_ratio
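As a rough illustration of what a homophily ratio measures, here is a minimal pure-Python sketch. It assumes the common edge-based definition (the fraction of edges whose endpoints share a label); the exact definition used by the utility is not stated here, and the helper `edge_homophily` is hypothetical.

```python
def edge_homophily(edges, labels):
    """Fraction of edges that connect two nodes with the same label."""
    if not edges:
        return 0.0
    same = sum(1 for s, d in edges if labels[s] == labels[d])
    return same / len(edges)


labels = [0, 0, 1, 1]
edges = [(0, 1), (1, 2), (2, 3), (3, 0)]
ratio = edge_homophily(edges, labels)
print(ratio)  # 0.5
```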